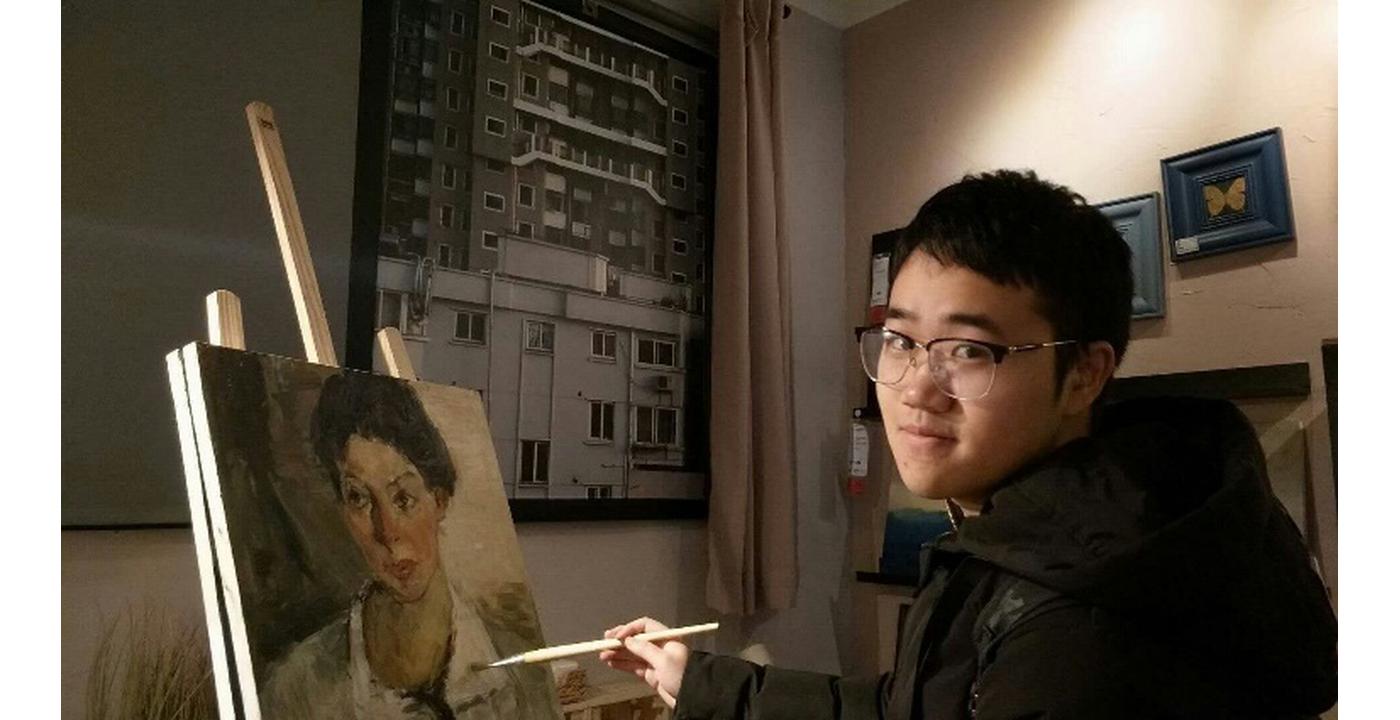

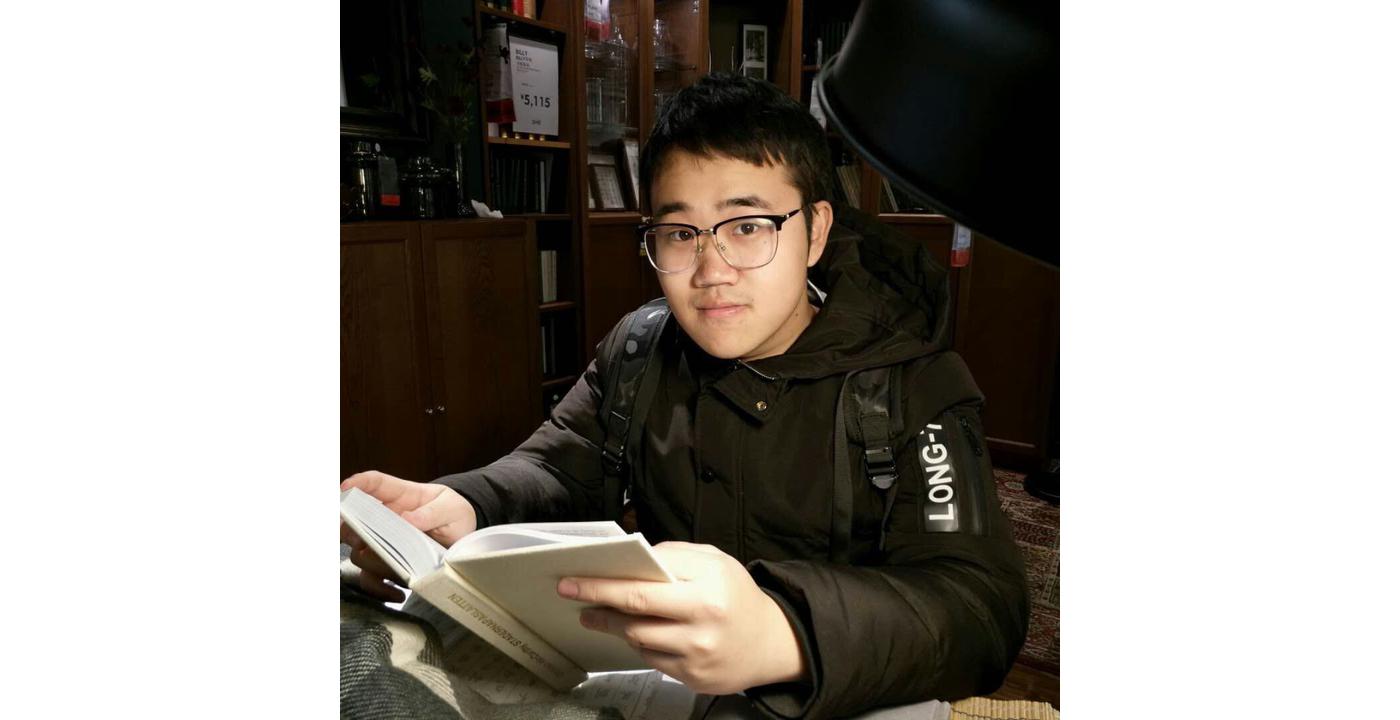

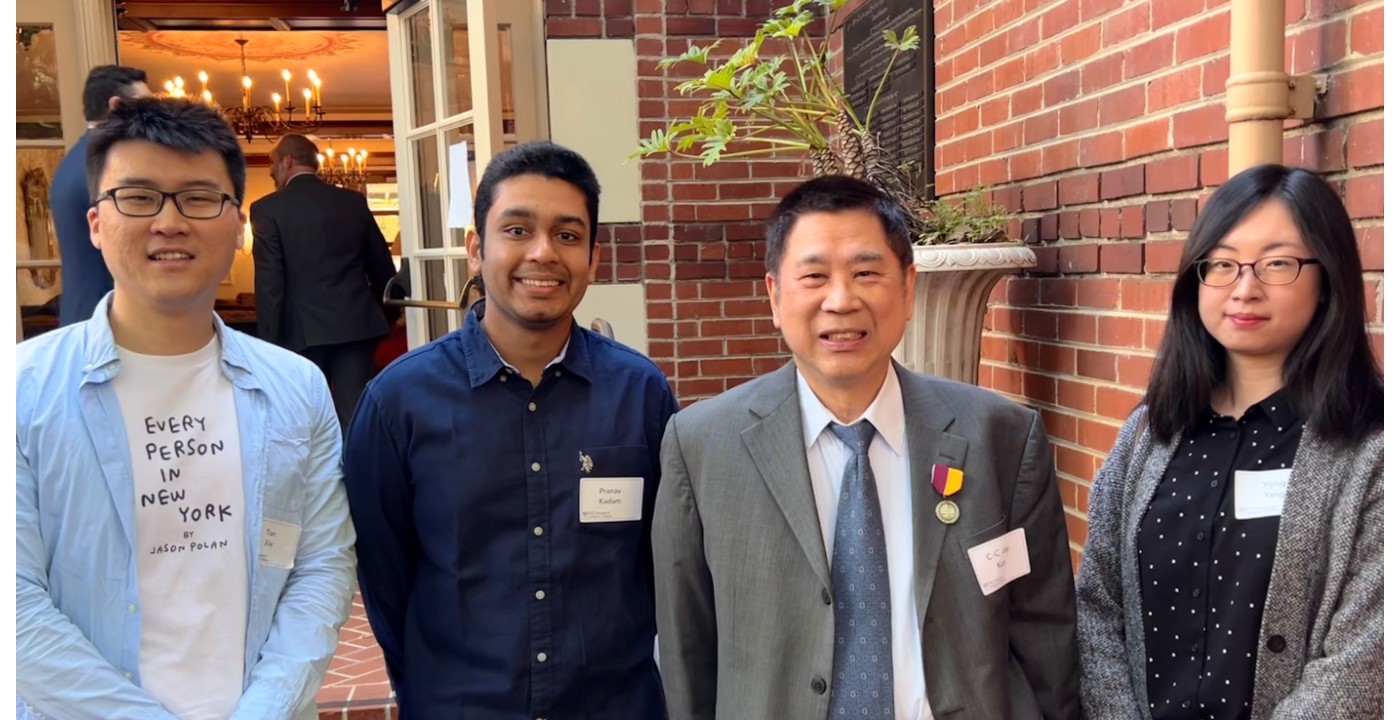

Welcome Jintang Xue to MCL as a Summer Intern

In Summer 2022, a new MCL member, Jintang Xue, joined our big family. Here is a short interview with Jintang, along with our warm welcome.

1. Could you briefly introduce yourself and your research interests?

My name is Jintang Xue. I am currently pursuing my Master’s degree in Electrical Engineering at USC. I got my Bachelor’s degree from Shanghai University, majoring in communication engineering. My research interests include machine learning and computer vision. I will work on point cloud classification this summer at MCL. I think it is an excellent opportunity to dive deeper into this field.

2. What is your impression of MCL and USC?

This is my first year at USC. The campus is beautiful. The people here are all very kind. I learned a lot from the valuable courses provided by USC, especially EE 569. MCL is a warm family. People in MCL are friendly, hardworking, and intelligent. They are willing to help each other. I am glad to work with them.

3. What are your future expectations and plans at MCL?

I want to make friends and learn from the members of MCL. I find point clouds very interesting. I will work hard on this topic to gain more knowledge and improve my programming skills. I hope I can contribute to MCL. I believe the experience at MCL will be valuable in my life.