Professor Kuo Talked about Deep Learning in MHI Emerging Trends Series

The Ming Hsieh Institute (MHI) has launched the MHI Emerging Trends Series. MCL Director Professor C.-C. Jay Kuo was the first speaker in the series and gave his talk on deep learning on Monday, April 10, 2017.

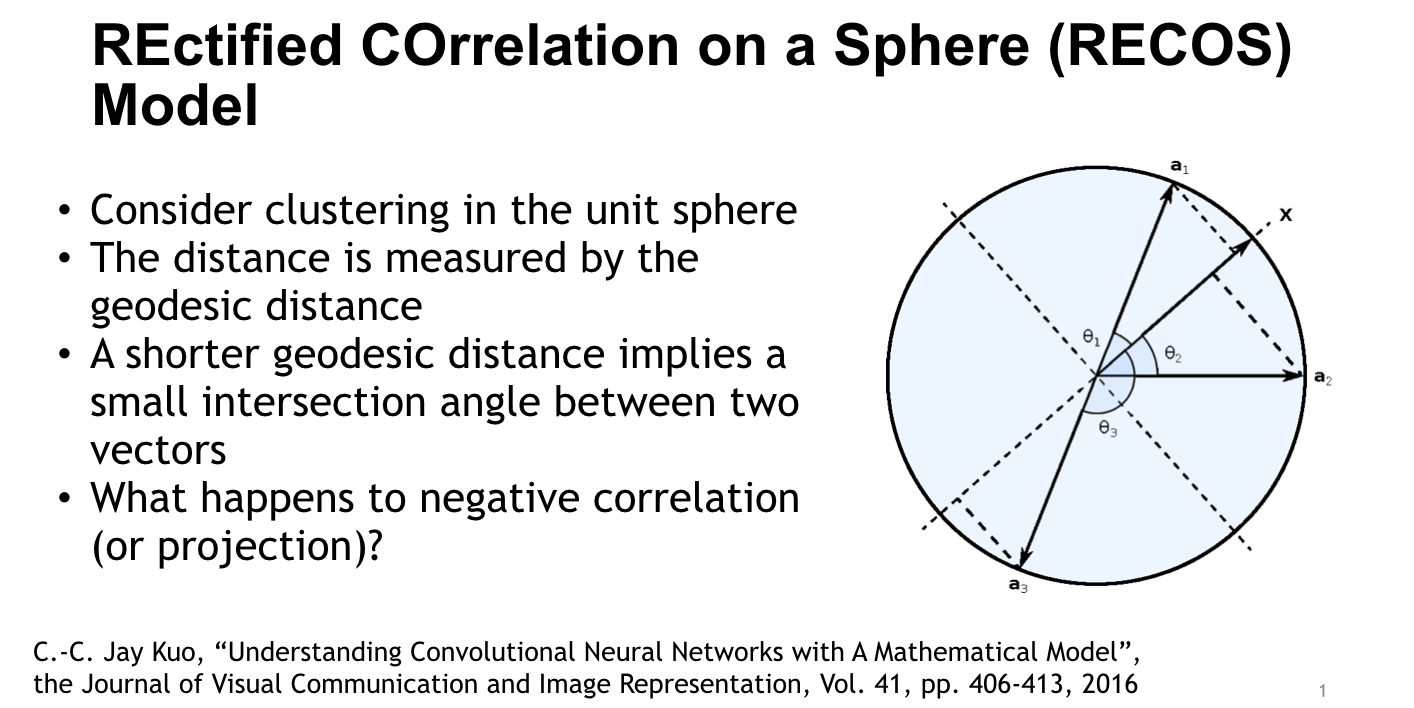

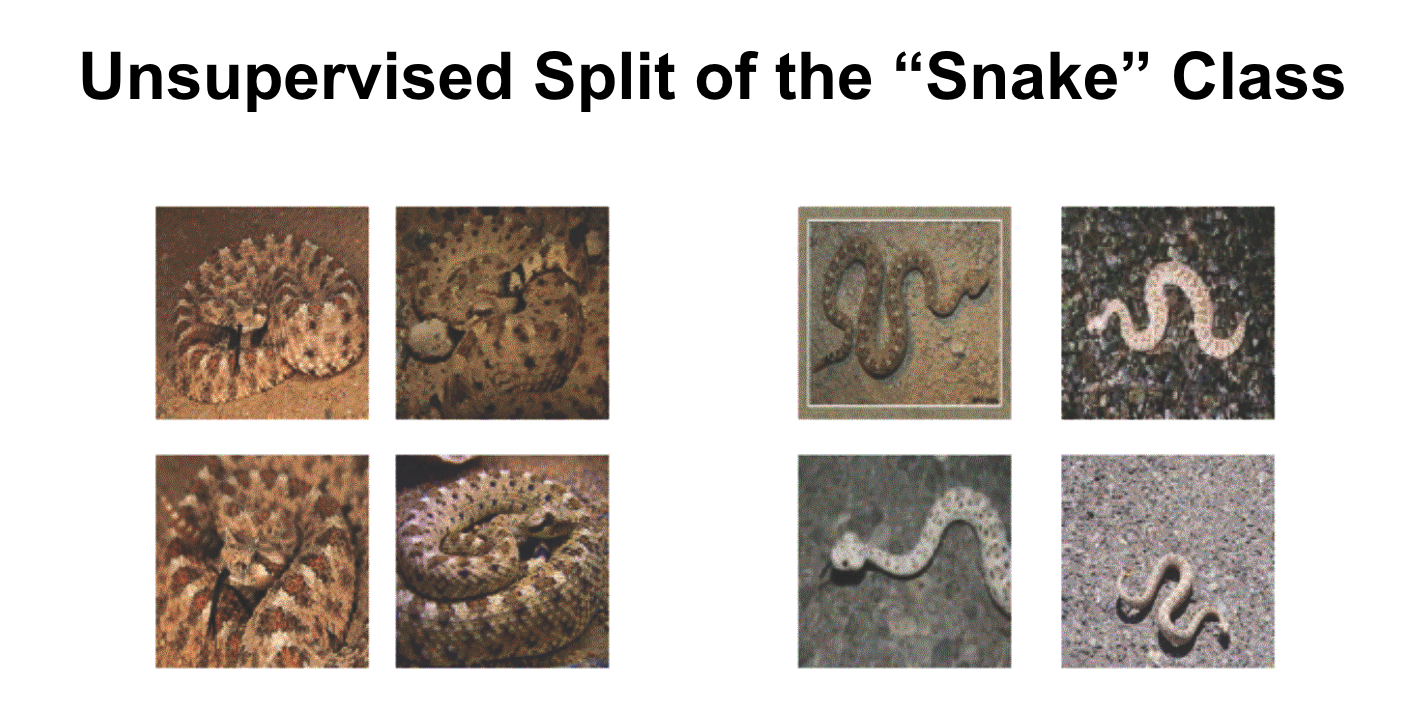

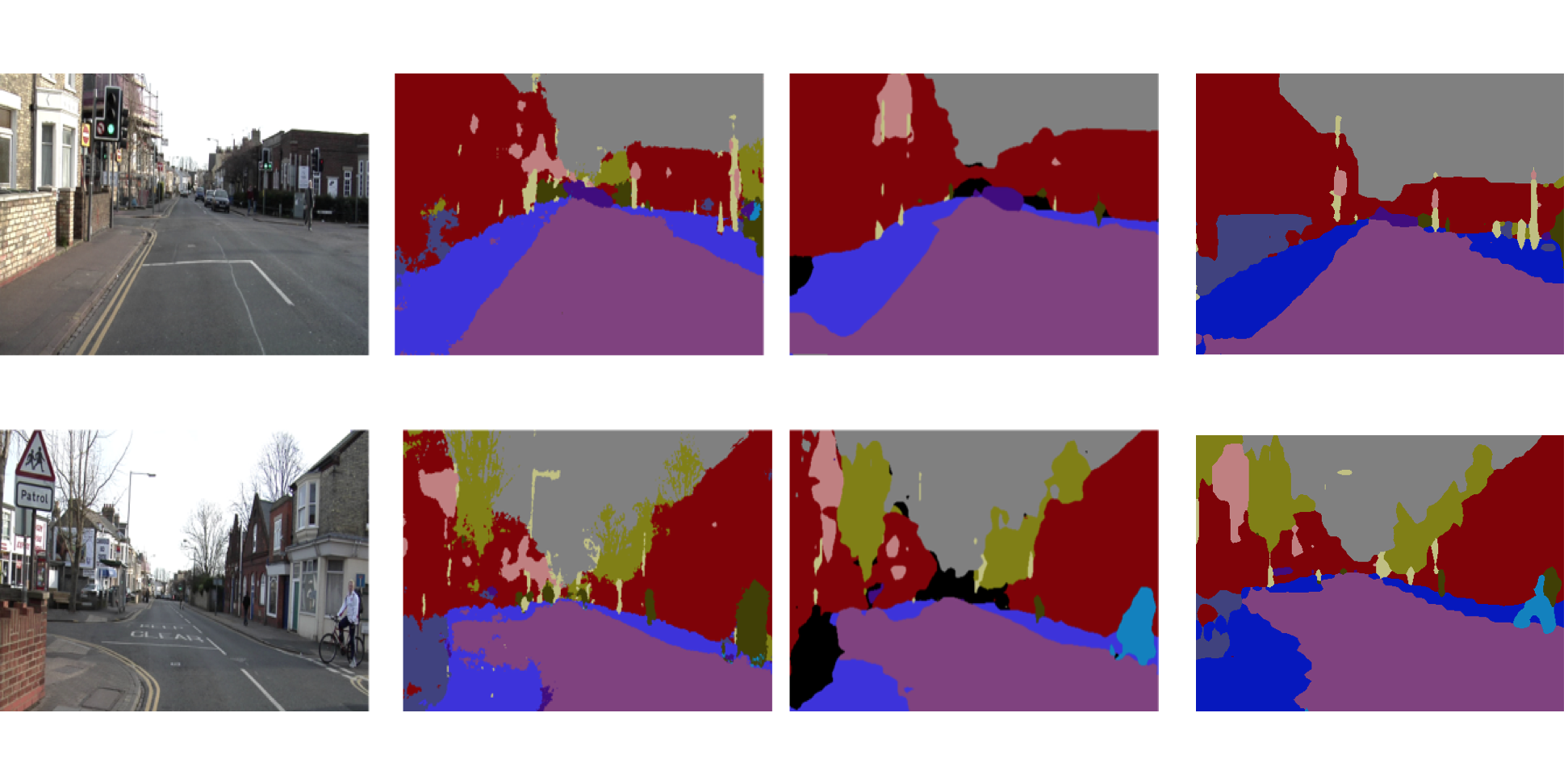

Over the last five years, there has been resurging interest in neural-network-based solutions to supervised machine learning. However, little theoretical work has been reported in this area. In his talk, Professor Kuo attempted to provide a theoretical foundation for the working principle of the convolutional neural network (CNN) from a signal processing viewpoint. First, he introduced the RECOS transform as a basic building block of CNNs. The term "RECOS" is an acronym for "REctified-COrrelations on a Sphere", and it rests on two main concepts: data clustering on a sphere and rectification. He then interpreted a CNN as a network that implements a guided multi-layer RECOS transform. Along this line, he compared traditional single-layer and modern multi-layer signal analysis approaches. He also discussed how data labels provide guidance through backpropagation during training, in an attempt to offer a smooth transition from weakly to heavily supervised learning. Finally, he pointed out several future research directions.
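The idea of "rectified correlations on a sphere" can be illustrated with a minimal sketch. This is one reading of the description above, not the exact formulation presented in the talk: it assumes that both the input and a set of anchor vectors (playing the role of CNN filter weights) are projected onto the unit sphere, that the correlation is their inner product (a cosine similarity), and that rectification is a ReLU, max(0, ·). The names `recos` and `multi_layer_recos` are hypothetical, and the label-driven guidance through backpropagation is not modeled; the anchors are simply fixed by hand.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Project a vector onto the unit sphere (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return list(v)
    return [x / norm for x in v]


def recos(x: Vector, anchors: List[Vector]) -> List[float]:
    """Hypothetical single RECOS layer.

    Assumptions (for illustration only): the anchors act like filter weights,
    correlation is the cosine similarity of unit vectors, and rectification
    is a ReLU that keeps only positive correlations.
    """
    x_unit = normalize(x)
    outputs = []
    for a in anchors:
        a_unit = normalize(a)
        # Correlation of two unit vectors on the sphere, in [-1, 1].
        corr = sum(xi * ai for xi, ai in zip(x_unit, a_unit))
        # Rectification: negative correlations are clipped to zero.
        outputs.append(max(0.0, corr))
    return outputs


def multi_layer_recos(x: Vector, layers: List[List[Vector]]) -> List[float]:
    """Cascade RECOS layers: each layer's rectified output feeds the next layer."""
    for anchors in layers:
        x = recos(x, anchors)
    return x
```

For example, `recos([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])` yields `[1.0, 0.0, 0.0]`: the input matches the first anchor exactly, is orthogonal to the second, and its negative correlation with the third anchor is clipped to zero by rectification. Cascading layers with `multi_layer_recos` mirrors, in a very simplified form, the multi-layer view of a CNN.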

About 80 people attended Professor Kuo's seminar, and many questions were asked after his talk. Professor Kuo said that he greatly enjoyed the interaction with the audience, which demonstrated strong interest in this topic.