MCL Research on Motion YOLO

Video object detection is a demanding computer vision task that extends static image-based detection by introducing camera motion and temporal dynamics. This brings significant challenges, such as occlusion, scene blur, and shape changes caused by object and camera movement. Nevertheless, the temporal correlations between frames and the motion patterns of objects also provide rich and valuable information. Detecting and tracking objects over time enables machines to understand dynamic scenes and make informed decisions in complex environments. Video object detection has become essential for many real-world applications, including autonomous driving, intelligent surveillance, human-computer interaction, and video content analysis.

Existing image-based detection models have achieved remarkable success, offering excellent accuracy and real-time detection capabilities in static scenarios. However, directly applying these models to video introduces several issues. Specifically, image-based models treat video frames independently and ignore the temporal relationships across frames, which often leads to unstable detection results in complex scenes and redundant computation on similar consecutive frames. Moreover, in real-world scenarios, videos are typically stored in compressed formats before being uploaded or transmitted. Fully decompressing the video before detection further increases the computational overhead.

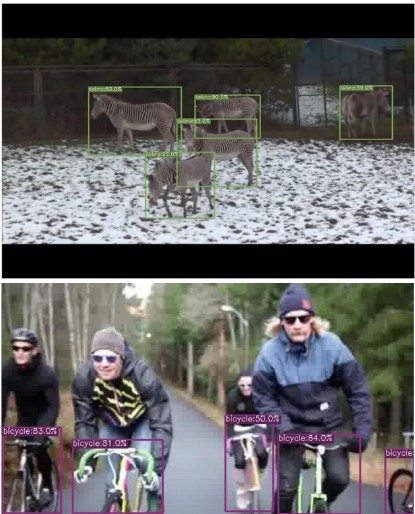

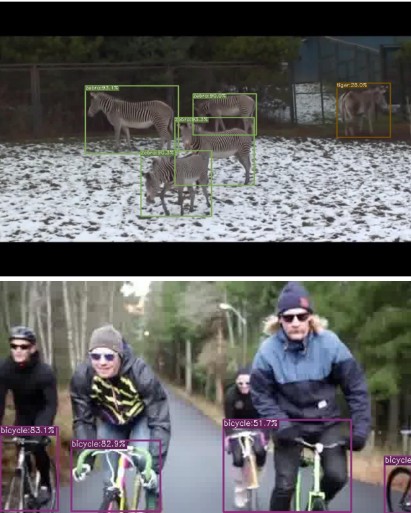

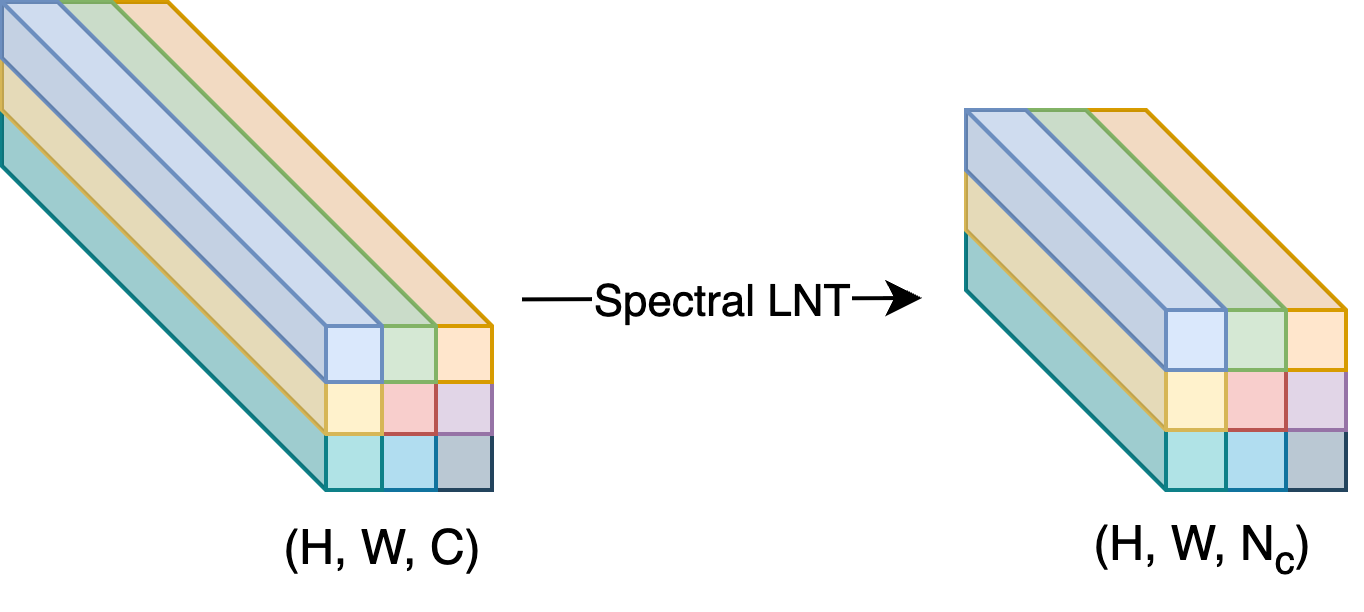

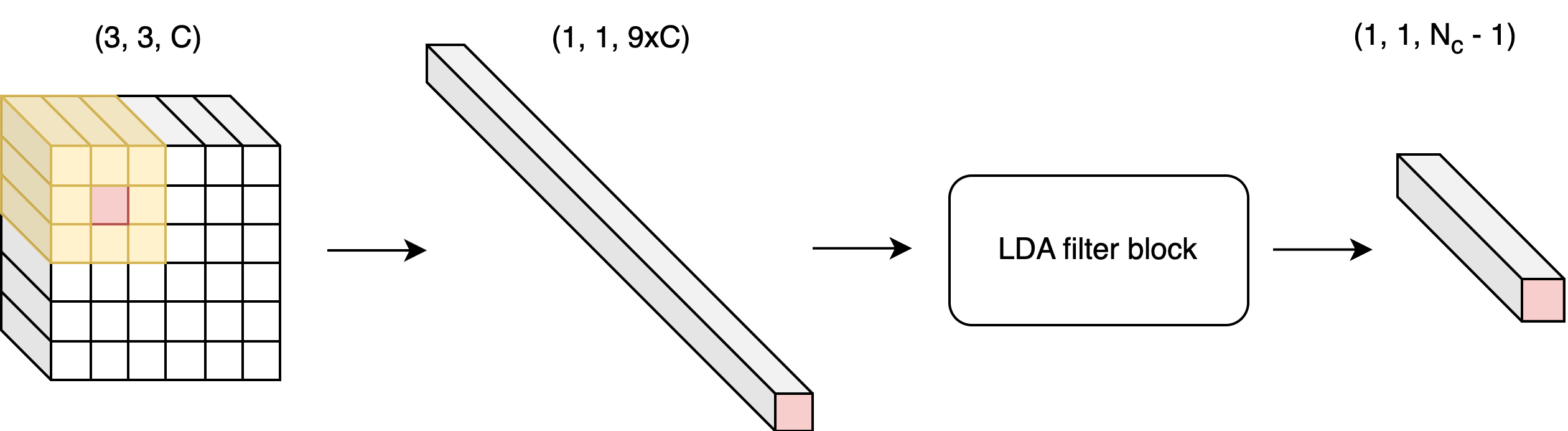

We propose Motion-Assisted YOLO (MA-YOLO), an efficient video object detection strategy that leverages the motion information naturally embedded in compressed video streams while utilizing existing image-based detection models to address the aforementioned challenges. Specifically, we adopt YOLO-X variants as our base detector for static images. Rather than performing detection on every video frame, we detect objects only on selected keyframes and propagate the predictions to estimate detection results for intermediate frames. The proposed framework consists of three modules: (1) keyframe selection and sparse inference, (2) motion vector extraction and pixel-wise assignment, and (3) motion-guided decision propagation. By incorporating the keyframe-based detection and motion [...]
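The keyframe-plus-propagation idea described above can be illustrated with a minimal sketch. It is not the MA-YOLO implementation: the fixed keyframe interval, the median aggregation of motion vectors inside a box, the forward displacement convention, and the names `propagate_box` and `run_sparse_detection` are all assumptions made for illustration. The detector is passed in as a plain callable, and the per-pixel motion field is assumed to have been extracted from the compressed stream already.

```python
from statistics import median


def propagate_box(box, motion_field):
    """Shift a box by the median motion vector of the pixels it covers.

    box: (x1, y1, x2, y2) in pixels, with x2 and y2 exclusive.
    motion_field: motion_field[y][x] == (dx, dy), the assumed displacement
        of that pixel from the previous frame to the current frame.
    """
    x1, y1, x2, y2 = box
    height, width = len(motion_field), len(motion_field[0])

    # Clamp the box to the frame before sampling motion vectors.
    cx1, cy1 = max(0, int(x1)), max(0, int(y1))
    cx2, cy2 = min(width, int(x2)), min(height, int(y2))
    if cx1 >= cx2 or cy1 >= cy2:
        return box  # Box lies outside the frame: leave it unchanged.

    vectors = [motion_field[y][x]
               for y in range(cy1, cy2)
               for x in range(cx1, cx2)]
    # The median is an illustrative choice that resists outlier vectors.
    dx = median(v[0] for v in vectors)
    dy = median(v[1] for v in vectors)
    return (x1 + dx, y1 + dy, x2 + dx, y2 + dy)


def run_sparse_detection(num_frames, detect, motion_fields, keyframe_interval=4):
    """Run the detector on keyframes only and propagate boxes in between.

    detect: callable taking a frame index and returning a list of boxes
        (a stand-in for an image-based detector).
    motion_fields: motion_fields[i] is the field from frame i-1 to frame i;
        entries at keyframe indices are never read.
    keyframe_interval: hypothetical fixed spacing between keyframes.
    """
    results = []
    for i in range(num_frames):
        if i % keyframe_interval == 0:
            boxes = detect(i)  # Sparse inference on a keyframe.
        else:
            # Intermediate frame: move the previous frame's boxes.
            boxes = [propagate_box(b, motion_fields[i]) for b in results[-1]]
        results.append(boxes)
    return results
```

In this sketch the detector runs once per `keyframe_interval` frames, so the cost of intermediate frames reduces to reading motion vectors and shifting boxes. Motion vectors in real codecs are stored per block and usually point from the current block back to a reference frame, so a practical version would need to handle that convention and the pixel-wise assignment step before propagation.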