Welcome New MCL Member Pranav Kadam

We are so happy to welcome our new MCL member, Pranav Kadam! Here is a short interview with Pranav.

1. Could you briefly introduce yourself and your research interests?

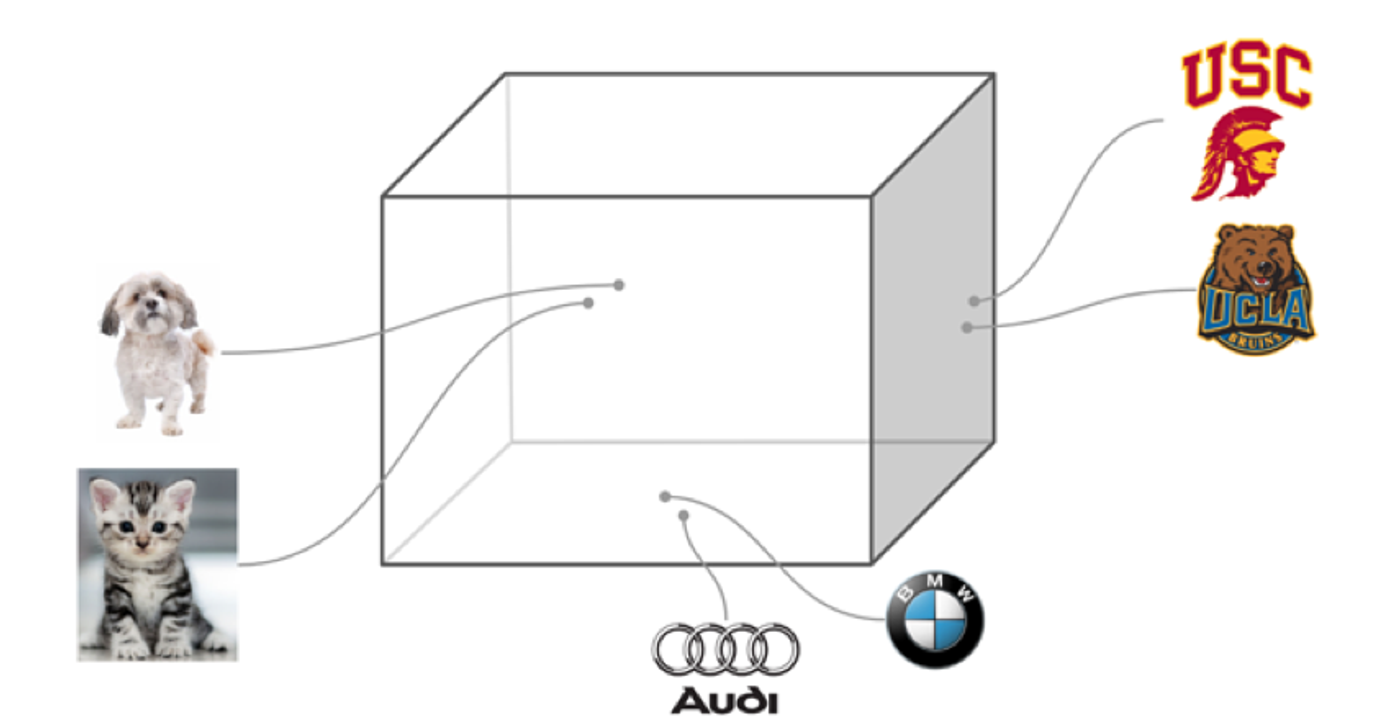

My name is Pranav Kadam, and I am a graduate student at USC pursuing a Master’s degree in Electrical Engineering. I grew up in a city called Pune, which is in the western part of India. Prior to joining USC in Fall 2018, I completed my Bachelor’s degree in Electronics and Telecommunications Engineering at Savitribai Phule Pune University. In my undergraduate coursework, I found topics like image processing and neural networks quite interesting. I did some projects in these fields and read a lot about the developments that were recent at the time, such as CNNs. I am continuing my research and specialization in Computer Vision and Machine Learning at USC.

2. What is your impression of MCL and USC?

USC is a great urban campus with excellent educational and research facilities. A lot of emphasis is placed on mastering the fundamental topics and concepts needed for a long-term career in one’s field of interest. In addition, Los Angeles is a large metropolis with plenty of options for entertainment, food and sightseeing, all very close to campus, making it an ideal city to be in during graduate studies. MCL is a team of talented and passionate students headed by Prof. Kuo, who is very experienced, insightful and supportive. Lab activities like research meetings and weekly seminars ensure that everyone is aware of each other’s work and that there is a strong bond among members.

3. What are your future expectations and plans in MCL?

I would like to work under the guidance of Prof. Kuo and develop the necessary skills to be a successful researcher. [...]