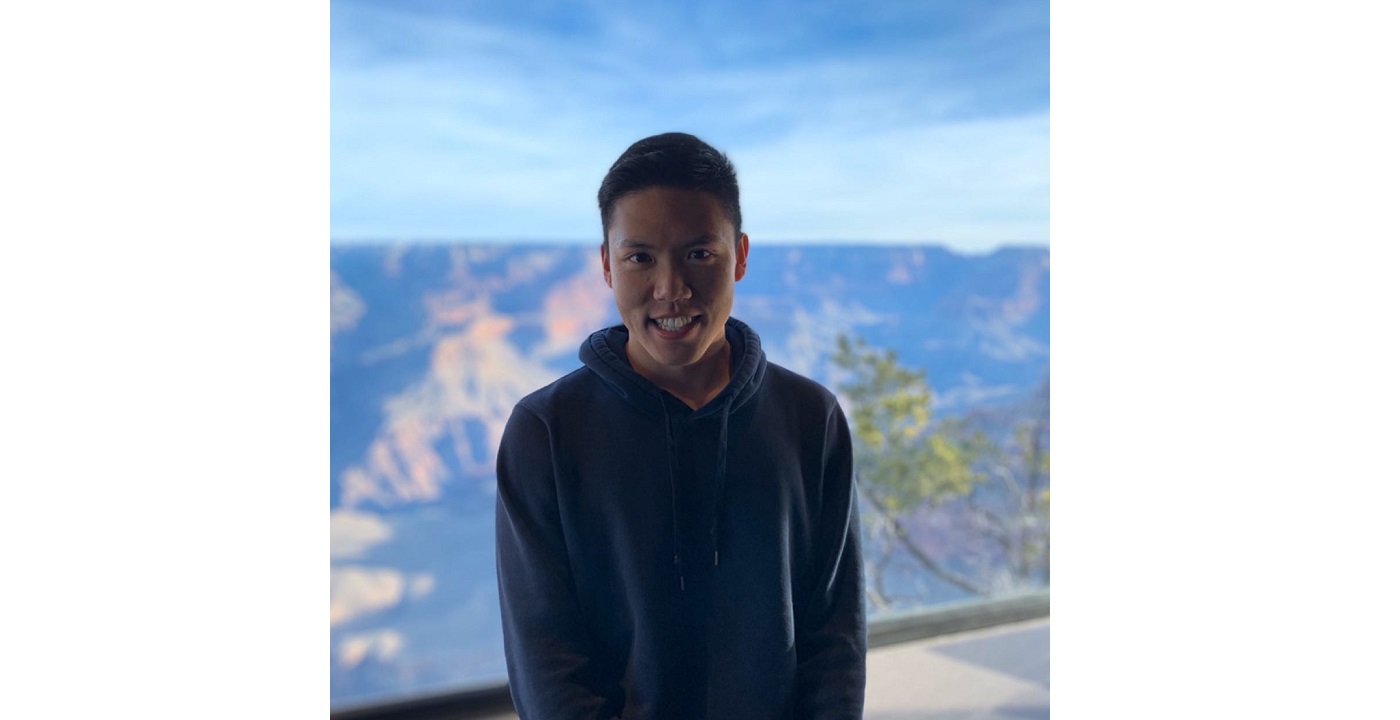

Welcome MCL New Member Ganning Zhao

Could you briefly introduce yourself and your research interests?

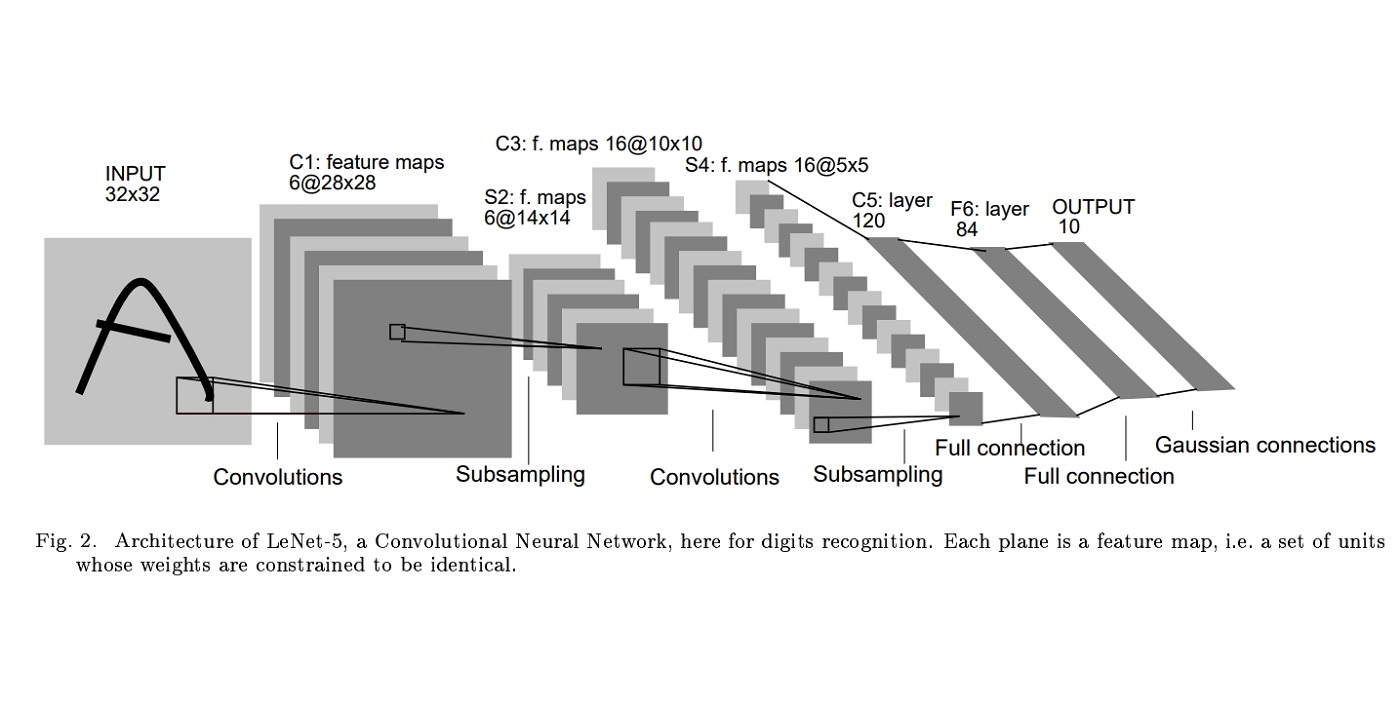

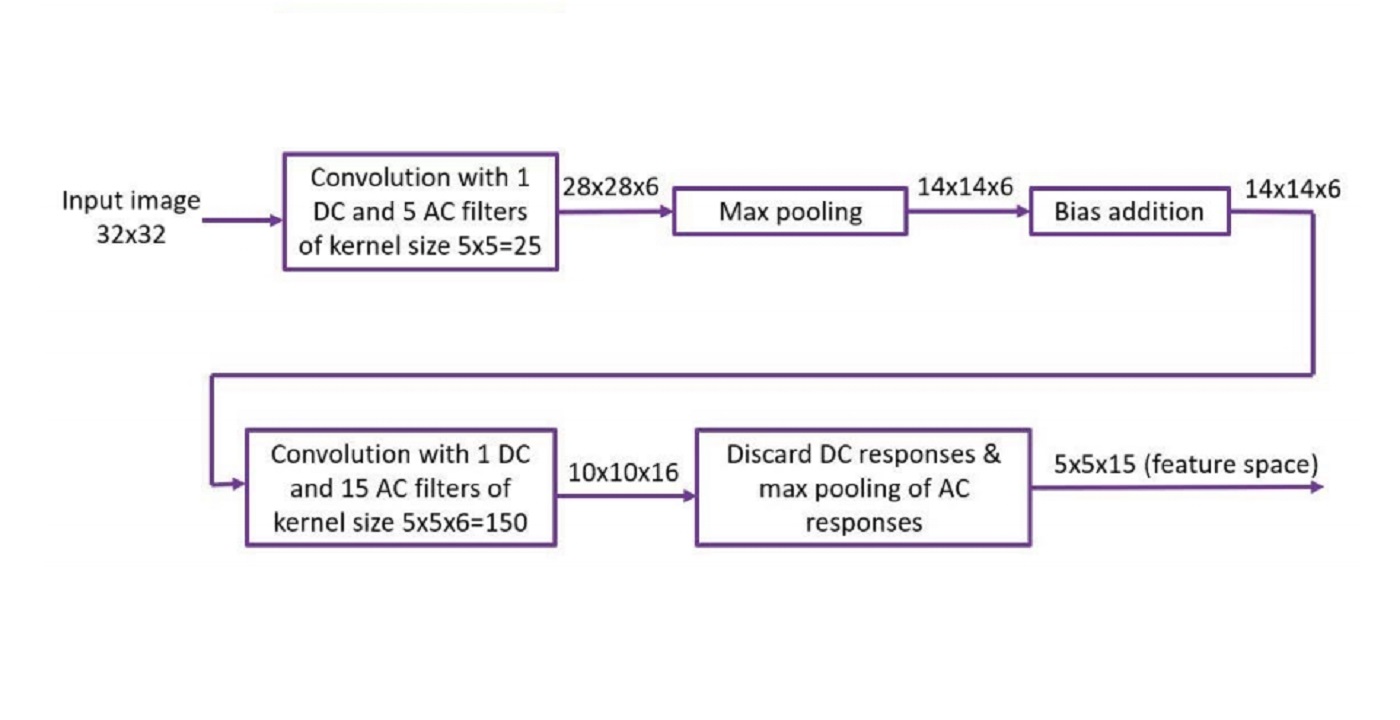

My name is Ganning Zhao. I was born in Weifang, China. This is my first semester at USC, where I am pursuing a master’s degree in Electrical Engineering. I graduated from Guangdong University of Technology, majoring in automation. As an undergraduate, I worked with friends on an audio signal processing project using convolutional neural networks, and I have been interested in signal processing and machine learning ever since. My current research interests are image processing and computer vision.

What is your impression of MCL and USC?

I like the USC campus because the buildings are beautiful. In particular, there are many libraries at USC, and many of them are exquisite. People in MCL are intelligent and work very hard. I’m also impressed that they are very nice and always willing to help each other. Professor Kuo is also very nice and cares about everyone in the lab. I like the atmosphere here in MCL.

What are your future expectations and plans in MCL?

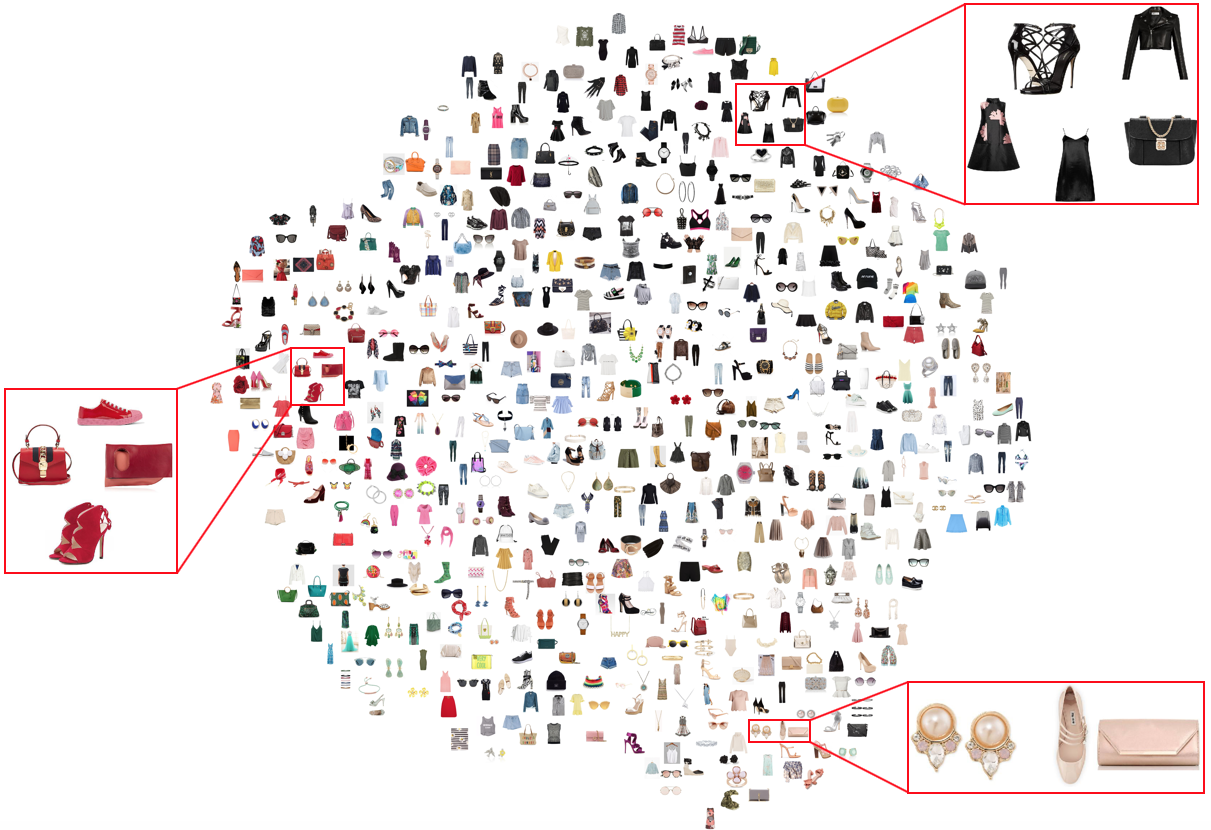

My research topic this summer is texture synthesis, and I hope to do a good job on it. More importantly, because everyone in MCL is intelligent, I hope to learn a lot from talking with them and to improve my research ability. I also want to make more friends here in MCL.