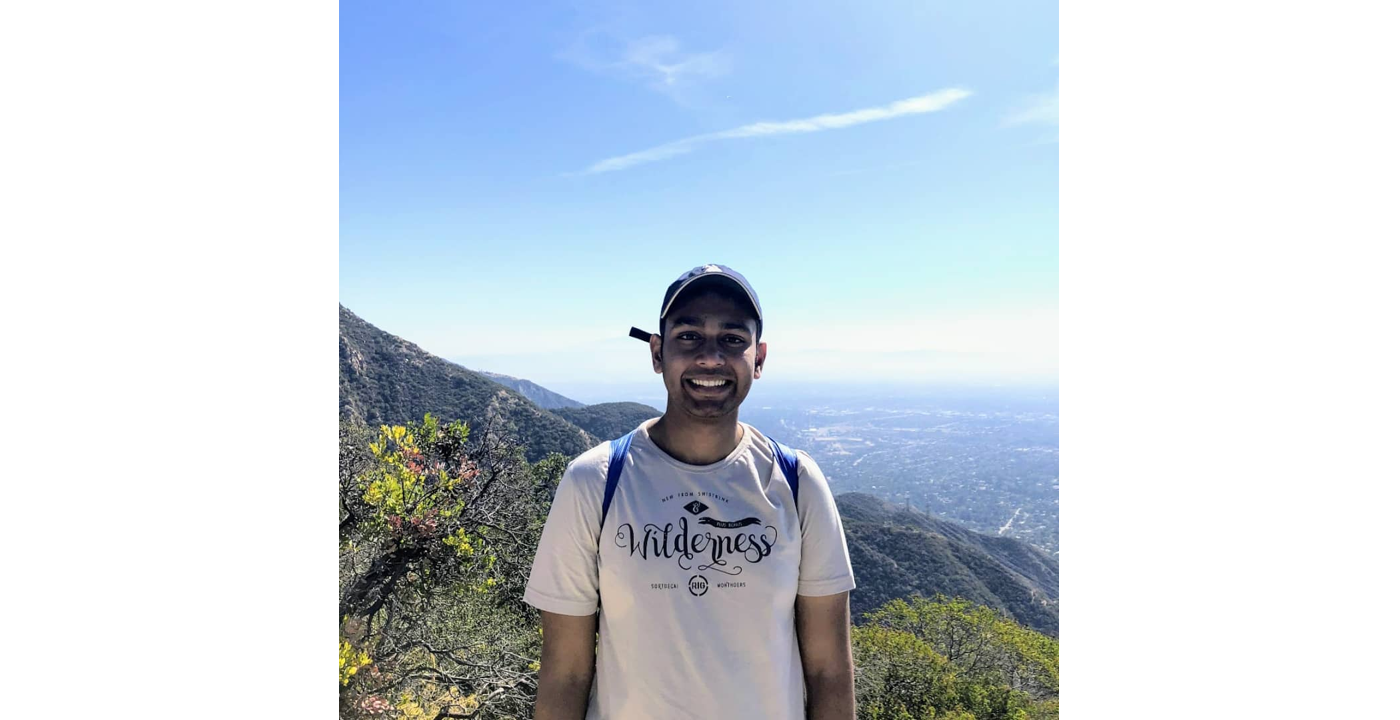

Welcome New MCL Member Vaishnavi Krishnamurthy

We are so glad to welcome our new MCL member, Vaishnavi Krishnamurthy! Here is a short interview with Vaishnavi:

1. Could you briefly introduce yourself and your research interests?

My name is Vaishnavi Krishnamurthy, and I am a graduate student at USC pursuing a Master's in Electrical Engineering with a focus on image processing and machine learning. I hail from Bengaluru, a city in the southern part of India. I completed my Bachelor's degree in Electronics and Communication Engineering at Rashtreeya Vidyalaya College of Engineering, Bengaluru. After my undergraduate studies and before joining USC, I worked for a year as a Senior Executive in Networks at Bharti Airtel, Gurugram.

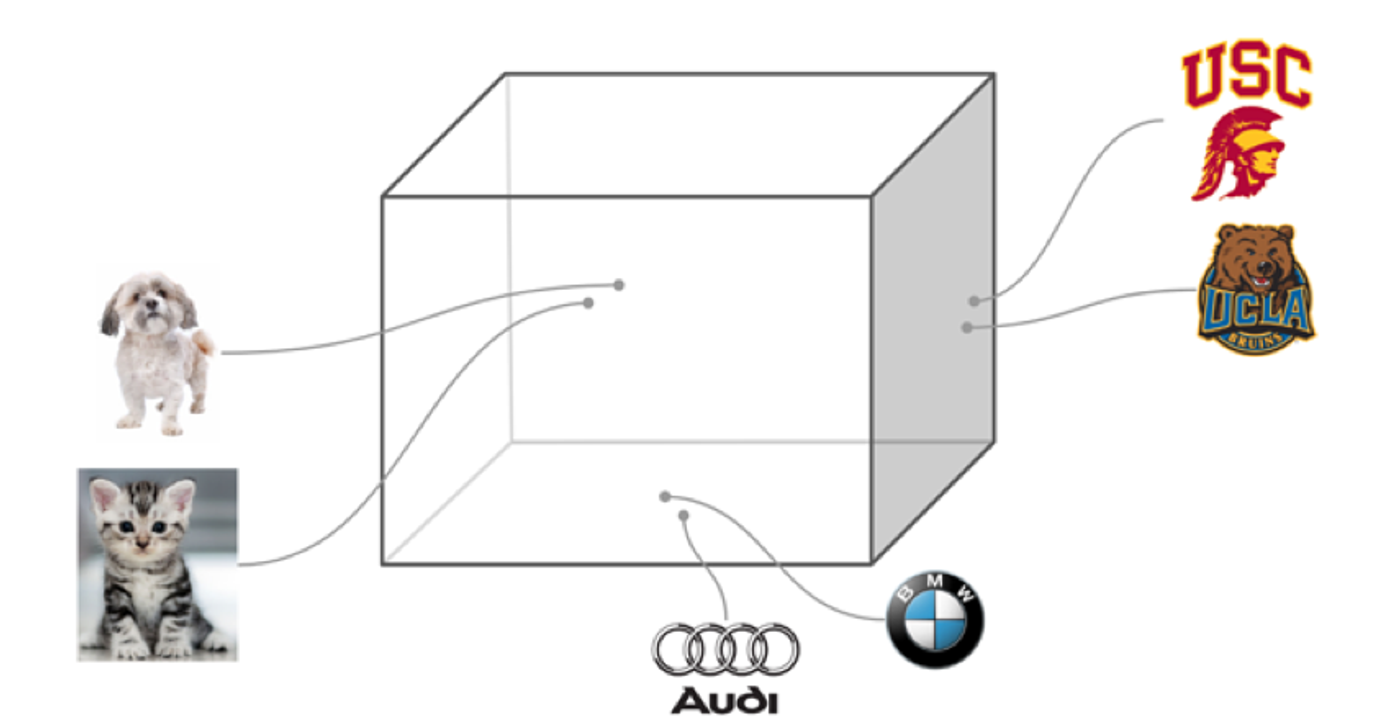

Broadly, my research interest lies in solving current challenges in image processing using deep learning approaches. I developed an interest in this field while working on a biomedical imaging project that aimed at brain tissue segmentation for tumor detection. Currently, I am continuing my research on semantic image segmentation at the MCL lab at USC.

2. What is your impression of MCL and USC?

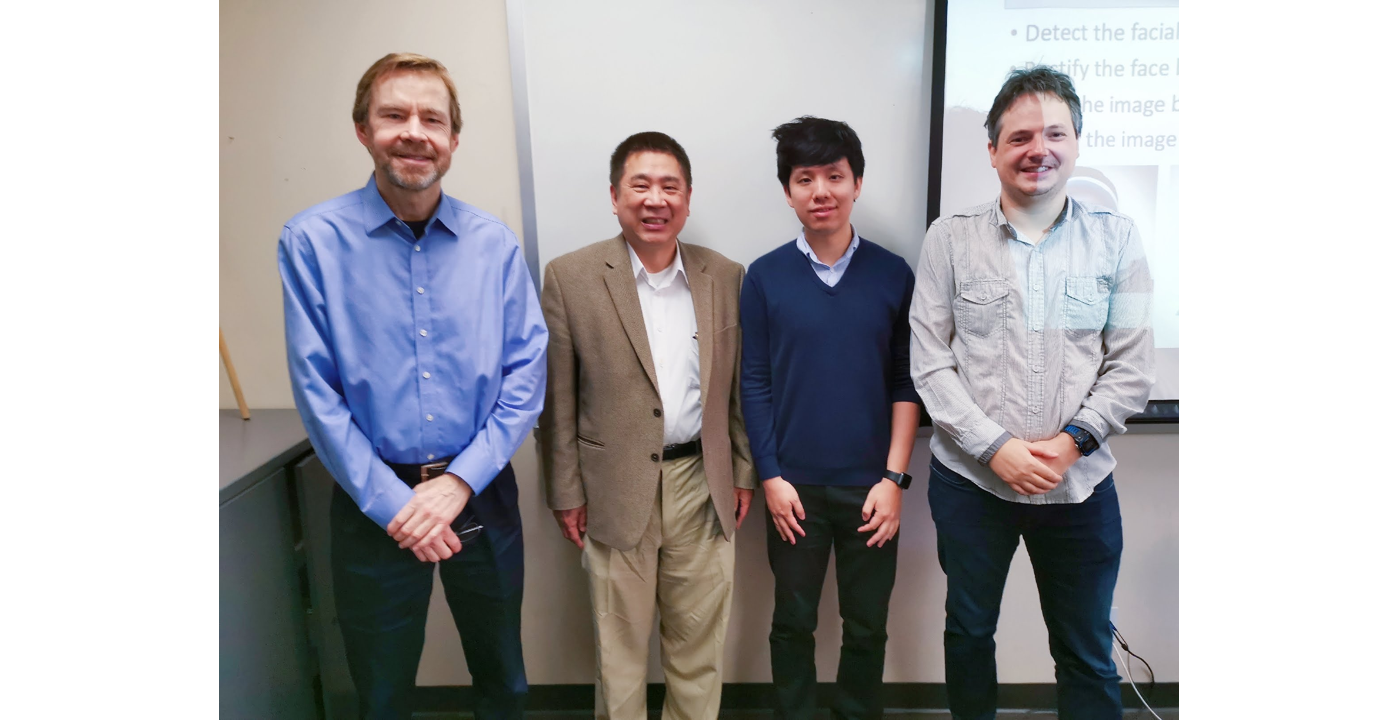

In my initial days at USC, I found the coursework challenging, and it took a while for me to cope with the fast-paced curriculum. Student life at USC has taught me that consistency is the key to achieving excellence in any given field. For a toddler in research like me, the MCL lab has provided an extremely conducive environment. Working under the guidance of Prof. Jay Kuo and his extremely motivated and helpful group of PhD students has been an amazing experience, and I hope to use this opportunity to learn more and hone my research skills.

3. What is [...]