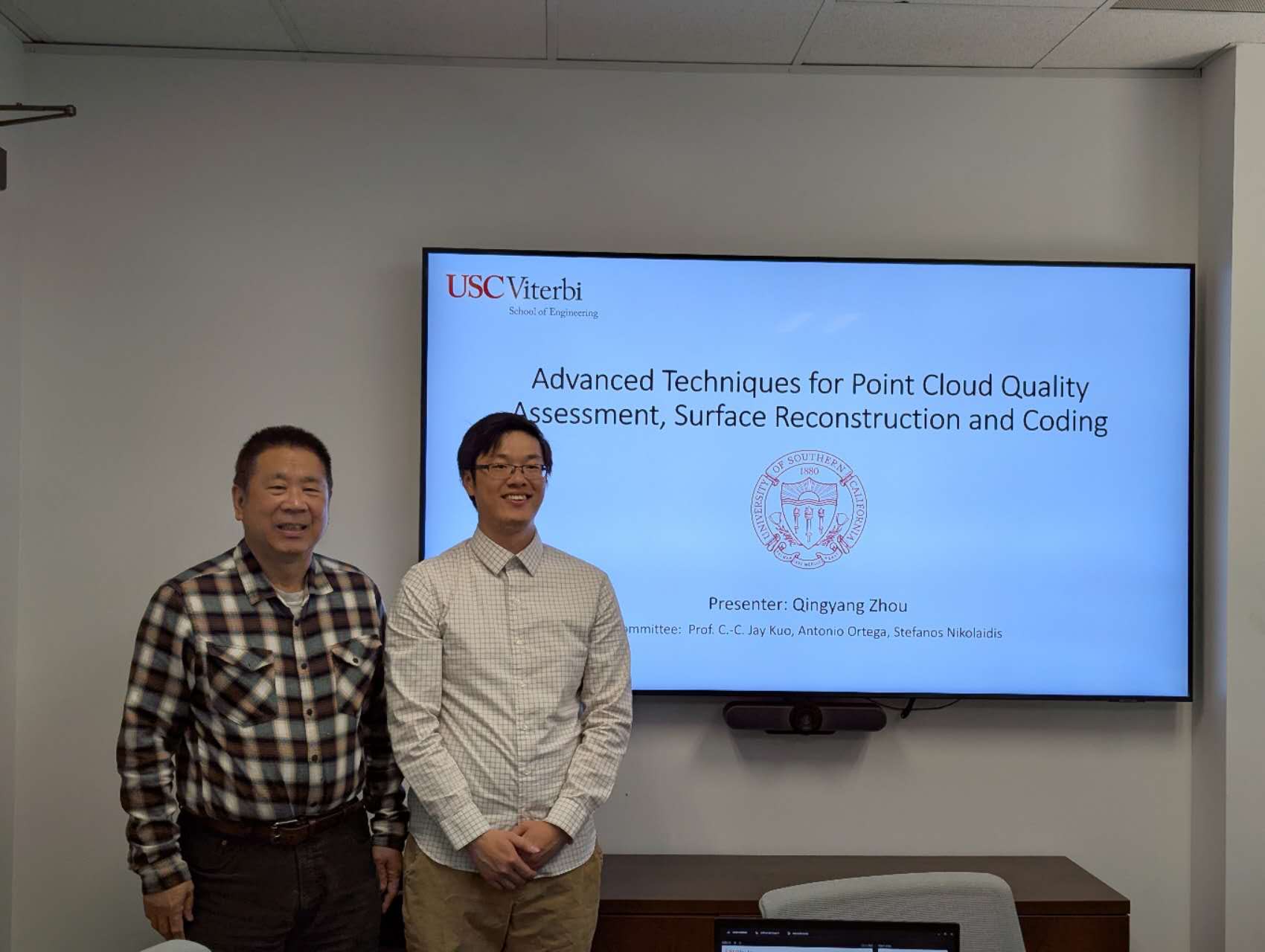

Welcome New MCL Member James Zhan

We are very happy to welcome a new MCL member, James Zhan. Here is a quick interview with James:

1. Could you briefly introduce yourself and your research interests?

My name is Yunkai Zhan, and people usually call me James. I’m currently a rising junior undergraduate student majoring in computer science. My research interests include computer vision, machine learning, and computational social science. Outside of academia, I’m a basketball and football (soccer, though it should be called football) fan and player. I also play poker and love watching movies.

2. What is your impression of MCL and USC?

I’m very grateful to have joined MCL to work with Dr. Kuo and all the students. Dr. Kuo always supports his students and provides meaningful advice and inspiring ideas. The lab has a very vibrant atmosphere, and I enjoy talking to everyone during pizza time before the seminar. If I haven’t talked to you yet, feel free to reach out to me next time.

3. What are your future expectations and plans at MCL?

I’m looking forward to contributing to projects starting this fall, learning through self-study, and collaborating with experienced researchers. I’m really excited to work on transparent and interpretable Green Learning.