Authors: Jia He, Xiang Fu, Shangwen Li, Chang-Su Kim, and C.-C. Jay Kuo

Research Problem

The visual attention of an image, also known as its saliency, refers to the regions and contents of the image that attract the human eye. These may be regions with high contrast, bright luminance, vivid color, or clear scene structure, or they may be semantic objects that a viewer expects to see. Our research aims to learn visual attention from an image database and then to develop image characterization and classification algorithms based on the learned visual attention features. These algorithms will be applied to image compression, retargeting, annotation, segmentation, retrieval, and related tasks.

Main Ideas

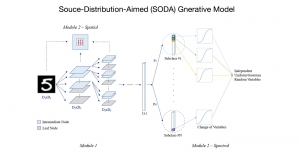

Image saliency has been widely studied in recent years. However, most work focuses on extracting the saliency map of an image using a bottom-up context computation framework [1–5]. The saliency computed this way does not always match human visual attention, since humans tend to be attracted by things that match their particular interests. To bridge this gap, the learning of visual attention should combine bottom-up and top-down frameworks. To this end, we are building a hierarchical human perceptual tree and learning image visual attention together with detailed image characteristics, including each salient region’s appearance, semantics, attention priority, and intensity. Image classification will then be based on the content of the salient area and its saliency intensity. Our system will not only capture the locations of visual attention regions in an image but also estimate their priorities and intensities.
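For readers unfamiliar with the bottom-up side, the following is a minimal sketch of a contrast-based saliency map, loosely in the spirit of frequency-tuned detection [3]. It is not the method proposed on this page. The simplifications are assumptions made for brevity: it works on raw color channels rather than a perceptual color space, it uses a box blur instead of a Gaussian, and the function names `box_blur` and `contrast_saliency` are hypothetical.

```python
import numpy as np


def box_blur(channel, radius=1):
    """Blur a 2-D array with a (2*radius+1)^2 mean filter, using edge padding."""
    padded = np.pad(channel, radius, mode="edge")
    size = 2 * radius + 1
    h, w = channel.shape
    out = np.zeros((h, w), dtype=float)
    # Sum every shifted copy of the image that falls inside the window.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (size * size)


def contrast_saliency(image, radius=1):
    """Return an H x W saliency map in [0, 1] for an H x W x C image.

    Each pixel's saliency is the distance between its blurred color and the
    mean color of the whole image (a simplified, assumed variant of [3]).
    """
    image = np.asarray(image, dtype=float)
    channels = image.shape[-1]
    blurred = np.stack(
        [box_blur(image[..., c], radius) for c in range(channels)], axis=-1
    )
    mean_color = image.reshape(-1, channels).mean(axis=0)
    saliency = np.linalg.norm(blurred - mean_color, axis=-1)
    peak = saliency.max()
    # Avoid dividing by zero on a perfectly uniform image.
    return saliency / peak if peak > 0 else saliency
```

A map like this marks where low-level contrast is strong, but it carries no notion of what the viewer is looking for, which is the gap that the top-down component is meant to fill.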

Future Challenges

Building a hierarchical human perceptual tree for visual attention learning will be challenging because of its complexity and because little prior work exists on this kind of modeling. We aim to make the perceptual tree model as close as possible to the real human perceptual system.

References

- [1] Judd, Tilke, et al. “Learning to predict where humans look.” 2009 IEEE 12th International Conference on Computer Vision. IEEE, 2009.

- [2] Borji, Ali, and Laurent Itti. “State-of-the-art in visual attention modeling.” (2013): 1-1.

- [3] Achanta, Radhakrishna, et al. “Frequency-tuned salient region detection.” 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2009.

- [4] Goferman, Stas, Lihi Zelnik-Manor, and Ayellet Tal. “Context-aware saliency detection.” IEEE Transactions on Pattern Analysis and Machine Intelligence 34.10 (2012): 1915-1926.

- [5] Cheng, Ming-Ming, et al. “Global contrast based salient region detection.” 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2011.

- [6] He, Jia, Chang-Su Kim, and C.-C. Jay Kuo. “Interactive Image Segmentation Techniques: Algorithms and Performance Evaluation.” Springer Singapore, 2014. 17-62.