Congratulations to Mahtab Movahhedrad for Passing Her Qualifying Exam

Congratulations to Mahtab Movahhedrad for passing her qualifying exam! Her thesis proposal is titled “Explainable Machine Learning for Efficient Image Processing and Enhancement.” Her Qualifying Exam Committee members include Jay Kuo (Chair), Antonio Ortega, Bhaskar Krishnamachari, Justin Haldar, and Meisam Razaviyayn (Outside Member). Here is a summary of her thesis proposal:

Image Signal Processors (ISPs) are critical components of modern imaging systems, responsible for transforming raw sensor data into high-quality images through a series of processing stages. Key operations such as demosaicking and dehazing directly influence color fidelity, detail preservation, and visual clarity. While traditional methods rely on handcrafted models, deep learning has recently achieved strong performance in these tasks, albeit at the cost of high computational complexity and energy consumption.

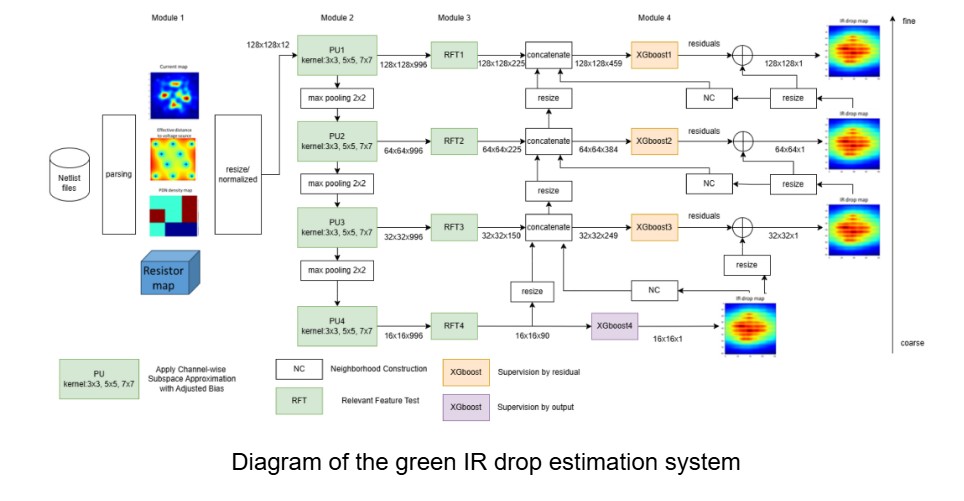

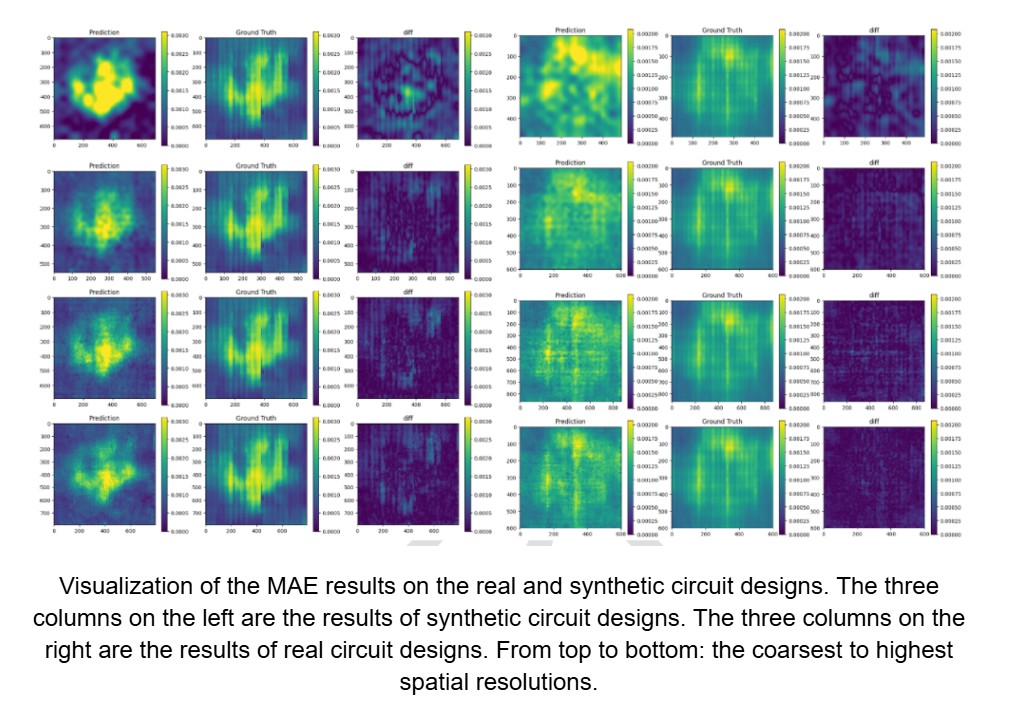

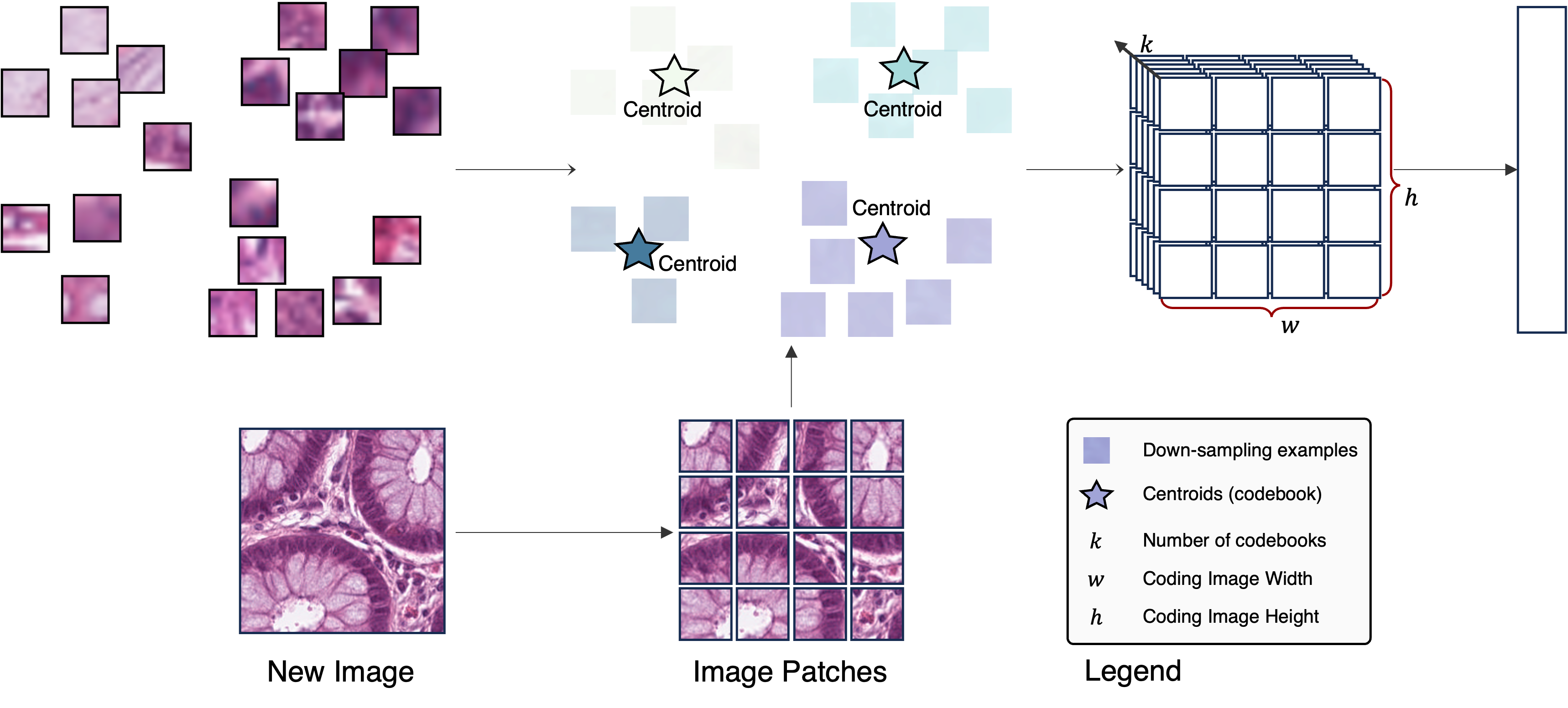

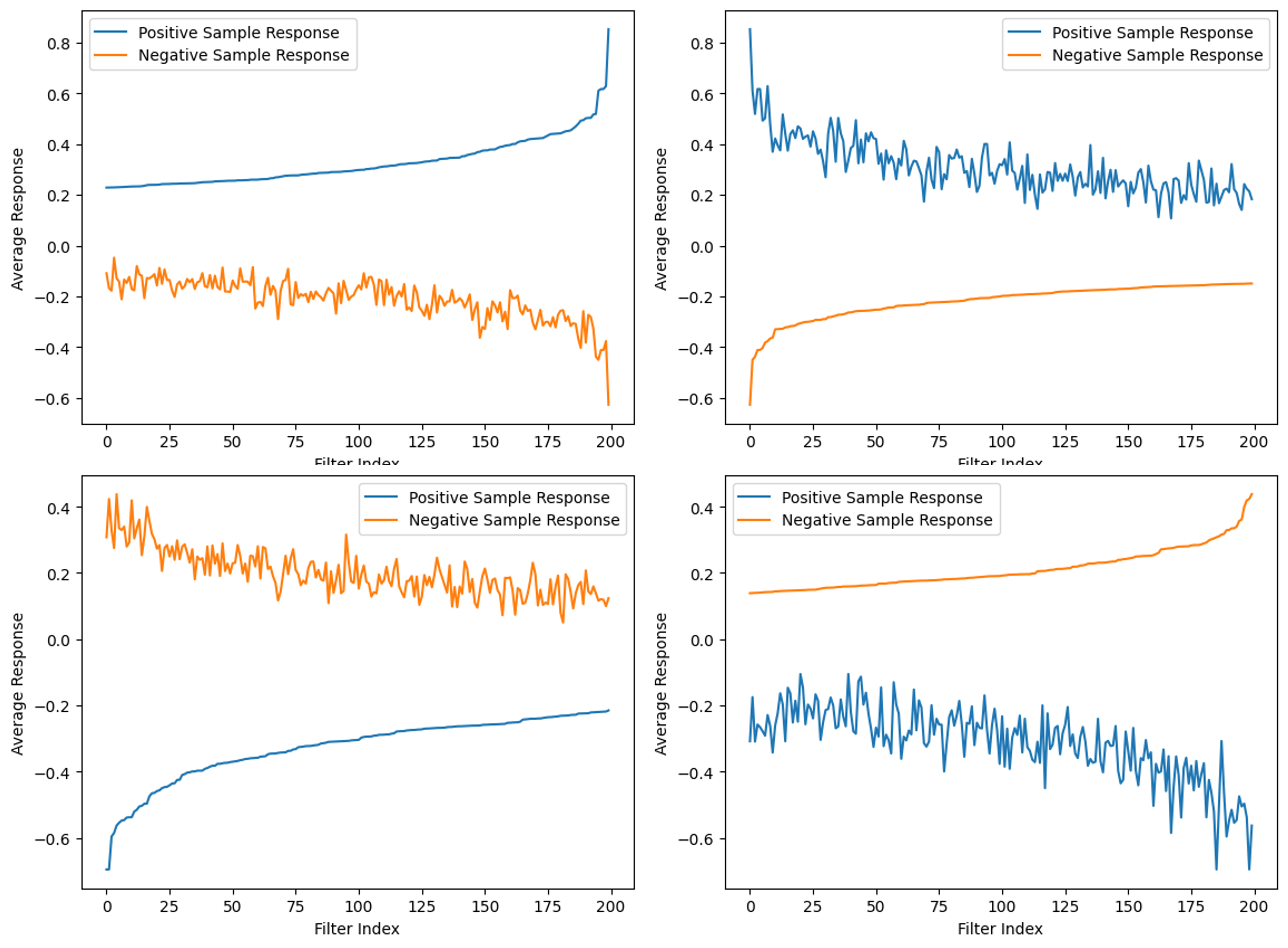

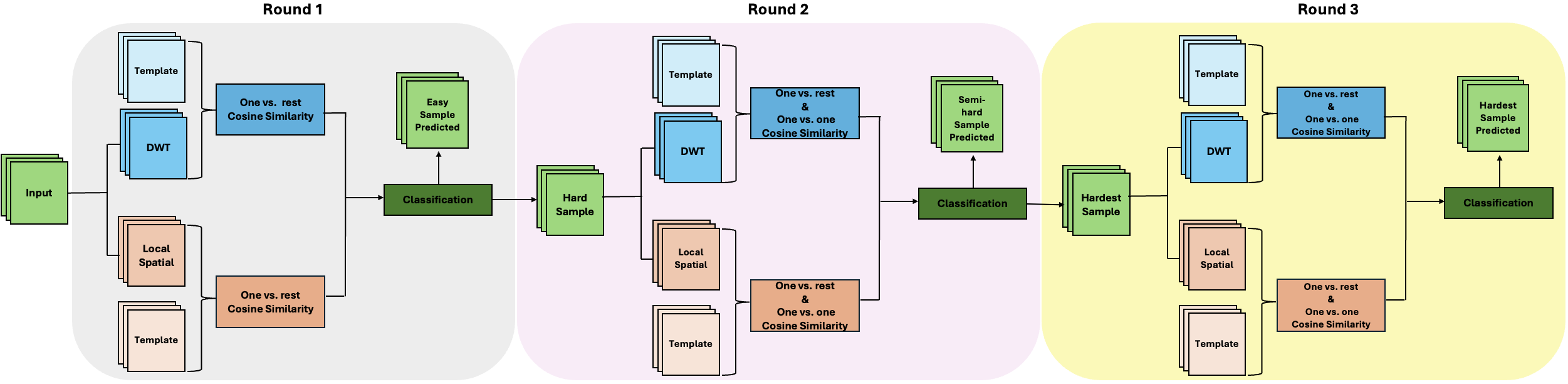
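To make the demosaicking stage concrete: a single-sensor camera records only one color per pixel through a color filter array, and demosaicking fills in the two missing channels at every pixel. The sketch below is the classic bilinear baseline, not GID or GUSID, and it assumes an RGGB Bayer layout (the helper names `bayer_channel` and `demosaic_bilinear` are hypothetical). Each missing value is the mean of the same-channel samples in the pixel's 3x3 neighborhood, which for a Bayer pattern is exactly bilinear interpolation in the image interior.

```python
def bayer_channel(y, x):
    """Channel (0=R, 1=G, 2=B) sampled at (y, x), assuming an RGGB Bayer layout."""
    if y % 2 == 0:
        return 0 if x % 2 == 0 else 1
    return 1 if x % 2 == 0 else 2


def demosaic_bilinear(raw):
    """Bilinear demosaicking of a 2D mosaic (list of rows, at least 2x2).

    Measured samples are kept; each missing channel is the mean of the
    same-channel samples inside the (border-clipped) 3x3 neighborhood.
    """
    h, w = len(raw), len(raw[0])
    rgb = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for c in range(3):
                if bayer_channel(y, x) == c:
                    rgb[y][x][c] = float(raw[y][x])  # sensor measured this channel here
                    continue
                vals = [
                    raw[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))
                    if bayer_channel(j, i) == c
                ]
                rgb[y][x][c] = sum(vals) / len(vals)  # interpolate from neighbors
    return rgb
```

For example, a mosaic of a flat color `(10, 20, 30)` is reconstructed exactly, while real edges and fine textures expose the blurring and color fringing that learned demosaickers aim to reduce.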

With the increasing demand for mobile photography, balancing image quality with resource efficiency has become essential, particularly for battery-powered devices. This work addresses this challenge by leveraging the principles of green learning (GL), which emphasizes compact model architectures and low computational complexity. The GL framework operates in three cascaded stages (unsupervised representation learning, semi-supervised feature learning, and supervised decision learning), yielding solutions that are efficient, interpretable, and reusable.

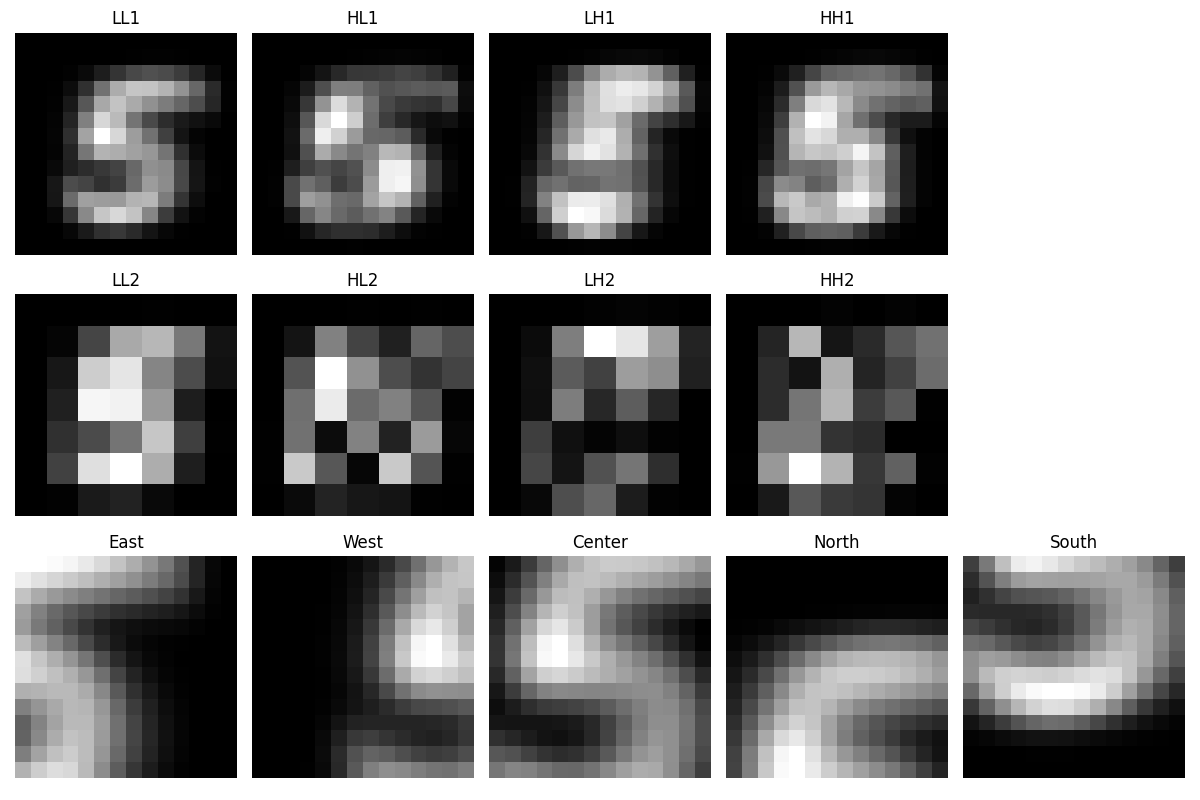
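As an illustration of the first, unsupervised stage: in the GL literature this step is commonly realized with a Saab-style transform, which splits each image patch into a DC (mean) component and zero-mean AC residuals, then learns AC kernels by PCA without any labels. The summary does not say which transform the proposal uses, so the sketch below is an assumption. It is simplified to the DC projection plus the single leading AC kernel (found by power iteration to stay dependency-free), and it omits the bias term; `dc_ac_split` and `top_ac_kernel` are hypothetical names.

```python
import math
import random


def dc_ac_split(patches):
    """Project flattened patches onto the unit DC kernel (1/sqrt(N)) * [1, ..., 1].

    Returns the DC coefficients and the zero-mean AC residuals.
    """
    dc, ac = [], []
    for p in patches:
        n = len(p)
        mean = sum(p) / n
        dc.append(mean * math.sqrt(n))  # <p, 1/sqrt(n)> = sum(p)/sqrt(n)
        ac.append([v - mean for v in p])  # what remains after removing DC
    return dc, ac


def top_ac_kernel(ac, iters=200, seed=0):
    """First PCA kernel of the AC residuals via power iteration (no labels used)."""
    n, m = len(ac[0]), len(ac)
    mu = [sum(r[i] for r in ac) / m for i in range(n)]  # center across patches
    cov = [[sum((r[i] - mu[i]) * (r[j] - mu[j]) for r in ac) / m
            for j in range(n)] for i in range(n)]
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # no AC variation in the data to capture
            return [0.0] * n
        v = [x / norm for x in w]
    return v
```

Because the AC residuals are zero-mean within each patch, their covariance maps the DC direction to zero, so the learned kernel is automatically orthogonal to the DC kernel. The resulting coefficients would then feed the feature-learning and decision stages.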

Building on this foundation, my work introduces three methods: Green Image Demosaicking (GID), Green U-Shaped Image Demosaicking (GUSID), and Green U-Shaped Learning Dehazing (GUSL-Dehaze). GID offers a modular, lightweight alternative to conventional deep neural networks, achieving competitive accuracy with minimal resource usage. GUSID extends this efficiency with a U-shaped encoder–decoder design that enhances reconstruction quality while further reducing complexity. Finally, GUSL-Dehaze combines physics-based modeling with green learning principles to restore contrast and natural colors in hazy conditions, rivaling deep learning approaches at a fraction of the cost.
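For context on "physics-based modeling" in dehazing: a widely used choice is the atmospheric scattering model, I = J·t + A·(1 − t), where I is the observed hazy pixel, J the haze-free radiance, A the global airlight, and t = exp(−β·d) the transmission at scene depth d. The summary does not specify which physical model GUSL-Dehaze builds on, so this is an assumption. The sketch below only shows the forward model and its inversion when t and A are already known; in practice both must be estimated from the image, which is where a learned component would enter. The function names and the `t_min` floor are illustrative choices.

```python
import math


def transmission(depth, beta):
    """Transmission t = exp(-beta * depth) for scattering coefficient beta."""
    return math.exp(-beta * depth)


def add_haze(rgb, t, airlight):
    """Forward model per channel: I = J * t + A * (1 - t)."""
    return tuple(j * t + a * (1.0 - t) for j, a in zip(rgb, airlight))


def dehaze_pixel(rgb, t, airlight, t_min=0.1):
    """Invert the model per channel: J = (I - A) / max(t, t_min) + A.

    Clamping t at t_min avoids amplifying noise where haze is very dense.
    """
    tc = max(t, t_min)
    return tuple((i - a) / tc + a for i, a in zip(rgb, airlight))
```

Round-tripping a pixel through `add_haze` and `dehaze_pixel` with the same t and A recovers the original colors, which shows why accurate estimates of t and A largely determine restored contrast and color.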

Together, these contributions advance ISP design by delivering high-quality, interpretable, and energy-efficient imaging solutions suitable for mobile and embedded platforms.