Professor Kuo Visited Taiwan and Had Reunions with Local MCL Alumni

Professor Kuo visited Taiwan from December 23, 2022, to January 11, 2023. This was his first visit since the start of the three-year pandemic. His main responsibility during the visit was giving a presentation on “low-light image/video enhancement” to Mediatek, which sponsored this project for the entire year of 2022. The project was successfully carried out by MCL member Zohreh Azizi. Mediatek was impressed by the low complexity and high performance of Zohreh’s proposed solution, the “Self-supervised Adaptive Low-light Video Enhancement (SALVE)” method.

Besides Mediatek, Professor Kuo visited several universities and organizations in Taiwan, including National Taiwan Normal University, National Sun-Yat-Sen University, National Cheng-Kung University, National Yang Ming Chiao Tung University, National Taiwan University, Academia Sinica, and the Institute of Information Industry. Through seminars, he promoted the green learning technology developed at MCL over the past seven years.

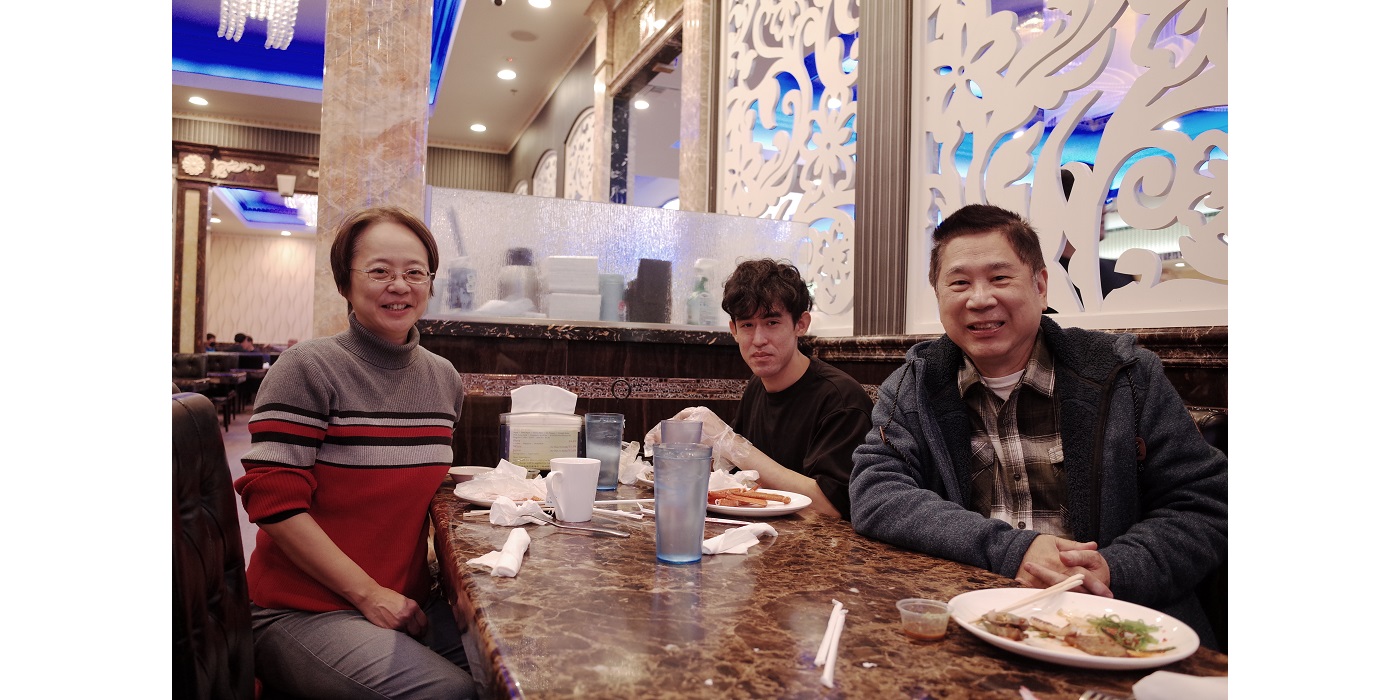

Professor Kuo also met many MCL alumni in three cities: Kao-Hsiung (December 25), Hsinchu (January 6), and Taipei (January 7). Most of them graduated from USC/MCL more than a decade ago. Professor Kuo said, “It was wonderful to meet MCL alumni in Taiwan. Glad to know all people are doing very well. It has been my biggest satisfaction to work with so many talented students at USC and see them grow into maturity as researchers/scholars.”