MCL Research Interest in Blind Video Quality Assessment

Blind Video Quality Assessment (BVQA) aims to predict the perceptual quality of a video based solely on the received video, without access to its source. BVQA is therefore essential in applications where source videos are unavailable, such as assessing the quality of user-generated content and video conferencing. Early BVQA models were distortion-specific and focused mainly on transmission- and compression-related artifacts. Later work considered spatial and temporal distortions jointly and trained a regression model on the resulting features. Although these models perform well on datasets with synthetic distortions, they do not work well on user-generated content datasets. Deep learning (DL)-based BVQA solutions, proposed more recently, outperform all of these earlier BVQA solutions.

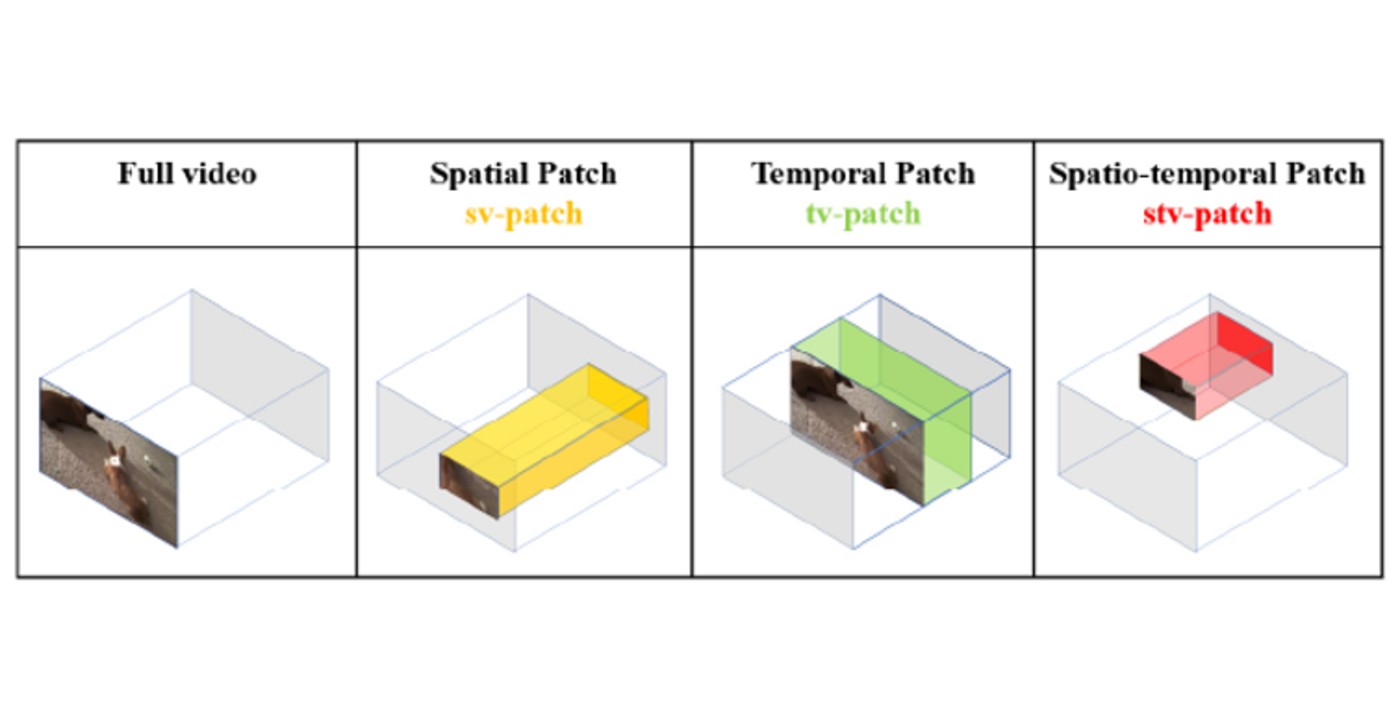

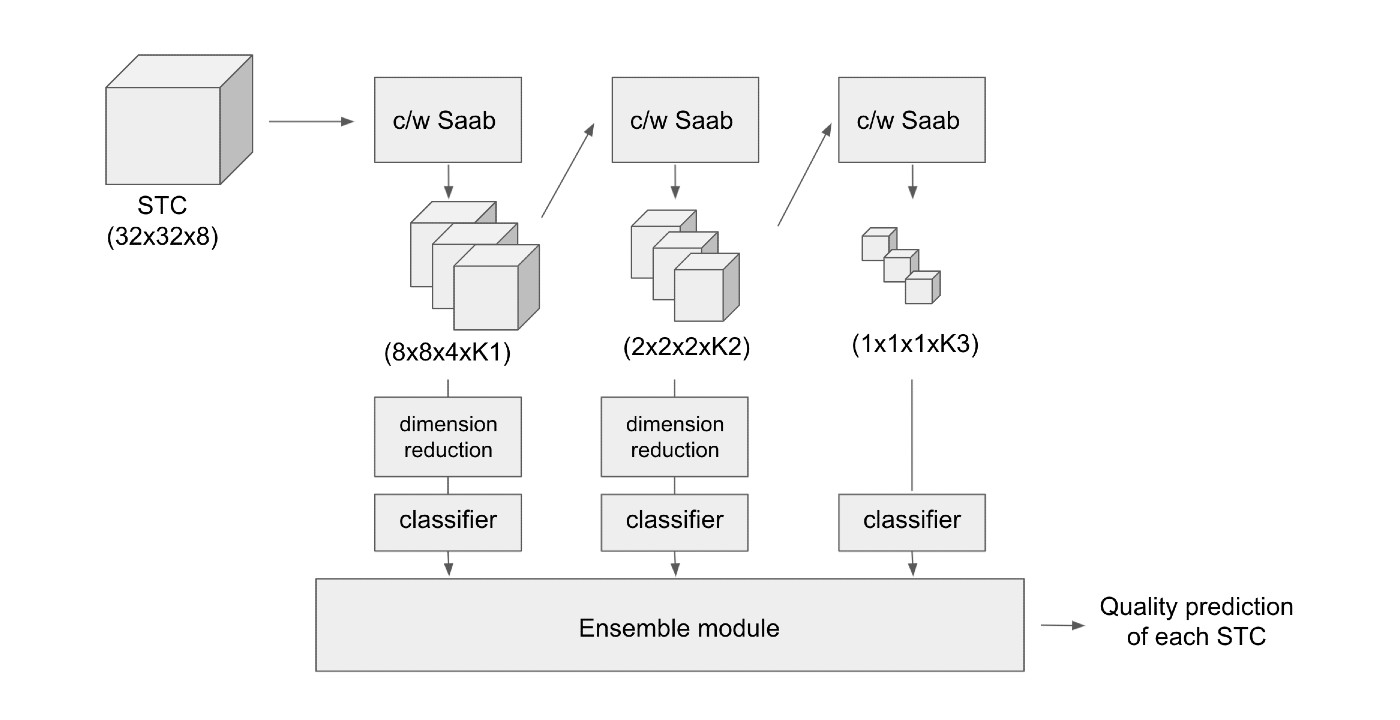

We propose to design a lightweight and interpretable BVQA solution that is suitable for mobile and edge devices while remaining competitive in performance with DL models. The first step is to select a basic processing unit for quality assessment. A full video sequence can be decomposed into smaller units in three ways. First, crop a fixed spatial region across all frames to generate a spatial video (sv) patch. Second, crop a specific temporal duration while keeping full spatial information to obtain a temporal video (tv) patch. Third, crop a partial spatial region over a small number of frames to obtain a spatial-temporal video (stv) patch, also called a spatial-temporal cube (STC). The three units are illustrated in Fig. 1. We will adopt STCs as the basic units of the proposed BVQA method: each STC receives its own BVQA score, and the individual scores are ensembled to produce the final score of the full video. The overall pipeline is shown in Fig. 2.
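The three ways of decomposing a video, and the final score ensembling, can be sketched with plain array slicing. This is a minimal illustration, not the proposed method's implementation: it assumes the video is a NumPy array shaped (frames, height, width, channels), that STCs tile the video without overlap (incomplete border cubes are dropped), and that per-STC scores are combined by simple mean pooling. The function names and cube sizes are hypothetical.

```python
import numpy as np

def sv_patch(video, y, x, h, w):
    """Spatial video (sv) patch: a fixed h x w window kept across all frames."""
    return video[:, y:y + h, x:x + w]

def tv_patch(video, t, d):
    """Temporal video (tv) patch: d consecutive frames at full resolution."""
    return video[t:t + d]

def stv_patch(video, t, d, y, x, h, w):
    """Spatial-temporal video (stv) patch, i.e. an STC: an h x w window over d frames."""
    return video[t:t + d, y:y + h, x:x + w]

def tile_stcs(video, d, h, w):
    """Split a video into non-overlapping d x h x w STCs.

    Assumption: cubes that would extend past the video borders are dropped.
    """
    T, H, W = video.shape[:3]
    return [stv_patch(video, t, d, y, x, h, w)
            for t in range(0, T - d + 1, d)
            for y in range(0, H - h + 1, h)
            for x in range(0, W - w + 1, w)]

def video_score(stc_scores):
    """Ensemble per-STC scores into one video score (mean pooling is an assumption)."""
    return float(np.mean(stc_scores))

# Usage: a dummy 30-frame, 64x96 RGB video.
video = np.zeros((30, 64, 96, 3), dtype=np.uint8)
stcs = tile_stcs(video, d=10, h=32, w=32)
print(len(stcs), stcs[0].shape)            # 18 (10, 32, 32, 3)
print(video_score([3.0, 4.0, 5.0]))        # 4.0
```

Mean pooling is only the simplest choice; a weighted ensemble (for example, weighting STCs by their estimated reliability) would slot into `video_score` without changing the decomposition.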

After the STC features are extracted, we will train a classifier on each output response and then ensemble the classifiers' decision scores to yield the BVQA score of that STC. For the model training, we [...]
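The per-response classification and score ensembling for a single STC can be sketched as follows. The text does not specify the classifier, the label scheme, or how responses are grouped, so everything here is an assumption: quality labels are obtained by thresholding mean opinion scores (MOS) at their median into ±1, each output response is an (N, D_k) feature matrix, each classifier is a least-squares (ridge) linear classifier, and the STC score is the mean of the classifiers' decision scores. All names are hypothetical.

```python
import numpy as np

def binarize_mos(mos):
    """Hypothetical labeling: +1 for MOS at or above the median, -1 otherwise."""
    return np.where(mos >= np.median(mos), 1.0, -1.0)

def _with_bias(X):
    # Append a constant column so the last weight acts as a bias term.
    return np.hstack([X, np.ones((X.shape[0], 1))])

def fit_ridge_classifier(X, y, lam=1.0):
    """Ridge linear classifier on {-1, +1} labels (the bias is regularized too)."""
    Xb = _with_bias(X)
    A = Xb.T @ Xb + lam * np.eye(Xb.shape[1])
    return np.linalg.solve(A, Xb.T @ y)

def decision_scores(w, X):
    """Signed distance-like score: positive means 'higher quality'."""
    return _with_bias(X) @ w

def train_per_response(responses, y, lam=1.0):
    """Train one classifier per output response (list of (N, D_k) arrays)."""
    return [fit_ridge_classifier(R, y, lam) for R in responses]

def stc_scores(models, responses):
    """Ensemble: average the per-response decision scores for each STC."""
    per_resp = np.stack([decision_scores(w, R) for w, R in zip(models, responses)])
    return per_resp.mean(axis=0)

# Usage on synthetic data: 200 STCs, 3 responses with 4 noisy features each.
rng = np.random.default_rng(0)
N = 200
y = binarize_mos(rng.uniform(1.0, 5.0, N))
responses = [y[:, None] + rng.normal(size=(N, 4)) for _ in range(3)]
models = train_per_response(responses, y)
scores = stc_scores(models, responses)
print(np.mean(np.sign(scores) == y))  # training accuracy of the ensemble
```

Averaging decision scores keeps each response's classifier small and inspectable, which is in line with the interpretability goal; a regressor on continuous MOS could replace the ridge classifier if finer-grained scores are needed.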