MCL Research on Depth Estimation from Images

The goal of depth estimation is to predict a high-quality dense depth map from a single RGB input image. A depth map is an image in which each pixel stores the distance from the camera to the surface of the scene object visible at that pixel. Depth estimation is crucial for scene understanding because accurate scene analysis benefits from additional information: with an estimated depth map, we have not only the color information from the RGB image but also distance information.
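To make the idea of "color plus distance" concrete, here is a minimal sketch of how a depth map can sit alongside an RGB image as one extra channel. The helper name `make_rgbd`, the array sizes, and the assumption that depth is stored in meters are all illustrative and not taken from our pipeline.

```python
import numpy as np

def make_rgbd(rgb, depth):
    """Stack an H x W x 3 RGB image and an H x W depth map into H x W x 4."""
    if rgb.shape[:2] != depth.shape:
        raise ValueError("RGB image and depth map must share height and width")
    # Cast both to float32 so color and distance can live in one array.
    return np.concatenate(
        [rgb.astype(np.float32), depth[..., None].astype(np.float32)],
        axis=-1,
    )

# Synthetic inputs (hypothetical values, for illustration only).
rgb = np.zeros((4, 6, 3), dtype=np.uint8)        # a tiny black image
depth = np.full((4, 6), 2.5, dtype=np.float32)   # assumed: every pixel 2.5 m away

rgbd = make_rgbd(rgb, depth)
print(rgbd.shape)  # (4, 6, 4)
```

Each pixel of `rgbd` then carries three color values followed by one distance value, which is the extra cue a scene-analysis step could use.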

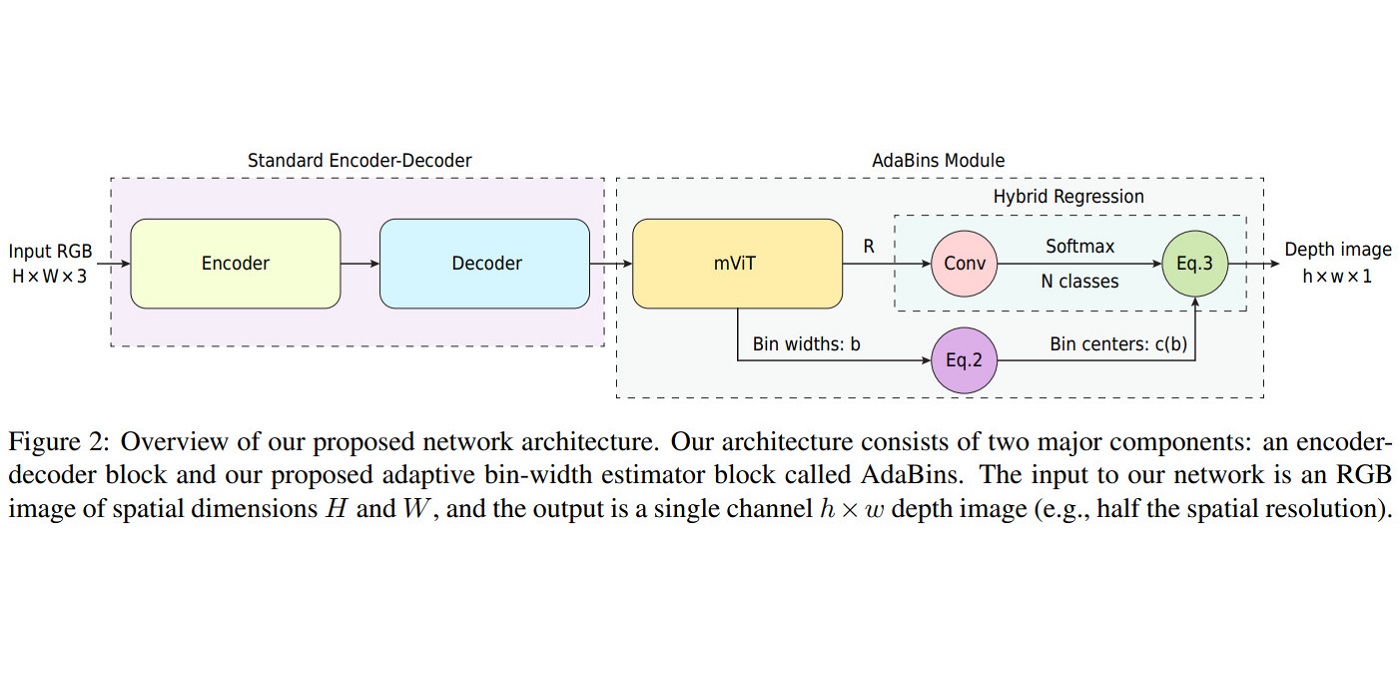

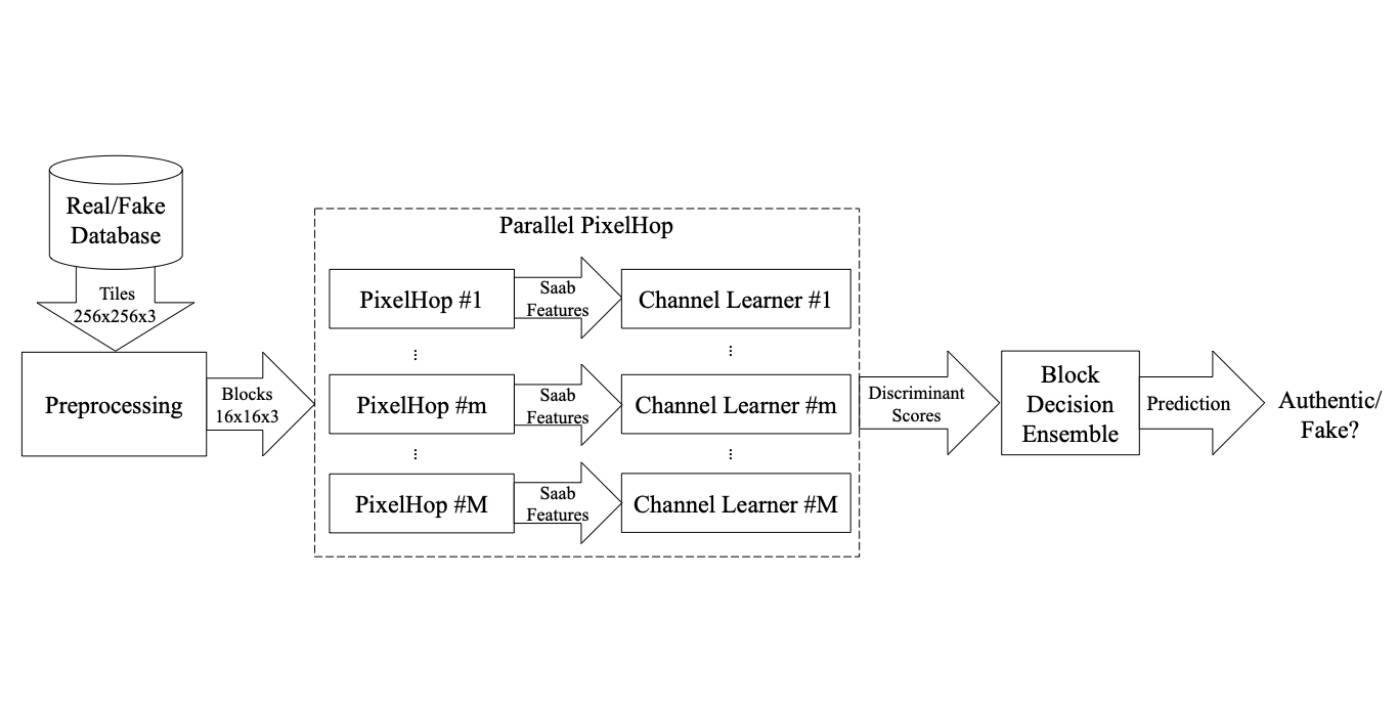

Currently, most depth estimation methods, for example AdaBins[1], use deep learning with an encoder-decoder structure, which is costly in both time and computational resources. We aim to design a method based on successive subspace learning that requires fewer computational resources and is mathematically explainable, while maintaining high performance.
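As a rough illustration of what a subspace-learning building block can look like, the sketch below fits a PCA basis to small image patches and projects the patches onto it. This is a generic single-stage example under our own assumptions (non-overlapping 4 x 4 patches, 5 retained components, random input data, hypothetical helper names); it is not the method shown in the figures, and a successive pipeline would cascade several such stages.

```python
import numpy as np

def extract_patches(img, size):
    """Cut a 2-D array into non-overlapping size x size patches, one per row."""
    h, w = img.shape
    h, w = h - h % size, w - w % size          # drop any ragged border
    blocks = img[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.transpose(0, 2, 1, 3).reshape(-1, size * size)

def fit_subspace(patches, k):
    """Return the patch mean and the top-k principal directions (as rows)."""
    mean = patches.mean(axis=0)
    _, _, vt = np.linalg.svd(patches - mean, full_matrices=False)
    return mean, vt[:k]

def project(patches, mean, basis):
    """Project centered patches onto the learned subspace."""
    return (patches - mean) @ basis.T

rng = np.random.default_rng(0)
img = rng.random((32, 32))                      # stand-in for one image channel
patches = extract_patches(img, 4)               # 64 patches, 16 values each
mean, basis = fit_subspace(patches, 5)
feats = project(patches, mean, basis)
print(feats.shape)  # (64, 5)
```

Because every step is a closed-form linear operation, the learned basis can be inspected directly, which is the kind of mathematical transparency we are aiming for.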

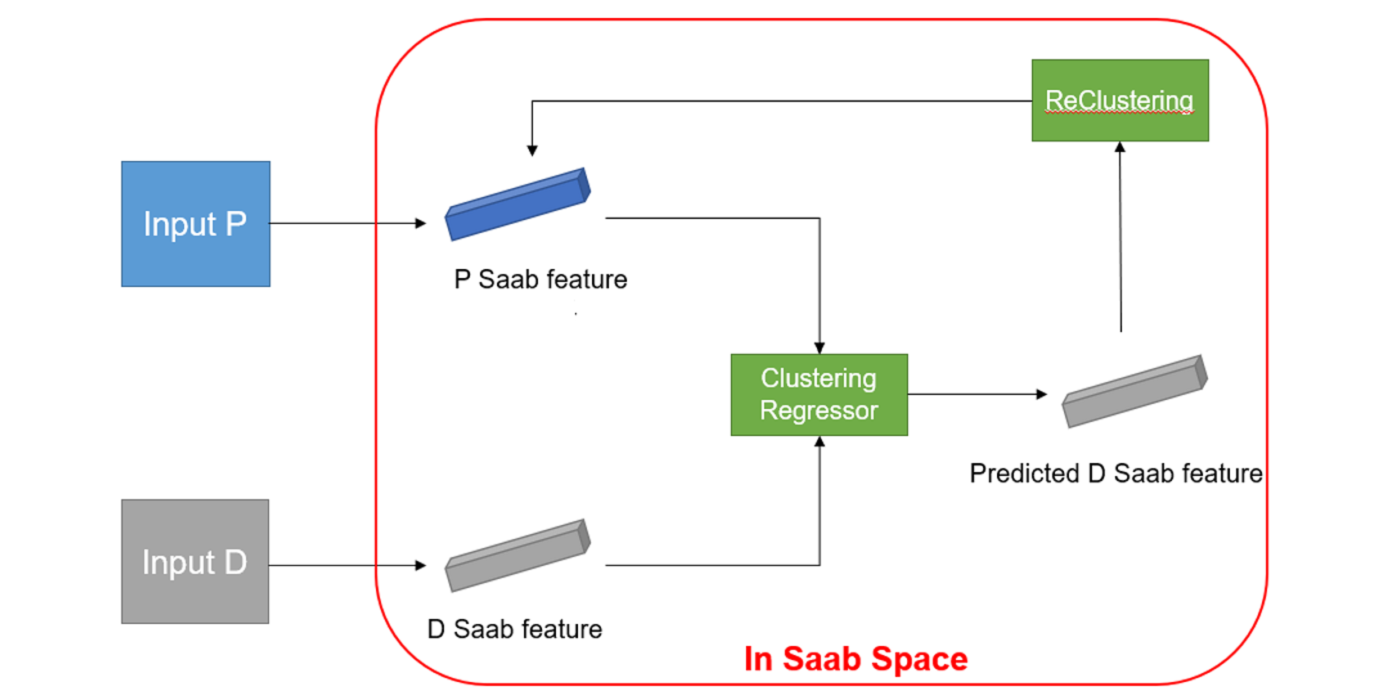

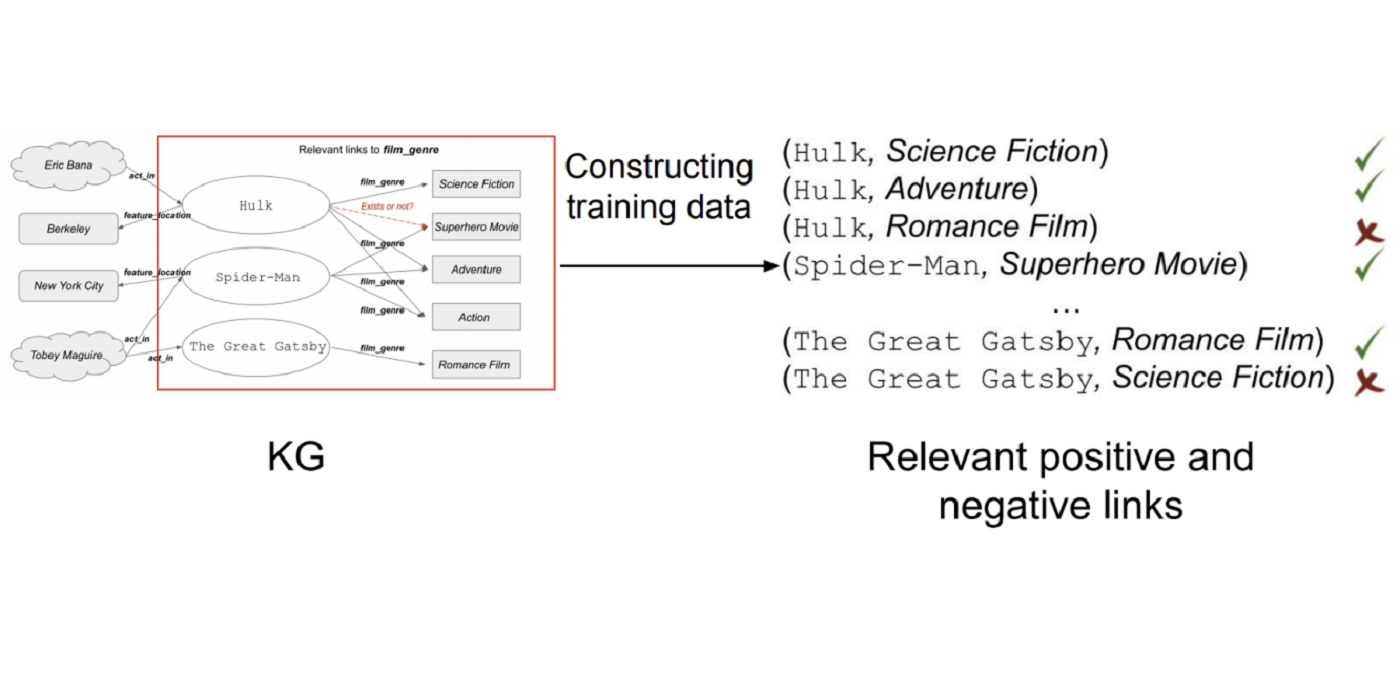

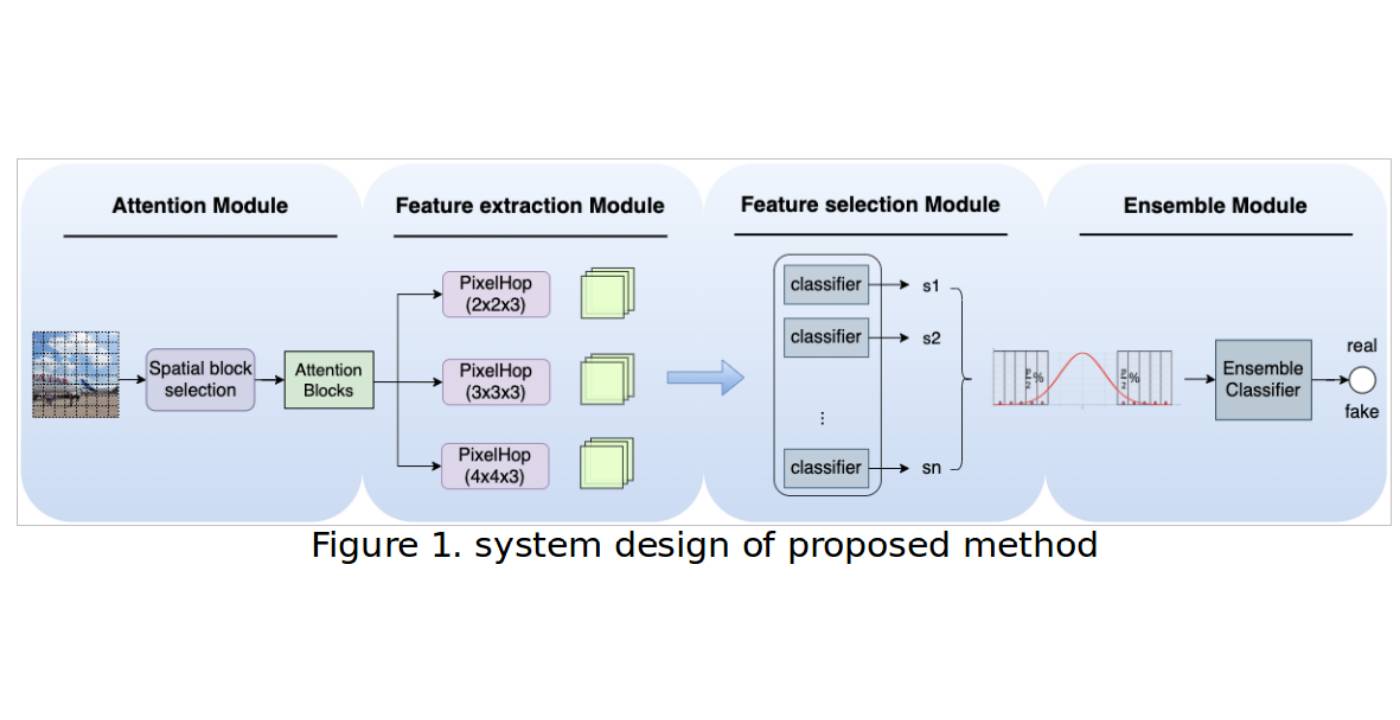

We use the NYU-Depth-v2 dataset[2] for training. We have proposed the method shown in the second image and obtained some results. In the second image, P represents the RGB images after conversion and D represents the corresponding depth images. In the future, we aim to improve the results by refining the model.

— By Ganning Zhao

References:

[1] Bhat, S. F., Alhashim, I., & Wonka, P. (2021). AdaBins: Depth estimation using adaptive bins. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 4009-4018).

[2] Silberman, N., Hoiem, D., Kohli, P., & Fergus, R. (2012, October). Indoor segmentation and support inference from RGBD images. In European Conference on Computer Vision (pp. 746-760). Springer, Berlin, Heidelberg.

Image credits:

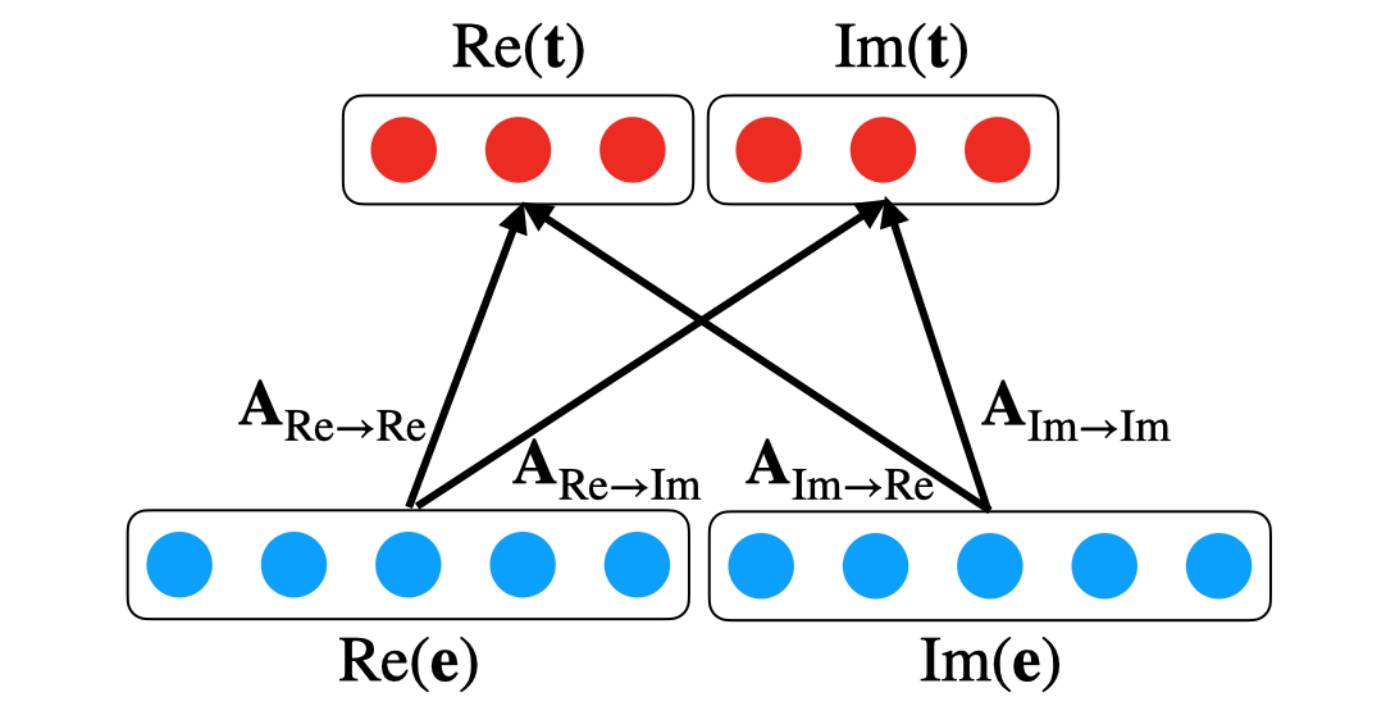

Image showing the architecture of AdaBins is from [1].

Image showing the architecture of our current method.