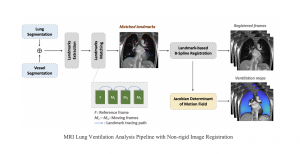

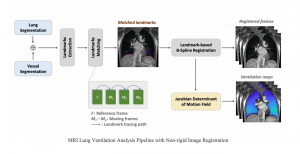

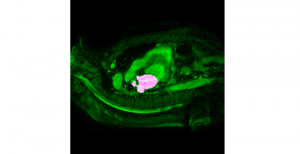

Research on the future development of health care systems is always a significant endeavor, since it touches many people's lives. AI advances in the last decade have given rise to new applications whose key aim is to increase the level of automation of tasks currently carried out by experts. In particular, medical image analysis is a fast-growing area that has been revolutionized by modern AI algorithms for visual content understanding. Magnetic Resonance Imaging (MRI) is widely used by radiologists to shed more light on a patient's health condition. It provides useful cues that help experts decide on an appropriate treatment plan, while causing less discomfort to the patient and lowering the economic cost of treatment.

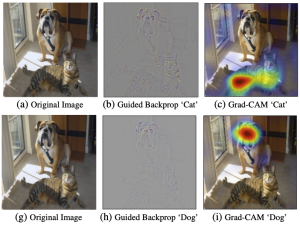

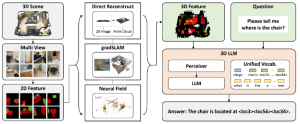

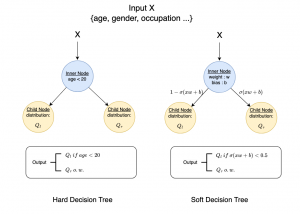

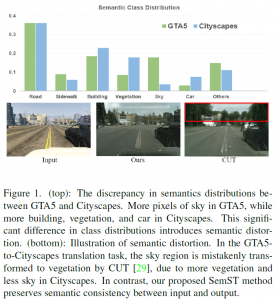

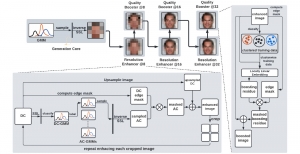

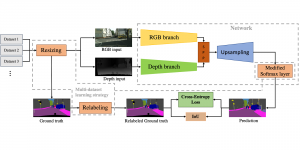

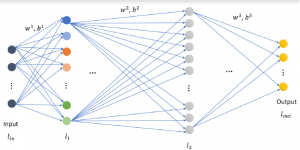

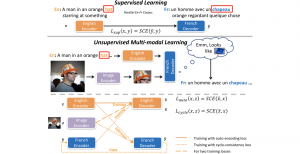

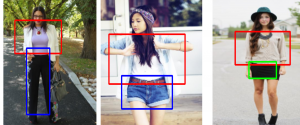

The question then arises: how can modern AI help automate the diagnosis process and give experts a second, more objective opinion? Many research ideas from the visual understanding area adopt the deep learning (DL) paradigm, training Deep Neural Networks (DNNs) to learn end-to-end representations for tumor classification, lesion detection, organ segmentation, survival prediction, etc. Yet using DNNs in medical image analysis has some limitations. First, it is often hard to collect enough real samples to train DL models. Second, decisions made by machines need to be transparent to physicians, who should be aware of the factors that led to those decisions, so that the decisions are more trustworthy. DNNs are often perceived as "black-box" models, since their feature representations and decision paths are hard to interpret.

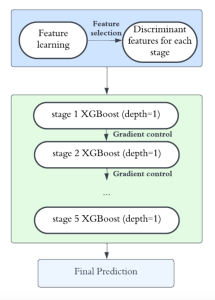

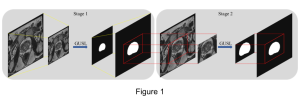

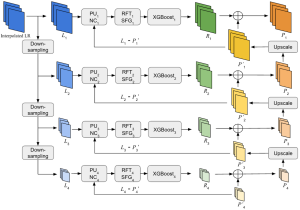

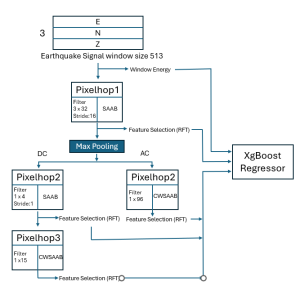

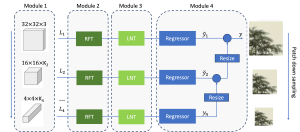

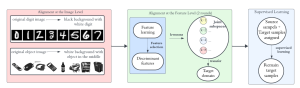

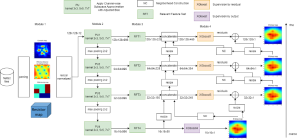

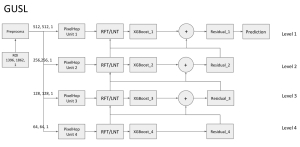

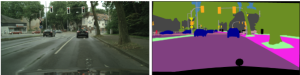

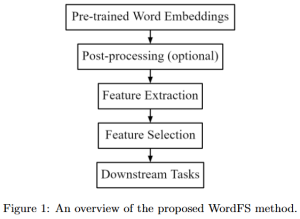

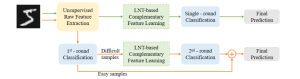

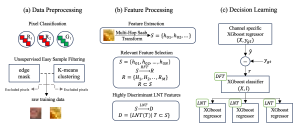

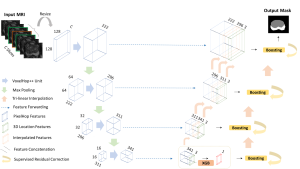

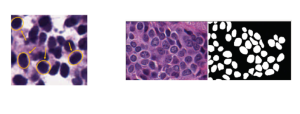

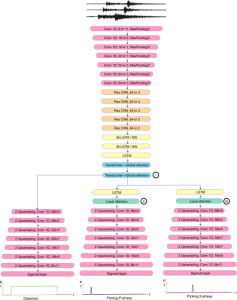

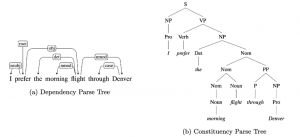

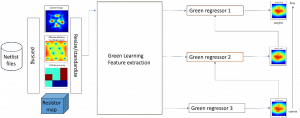

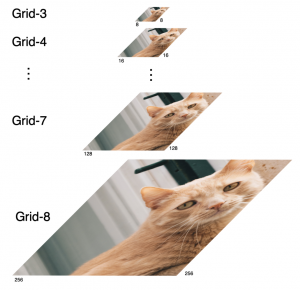

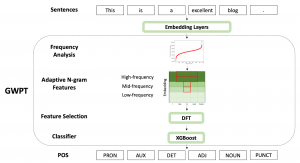

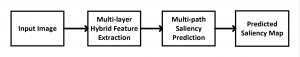

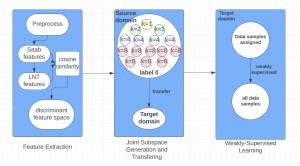

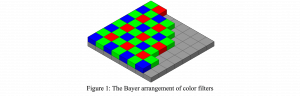

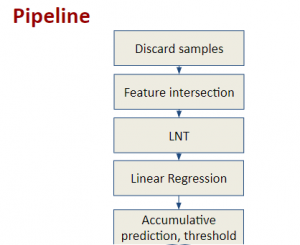

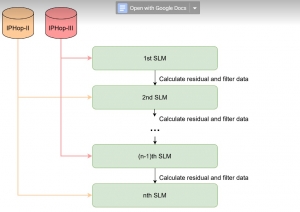

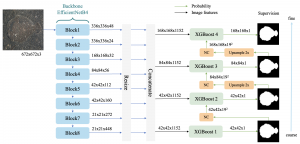

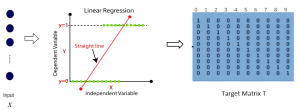

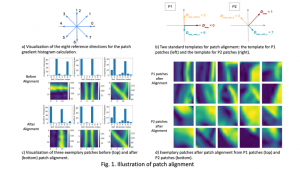

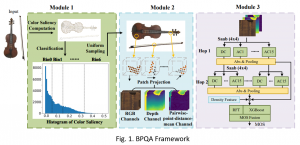

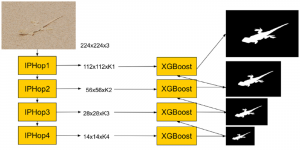

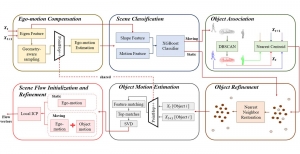

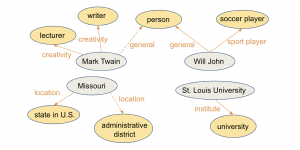

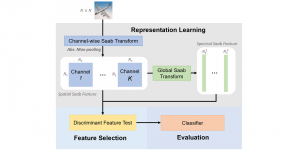

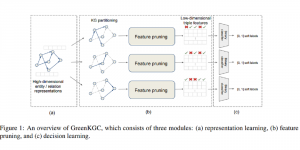

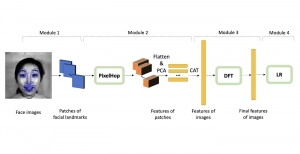

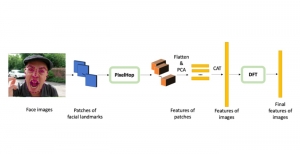

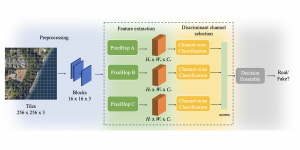

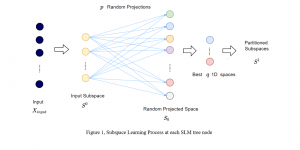

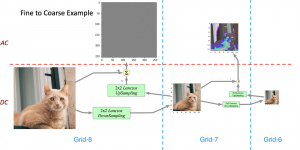

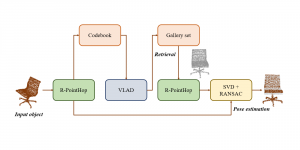

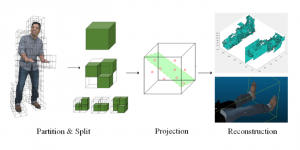

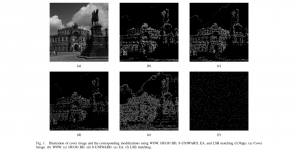

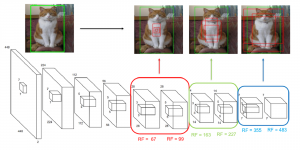

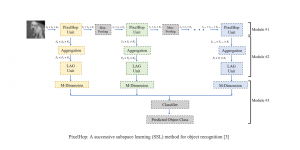

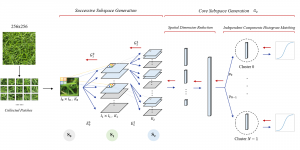

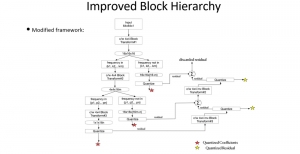

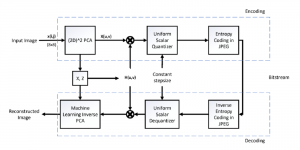

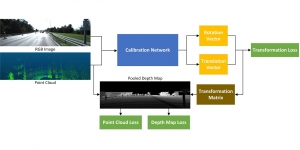

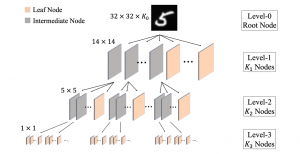

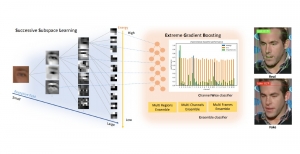

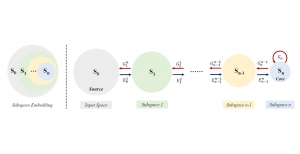

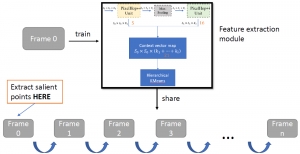

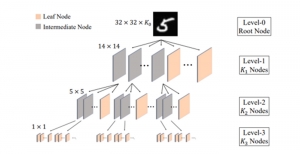

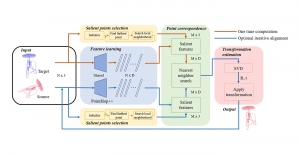

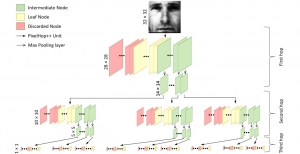

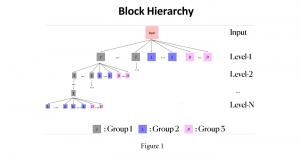

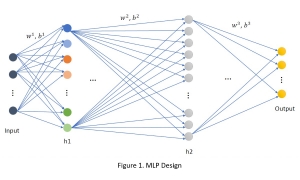

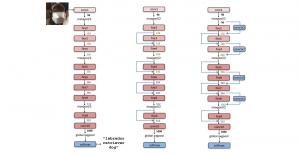

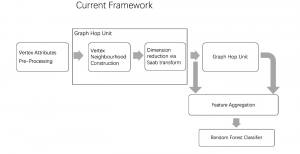

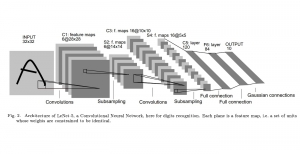

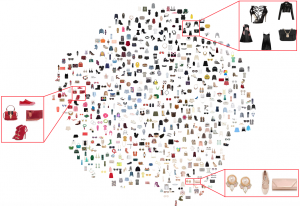

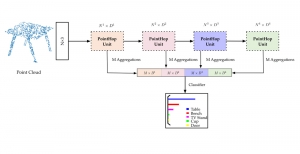

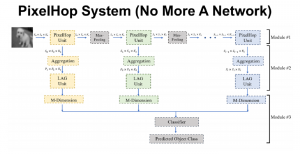

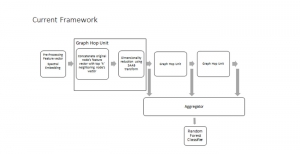

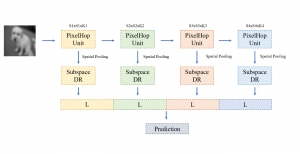

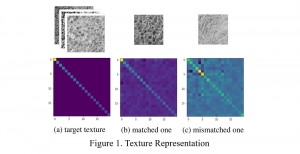

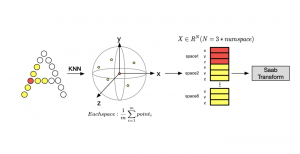

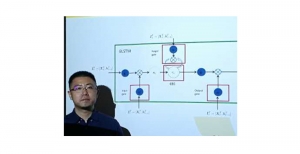

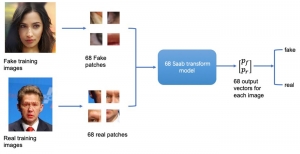

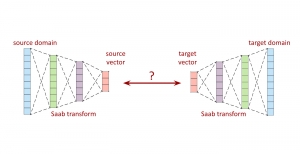

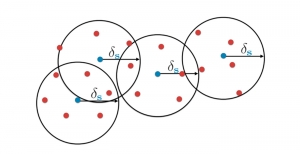

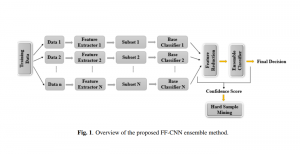

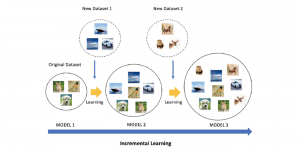

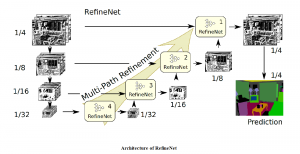

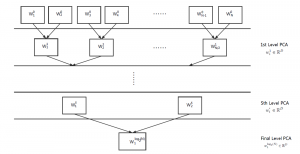

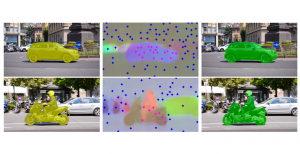

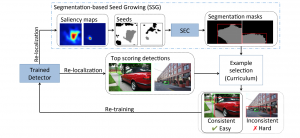

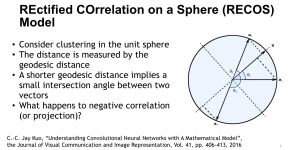

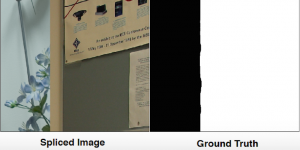

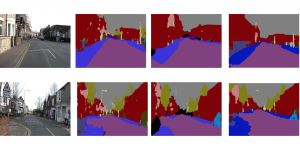

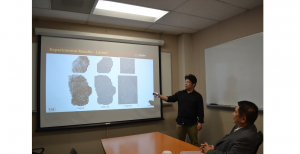

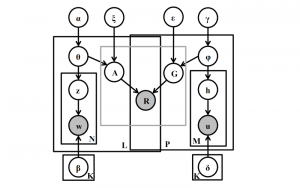

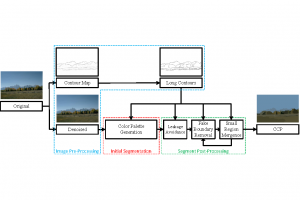

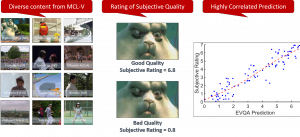

At MCL, we pursue a new line of research on AI for medical image analysis, adopting the Green Learning (GL) approach to address the issues mentioned above. GL builds on successive subspace learning (SSL), a feature extraction method for image data that yields spatial-spectral representations of the input image from finer to coarser scales in a feed-forward manner. As a result, the features are explainable, and one can more easily understand the decisions made by the model. GL also needs fewer samples to achieve stable training, which mitigates the scarcity of medical data. The interpretable decisions of the GL approach make it a particularly good fit for healthcare applications: physicians can understand why the model classified a tumor, for example, at a certain level of aggressiveness.
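To make the "finer to coarser, feed-forward" description more concrete, here is a minimal NumPy sketch of a simplified, PixelHop-style SSL pipeline. It assumes that each hop applies a Saab transform (a constant DC kernel plus AC kernels obtained by PCA on DC-removed patches) followed by 2x2 max-pooling. The function names, kernel size, number of hops and number of AC kernels are illustrative choices, not MCL's implementation, and the bias term of the full Saab transform is omitted. Each kernel is a principal direction of local patch statistics, learned in one pass without labels or backpropagation, which is what makes the resulting features inspectable.

```python
import numpy as np

# Simplified, hypothetical sketch: PixelHop-style Saab transform + max-pooling.
# Not MCL's code; kernel size, hop count and n_ac are illustrative assumptions.

def extract_patches(img, k=3):
    """Collect every k x k neighbourhood (stride 1, no padding) of an (H, W, C) array."""
    H, W, C = img.shape
    out = np.empty((H - k + 1, W - k + 1, k * k * C))
    for i in range(H - k + 1):
        for j in range(W - k + 1):
            out[i, j] = img[i:i + k, j:j + k, :].ravel()
    return out

def fit_saab(X, n_ac):
    """Learn one DC kernel and n_ac AC kernels (via PCA) from the rows of X; no labels used."""
    d = X.shape[1]
    dc_kernel = np.ones(d) / np.sqrt(d)             # unit-norm averaging filter
    X_ac = X - np.outer(X @ dc_kernel, dc_kernel)   # remove the DC (local mean) component
    mean = X_ac.mean(axis=0)
    _, _, Vt = np.linalg.svd(X_ac - mean, full_matrices=False)
    return dc_kernel, Vt[:n_ac], mean               # leading principal directions = AC kernels

def apply_saab(X, dc_kernel, ac_kernels, mean):
    """Project patches onto the DC kernel and the learned AC kernels (bias term omitted)."""
    dc = (X @ dc_kernel)[:, None]
    X_ac = X - dc * dc_kernel
    return np.hstack([dc, (X_ac - mean) @ ac_kernels.T])

def max_pool(x, s=2):
    """Non-overlapping s x s max-pooling over an (H, W, C) array."""
    H, W, C = x.shape
    H2, W2 = H // s, W // s
    x = x[:H2 * s, :W2 * s]
    return x.reshape(H2, s, W2, s, C).max(axis=(1, 3))

def ssl_features(images, n_hops=2, n_ac=4, k=3):
    """Feed-forward multi-hop pipeline: each hop sees a larger neighbourhood (finer -> coarser)."""
    feats, per_hop = images, []
    for _ in range(n_hops):
        patches = np.stack([extract_patches(im, k) for im in feats])  # (N, h, w, k*k*C)
        N, h, w, d = patches.shape
        X = patches.reshape(-1, d)
        kernels = fit_saab(X, n_ac)
        responses = apply_saab(X, *kernels).reshape(N, h, w, -1)
        feats = np.stack([max_pool(r) for r in responses])
        per_hop.append(feats)
    return per_hop

# Usage on synthetic stand-ins for single-channel MRI slices.
rng = np.random.default_rng(0)
imgs = rng.random((4, 16, 16, 1))
hops = ssl_features(imgs)
print([f.shape for f in hops])  # [(4, 7, 7, 5), (4, 2, 2, 5)]
```

The per-hop outputs form the multi-scale representation: early hops describe small neighbourhoods, later hops summarize larger regions. In a complete GL system these features would then be selected and passed to a lightweight classifier, which this sketch does not cover.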

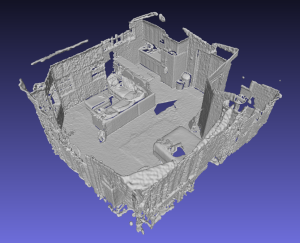

We envision that, thanks to its low computational complexity and memory requirements, the GL solution will be deployed as add-on software on MRI machines or as client software installed on radiologists' PCs. The AI tool will encircle any suspicious region and annotate it with quantitative scores, such as likelihood and aggressiveness, available immediately after imaging. A radiologist can then make the final decision assisted by the AI prediction. Such a decentralized AI solution protects patients' privacy, since the images are analyzed on site. At the same time, new AI algorithms can be delivered as updates through a secure Internet connection, as part of an ongoing health care service.

Finally, given the expected growth of automated medical image analysis, GL aims to pave the way for medical imaging systems that consume less energy and are more transparent to physicians, without compromising accuracy.