MCL Research on Semantic Scene Segmentation Based on Multiple Sensor Inputs

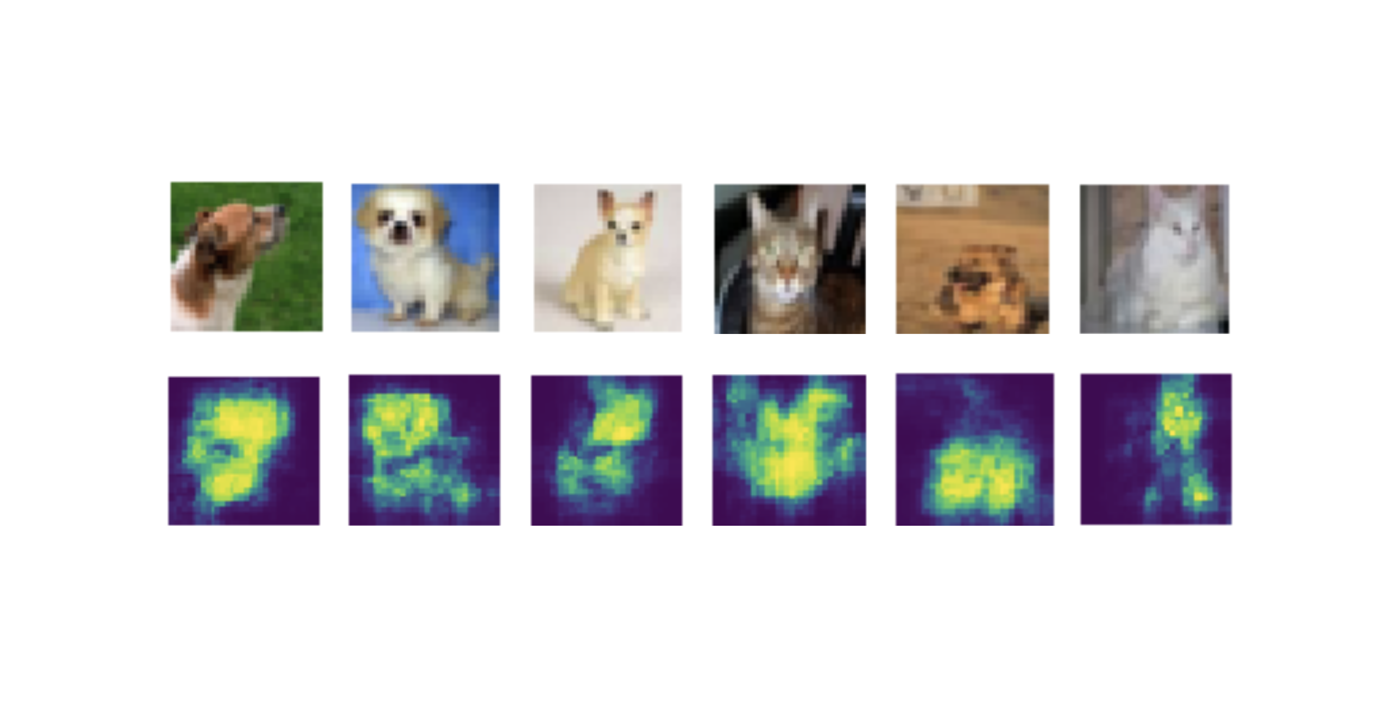

Semantic segmentation helps identify and locate objects, providing important road information for higher-level navigation tasks. Owing to the rapid development of deep Convolutional Neural Networks (CNNs)[1], the performance of image segmentation models has improved greatly, and CNNs are now widely used for this task. However, maintaining that performance under different conditions is non-trivial. In darkness, rain, or fog, the quality of RGB images degrades sharply, while other sensors may still produce usable results. Our model therefore combines information from the RGB image and the depth map. In addition, vehicles often encounter obstacles on the road, such as trash cans, barriers, rubble, stones, and cargo, and recognizing and avoiding them is crucial for safety. To address this, we apply multi-dataset learning, which lets the model learn additional classes, including obstacles, from other datasets.

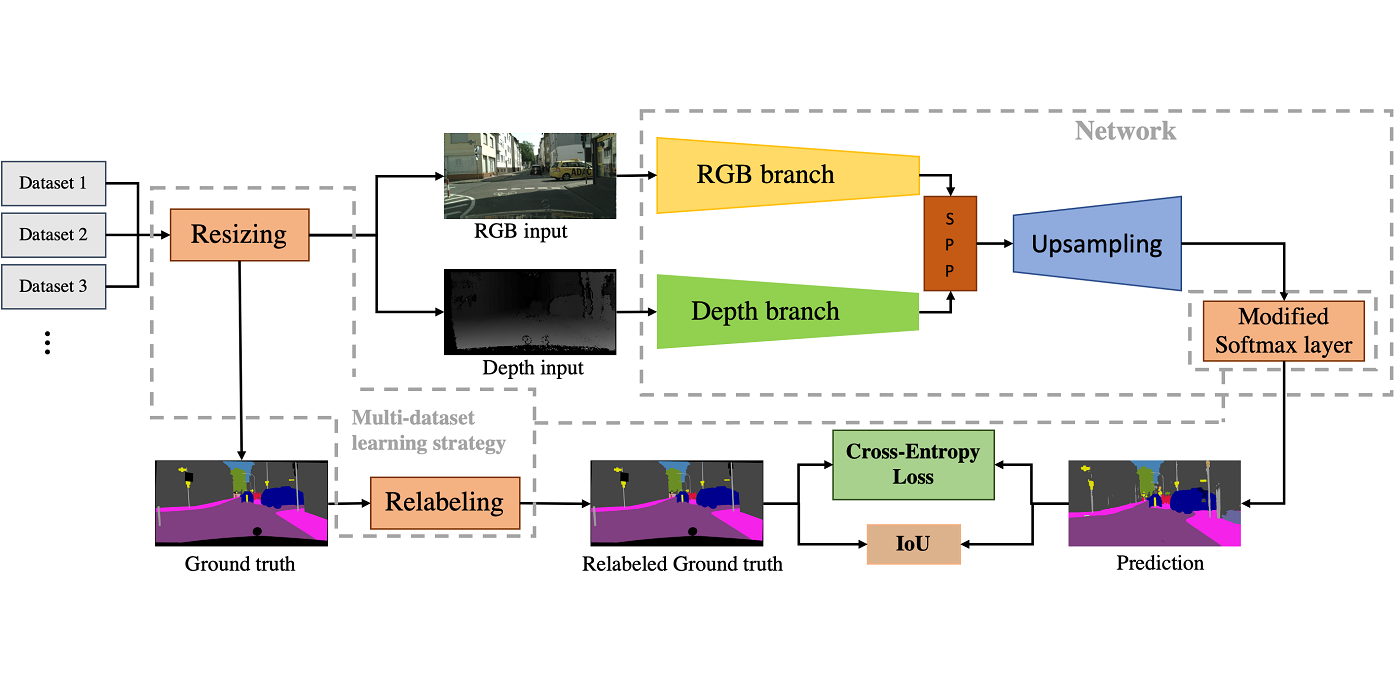

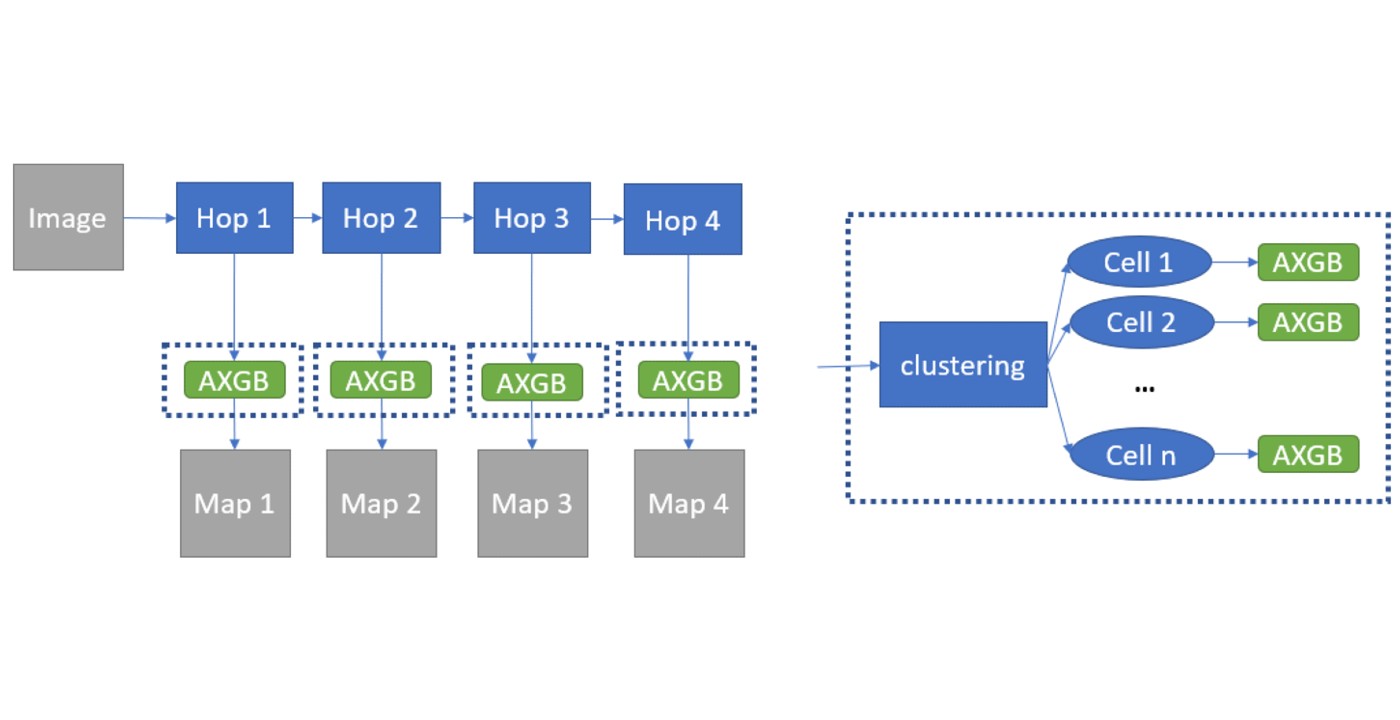

In our experiments, we thoroughly evaluate RFNet[2] with different datasets and different methods of combining them. In our framework, the inputs from the different datasets first pass through the Resizing module. The depth map and RGB image are then sent to the network, while the ground truth goes to the Relabeling module. The multi-dataset learning strategy is applied in the Resizing module, the Relabeling module, and the Modified Softmax layer. Finally, by comparing the relabeled ground truth with the prediction, we obtain the intersection over union (IoU) and the cross-entropy loss.
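The relabeling and evaluation steps can be illustrated with a minimal Python sketch. It is not the RFNet code or our actual modules: the `mapping` table, the ignore index `255`, and the three unified classes (road, car, obstacle) are hypothetical values chosen for illustration, and the Modified Softmax layer is not shown. The sketch maps dataset-specific class ids to a shared label space, then computes per-class IoU and the mean cross-entropy over the pixels that have a valid label.

```python
import math

IGNORE = 255  # assumed "ignore" index for classes with no unified counterpart


def relabel(labels, mapping, ignore=IGNORE):
    """Map dataset-specific class ids to unified class ids."""
    return [mapping.get(c, ignore) for c in labels]


def per_class_iou(pred, truth, num_classes, ignore=IGNORE):
    """IoU per class: intersection / union of predicted and true pixels."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, truth):
        if t == ignore:
            continue  # pixels without a valid label do not count
        if p == t:
            inter[p] += 1
            union[p] += 1
        else:
            union[p] += 1
            union[t] += 1
    # None marks a class that appears in neither prediction nor ground truth
    return [i / u if u else None for i, u in zip(inter, union)]


def cross_entropy(probs, truth, ignore=IGNORE):
    """Mean negative log-probability of the true class over valid pixels."""
    losses = [-math.log(p[t]) for p, t in zip(probs, truth) if t != ignore]
    return sum(losses) / len(losses) if losses else 0.0


# Hypothetical dataset ids -> unified ids (0: road, 1: car, 2: obstacle)
mapping = {0: 0, 1: 1, 7: 2}
raw_truth = [0, 0, 1, 7, 9, 1]      # flattened ground-truth pixels; id 9 is unmapped
truth = relabel(raw_truth, mapping)
pred = [0, 1, 1, 2, 0, 1]           # flattened predicted unified ids
ious = per_class_iou(pred, truth, num_classes=3)
```

Here images are flattened to lists of per-pixel class ids to keep the example dependency-free; a real pipeline would apply the same logic to label arrays.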

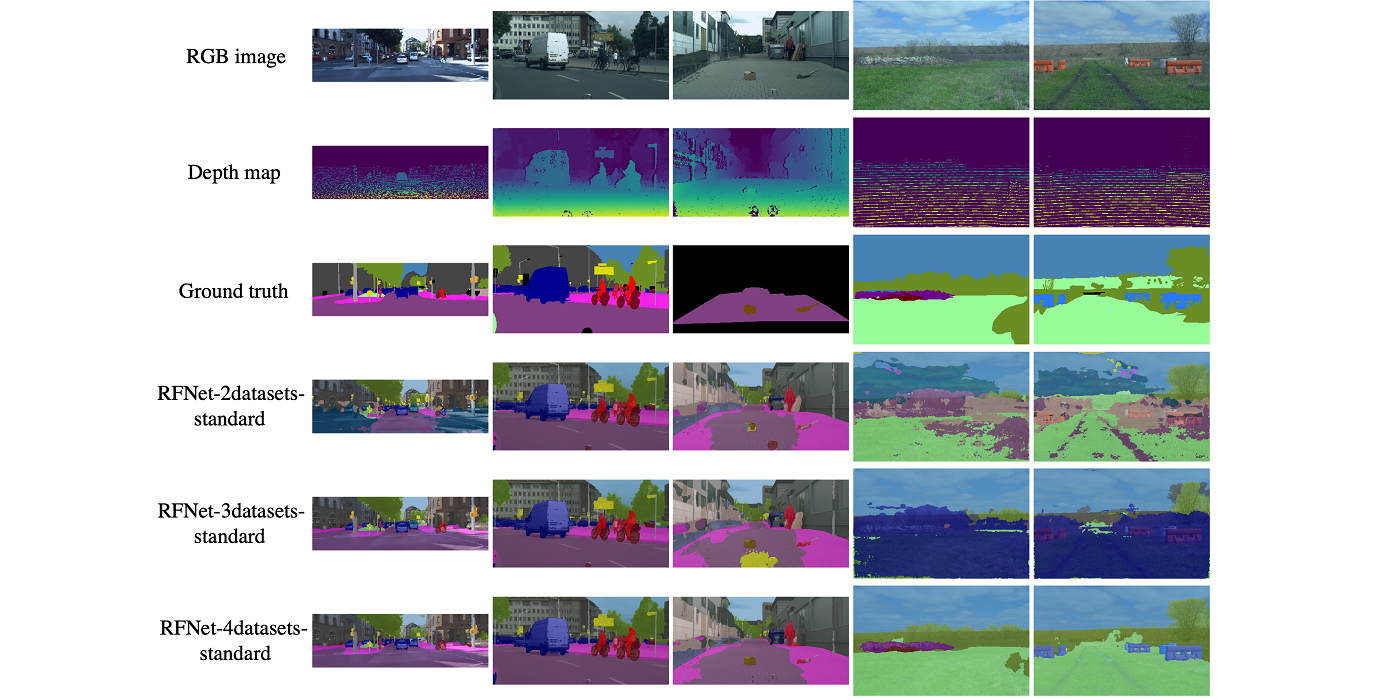

Our results show that our models perform very well in urban and blended environments. In the field environment, however, the depth map improves the model only slightly. We also propose a new thrifty relabeling method, which improves the model's performance without increasing the complexity of the network. Moreover, more datasets can help the model [...]