MCL Research on CNN Ensembles

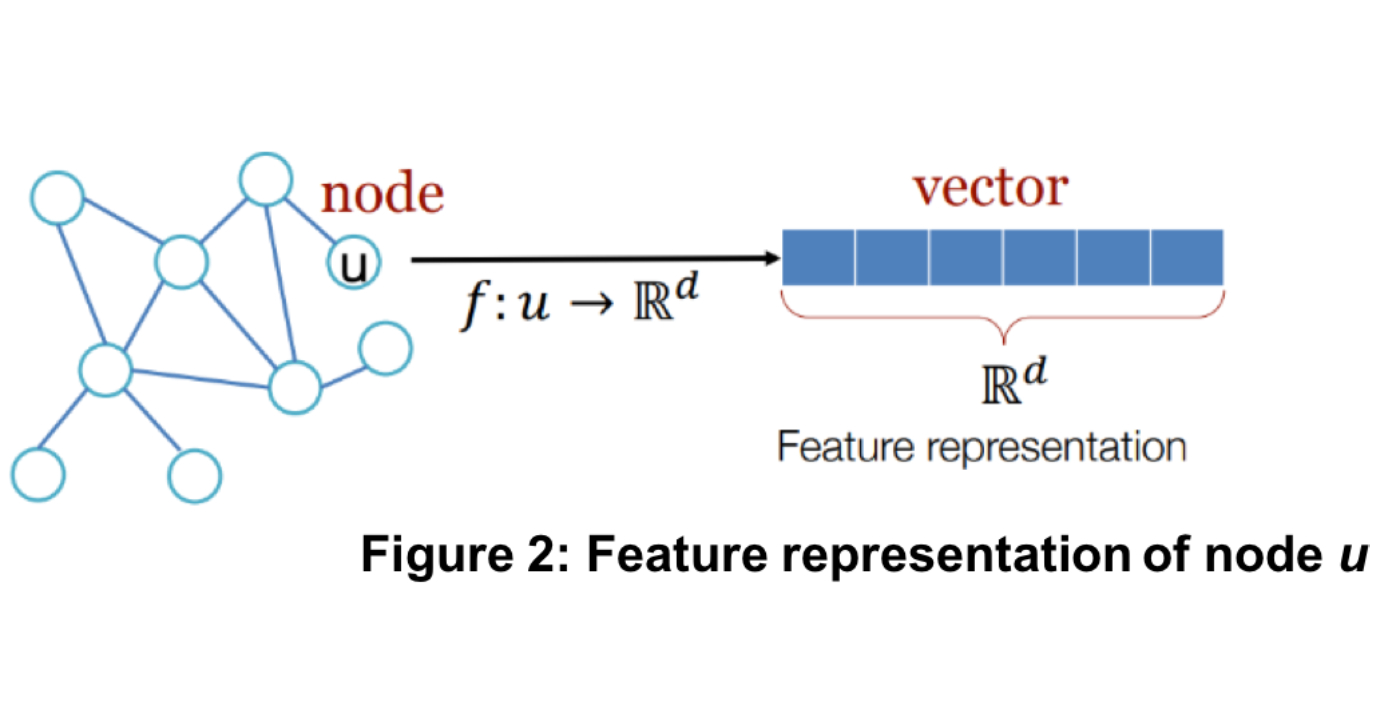

CNN technology provides state-of-the-art solutions to many image processing and computer vision problems. Given a CNN architecture, all of its parameters are determined by the stochastic gradient descent (SGD) algorithm through backpropagation (BP). BP training is computationally expensive. Furthermore, most CNN publications are application-oriented, and progress beyond the classical result in [1] has been limited. Examples of such progress include explainable CNNs [2,3,4] and feedforward designs without backpropagation [5,6].

Kuo et al. recently proposed in [6] to determine CNN model parameters in a one-pass feedforward (FF) manner. The method derives the network parameters of a target layer from statistics of the output data of its previous layer, and no BP is used at all. This feedforward design provides valuable insights into the CNN operational mechanism. Moreover, under the same network architecture, its training complexity is significantly lower than that of a BP-designed CNN. FF-designed and BP-designed CNNs are denoted by FF-CNNs and BP-CNNs, respectively.
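To make the idea of deriving layer parameters from statistics concrete, the following is a minimal sketch, not the design in [6]. It assumes that the statistic is a principal component analysis of local patches taken from the previous layer's output; the text above does not specify which statistics are used. The function names `extract_patches` and `derive_filters`, the patch size, and the random input are all hypothetical.

```python
import numpy as np


def extract_patches(feature_maps, k):
    """Collect all k x k patches from an array of shape (N, H, W, C)."""
    n, h, w, c = feature_maps.shape
    patches = [
        feature_maps[:, i:i + k, j:j + k, :].reshape(n, -1)
        for i in range(h - k + 1)
        for j in range(w - k + 1)
    ]
    return np.concatenate(patches, axis=0)  # (num_patches, k * k * C)


def derive_filters(prev_output, k=3, num_filters=4):
    """Derive convolutional filters from patch statistics in one pass.

    Assumption (not from the source): the filters are the leading
    principal components of the patch covariance. No gradients are used.
    """
    patches = extract_patches(prev_output, k)
    mean = patches.mean(axis=0)
    centered = patches - mean
    cov = centered.T @ centered / (len(centered) - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:num_filters]
    filters = eigvecs[:, order].T  # (num_filters, k * k * C)
    return filters, mean


# Hypothetical previous-layer output: 8 samples, 6 x 6 spatial size, 2 channels.
rng = np.random.default_rng(0)
prev_output = rng.normal(size=(8, 6, 6, 2))
filters, mean = derive_filters(prev_output, k=3, num_filters=4)
```

Each row of `filters` can be reshaped to `(k, k, C)` and used as a convolution kernel for the target layer. The whole derivation is a single pass over the data, which is the property the paragraph above contrasts with BP training.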

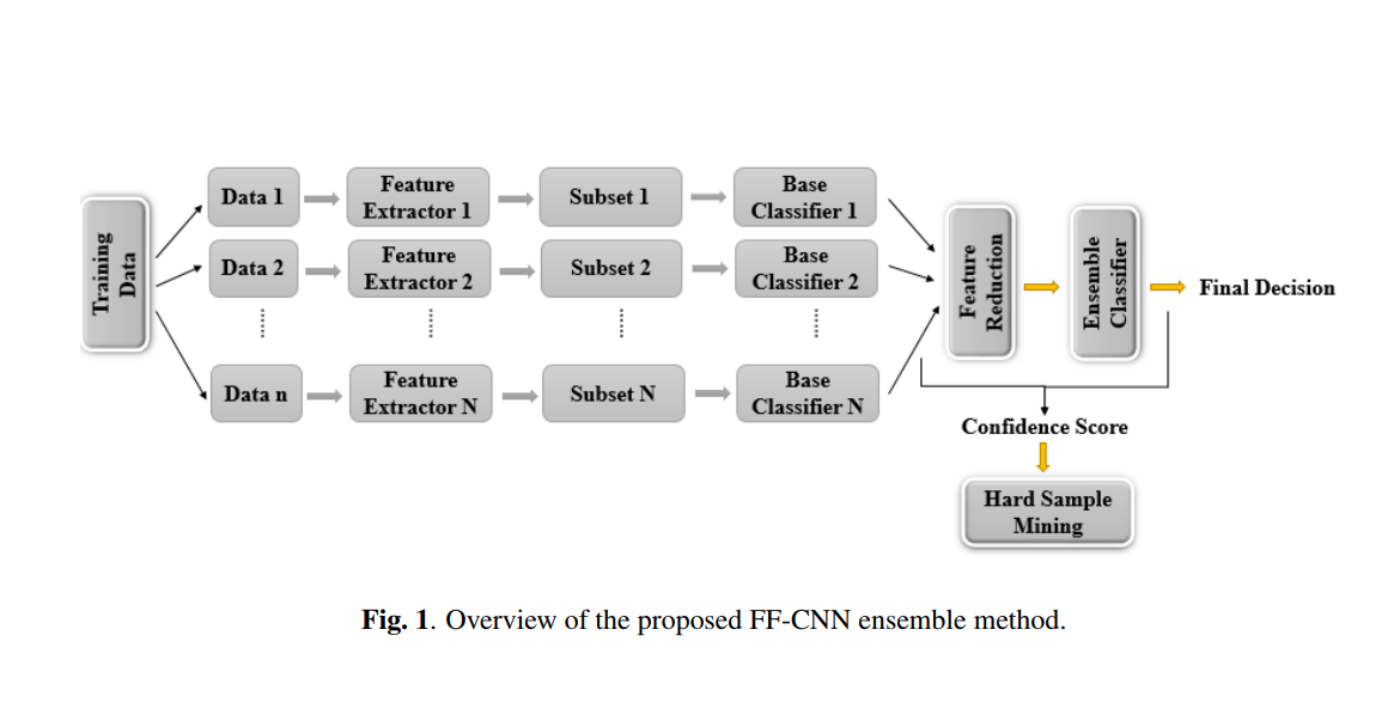

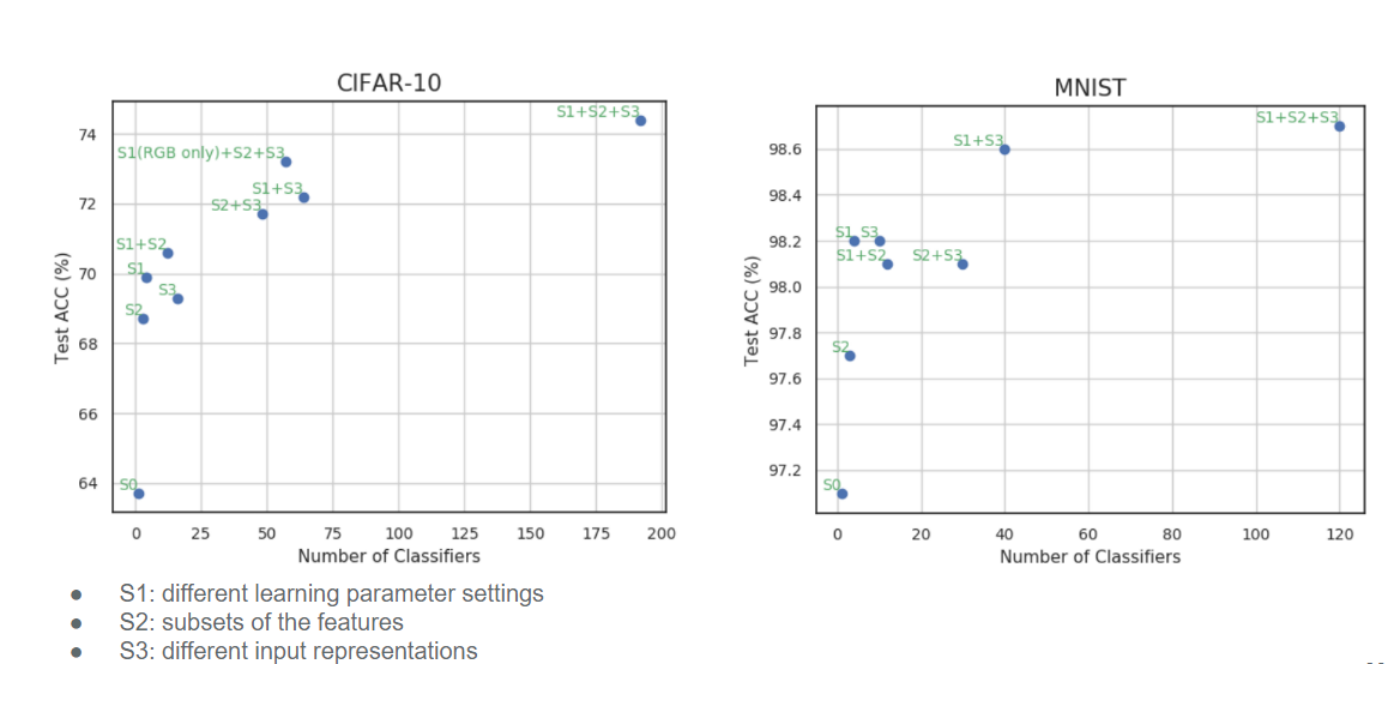

We focus on solving the image classification problem with feedforward-designed convolutional neural networks (FF-CNNs) [6]. We propose an ensemble method that fuses the output decision vectors of multiple FF-CNNs. To enhance the performance of the ensemble system, it is critical to increase the diversity of the FF-CNN models. To achieve this objective, we introduce diversity through three strategies: 1) different parameter settings in the convolutional layers, 2) flexible feature subsets fed into the fully-connected (FC) layers, and 3) multiple image embeddings of the same input source. Furthermore, we partition input samples into easy and hard ones based on their decision confidence scores. This partition lets us develop a new ensemble system tailored to hard samples to further boost classification accuracy. Although [...]
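The fusion and partitioning steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the proposed system: it assumes simple averaging as the fusion rule and the largest fused probability as the confidence score, neither of which is specified in the text above. The function names, the threshold of 0.7, and the sample values are hypothetical.

```python
def fuse_decisions(decision_vectors):
    """Average the class-probability vectors produced by several models.

    Assumption: plain averaging stands in for the actual fusion rule.
    """
    num_models = len(decision_vectors)
    num_classes = len(decision_vectors[0])
    return [
        sum(vec[c] for vec in decision_vectors) / num_models
        for c in range(num_classes)
    ]


def split_easy_hard(fused_vectors, threshold=0.7):
    """Partition sample indices by confidence (largest fused probability)."""
    easy, hard = [], []
    for idx, vec in enumerate(fused_vectors):
        (easy if max(vec) >= threshold else hard).append(idx)
    return easy, hard


# Hypothetical outputs of three models on two samples, three classes each.
sample_outputs = [
    [[0.8, 0.1, 0.1], [0.9, 0.05, 0.05], [0.7, 0.2, 0.1]],
    [[0.4, 0.5, 0.1], [0.3, 0.5, 0.2], [0.4, 0.3, 0.3]],
]
fused = [fuse_decisions(outputs) for outputs in sample_outputs]
predictions = [vec.index(max(vec)) for vec in fused]
easy, hard = split_easy_hard(fused)
```

In this toy input, the three models agree strongly on the first sample, so it lands in the easy set, while the second sample falls below the threshold and would be routed to an ensemble specialized for hard samples.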