We are so happy to welcome a new MCL member, Cynthia Huang, who is joining MCL this semester. Here is a quick interview with Cynthia:

1. Could you briefly introduce yourself and your research interests?

I am Cynthia Huang, a junior undergraduate student majoring in Computer Engineering and Computer Science at USC. My research interests include machine learning, AutoML, and computer vision. Previously, I worked on AutoML, specifically on the simultaneous optimization of neural network architectures and weights. I hope to continue deepening my exploration of these fields in the future.

2. What is your impression of MCL and USC?

My impression of MCL is that it is a collaborative and inspiring group, where many talented individuals come together with exciting ideas. I truly appreciate the team spirit and supportive environment, where everyone is open to sharing insights and learning from one another. I find great joy in engaging in research discussions, exchanging perspectives, and brainstorming solutions to challenging problems in MCL. I am very excited about the opportunity to collaborate with everyone and look forward to contributing to meaningful research in the future!

3. What are your future expectations and plans in MCL?

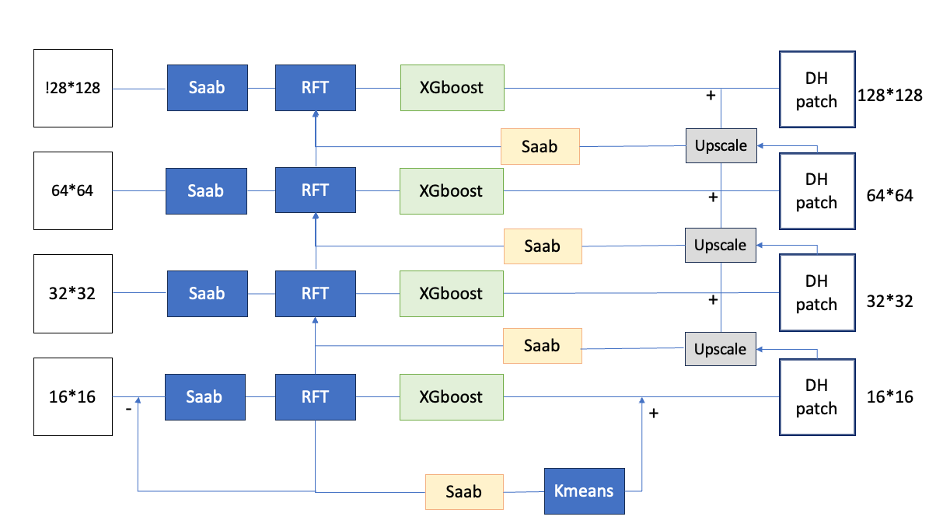

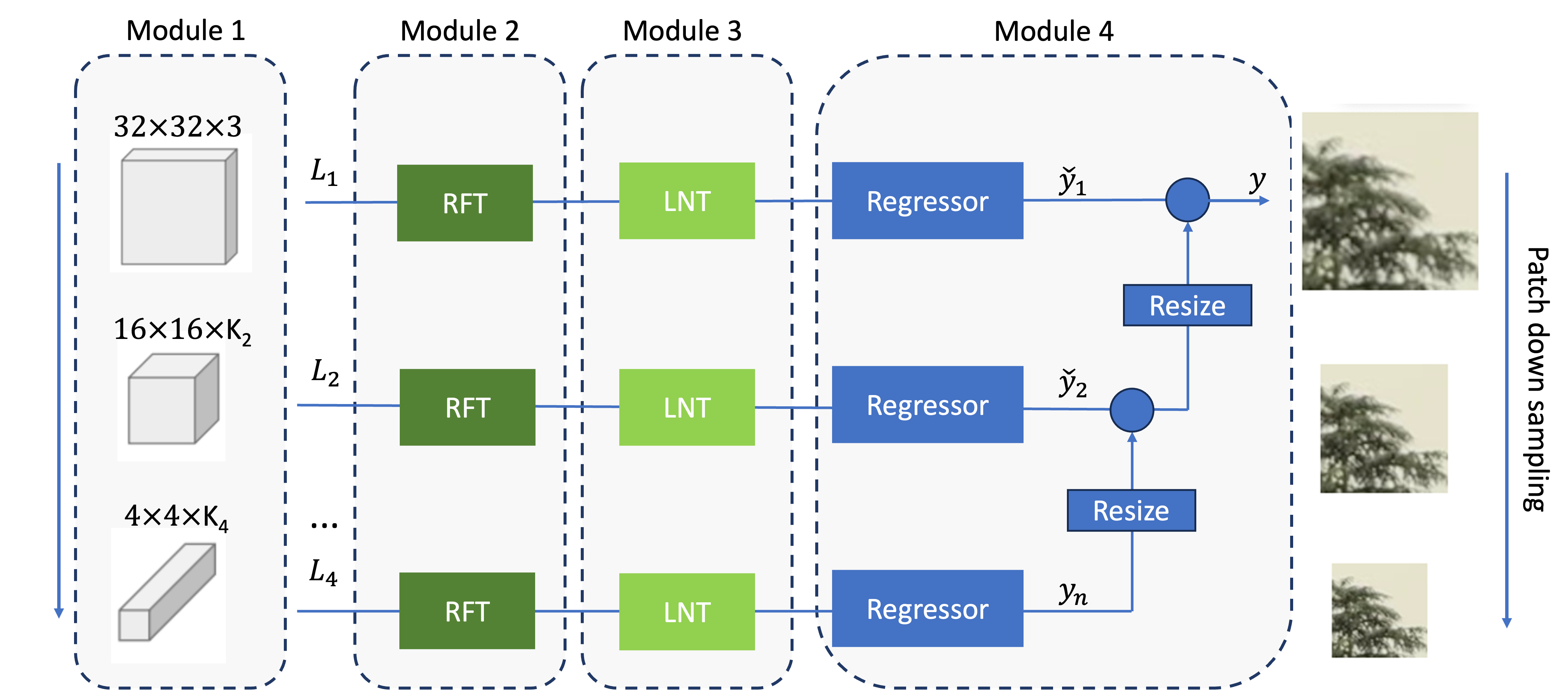

Currently, I am working on semantic segmentation using green learning. In the future, I look forward to continuing research discussions and collaborations with everyone at MCL. I hope to further explore new ideas, exchange knowledge, and contribute to exciting research. Additionally, I am excited to connect with more members of the MCL community.