MCL Research on Unsupervised Video Segmentation

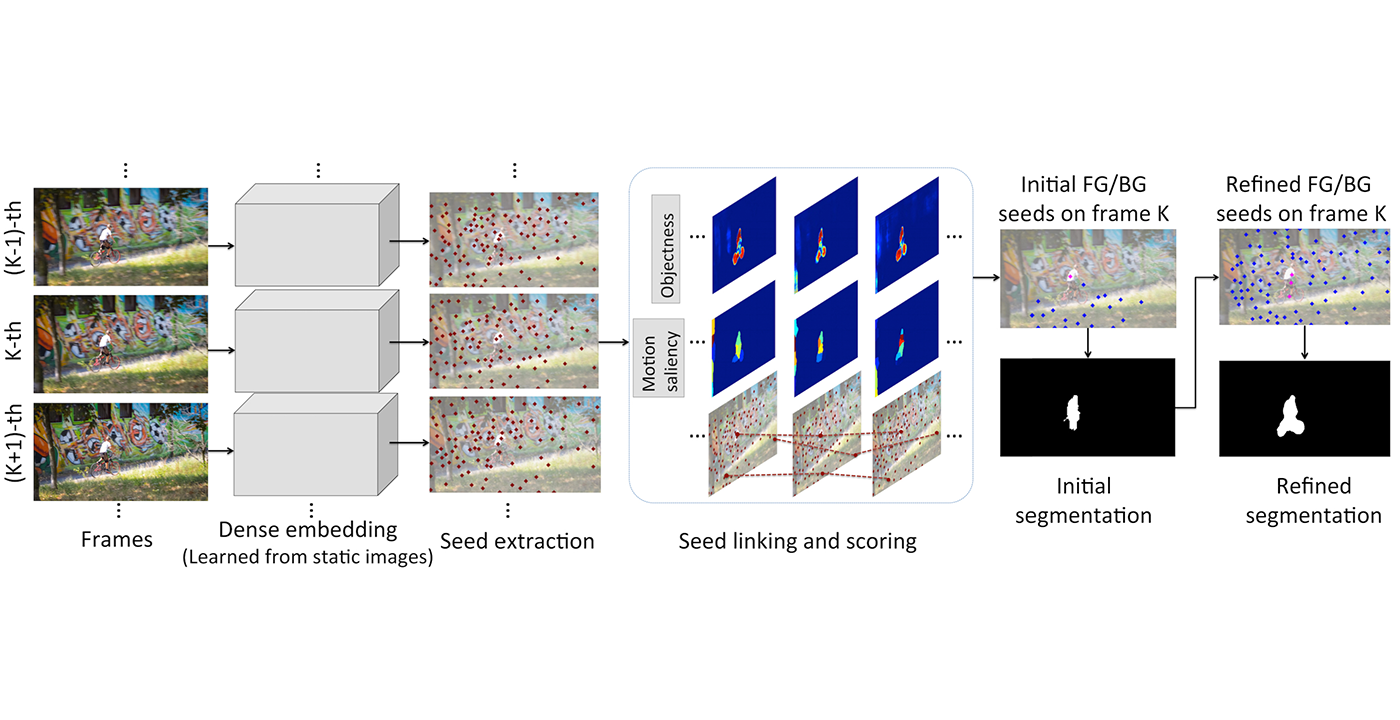

We propose a method for unsupervised video object segmentation by transferring the knowledge encapsulated in image-based instance embedding networks. The instance embedding network produces an embedding vector for each pixel, which makes it possible to identify all pixels belonging to the same object. Though trained on static images, the instance embeddings are stable over consecutive video frames, which allows us to link objects together over time. Thus, we adapt instance networks trained on static images to video object segmentation and combine the embeddings with objectness and optical flow features, without model retraining or online fine-tuning. The proposed method outperforms state-of-the-art unsupervised segmentation methods on the DAVIS and FBMS datasets.
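To make the linking idea concrete, here is a minimal sketch of how stable per-pixel embeddings could be used to carry object labels from one frame to the next. It is an illustration under stated assumptions, not the method's actual implementation: the function names, the Euclidean distance, and the `max_distance` threshold are all hypothetical choices made for this example.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def link_pixels(prev_object_embeddings, next_frame_embeddings, max_distance=0.5):
    """Assign each pixel embedding in the next frame to the closest known object.

    prev_object_embeddings: dict mapping an object label to one representative
        embedding taken from the previous frame (hypothetical representation).
    next_frame_embeddings: list of per-pixel embedding vectors in the next frame.
    max_distance: assumed threshold; pixels farther than this from every
        object stay unassigned (None).
    """
    labels = []
    for emb in next_frame_embeddings:
        best_label, best_dist = None, max_distance
        for label, ref in prev_object_embeddings.items():
            d = euclidean(emb, ref)
            if d <= best_dist:
                best_label, best_dist = label, d
        labels.append(best_label)
    return labels


# Toy 2-D embeddings: two objects in the previous frame, three pixels in the next.
previous = {"a": [0.0, 0.0], "b": [1.0, 1.0]}
current = [[0.1, 0.0], [0.9, 1.1], [5.0, 5.0]]
linked = link_pixels(previous, current)
```

In this toy case the first two pixels fall close to objects `a` and `b`, while the third is far from both and stays unassigned. The sketch only works because the embeddings are assumed to change little between consecutive frames, which is the stability property described above.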

The main contributions include:

– A new strategy for adapting instance segmentation models trained on static images to videos. Notably, this strategy performs well on video datasets without requiring any video object segmentation annotations.

– Novel criteria for selecting a foreground object without supervision, based on semantic scores and motion features over a track.

– Insights into the stability of instance segmentation embeddings over time.
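As a rough illustration of the second contribution, the sketch below ranks candidate object tracks by combining a semantic (objectness) score with a motion score, each averaged over the track. The exact criteria used by the method are not given here, so the per-frame `objectness` and `motion` fields, the linear weighting, and the `motion_weight` parameter are assumptions made purely for this example.

```python
def track_score(track, motion_weight=0.5):
    """Score one track from its per-frame objectness and motion values.

    track: non-empty list of dicts with hypothetical keys "objectness" and
        "motion", each assumed to lie in [0, 1].
    motion_weight: assumed trade-off between the motion and semantic cues.
    """
    n = len(track)
    mean_objectness = sum(frame["objectness"] for frame in track) / n
    mean_motion = sum(frame["motion"] for frame in track) / n
    return (1 - motion_weight) * mean_objectness + motion_weight * mean_motion


def select_foreground(tracks, motion_weight=0.5):
    """Return the name of the highest-scoring track; no annotation is used."""
    return max(tracks, key=lambda name: track_score(tracks[name], motion_weight))


# Toy example: a moving car versus a static tree.
tracks = {
    "car": [{"objectness": 0.9, "motion": 0.8}, {"objectness": 0.8, "motion": 0.9}],
    "tree": [{"objectness": 0.7, "motion": 0.1}, {"objectness": 0.6, "motion": 0.0}],
}
foreground = select_foreground(tracks)
```

Averaging over the whole track, rather than scoring single frames, means that one frame with a weak motion or objectness cue does not by itself change which object is selected.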

By Siyang Li