Professor Kuo Delivered an Invited Lecture at Caltech’s Keller Colloquium

The Keller Colloquium is a distinguished lecture series of the Computing and Mathematical Sciences (CMS) department at the California Institute of Technology (Caltech). The CMS department includes the Applied Math, Control, and Computer Science programs, and a committee of students and faculty chooses speakers across these areas. Professor Kuo was invited to lecture during the Fall Quarter of the 2022-23 academic year.

Professor Kuo visited Caltech on Monday, October 31, 2022. He met with Professor P. P. Vaidyanathan and Professor Thomas Yizhao Hou before his seminar at 4 pm. His lecture was titled “Green Learning: Methodology, Examples, and Outlook,” with the following abstract:

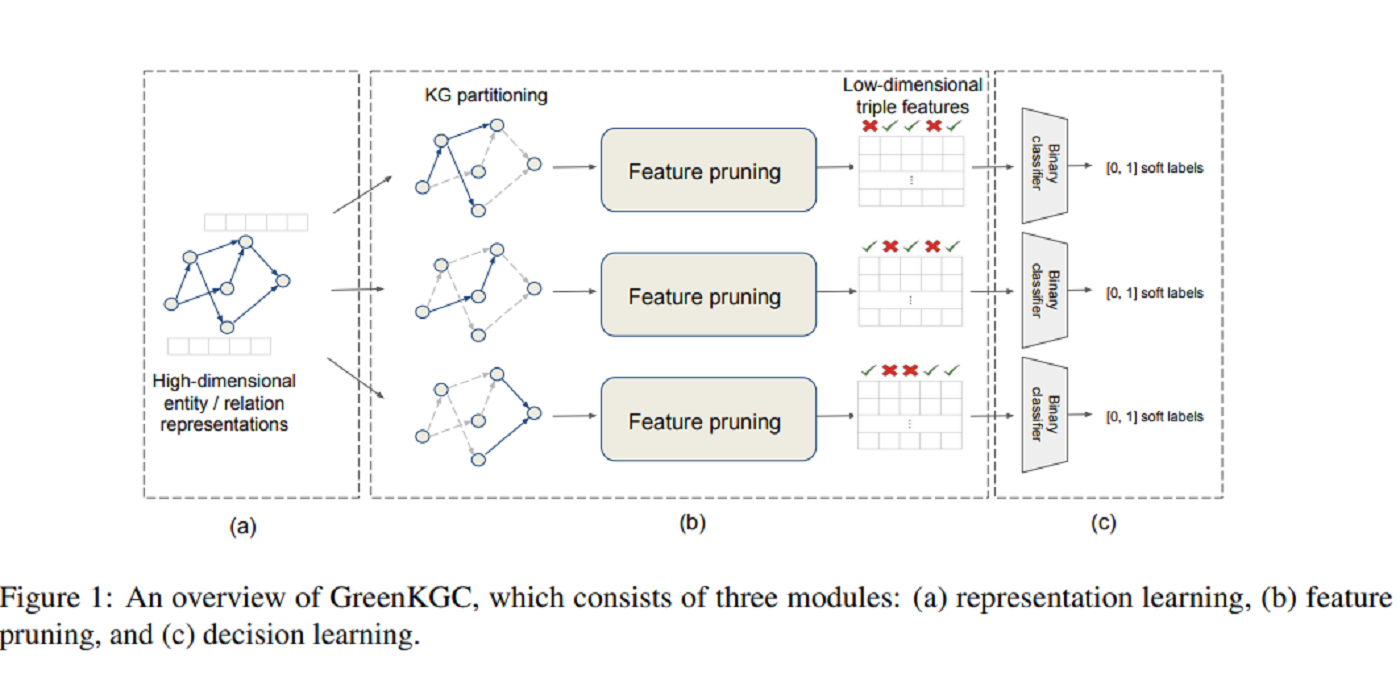

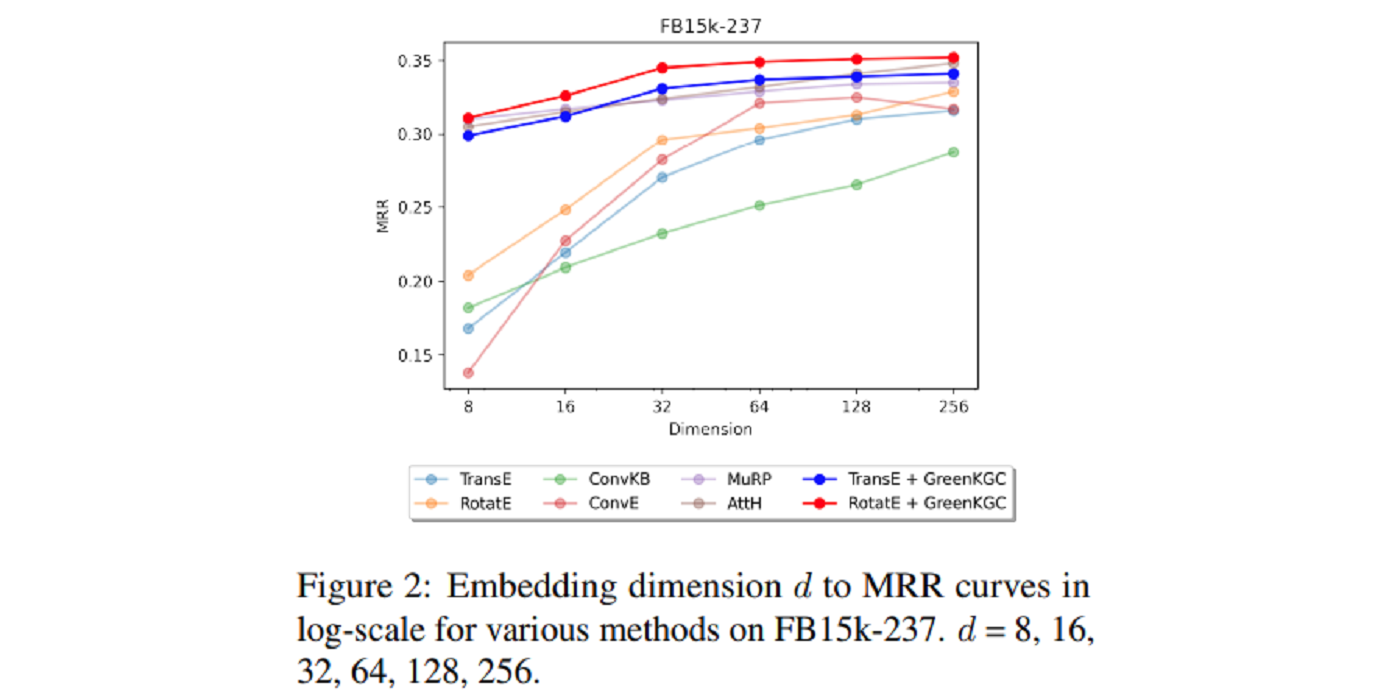

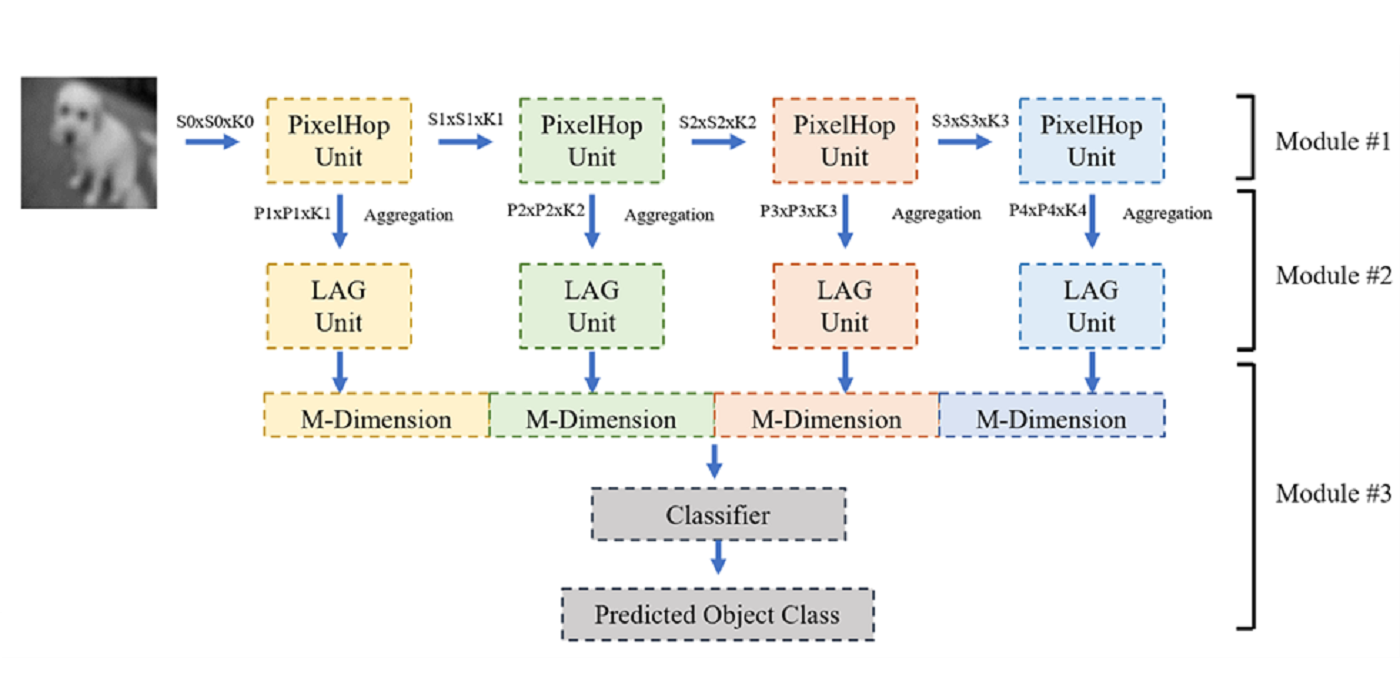

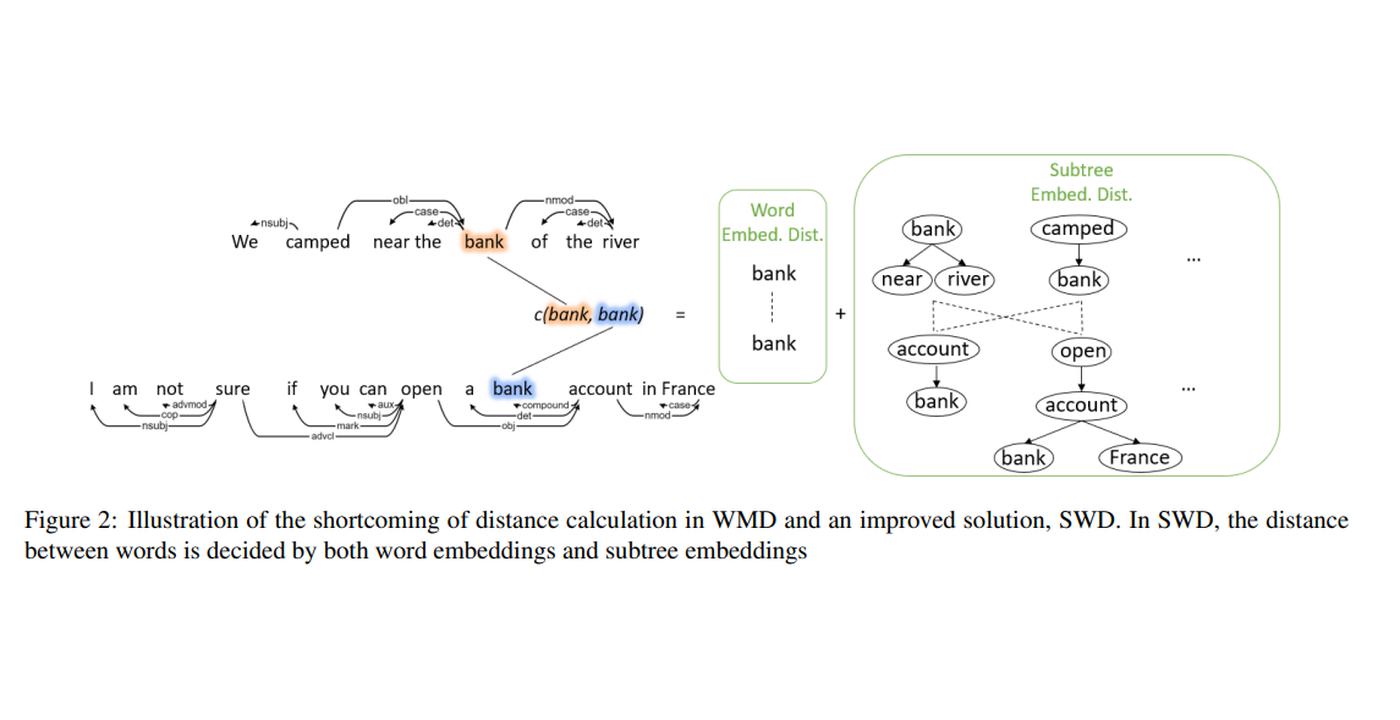

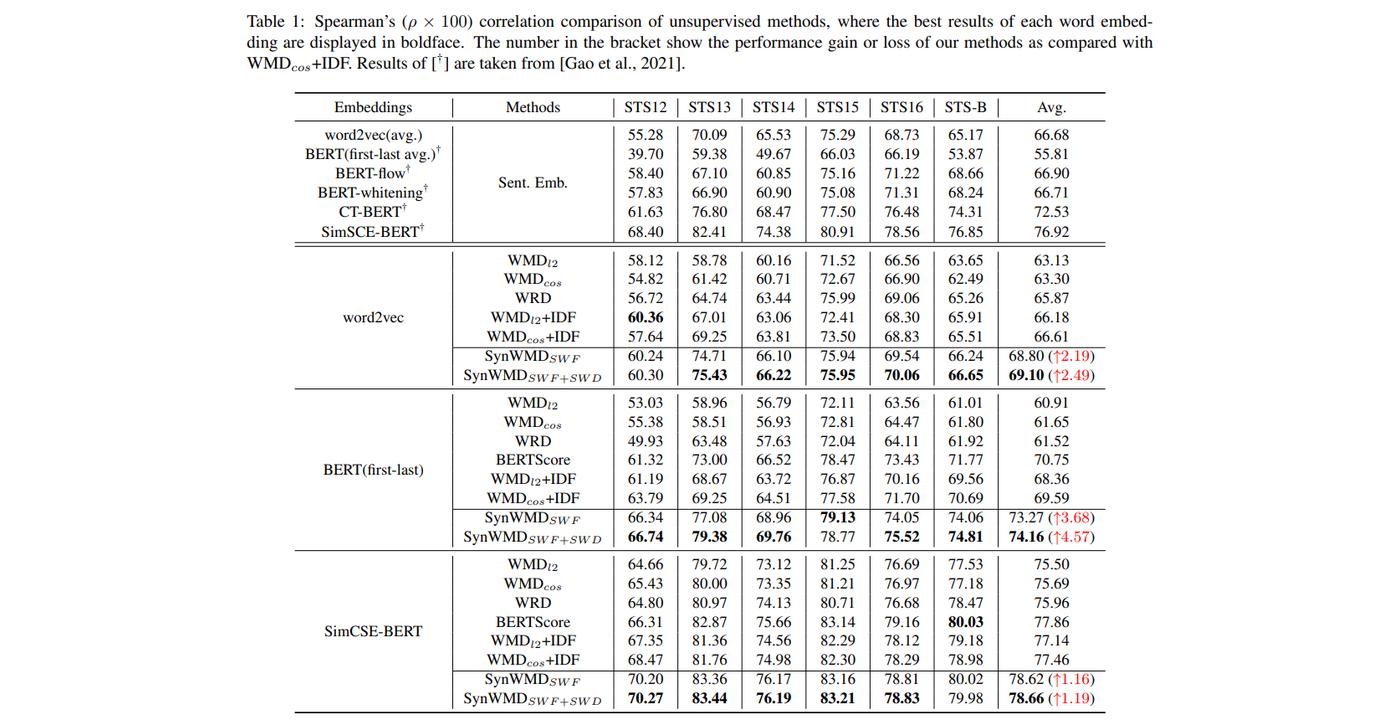

“The rapid advances in artificial intelligence in the last decade are primarily attributed to the wide applications of deep learning (DL). Yet, the high carbon footprint yielded by larger DL networks is a concern to sustainability. Green learning (GL) has been proposed as an alternative to address this concern. GL is characterized by low carbon footprints, small model sizes, low computational complexity, and mathematical transparency. It offers energy-effective solutions in cloud centers and mobile/edge devices. It has three main modules: 1) unsupervised representation learning, 2) supervised feature learning, and 3) decision learning. GL has been successfully applied to a few applications. This talk provides an overview of the GL solution, its demonstrated examples, and its technical outlook. The connection between GL and DL will also be discussed.”

The lecture was well attended, and the audience showed interest in this new machine-learning paradigm.