Image-text retrieval is a fundamental task in image understanding: given an image or a text query, the goal is to retrieve the most relevant items from the other modality. Recent approaches focus on training large neural networks to bridge the gap between the visual and textual domains. However, these models are computationally expensive, and they do not explain how data from the different modalities are aligned. End-to-end optimized models, such as large neural networks, output only the final results, making it difficult for humans to understand the reasoning behind the model’s predictions.

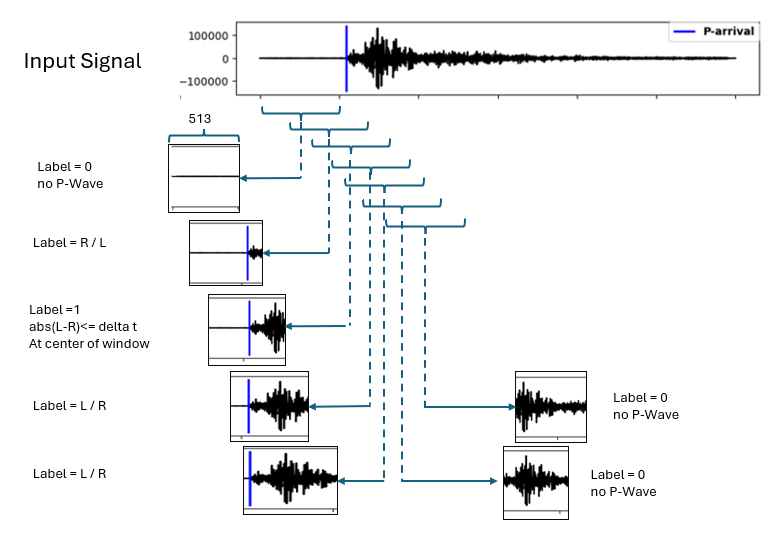

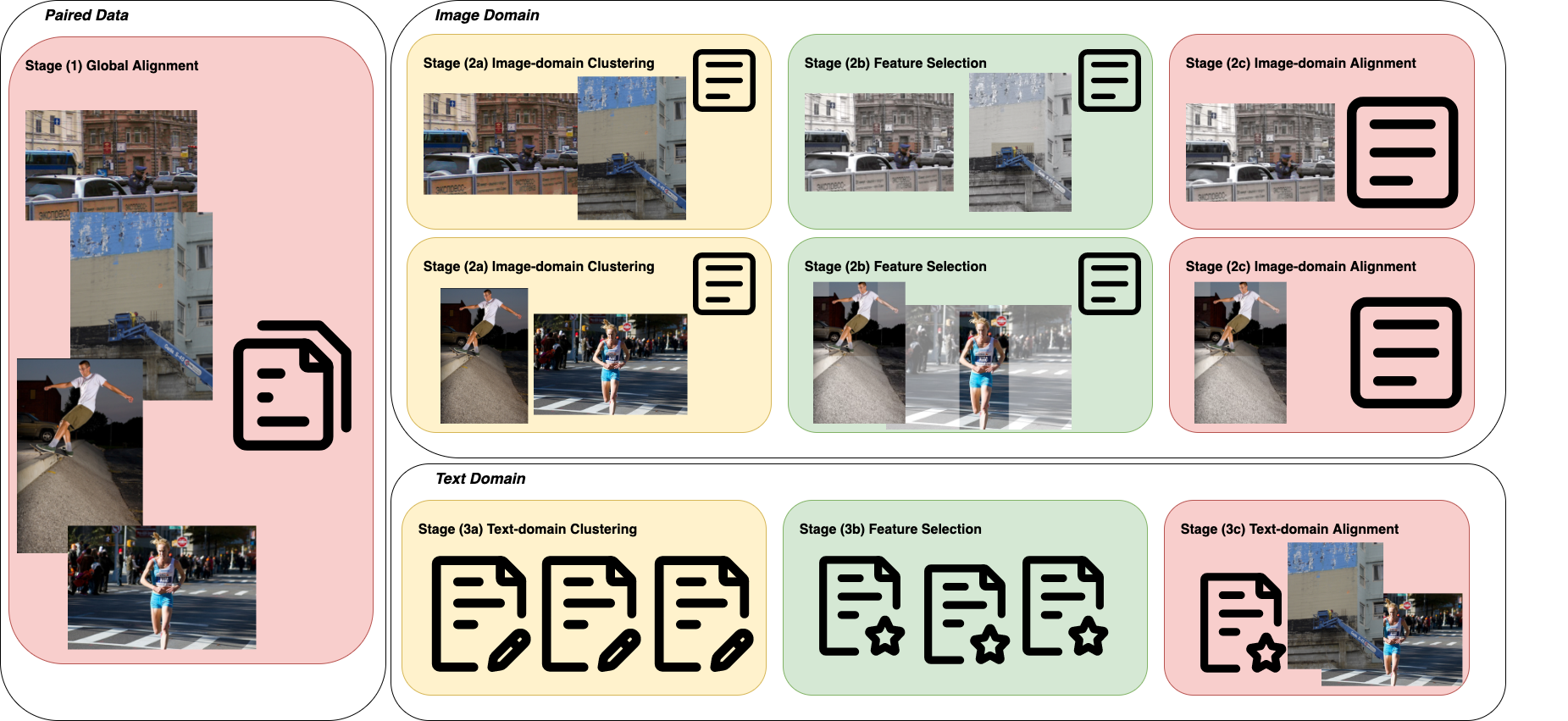

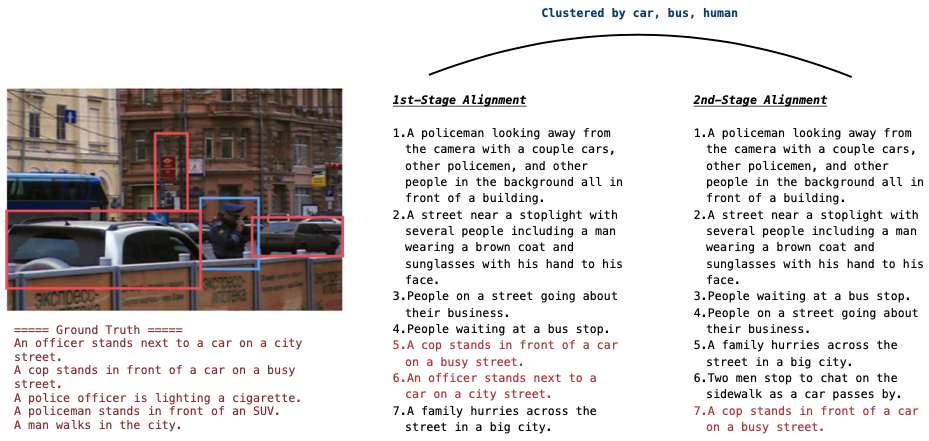

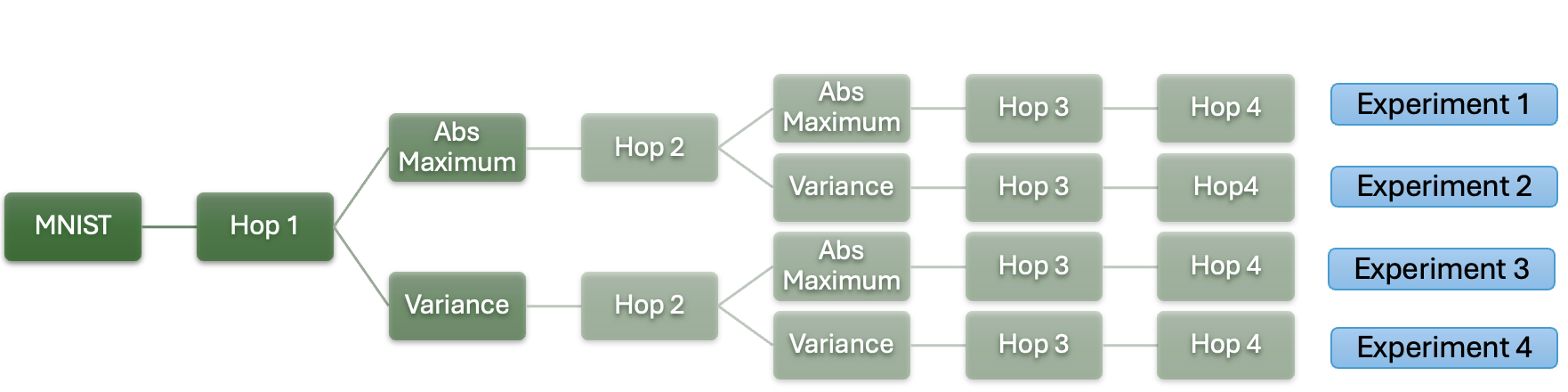

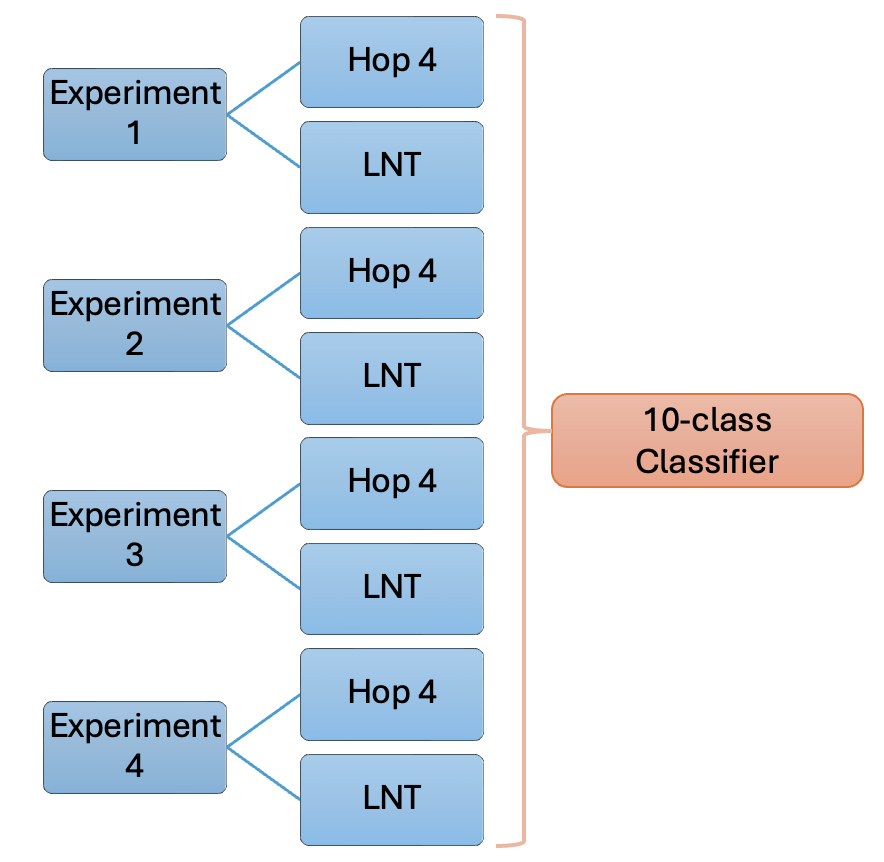

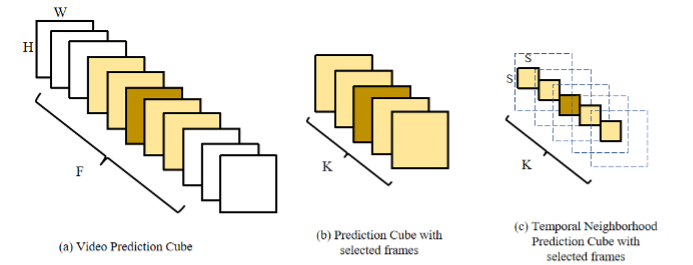

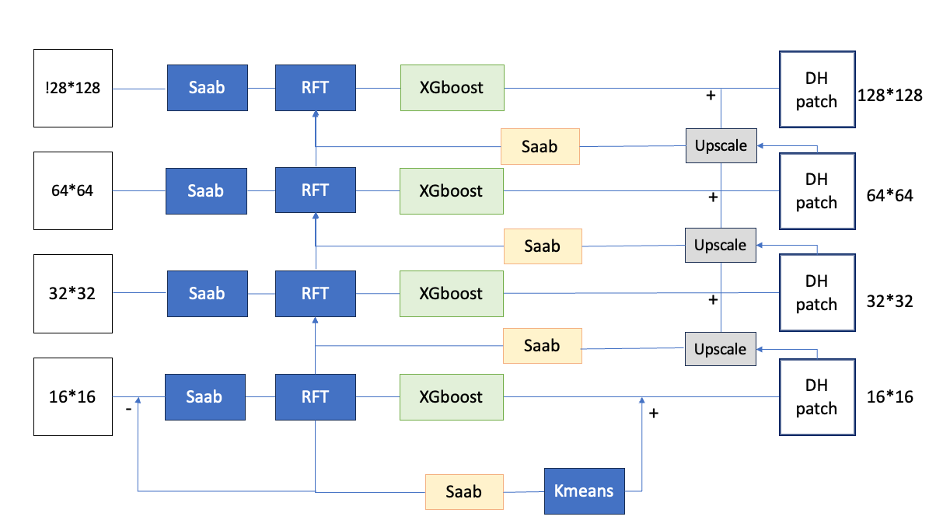

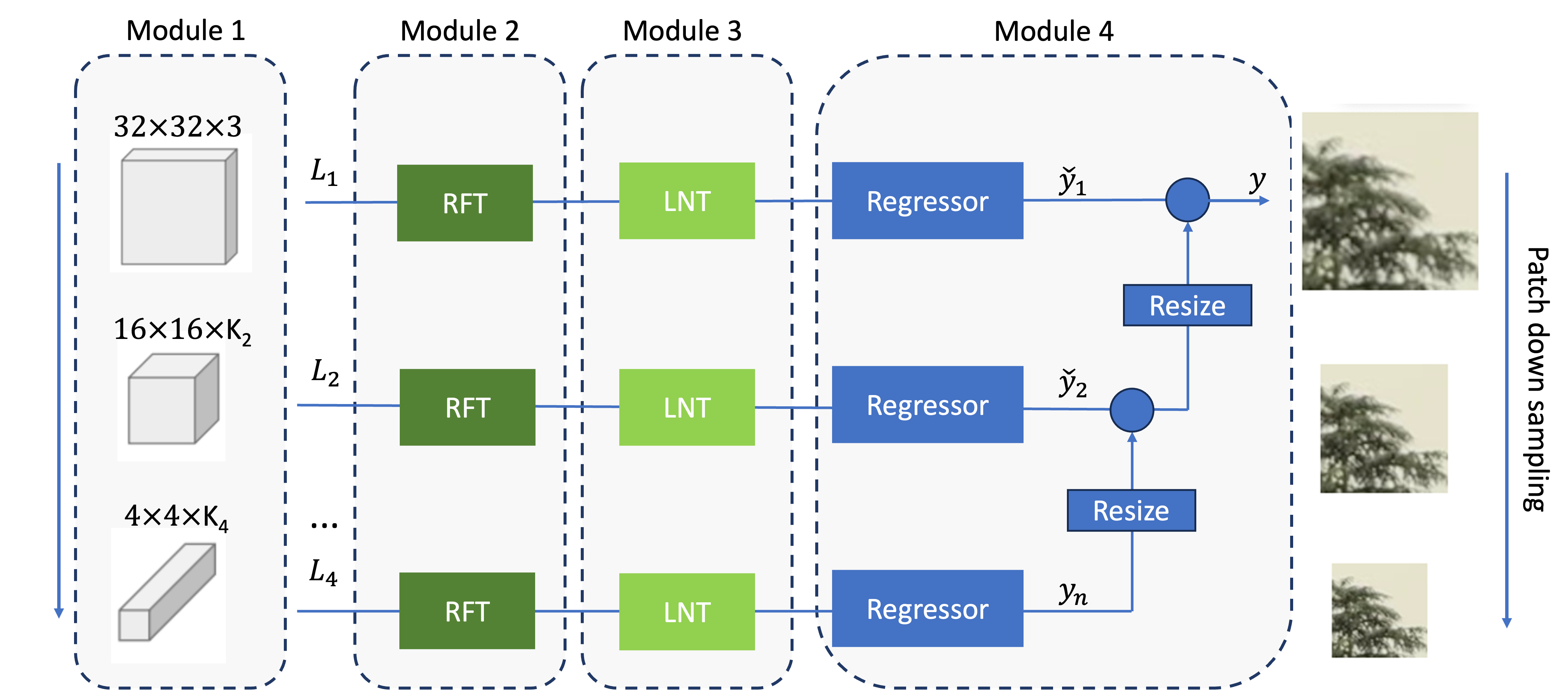

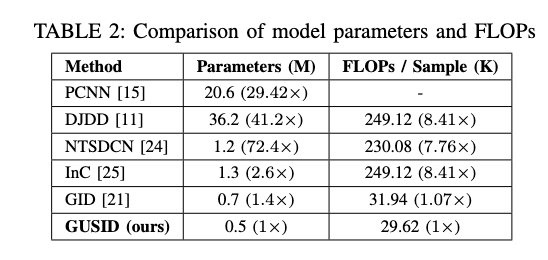

Hence, we propose a green learning solution, Green Multi-Modal Alignment (GMA), for computational efficiency and mathematical transparency. GMA reduces the number of trainable parameters to 3% of those required to fine-tune the entire image and text encoders. The model is composed of three modules: (1) Clustering, (2) Feature Selection, and (3) Alignment. The clustering module divides the whole dataset into subsets of similar image-text pairs, which reduces the divergence among training samples. The feature selection module reduces the feature dimension and thereby lowers the computational requirements. The importance of each feature can be interpreted as statistical evidence supporting our reasoning. The alignment module uses a linear projection, which guarantees that an inverse projection exists for retrieval in both directions, namely image-to-text and text-to-image retrieval.
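To make the alignment idea concrete, the following is a minimal sketch of bidirectional retrieval through a single linear map. It is not the GMA implementation: the least-squares fit, the use of a pseudo-inverse for the reverse direction, the cosine-similarity ranking, the synthetic features, and all function and variable names (`fit_linear_alignment`, `retrieve`, `true_map`) are assumptions made for illustration. The clustering and feature selection modules are omitted.

```python
import numpy as np


def fit_linear_alignment(img_feats, txt_feats):
    """Least-squares fit of W such that img_feats @ W approximates txt_feats.

    Assumption: ordinary least squares stands in for whatever fitting
    procedure the alignment module actually uses.
    """
    W, *_ = np.linalg.lstsq(img_feats, txt_feats, rcond=None)
    return W


def retrieve(queries, gallery):
    """For each query row, return the index of the most cosine-similar gallery row."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    return np.argmax(q @ g.T, axis=1)


# Synthetic stand-ins for frozen encoder outputs (hypothetical data):
# text features are a noisy, well-conditioned linear function of image features.
rng = np.random.default_rng(0)
n, d = 200, 16
img = rng.normal(size=(n, d))
true_map = np.eye(d) + 0.1 * rng.normal(size=(d, d))
txt = img @ true_map + 0.01 * rng.normal(size=(n, d))

W = fit_linear_alignment(img, txt)   # image space -> text space
W_inv = np.linalg.pinv(W)            # text space -> image space

i2t = retrieve(img @ W, txt)         # image-to-text retrieval
t2i = retrieve(txt @ W_inv, img)     # text-to-image retrieval

i2t_acc = float(np.mean(i2t == np.arange(n)))
t2i_acc = float(np.mean(t2i == np.arange(n)))
print(f"image-to-text top-1: {i2t_acc:.2f}, text-to-image top-1: {t2i_acc:.2f}")
```

Because only the `d × d` matrix `W` is fitted, the encoders themselves stay frozen, and the same matrix serves both retrieval directions through its (pseudo-)inverse. On real features the map is only approximately invertible, so the reverse direction in this sketch should be read as an illustration of the principle rather than a guarantee.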

Experimental results show that our model can outperform state-of-the-art (SOTA) retrieval models in both text-to-image and image-to-text retrieval on the Flickr30k and MS-COCO datasets. In addition, our alignment process can incorporate visual and text encoders that were trained separately, and it generalizes well to unseen image-text pairs.