MCL Research on Texture Synthesis

Automatic synthesis of visually pleasing texture that resembles an exemplary texture finds applications in computer graphics. Texture synthesis has been studied for several decades because it is also of theoretical interest in texture analysis and modeling. Texture can be synthesized pixel-by-pixel or patch-by-patch based on an exemplary pattern. In pixel-based synthesis, each pixel is synthesized from its conditional probability given its square neighborhood, and this probability is estimated by a statistical method. Generally, patch-based texture synthesis yields higher quality than pixel-based texture synthesis. Yet, searching the whole image for matching patches is extremely slow. To speed up the process, small patches of the exemplary texture can be stitched together to form a larger region. Although these methods can produce texture of higher quality, the diversity of the produced textures is limited. Besides synthesis in the spatial domain, texture images can be transformed to the spectral domain with certain filters (or kernels), so that the statistical correlation of filter responses can be exploited for texture synthesis. Commonly used kernels include Gabor filters and steerable pyramid filter banks.
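To make the patch-by-patch idea concrete, here is a minimal sketch in Python with NumPy. It is not the NITES method and not any specific published algorithm: it tiles the output with exemplar patches and, for each position, keeps the candidate that best agrees with the already placed texture in the overlap region (in the spirit of image quilting, but without a minimum-error seam cut). The function name `synthesize_by_patches` and the parameters `patch`, `overlap`, and `candidates` are hypothetical choices for illustration. Sampling a limited number of random candidates instead of scanning the whole exemplar reflects the speed concern mentioned above.

```python
import numpy as np


def synthesize_by_patches(exemplar, out_size, patch=16, overlap=4,
                          candidates=50, seed=0):
    """Tile a square output with exemplar patches that agree on overlaps.

    Illustrative sketch only: assumes the exemplar is at least
    `patch` x `patch` pixels (grayscale or with a trailing channel axis).
    """
    rng = np.random.default_rng(seed)
    h, w = exemplar.shape[:2]
    step = patch - overlap
    # Number of patches per axis needed to cover out_size pixels.
    n = int(np.ceil((out_size - overlap) / step))
    size = n * step + overlap
    out = np.zeros((size, size) + exemplar.shape[2:], dtype=np.float64)

    for i in range(n):
        for j in range(n):
            y, x = i * step, j * step
            # Draw random candidate positions instead of searching the
            # whole exemplar exhaustively.
            ys = rng.integers(0, h - patch + 1, size=candidates)
            xs = rng.integers(0, w - patch + 1, size=candidates)
            best, best_err = None, np.inf
            for cy, cx in zip(ys, xs):
                cand = exemplar[cy:cy + patch, cx:cx + patch].astype(np.float64)
                err = 0.0
                if i > 0:  # compare with the strip shared with the row above
                    diff = cand[:overlap] - out[y:y + overlap, x:x + patch]
                    err += np.sum(diff ** 2)
                if j > 0:  # compare with the strip shared with the left patch
                    diff = cand[:, :overlap] - out[y:y + patch, x:x + overlap]
                    err += np.sum(diff ** 2)
                if err < best_err:
                    best, best_err = cand, err
            # Paste the best candidate; it overwrites the overlap strips.
            out[y:y + patch, x:x + patch] = best
    # Crop the padded canvas back to the requested size.
    return out[:out_size, :out_size]
```

Calling `synthesize_by_patches(exemplar, 256)` on a 2-D array returns a 256 x 256 array whose pixels are all copied from the exemplar. Because every output pixel is a verbatim copy, the sketch also illustrates the limited diversity of stitched results noted above.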

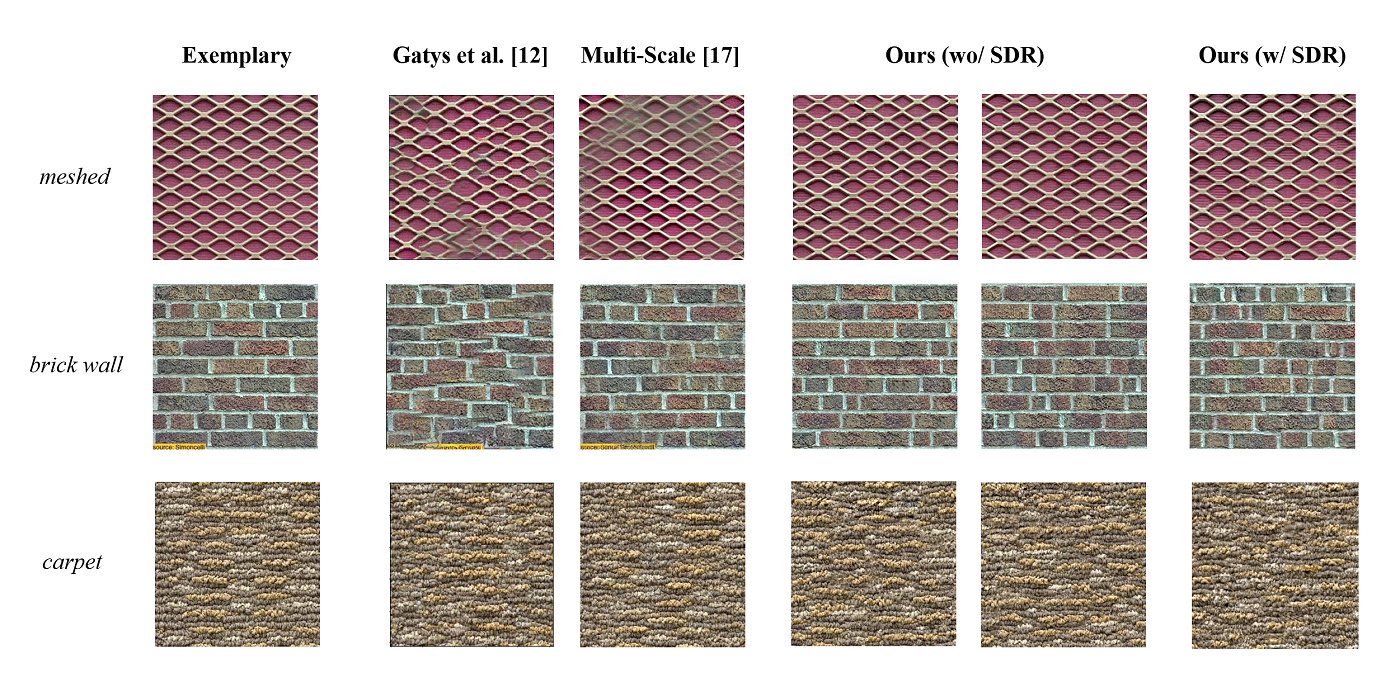

We have witnessed an amazing quality improvement in synthesized texture over the last five to six years due to the resurgence of neural networks. Texture synthesis based on deep learning (DL), such as Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs), yields visually pleasing results. DL-based methods learn transform kernels from large amounts of training data through end-to-end optimization. However, these methods have two main shortcomings: 1) a lack of mathematical transparency and 2) high training and inference complexity. To address these drawbacks, we investigate a non-parametric and interpretable texture synthesis method called NITES [1].

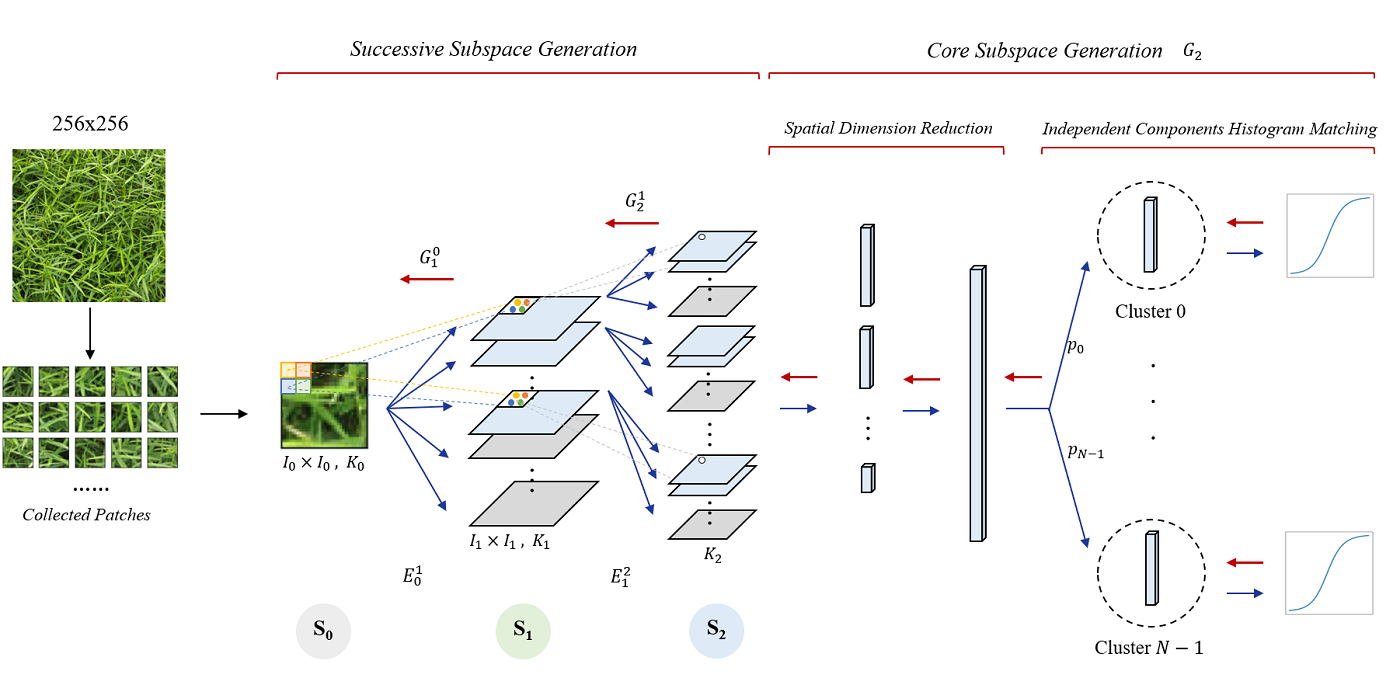

NITES consists of three steps. First, it analyzes the exemplary texture to obtain its joint spatial-spectral [...]