MCL Research on 3D Whole-Brain Image Analysis in Mice

Mouse brains are widely used as model systems in medical studies because they share many functional and structural similarities with the human brain. By observing biomarkers in brain tissue, researchers can gain insight into how neurons and blood vessels interact to support processes such as learning, memory, and sensory perception, particularly in response to triggers such as contentment or stress.

Our studies on 3D microscopic images of mouse brains involve two biomarkers: lectin and cFOS. Lectin is a protein that binds specifically to certain sugar molecules on the surface of the cells lining blood vessels, so it can be stained with a fluorescent dye to mark the blood-vessel network. cFOS labelling, on the other hand, marks neurons that were recently active, serving as an “activity map” of the brain regions responding to external stimuli or behavioural tasks. Detailed information on both biomarkers is obtained by dissecting the mouse (cFOS in particular must be observed around 2 hours after stimulation), which means that these biomarkers cannot be observed through imaging of the human brain.

These datasets are large, high-dimensional, and require pixel-level interpretation. A single brain can produce gigabytes to terabytes of 3D imaging data, containing complex vessel networks and cellular activation patterns. Manual labelling of such data is time-consuming, and the results can vary with the experience of the person labelling. Because cFOS shows up as very small points in the image, labelling it accurately is also difficult in practice.
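To give a feel for the "gigabytes to terabytes" range, here is a back-of-the-envelope sketch of the raw size of a two-channel (lectin and cFOS) image volume. The volume dimensions, the 16-bit voxel depth, and the function names are all hypothetical values chosen for illustration, not the parameters of our datasets.

```python
def volume_size_bytes(shape_zyx, channels=2, bytes_per_voxel=2):
    """Raw (uncompressed) size in bytes of a multi-channel 3D image stack.

    channels=2 stands for the lectin and cFOS channels;
    bytes_per_voxel=2 assumes 16-bit intensities (an assumption).
    """
    z, y, x = shape_zyx
    return z * y * x * channels * bytes_per_voxel


def human_readable(n_bytes):
    """Format a byte count using decimal units (1 GB = 10**9 bytes)."""
    for unit in ("B", "kB", "MB", "GB", "TB"):
        if n_bytes < 1000 or unit == "TB":
            return f"{n_bytes:.1f} {unit}"
        n_bytes /= 1000


# Hypothetical volume dimensions (z, y, x) in voxels, for illustration only.
coarse = volume_size_bytes((500, 2000, 2000))
fine = volume_size_bytes((4000, 10000, 10000))

print(human_readable(coarse))  # 8.0 GB
print(human_readable(fine))    # 1.6 TB
```

Under these assumptions, doubling the sampling density along every axis multiplies the voxel count by eight, which is why whole-brain scans at cellular resolution move from gigabytes into terabytes so quickly.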

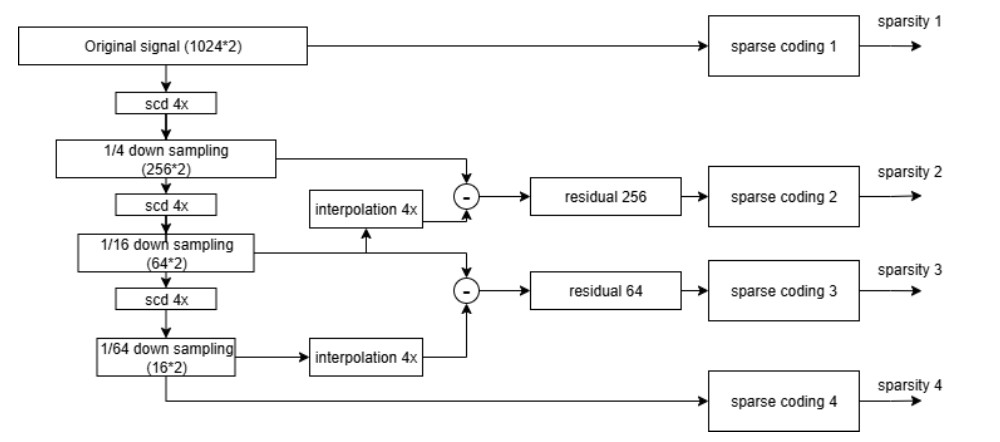

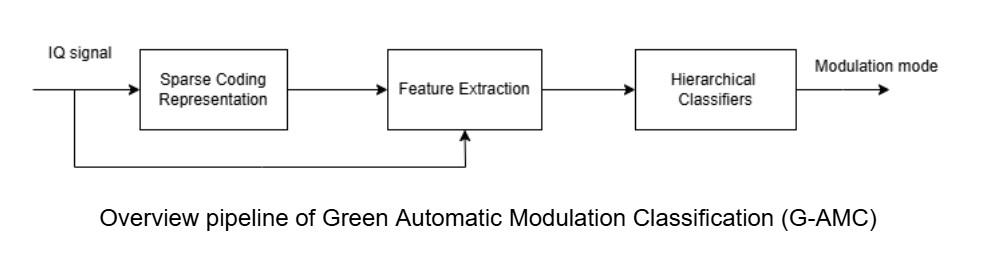

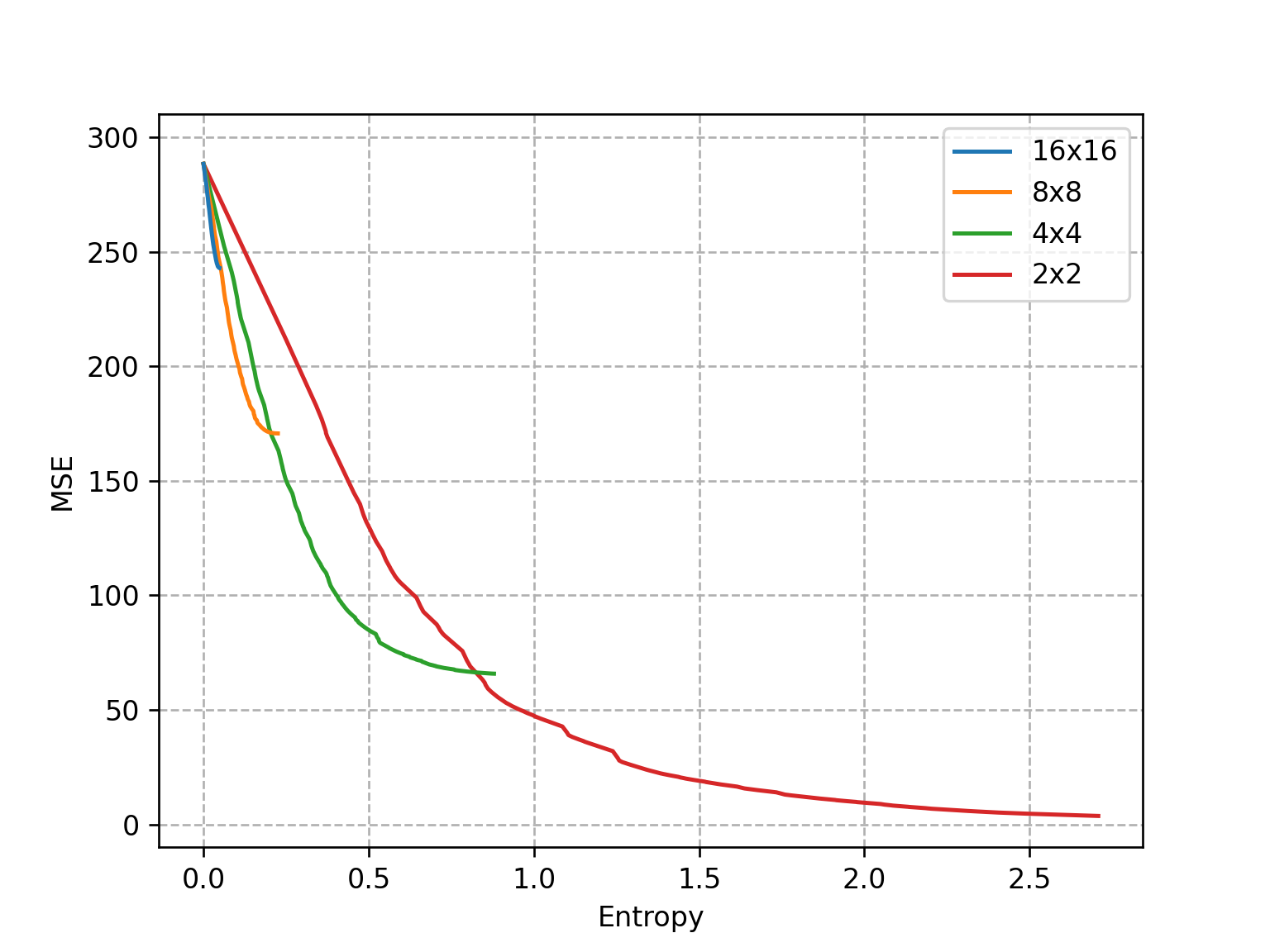

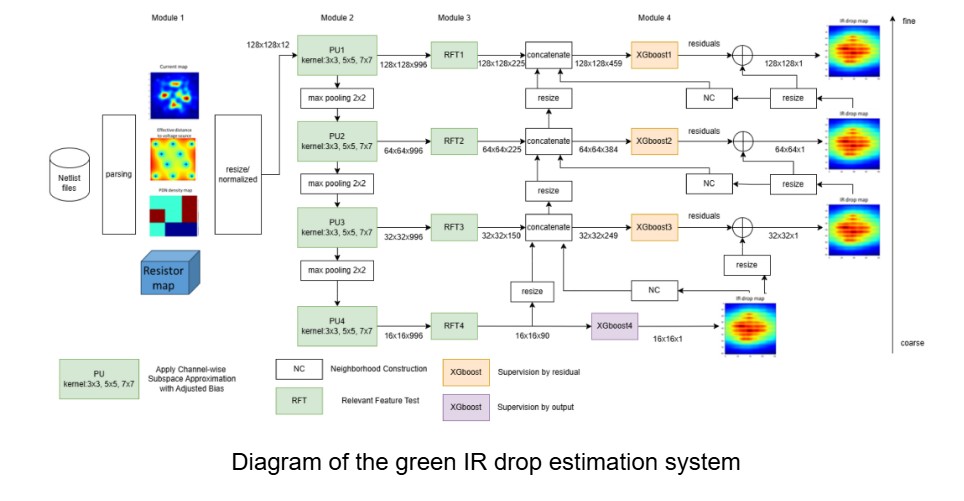

Green learning offers an efficient method for automatically segmenting and analysing these complex images. Deep learning often demands extensive labelled data and computational resources, and labelled data is often hard to find in medical applications. In contrast, [...]