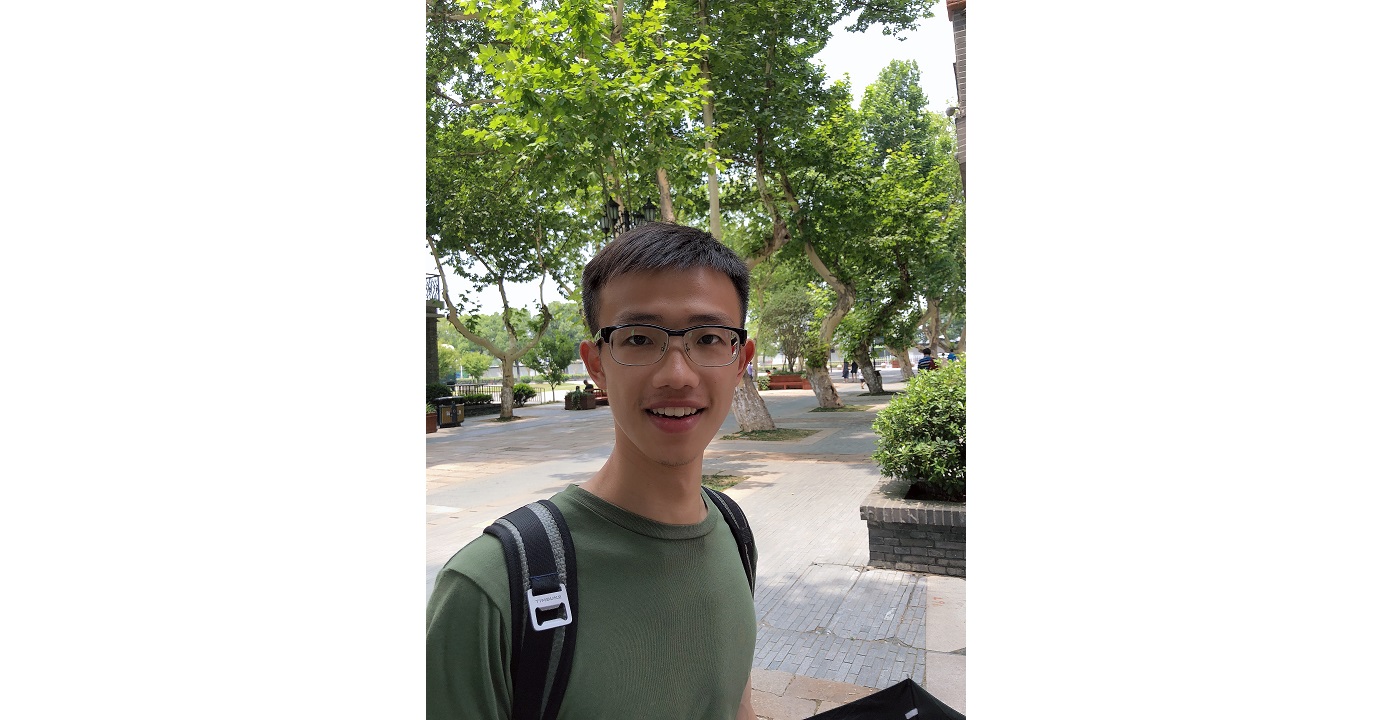

Welcome MCL New Member Jiesi Hu

Could you briefly introduce yourself and your research interests?

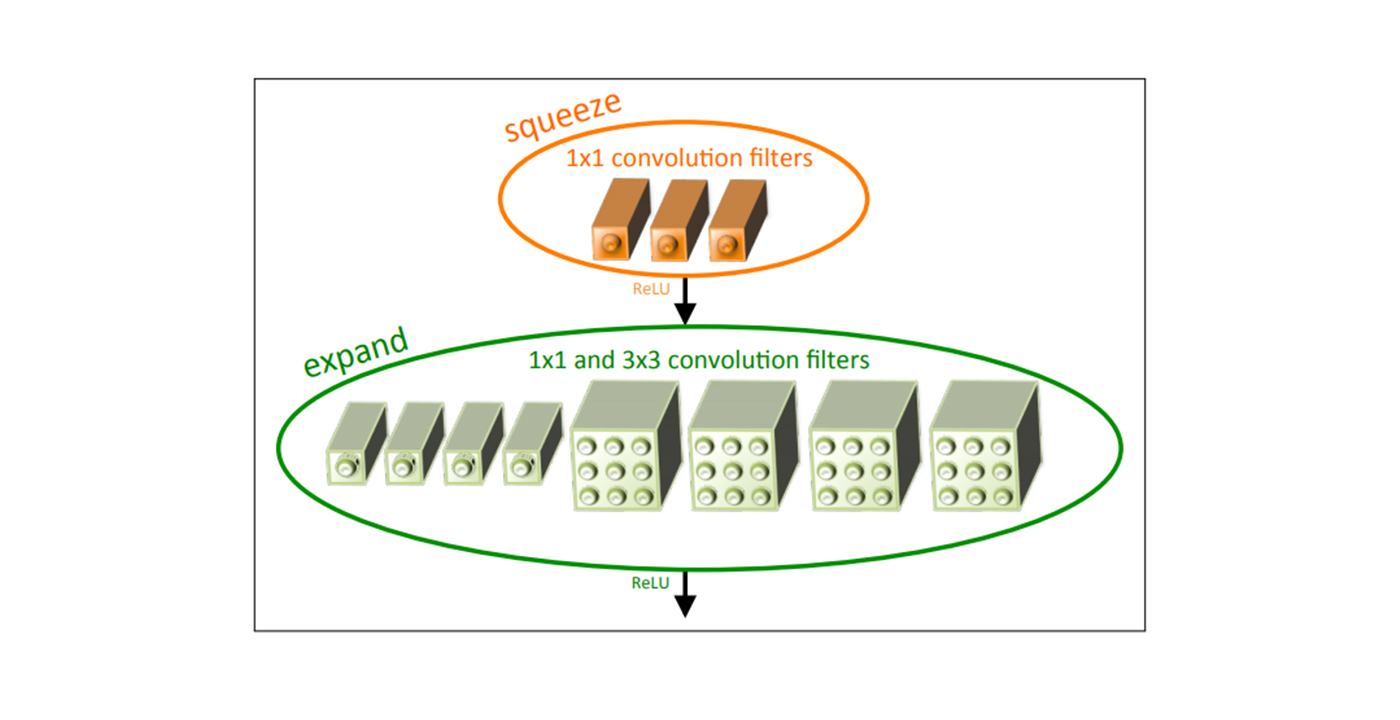

I am an EE master’s student at Viterbi. I just finished the first year of my master’s program. I received my bachelor’s degree from the electronic college at Nanjing University of Posts and Telecommunications. I like playing tennis, badminton, and table tennis, as well as jogging. I also joined the tennis club at USC. My areas of interest are machine learning and signal processing. The topic I want to study is video tracking, which I think is a very useful technique.

What is your impression of MCL and USC?

I think the members of MCL are very kind. They are always willing to help me when I have questions about the course. They spend a lot of time explaining until I fully understand. Besides, I think Professor Kuo is a good manager. He personally guides everyone, and all members of MCL have clear goals and tasks.

What are your future expectations and plans at MCL?

I hope to work hard and learn more about video tracking and machine learning, and to gain deeper insight into them. I know I still lack both experience and knowledge, so I want to learn how to do research and prepare academic reports. If possible, I also want to make some contributions to MCL. In the future, I would like to become a PhD student.