MCL Research on Source-Distribution-Aimed Generative Model

There are typically two types of statistical models in machine learning: discriminative models and generative models. Unlike discriminative models, which aim to draw decision boundaries, generative models aim to model the data distribution over the whole space. Generative models tackle a more difficult task than discriminative models because they need to model complicated distributions. For example, a generative model should capture correlations such as “things that look like boats are likely to appear near things that look like water,” while a discriminative model only differentiates “boat” from “not boat.”
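The contrast can be illustrated with a toy sketch. It is not part of the SODA model: the 1-D feature, the two Gaussian classes, and the helper names `fit_threshold`, `fit_gaussian`, and `gaussian_pdf` are all assumptions made for illustration. The discriminative side learns only a cut point between the classes, while the generative side estimates a distribution that can score and sample new data.

```python
import math
import random
import statistics


def fit_threshold(neg, pos):
    """Discriminative view: pick the cut point with the fewest training errors."""
    candidates = sorted(neg + pos)
    best_t, best_err = candidates[0], float("inf")
    for t in candidates:
        # Points >= t are predicted "boat"; count mistakes on both classes.
        err = sum(x >= t for x in neg) + sum(x < t for x in pos)
        if err < best_err:
            best_t, best_err = t, err
    return best_t


def fit_gaussian(samples):
    """Generative view: estimate the mean and standard deviation of one class."""
    return statistics.fmean(samples), statistics.stdev(samples)


def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


# Hypothetical 1-D feature values for the two classes (synthetic data).
rng = random.Random(0)
not_boat = [rng.gauss(0.0, 1.0) for _ in range(500)]
boat = [rng.gauss(4.0, 1.0) for _ in range(500)]

# Discriminative model: a single decision boundary, nothing more.
threshold = fit_threshold(not_boat, boat)

# Generative model: a distribution for the "boat" class that can be sampled.
boat_mean, boat_std = fit_gaussian(boat)
new_boat = [rng.gauss(boat_mean, boat_std) for _ in range(5)]
```

The threshold answers only "boat or not boat," whereas the fitted Gaussian can also assign a density to any feature value through `gaussian_pdf` and draw new samples. Real image distributions are far more complicated than a single Gaussian, which is why the generative task is harder.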

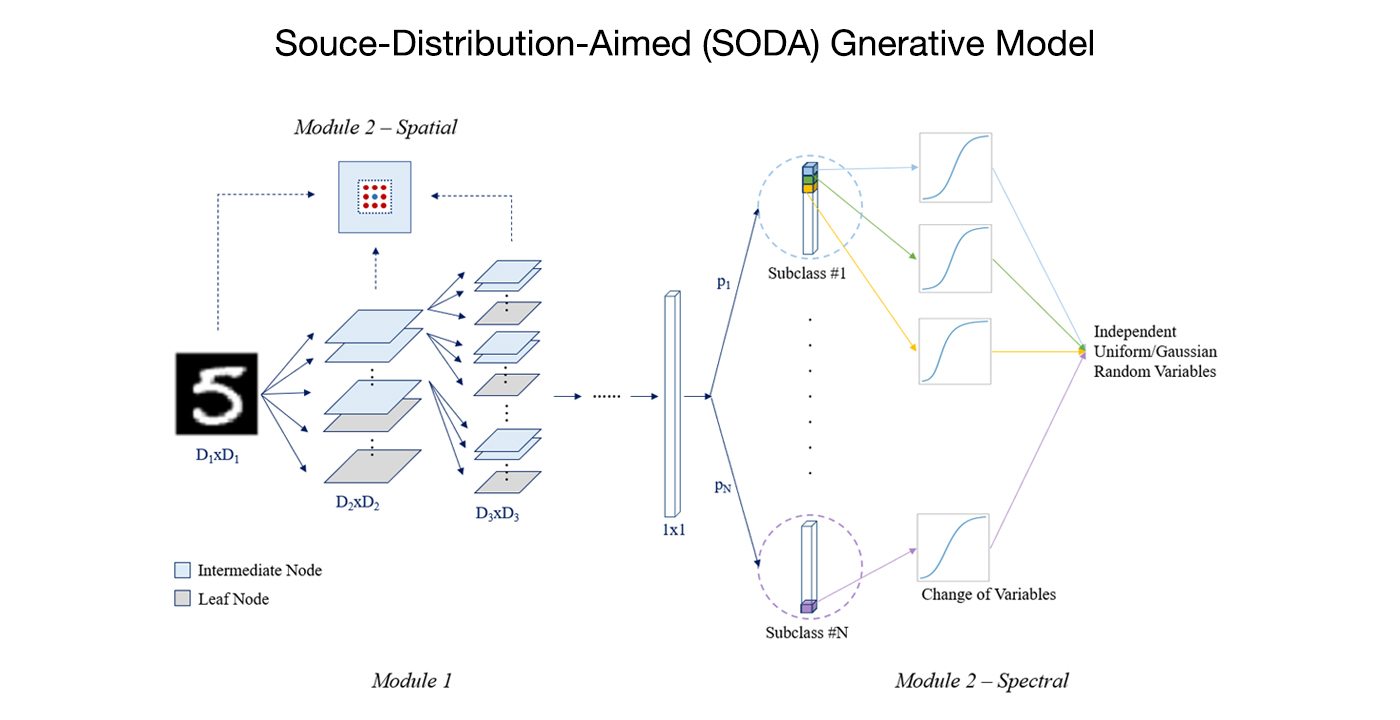

Image generative models have become popular in recent years because Generative Adversarial Networks (GANs) can generate realistic natural images. GANs, however, have no clear relationship to probability distributions and suffer from a difficult training process and the mode dropping problem. Although these two problems may be alleviated by using different loss functions [1], the underlying relationship to probability distributions remains vague in GANs. This motivates us to develop a SOurce-Distribution-Aimed (SODA) generative model that aims to provide clear probability distribution functions to describe the data distribution.

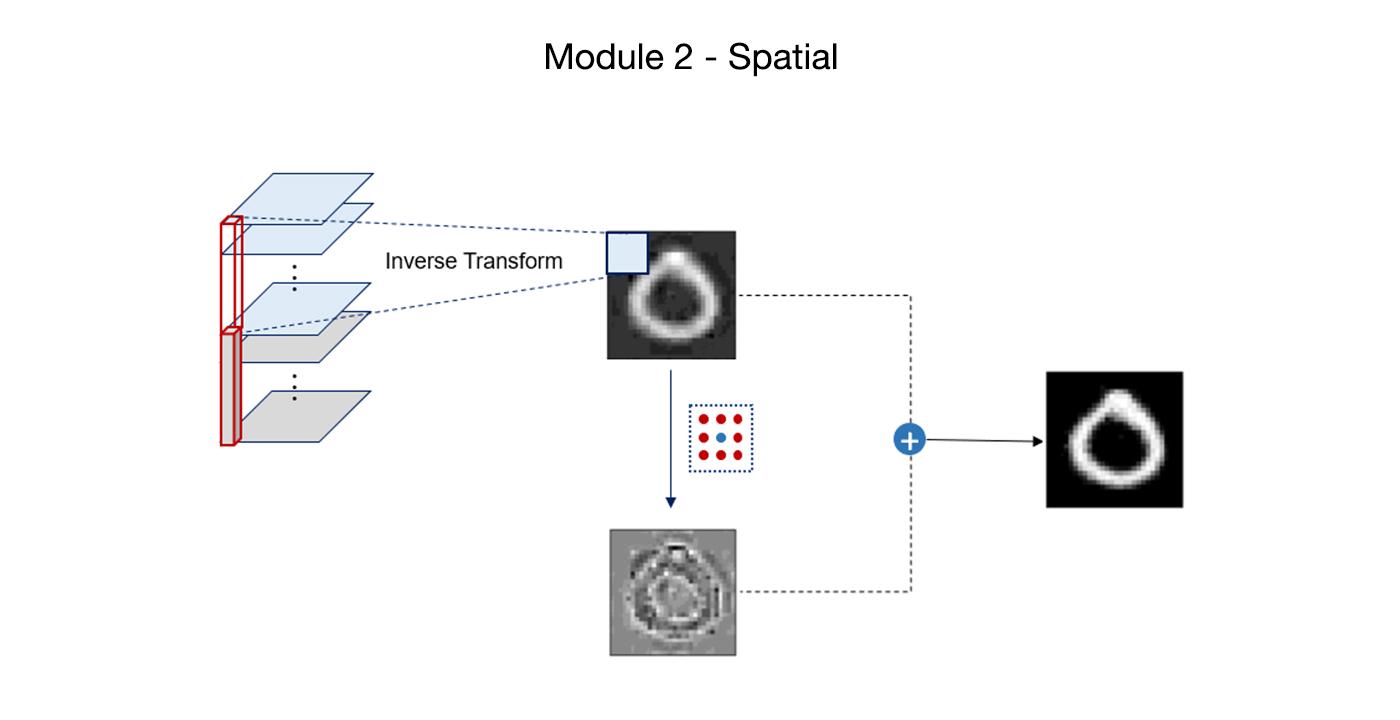

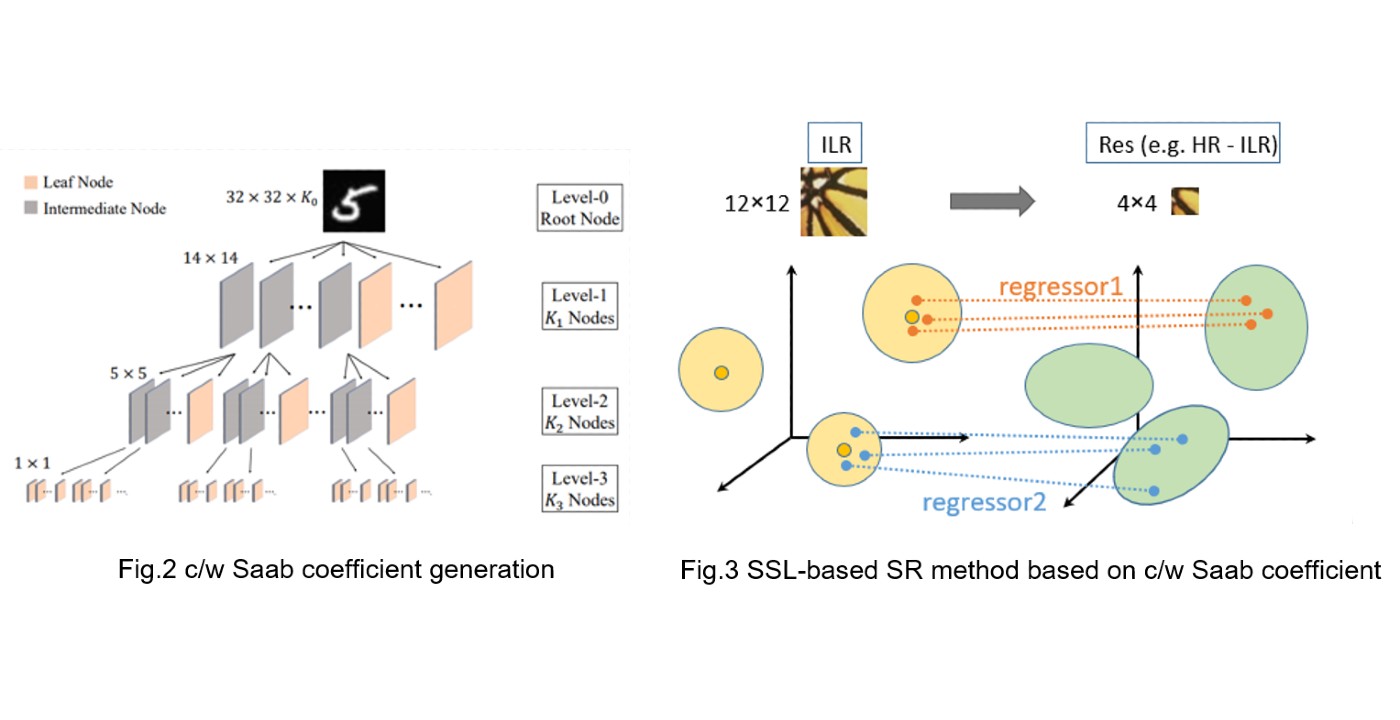

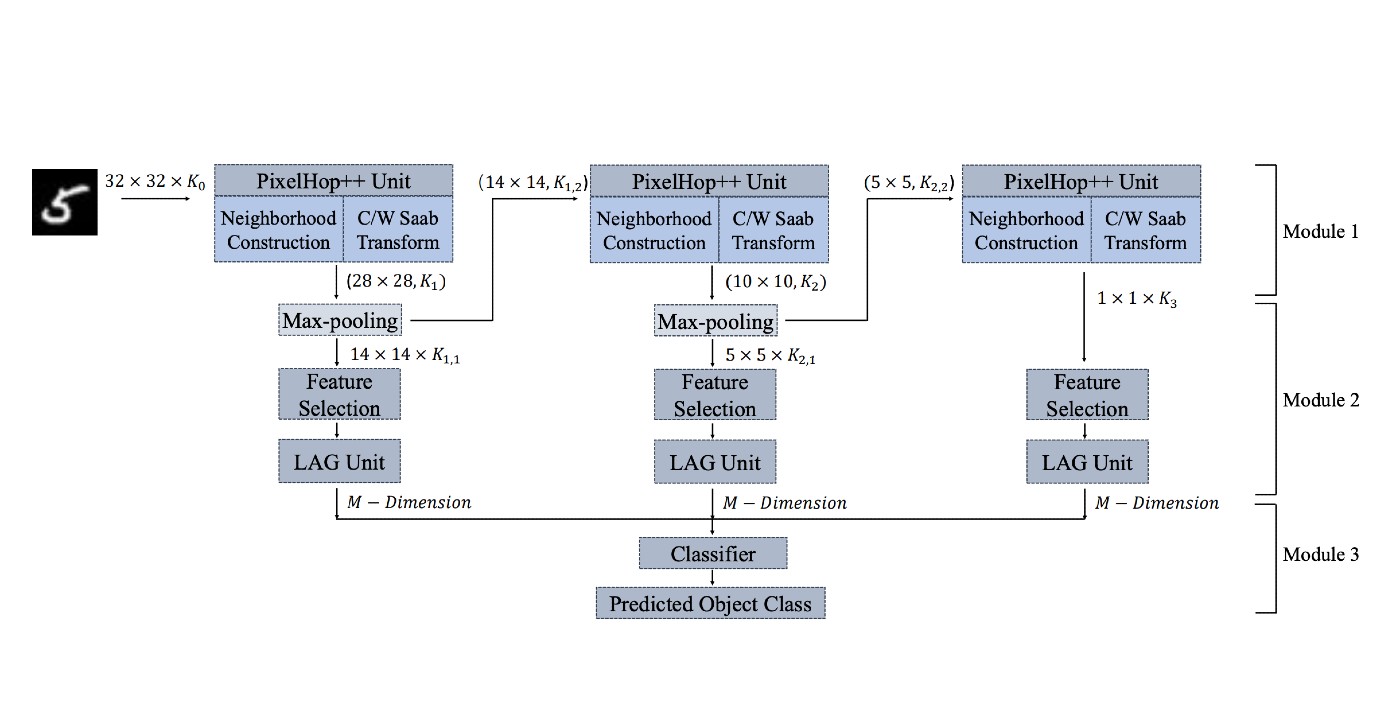

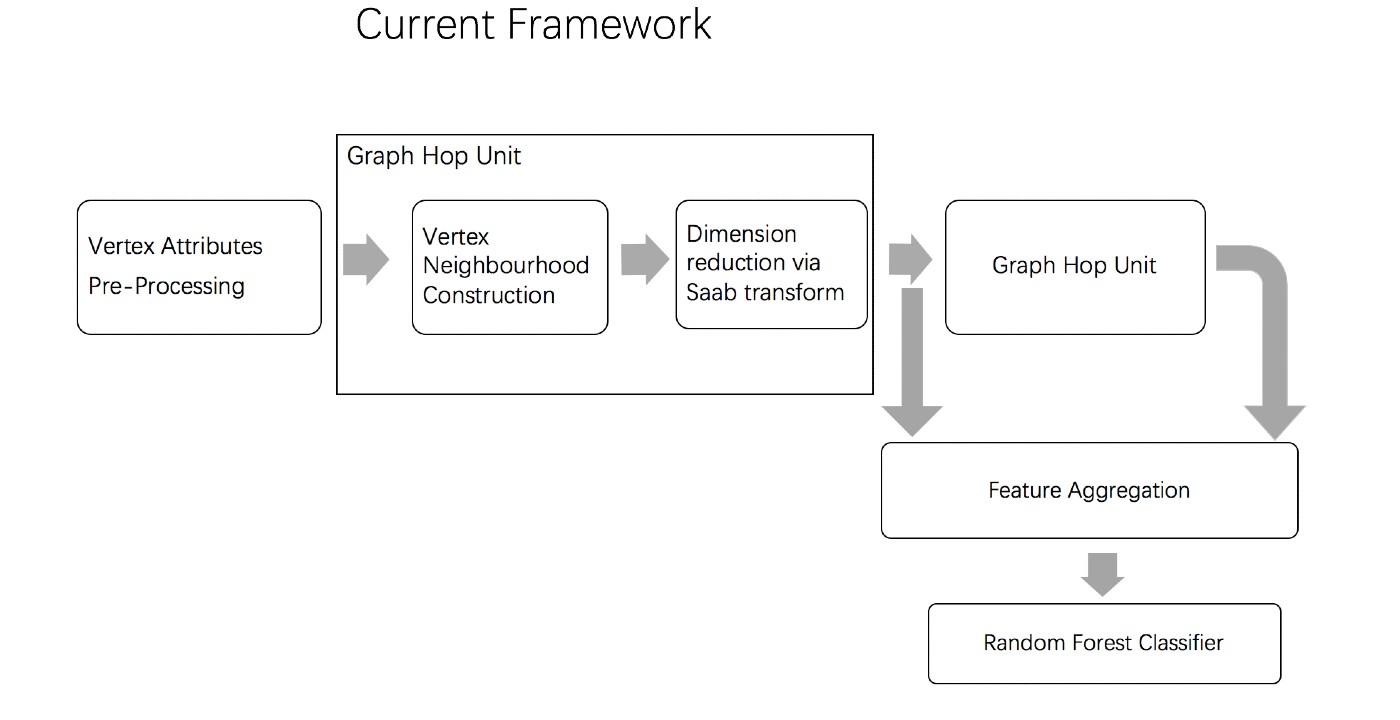

There are two main modules in our SODA generative model. One finds proper source data representations, and the other determines the source data distribution in each representation. One proper representation for source data is the joint spatial-spectral representation proposed by Kuo et al. [2, 3]. By transforming between the spectral domain and the spatial domain, a rich set of spectral and spatial representations can be obtained. Spectral representations are vectors of Saab coefficients, while spatial representations are pixels in an image or Saab coefficients arranged according to their pixel order in the spatial domain. The spectral representation at the last stage gives a global view of an image, while the spatial representations describe details in [...]