MCL Research on Renal Imaging Analysis

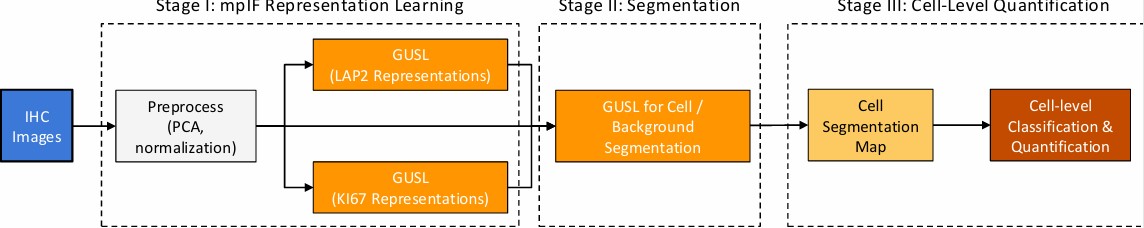

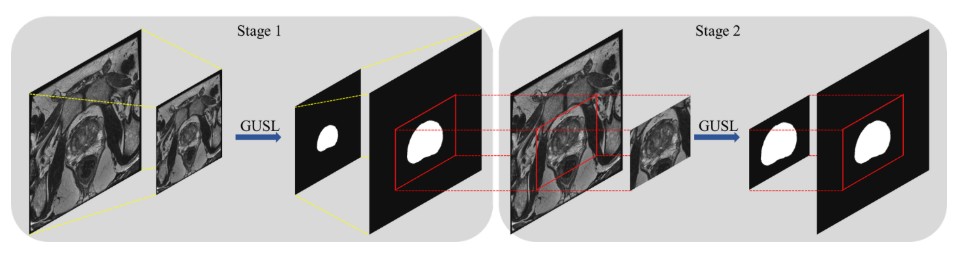

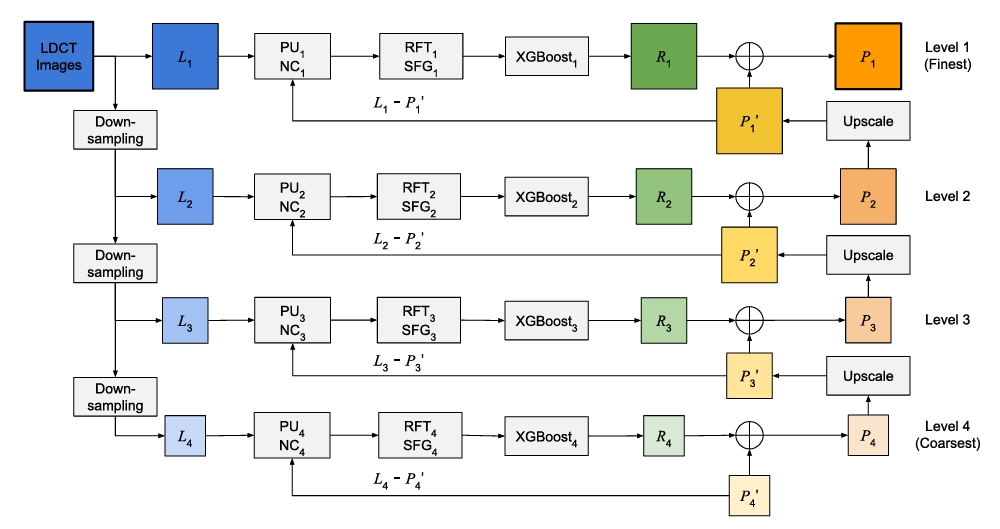

Our paper proposes a multi-stage Green U-shaped Learning (GUSL) framework for efficient and reliable immunohistochemistry (IHC) image quantification. As shown in the overall pipeline (Stage I–III), the system first preprocesses the input IHC image with normalization and PCA. In Stage I, marker-specific GUSL modules learn intermediate representations informed by multiplex immunofluorescence (mpIF), such as LAP2- and KI67-related cues, from co-registered training data. In Stage II, a dedicated GUSL module integrates these representations to generate a cell/background segmentation map in a coarse-to-fine manner with residual refinement. In Stage III, connected cell regions are extracted, and each cell is classified as biomarker-positive or biomarker-negative. The entire framework follows a feedforward, modular design without end-to-end backpropagation, which reduces computational cost while keeping the system transparent and interpretable.
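To make the Stage III step concrete, here is a minimal sketch of extracting connected cell regions from a binary segmentation map and assigning each region a positive/negative label. It is an illustration under stated assumptions, not the paper's implementation: the function names `label_cells` and `classify_cells`, the 4-connectivity rule, and the mean-intensity threshold are all hypothetical stand-ins, and the threshold in particular replaces whatever cell-level classifier the framework actually uses.

```python
from collections import deque


def label_cells(mask):
    """Label 4-connected foreground regions of a binary mask (list of rows of 0/1).

    Returns (labels, n_cells), where labels has the same shape as mask,
    0 marks background, and 1..n_cells mark individual cell regions.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    n_cells = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                # Found an unlabeled foreground pixel: start a new region
                # and flood-fill it with a breadth-first search.
                n_cells += 1
                labels[r][c] = n_cells
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = n_cells
                            queue.append((ny, nx))
    return labels, n_cells


def classify_cells(labels, n_cells, marker, threshold=0.5):
    """Map each cell id to True (positive) or False (negative).

    Assumption: a cell is positive when its mean marker response exceeds a
    fixed threshold. This is a placeholder for the cell-level classifier.
    """
    sums = [0.0] * (n_cells + 1)
    counts = [0] * (n_cells + 1)
    for label_row, marker_row in zip(labels, marker):
        for cell_id, value in zip(label_row, marker_row):
            if cell_id:
                sums[cell_id] += value
                counts[cell_id] += 1
    return {i: sums[i] / counts[i] > threshold for i in range(1, n_cells + 1)}


# Toy example: two cells, one with a strong marker response and one with a weak one.
mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
marker = [
    [0.9, 0.8, 0.0, 0.0],
    [0.7, 0.0, 0.0, 0.2],
    [0.0, 0.0, 0.0, 0.1],
]
labels, n_cells = label_cells(mask)
result = classify_cells(labels, n_cells, marker)
```

In this toy input, the top-left region is labeled as cell 1 and the right-hand region as cell 2. In practice, a library routine for connected-component labeling would replace the hand-written flood fill, and per-cell features would feed a trained classifier rather than a fixed threshold.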

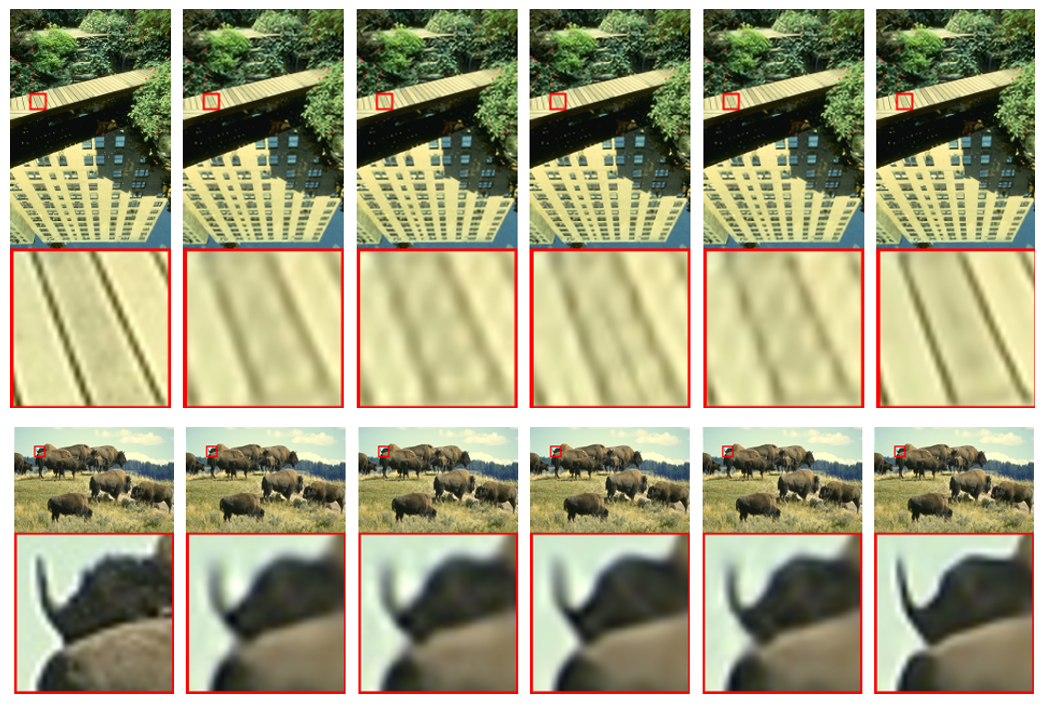

Qualitative examples are shown in the second figure. Column (a) presents the input brightfield IHC images. Column (b) shows the ground-truth cell segmentation and biomarker labels. Columns (c) and (d) compare results from a representative deep learning baseline and our GUSL method, respectively. The proposed method produces clearer cell boundaries and more consistent positive/negative classification, especially in crowded and low-contrast regions. These visual results are consistent with our quantitative evaluation, which demonstrates competitive segmentation accuracy and improved quantification agreement at much lower model complexity and energy consumption.