MCL Research on Green Image Coding

Image coding is a fundamental multimedia technology. Popular image coding techniques include hybrid and deep learning-based frameworks. Our green image coding (GIC) research explores a new path toward a coding framework that combines low complexity with high coding efficiency.

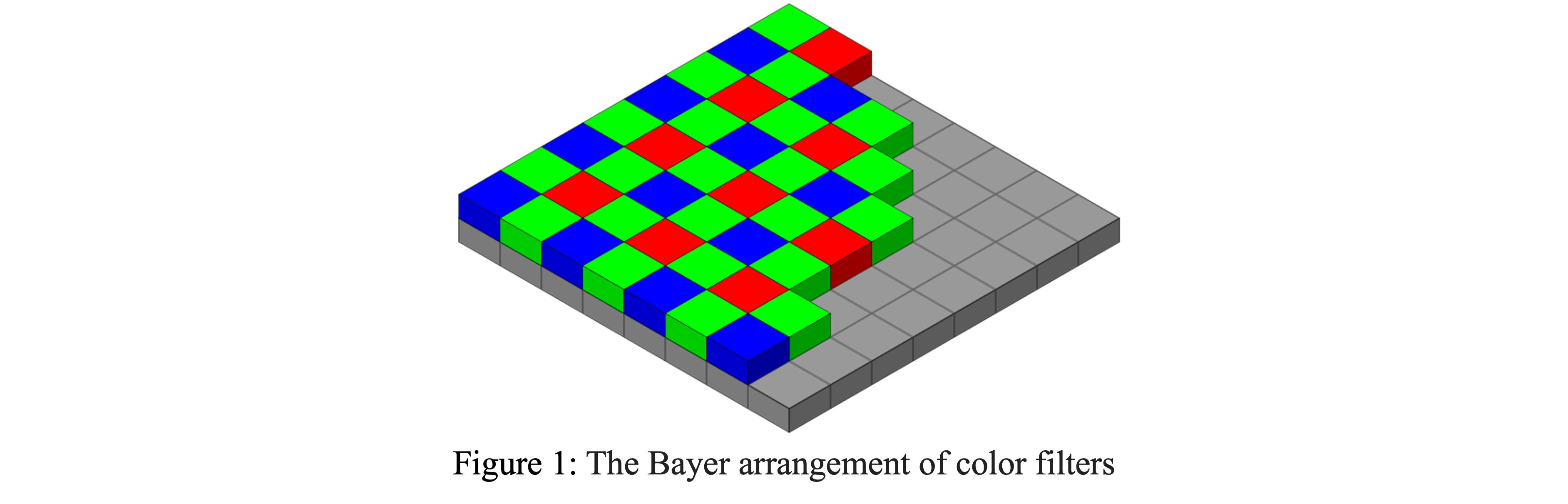

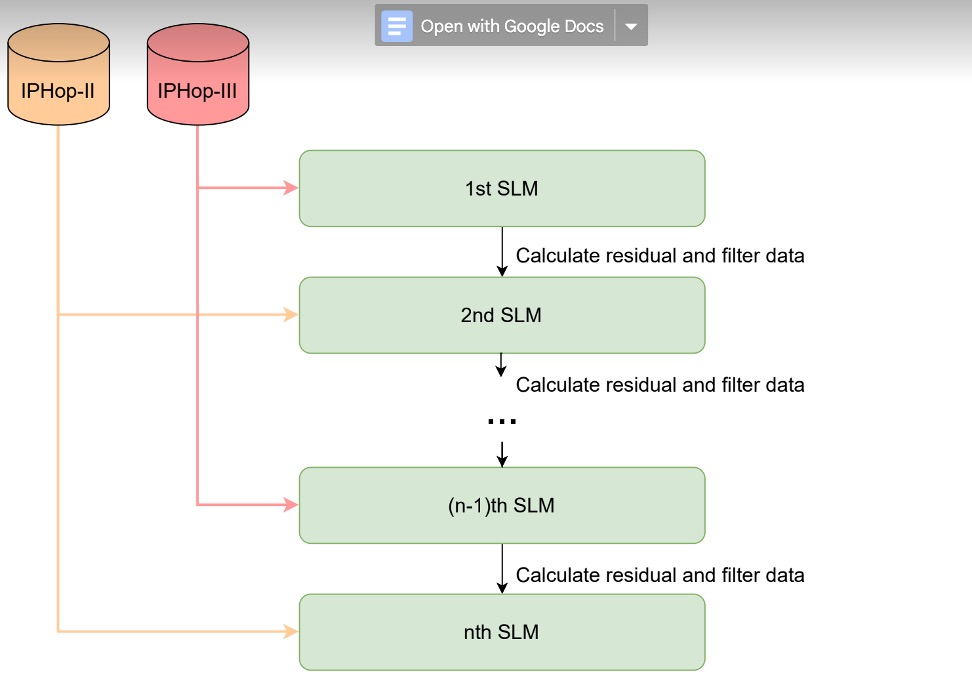

The main characteristics of the proposed GIC are multi-grid representation and vector quantization (VQ). Natural images have rich frequency components and high energy. Multi-grid representation uses resampling techniques to decompose an image into multiple layers, so that its energy and frequency components are distributed across different layers. Each layer is then coded with vector quantization.
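The layered decomposition described above can be illustrated with a minimal sketch. It assumes 2x downsampling by 2x2 averaging and nearest-neighbour upsampling; these filters and the helper names `downsample2`, `upsample2`, `multigrid_decompose`, and `multigrid_reconstruct` are hypothetical choices for illustration, not the resampling actually used in GIC. The coarsest layer keeps a small low-frequency image, and each finer layer stores the residual between the image at that grid and the upsampled coarser grid.

```python
import numpy as np


def downsample2(img):
    """Halve both dimensions by averaging non-overlapping 2x2 blocks (assumed filter)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample2(img):
    """Double both dimensions by nearest-neighbour repetition (assumed filter)."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def multigrid_decompose(img, num_levels):
    """Return [coarsest grid, coarse residual, ..., finest residual].

    Both image sides must be divisible by 2 ** (num_levels - 1).
    """
    grids = [np.asarray(img, dtype=np.float64)]
    for _ in range(num_levels - 1):
        grids.append(downsample2(grids[-1]))
    layers = [grids[-1]]  # coarsest grid: low-frequency content
    # Walk from coarse to fine; each residual holds the detail lost by resampling.
    for fine, coarse in zip(reversed(grids[:-1]), reversed(grids[1:])):
        layers.append(fine - upsample2(coarse))
    return layers


def multigrid_reconstruct(layers):
    """Invert multigrid_decompose by upsampling and adding residuals coarse to fine."""
    rec = layers[0]
    for residual in layers[1:]:
        rec = upsample2(rec) + residual
    return rec


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(16, 16))
layers = multigrid_decompose(image, num_levels=3)
print([layer.shape for layer in layers])  # [(4, 4), (8, 8), (16, 16)]
print(np.allclose(multigrid_reconstruct(layers), image))  # True
```

Without quantization the decomposition is lossless, so all coding loss in such a scheme would come from how each layer is quantized and how many bits it receives.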

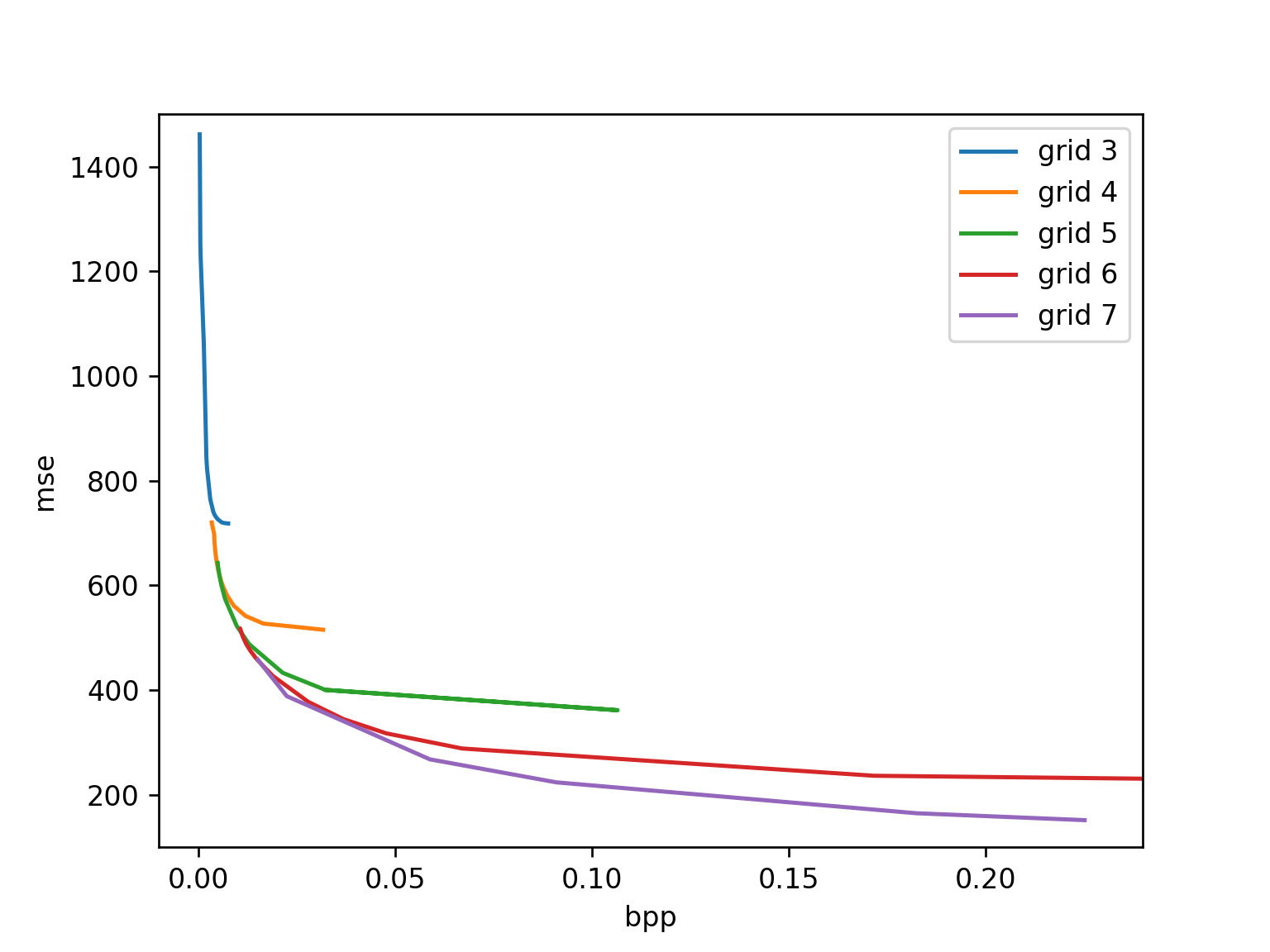

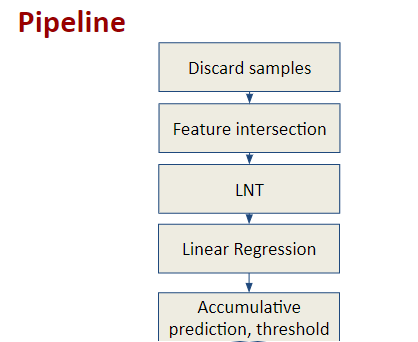

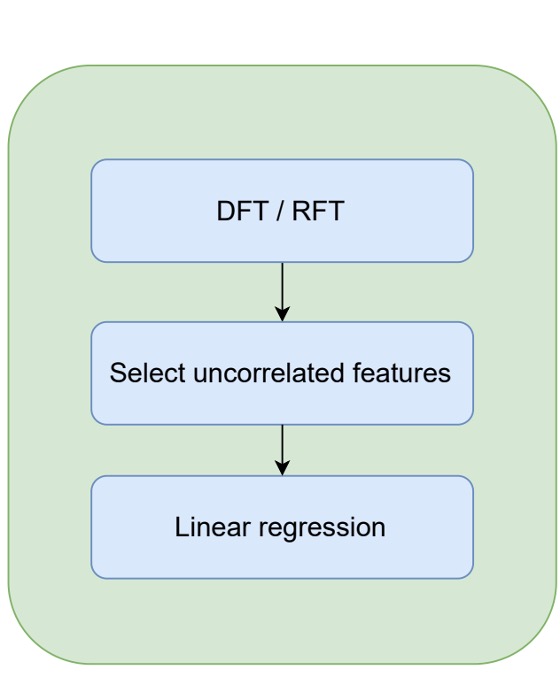
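The per-layer vector quantization step can be sketched as follows. This assumes a codebook trained with plain k-means (Lloyd iterations) on non-overlapping 2x2 blocks of a layer; the block size, the codebook size, and the helper names `blocks_from_layer`, `layer_from_blocks`, `train_codebook`, `vq_encode`, and `vq_decode` are hypothetical, and GIC's actual codebook design may differ. Each block is replaced by the index of its nearest codeword, and the blocks are quantized directly in the sample domain, with no transform applied first.

```python
import numpy as np


def blocks_from_layer(layer, b=2):
    """Cut a layer into non-overlapping b x b blocks, one flattened vector per row."""
    layer = np.asarray(layer, dtype=np.float64)
    h, w = layer.shape
    return (layer.reshape(h // b, b, w // b, b)
                 .transpose(0, 2, 1, 3)
                 .reshape(-1, b * b))


def layer_from_blocks(vectors, shape, b=2):
    """Inverse of blocks_from_layer."""
    h, w = shape
    return (vectors.reshape(h // b, w // b, b, b)
                   .transpose(0, 2, 1, 3)
                   .reshape(h, w))


def vq_encode(vectors, codebook):
    """Return the index of the nearest codeword (squared error) for each vector."""
    d2 = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def vq_decode(indices, codebook):
    """Replace each index by its codeword."""
    return codebook[indices]


def train_codebook(vectors, k, iters=20, seed=0):
    """Plain k-means (Lloyd) codebook training; k must not exceed len(vectors)."""
    rng = np.random.default_rng(seed)
    codebook = vectors[rng.choice(len(vectors), size=k, replace=False)].copy()
    for _ in range(iters):
        idx = vq_encode(vectors, codebook)
        for j in range(k):
            members = vectors[idx == j]
            if len(members):  # keep the old codeword if its cell is empty
                codebook[j] = members.mean(axis=0)
    return codebook


rng = np.random.default_rng(1)
layer = rng.normal(size=(32, 32))         # stand-in for one residual layer
vectors = blocks_from_layer(layer)        # 256 vectors of dimension 4
codebook = train_codebook(vectors, k=16)  # 16 codewords, 4 bits per block index
indices = vq_encode(vectors, codebook)
reconstruction = layer_from_blocks(vq_decode(indices, codebook), layer.shape)
```

Because each codeword covers all four samples of a block jointly, the codebook can place its codewords where correlated blocks actually occur, which a per-sample scalar quantizer cannot do.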

Based on the research on our previous GIC framework [1][2]. We identified two key

issues of the multi-grid representation + vector quantization framework. First, unlike

the traditional coding framework, vector quantization does not need transform.

Because transform decorrelates the input signals, while vector quantization needs

correlation exists among different dimensions of the input signals. Second, when

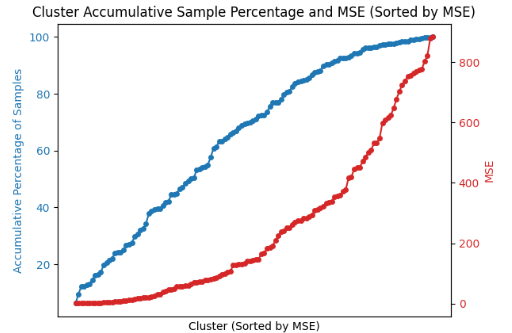

applying multi-grid representation in compression, the key is bitrate allocation, i.e.,

how to assign bits to different layers. For traditional frameworks, bitrate allocation or

rate control happens in different blocks or frames with a relative parallel relationship.

But for our multi-gid representation. The bitrate allocation is a sequential operation.

Because the bitrate of the current layer has a significant influence on the next. For this

issue, we use a slope matching technique to do the bitrate allocation. Specifically,

when the RD slope of the current layer decreases to the start slope of the next, we stop

coding the current layer and switch to the next.
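The slope-matching rule can be sketched as follows. The sketch assumes each layer comes with an operational RD curve, a list of (rate, distortion) points sorted by rate, supplied by a callback that may depend on the operating points already chosen for the coarser layers, which models the sequential dependency between layers. The callback, the names `slope_matching`, `step_slope`, and `toy_curve`, the total `rate_budget`, and the rule that the last layer keeps coding until the budget runs out are hypothetical additions for illustration; the toy RD model is synthetic, not GIC data.

```python
from typing import Callable, List, Tuple

RDPoint = Tuple[float, float]  # (rate in bits, distortion) of one layer
CurveFn = Callable[[int, List[int]], List[RDPoint]]


def step_slope(a: RDPoint, b: RDPoint) -> float:
    """Distortion reduction per extra bit when moving from point a to point b."""
    return (a[1] - b[1]) / (b[0] - a[0])


def slope_matching(curve_of_layer: CurveFn, num_layers: int,
                   rate_budget: float) -> List[int]:
    """Pick an operating point (index into its RD curve) per layer, coarse to fine.

    curve_of_layer(i, chosen) returns at least two RD points for layer i,
    sorted by increasing rate and starting at zero rate; `chosen` holds the
    indices already picked for layers 0..i-1, since each layer's curve
    depends on how well the previous layers were coded.
    """
    chosen: List[int] = []
    spent = 0.0
    for i in range(num_layers):
        curve = curve_of_layer(i, chosen)
        k = 0
        while k + 1 < len(curve) and spent + curve[k + 1][0] <= rate_budget:
            current = step_slope(curve[k], curve[k + 1])
            if i + 1 < num_layers:
                # Starting slope of the next layer if this layer stopped at k.
                nxt = curve_of_layer(i + 1, chosen + [k])
                if current <= step_slope(nxt[0], nxt[1]):
                    break  # slopes matched: switch to the next layer
            elif current <= 0:
                break  # last layer: stop once extra bits no longer help
            k += 1
        chosen.append(k)
        spent += curve[k][0]
    return chosen


def toy_curve(i: int, chosen: List[int]) -> List[RDPoint]:
    """Hypothetical RD model: each step halves the distortion above a floor.

    Layer i starts from the distortion left by layer i-1; a finer layer has
    4x more samples (so a step costs 4x more bits) and a lower floor.
    """
    floors = [40.0, 10.0, 0.0]
    start = 100.0 if i == 0 else toy_curve(i - 1, chosen[:-1])[chosen[-1]][1]
    bits_per_step = 4.0 ** i
    return [(k * bits_per_step, floors[i] + (start - floors[i]) * 0.5 ** k)
            for k in range(8)]


print(slope_matching(toy_curve, num_layers=3, rate_budget=100.0))  # [3, 4, 5]
```

With this toy model the coarse layer stops at index 3: its next step would remove 3.75 distortion units per bit, while the next layer would start at 4.6875 per bit, so the bits are better spent on the next layer.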

[1] Wang Y, Mei Z, Zhou Q, et al. Green image codec: a lightweight learning-based image coding method[C]//Applications of Digital Image Processing XLV. SPIE, 2022, 12226: 70-75.

[2] Wang Y, Mei Z, Katsavounidis [...]