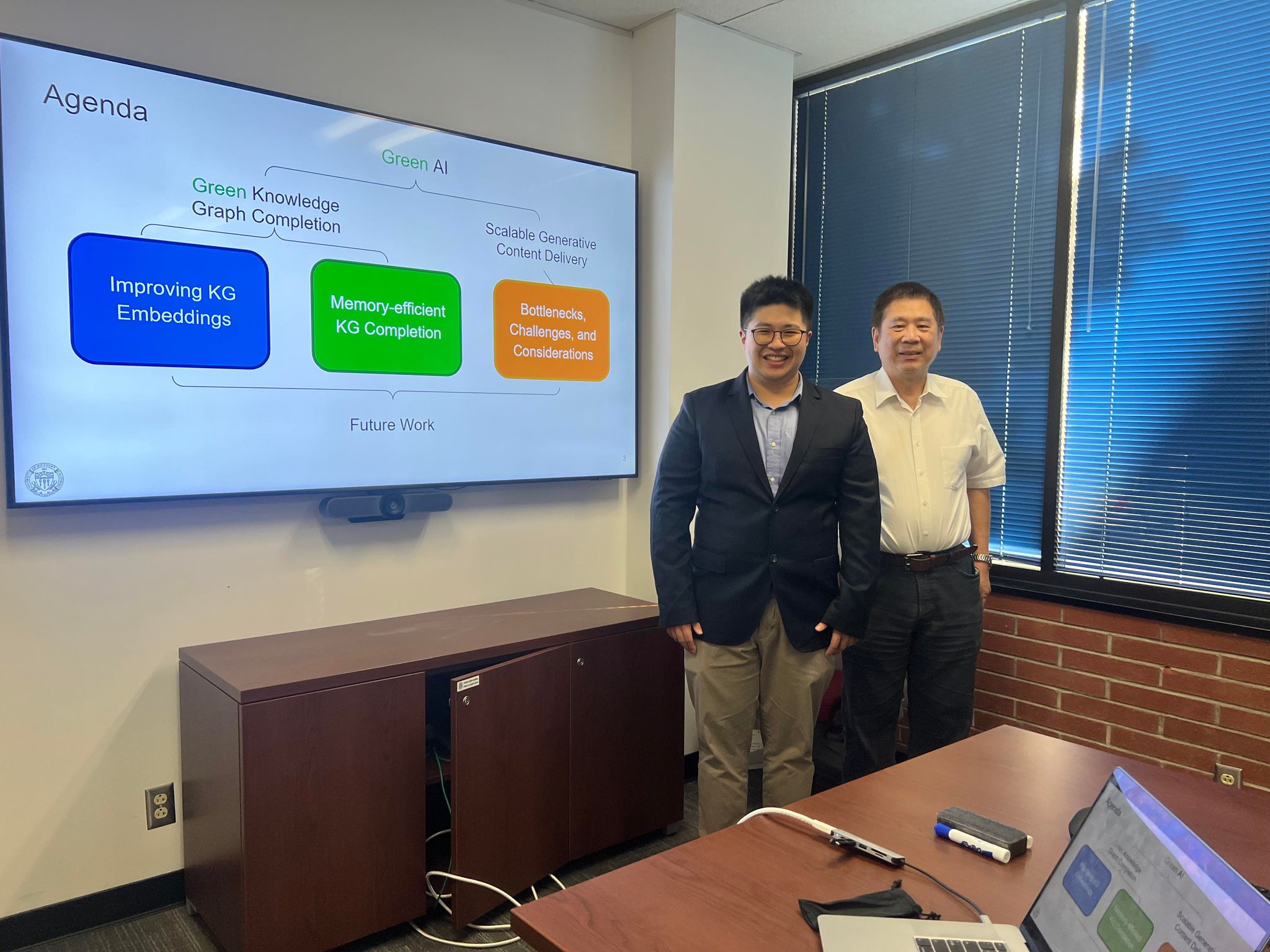

Congratulations to Joe Wang for Passing His Defense

Congratulations to Joe Wang for passing his defense. Joe’s thesis is titled “Green Knowledge Graph Completion and Scalable Generative Content Delivery.” His Dissertation Committee includes Jay Kuo (Chair), Antonio Ortega, and Robin Jia (Outside Member). The MCL News team invited Joe to give a short talk on his thesis and PhD experience, and here is the summary. We thank Joe for kindly sharing his experience and wish him all the best in his next journey.

Knowledge graphs (KGs) and Generative AI (GenAI) models have powerful reasoning capabilities and are crucial for building advanced artificial intelligence (AI) systems. In my thesis, we focus on four fundamental research problems to improve the efficiency, scalability, and explainability of existing methods. They are:

1. Improving KG Embeddings with Entity Types: Entity types describe the high-level taxonomy and categorization of entities in KGs, yet they are often ignored in KG embedding learning. We therefore propose a new methodology that incorporates entity types to improve KG embeddings. Specifically, our method represents entities and types in the same embedding space with only a constant number of additional model parameters. In addition, it offers a substantial advantage in computational efficiency during inference.

2. KG Completion with Classifiers: KG embeddings have limited expressiveness in modeling relations. Thus, we study using binary classifiers to represent relations in the KG completion task. Modeling missing links as a binary classification problem has several advantages, including access to more powerful classifiers and the ability to apply data augmentation.

3. Green KG Completion: KG completion methods often require high embedding dimensions to achieve good performance. Thus, we investigate applying feature transformation and univariate feature selection to reduce the feature dimensions in KG completion methods. The KGs are first partitioned into several groups to extract [...]
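Item 3 mentions univariate feature selection as a way to shrink feature dimensions. As a rough illustration only, the sketch below assumes a classifier-based setup in which each candidate triple is already encoded as a fixed-length feature vector with a binary label (1 for an observed triple, 0 for a corrupted one). Each dimension is scored on its own with an ANOVA F statistic, and only the top-k dimensions are kept. The scoring criterion, the helper names `f_scores` and `select_top_k`, and the synthetic data are all assumptions for illustration; they are not taken from the thesis, which may use a different selection criterion.

```python
import numpy as np


def f_scores(X, y):
    """Univariate ANOVA F statistic of each column of X against binary labels y.

    Each feature dimension is scored on its own, independently of the others.
    (Hypothetical helper; the thesis's actual criterion may differ.)
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    pos, neg = X[y == 1], X[y == 0]
    n_pos, n_neg = len(pos), len(neg)
    grand_mean = X.mean(axis=0)
    pos_mean, neg_mean = pos.mean(axis=0), neg.mean(axis=0)

    # Between-class sum of squares: 2 classes -> 1 degree of freedom.
    ss_between = n_pos * (pos_mean - grand_mean) ** 2 + n_neg * (neg_mean - grand_mean) ** 2
    # Within-class sum of squares: n - 2 degrees of freedom.
    ss_within = ((pos - pos_mean) ** 2).sum(axis=0) + ((neg - neg_mean) ** 2).sum(axis=0)
    df_within = n_pos + n_neg - 2

    # Small constant guards against division by zero for constant columns.
    return ss_between / (ss_within / df_within + 1e-12)


def select_top_k(X, y, k):
    """Keep the k dimensions with the highest F scores (hypothetical helper)."""
    scores = f_scores(X, y)
    keep = np.argsort(scores)[::-1][:k]
    return keep, np.asarray(X)[:, keep]


# Synthetic stand-in for triple features: 1000 samples, 32 dimensions,
# where only dimensions 3 and 7 carry label information.
rng = np.random.default_rng(0)
n, d = 1000, 32
y = rng.integers(0, 2, size=n)
X = rng.normal(size=(n, d))
X[:, 3] += 2.0 * y
X[:, 7] -= 1.5 * y

keep, X_small = select_top_k(X, y, k=2)
print("kept dimensions:", sorted(keep.tolist()), "new shape:", X_small.shape)
```

Because each dimension is scored independently, the cost grows linearly with the number of dimensions, which keeps univariate selection cheap compared with methods that search over feature subsets. In practice, scikit-learn's `SelectKBest` with `score_func=f_classif` computes the same ANOVA F criterion.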