MCL Research on Image Super-Resolution

Single Image Super-Resolution (SISR) is a classic problem in image processing that entails reconstructing a high-resolution (HR) image from a low-resolution (LR) input. Since the introduction of deep learning (DL) approaches, end-to-end methods have become the dominant paradigm in SISR. However, their performance gains typically come at the expense of increased model complexity and a substantial growth in parameter count.

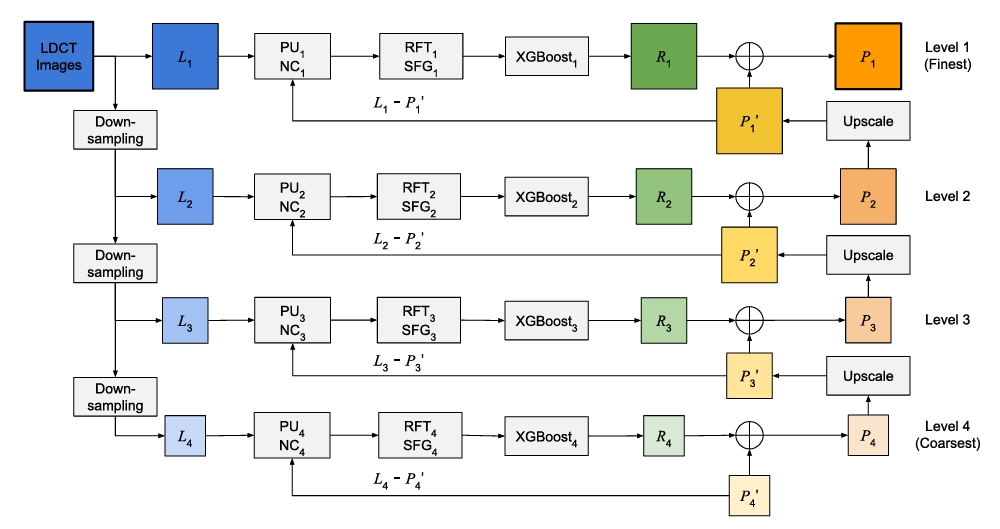

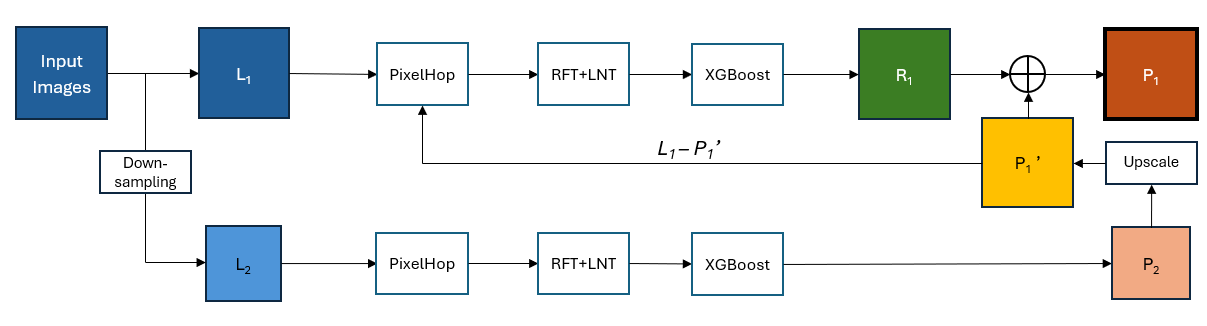

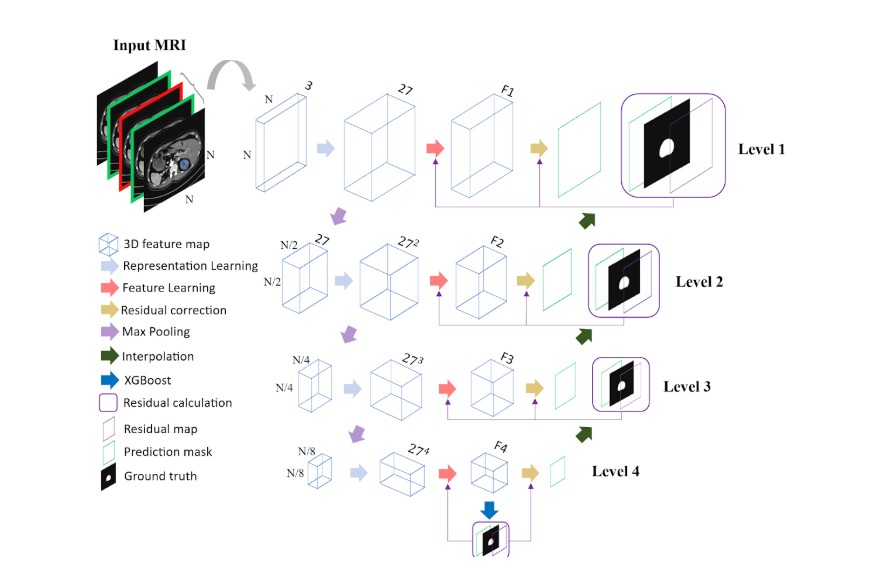

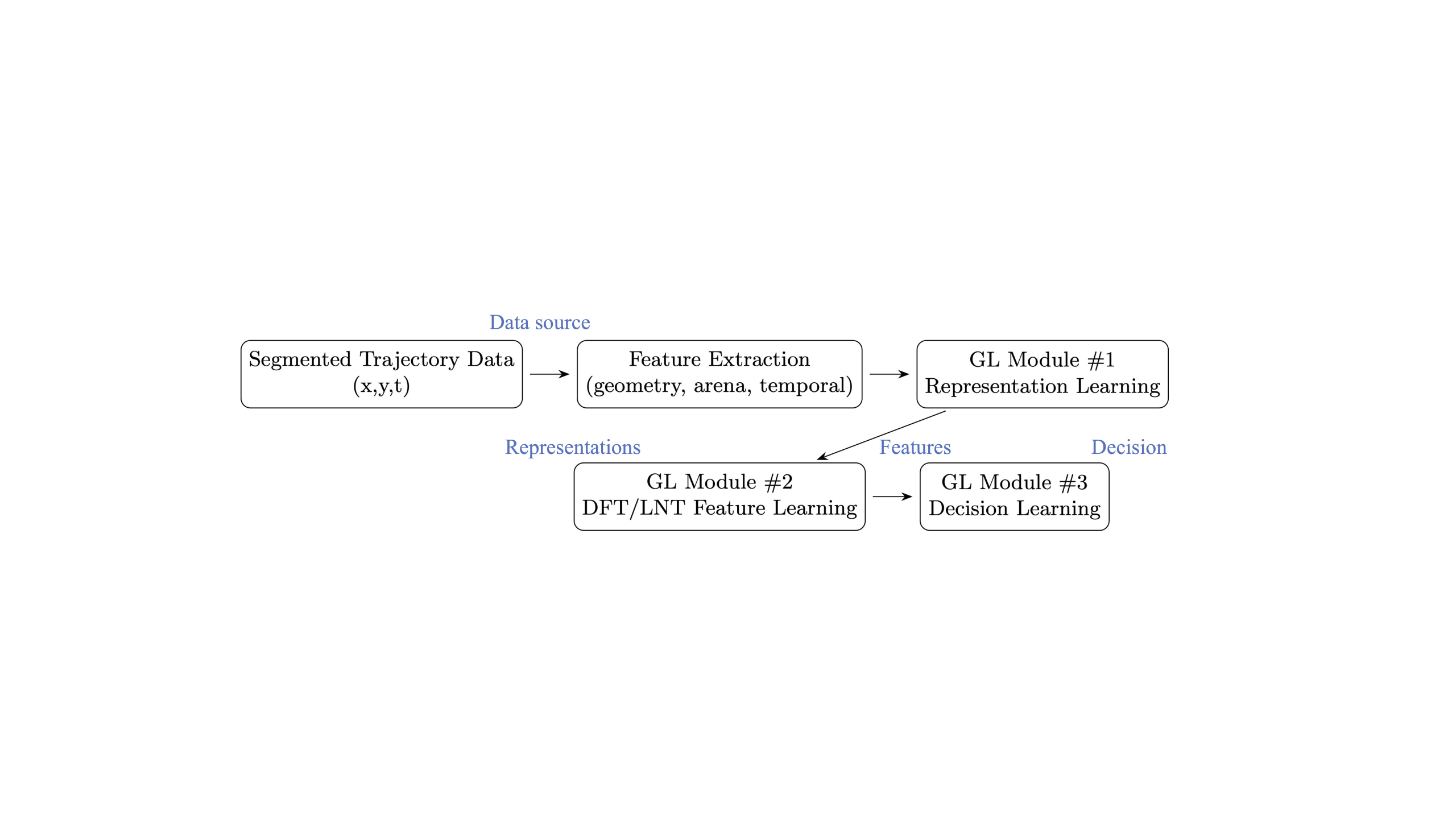

To address these computational and interpretability challenges, we propose Green U-Shaped Learning (GUSL). The method departs from the black-box nature of deep neural networks by establishing a transparent, multi-stage pipeline. The framework applies residual correction across multiple resolution levels in a U-shaped structure: global structural information is captured at lower resolutions, and high-frequency local details are refined at higher resolutions. This progressive approach regularizes the ill-posed super-resolution problem stage by stage, and it significantly reduces the parameter count and training footprint compared to conventional deep learning models.
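The coarse-to-fine residual correction described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the GUSL implementation: the ridge regressor on 3x3 patches, the average-pooling `downsample`, the nearest-neighbour `upsample`, and every function name here are hypothetical choices made for brevity.

```python
import numpy as np

def downsample(img, factor=2):
    """Average-pool by `factor` (image sides must be divisible by it)."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample(img, factor=2):
    """Nearest-neighbour upsampling, a stand-in for any interpolator."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)

def patch_features(img):
    """Return an (H*W, 10) matrix: edge-padded 3x3 neighbourhoods plus a bias."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    cols = [p[i:i + h, j:j + w].ravel() for i in range(3) for j in range(3)]
    cols.append(np.ones(h * w))
    return np.stack(cols, axis=1)

def fit_stage(coarse_up, target, lam=1e-3):
    """Ridge regression from patch features to the residual target - coarse_up."""
    X = patch_features(coarse_up)
    y = (target - coarse_up).ravel()
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def apply_stage(coarse_up, w):
    """Add the predicted residual to the upsampled coarse estimate."""
    return coarse_up + (patch_features(coarse_up) @ w).reshape(coarse_up.shape)

def mse(a, b):
    return float(np.mean((a - b) ** 2))

def train_pyramid(hr, levels=2):
    """Fit one residual stage per resolution level, coarsest first."""
    targets = [hr]
    for _ in range(levels):
        targets.append(downsample(targets[-1]))
    targets = targets[::-1]      # coarsest first
    estimate = targets[0]        # plays the role of the LR input
    stages, log = [], []
    for target in targets[1:]:
        up = upsample(estimate)
        w = fit_stage(up, target)
        estimate = apply_stage(up, w)
        stages.append(w)
        log.append((mse(up, target), mse(estimate, target)))  # before, after
    return estimate, stages, log

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hr = rng.random((32, 32))
    est, stages, log = train_pyramid(hr, levels=2)
    for level, (before, after) in enumerate(log, start=1):
        print(f"stage {level}: MSE before={before:.5f}, after={after:.5f}")
```

Each stage only has to model what the previous, coarser stage missed, which is why a small regressor per level can suffice in this toy setting. The per-stage parameter count here is ten weights; a real pipeline would use richer features and a stronger regressor.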

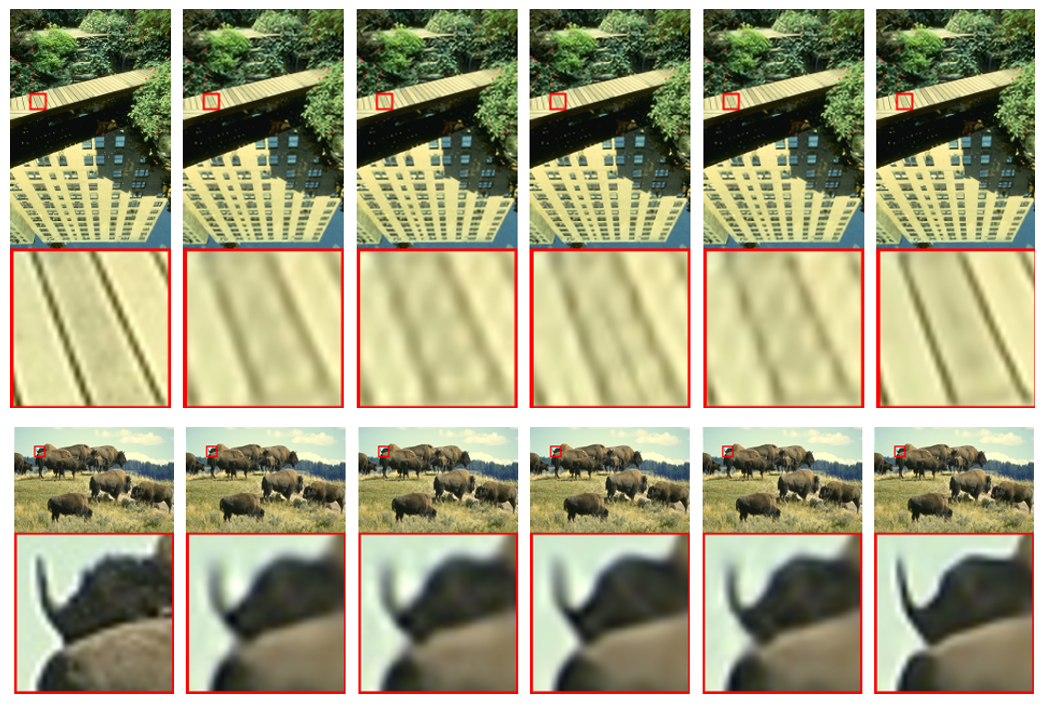

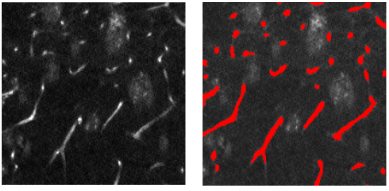

Furthermore, the SISR task is spatially heterogeneous: content varies significantly between smooth, homogeneous backgrounds (“Easy” samples) and complex, high-frequency textures (“Hard” samples). To tackle this, we adopt a divide-and-conquer strategy that explicitly distinguishes between the two categories and handles their reconstruction separately. This focuses computational resources and modeling capacity on regions with high residual errors, while preventing the over-processing of simple areas.
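The Easy/Hard split can be illustrated with a small routing sketch. The criterion used here, per-block variance against a fixed `threshold`, is only an assumed proxy for "high residual error"; the block size, the threshold value, and the names `split_easy_hard` and `route_blocks` are likewise hypothetical and not taken from the method itself.

```python
import numpy as np

def block_variance(img, block=4):
    """Variance of each non-overlapping block (sides must divide evenly)."""
    h, w = img.shape
    blocks = img.reshape(h // block, block, w // block, block)
    return blocks.var(axis=(1, 3))

def split_easy_hard(img, block=4, threshold=1e-3):
    """Boolean map per block: True marks a Hard (textured) block."""
    return block_variance(img, block) > threshold

def route_blocks(img, hard_fn, block=4, threshold=1e-3):
    """Apply `hard_fn` only to Hard blocks; Easy blocks pass through unchanged."""
    out = img.copy()
    hard = split_easy_hard(img, block, threshold)
    for i, j in zip(*np.nonzero(hard)):
        rows = slice(i * block, (i + 1) * block)
        cols = slice(j * block, (j + 1) * block)
        out[rows, cols] = hard_fn(img[rows, cols])
    return out, hard

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = np.full((8, 8), 0.5)
    img[:, 4:] = rng.random((8, 4))   # right half is textured
    refined, hard = route_blocks(img, hard_fn=lambda b: b - b.mean())
    print(hard)                        # which blocks were routed to hard_fn
```

Because the expensive function runs only on blocks flagged as Hard, the cost scales with the amount of texture in the image rather than with its total area.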