Image super-resolution (SR) is a classic image reconstruction problem in computer vision (CV) that aims to recover a high-resolution (HR) image from a low-resolution (LR) image. As a supervised generative problem, image SR attracts wide attention because of its strong connection with other CV topics, such as object recognition, object alignment, and texture synthesis. It also has extensive real-world applications, for example in medical diagnosis, remote sensing, and biometric identification.
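To make the problem statement concrete, the sketch below simulates an LR observation from an HR image and shows why recovery is ill-posed: different HR images can produce the same LR image. Block averaging is an assumed degradation model (the text does not specify one), and `downsample` and `upsample_nearest` are hypothetical helper names used only for illustration.

```python
import numpy as np

def downsample(hr: np.ndarray, scale: int) -> np.ndarray:
    """Simulate an LR observation by averaging non-overlapping scale x scale blocks.

    Assumption: block averaging is only an illustrative degradation model.
    """
    h, w = hr.shape
    h, w = h - h % scale, w - w % scale          # crop so the blocks tile the image exactly
    return hr[:h, :w].reshape(h // scale, scale, w // scale, scale).mean(axis=(1, 3))

def upsample_nearest(lr: np.ndarray, scale: int) -> np.ndarray:
    """Naive baseline estimate: repeat each LR pixel into a scale x scale block."""
    return np.repeat(np.repeat(lr, scale, axis=0), scale, axis=1)

rng = np.random.default_rng(0)
hr = rng.random((8, 8))
# Adding a checkerboard that sums to zero inside every 2x2 block changes the HR image
# but not its block averages, so two different HR images give the same LR image.
i, j = np.indices(hr.shape)
hr_other = hr + 0.1 * (-1.0) ** (i + j)
assert np.allclose(downsample(hr, 2), downsample(hr_other, 2))
estimate = upsample_nearest(downsample(hr, 2), 2)   # same shape as hr: (8, 8)
```

Because the mapping from HR to LR discards information, an SR method has to bring in prior knowledge, which is why the problem is usually treated as supervised learning from LR/HR pairs rather than as simple interpolation.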

State-of-the-art SR approaches fall into two main streams: 1) example-based learning methods and 2) deep learning (CNN-based) methods. Example-based methods either exploit external low-/high-resolution exemplar pairs [1] or learn the internal similarity of the same image across different resolution scales [2]. However, the features used in example-based methods are usually traditional gradient-based or handcrafted ones, which may limit model performance. CNN-based SR methods (e.g., SRCNN [3]), by contrast, do not explicitly distinguish between feature extraction and decision making. Many basic CNN models and building blocks, such as GANs, residual learning, and attention networks, have been applied to the SR problem and deliver superior SR results. Nevertheless, their lack of explainability and high training cost are serious drawbacks of CNN-based methods.
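The external-exemplar idea can be illustrated with a minimal nearest-neighbor sketch. This is not the method of [1]: it assumes a block-averaging degradation, non-overlapping patches, and matching on mean-removed raw pixels, and every function name here is hypothetical.

```python
import numpy as np

def downsample(hr, scale):
    """Block-average degradation (assumption): each LR pixel is the mean of a scale x scale HR block."""
    h, w = hr.shape
    h, w = h - h % scale, w - w % scale
    return hr[:h, :w].reshape(h // scale, scale, w // scale, scale).mean(axis=(1, 3))

def to_patches(img, size):
    """Split img into non-overlapping size x size patches, each flattened row by row."""
    gh, gw = img.shape[0] // size, img.shape[1] // size
    p = img[:gh * size, :gw * size].reshape(gh, size, gw, size).transpose(0, 2, 1, 3)
    return p.reshape(gh * gw, size * size), (gh, gw)

def from_patches(patches, grid, size):
    """Inverse of to_patches: tile flattened patches back into an image."""
    gh, gw = grid
    return patches.reshape(gh, gw, size, size).transpose(0, 2, 1, 3).reshape(gh * size, gw * size)

def build_exemplars(hr_images, scale, lr_patch):
    """Collect aligned (LR patch, HR patch) exemplar pairs from external training images."""
    lr_list, hr_list = [], []
    for hr in hr_images:
        lr_p, _ = to_patches(downsample(hr, scale), lr_patch)
        hr_p, _ = to_patches(hr, lr_patch * scale)   # same patch grid, so rows stay aligned
        lr_list.append(lr_p)
        hr_list.append(hr_p)
    return np.vstack(lr_list), np.vstack(hr_list)

def example_based_sr(lr, lr_dict, hr_dict, scale, lr_patch):
    """Replace each LR patch by the HR partner of its nearest exemplar (mean-removed L2)."""
    q, grid = to_patches(lr, lr_patch)
    q_mean = q.mean(axis=1, keepdims=True)
    d_centered = lr_dict - lr_dict.mean(axis=1, keepdims=True)
    # Brute-force squared distances, shape (n_queries, n_exemplars); fine for small dictionaries.
    dist = (((q - q_mean)[:, None, :] - d_centered[None, :, :]) ** 2).sum(axis=2)
    match = hr_dict[dist.argmin(axis=1)]
    # Keep the exemplar's HR detail but restore the query patch's own mean intensity.
    hr_p = match - match.mean(axis=1, keepdims=True) + q_mean
    return from_patches(hr_p, grid, lr_patch * scale)
```

Matching here is done on raw pixels; the quality of such a method depends heavily on the feature used for matching, which is exactly the weakness of handcrafted features noted above.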

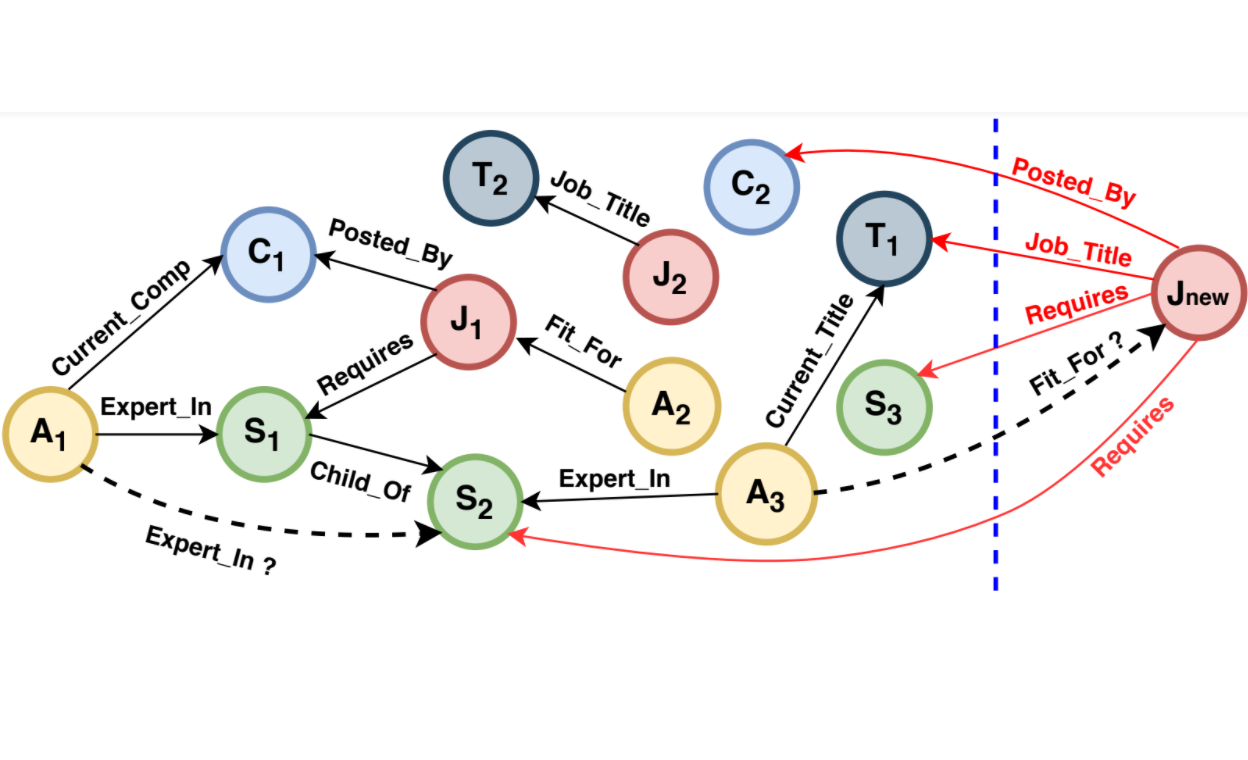

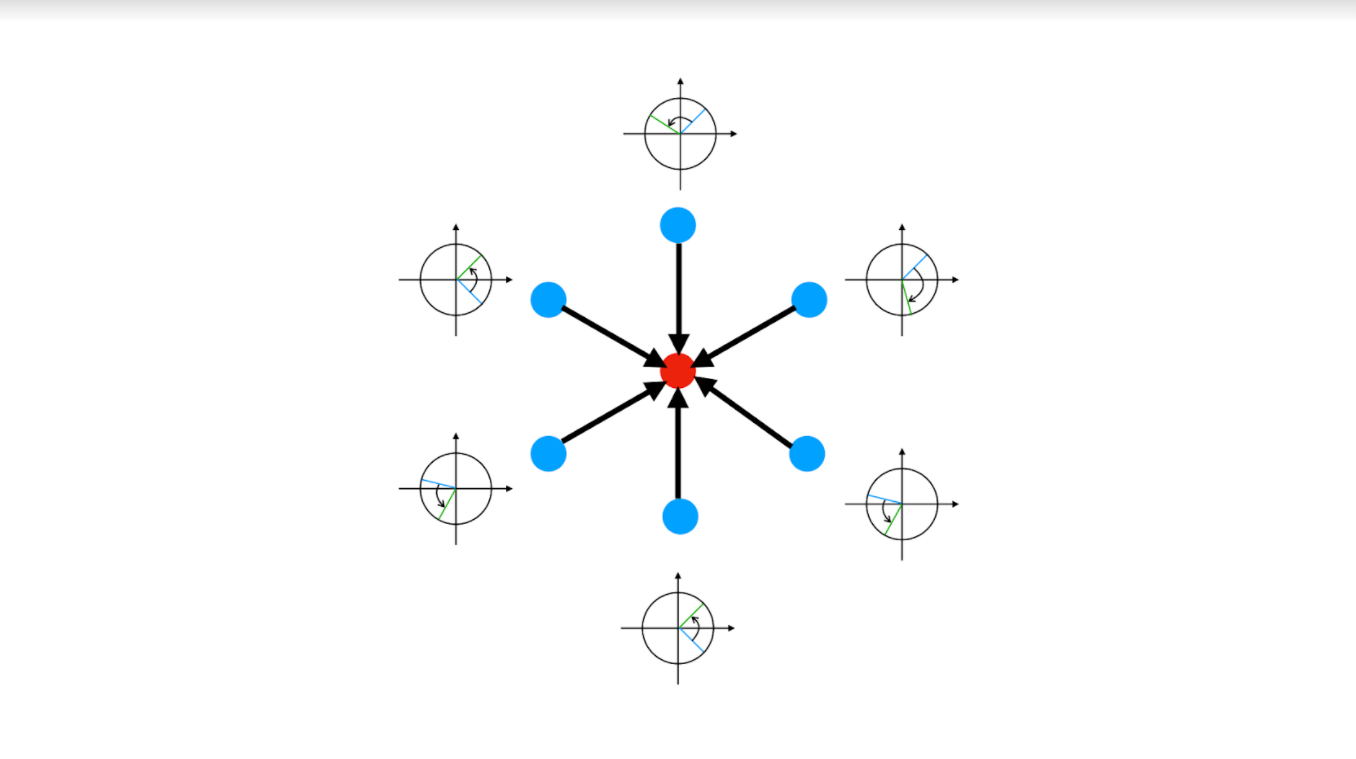

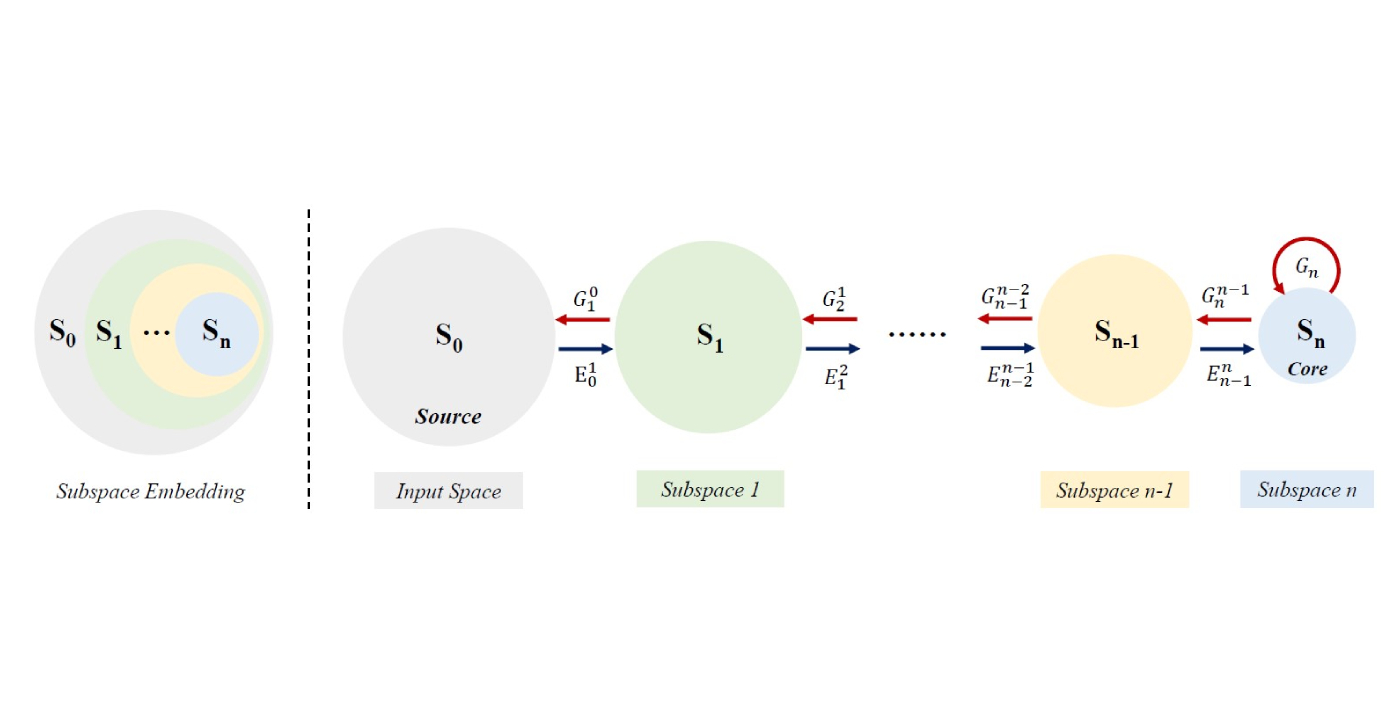

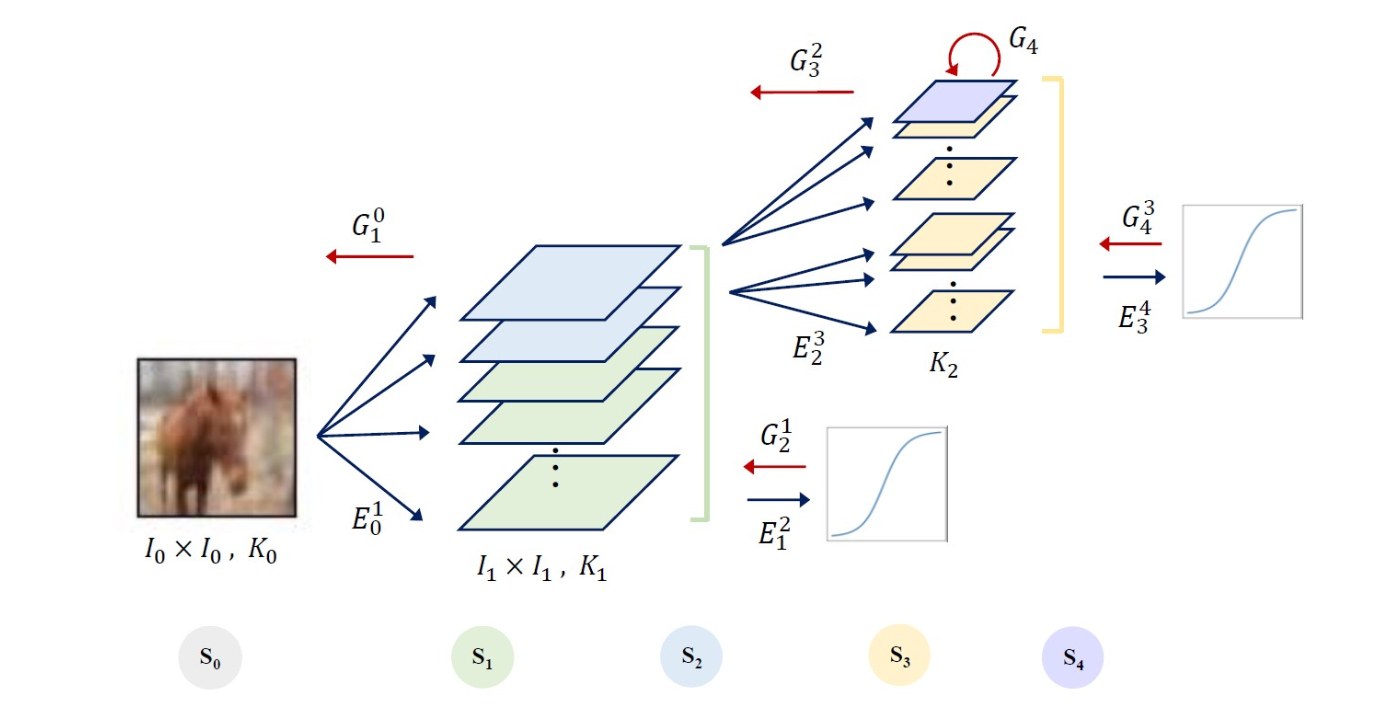

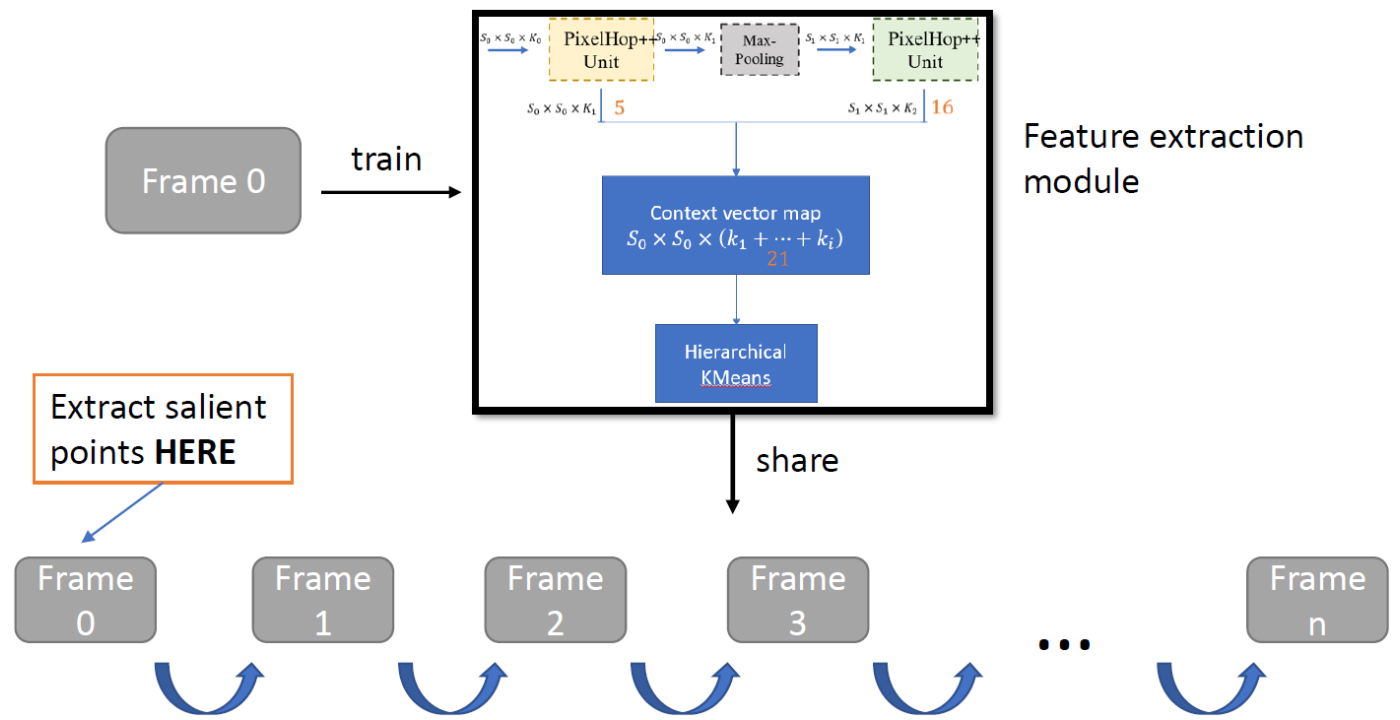

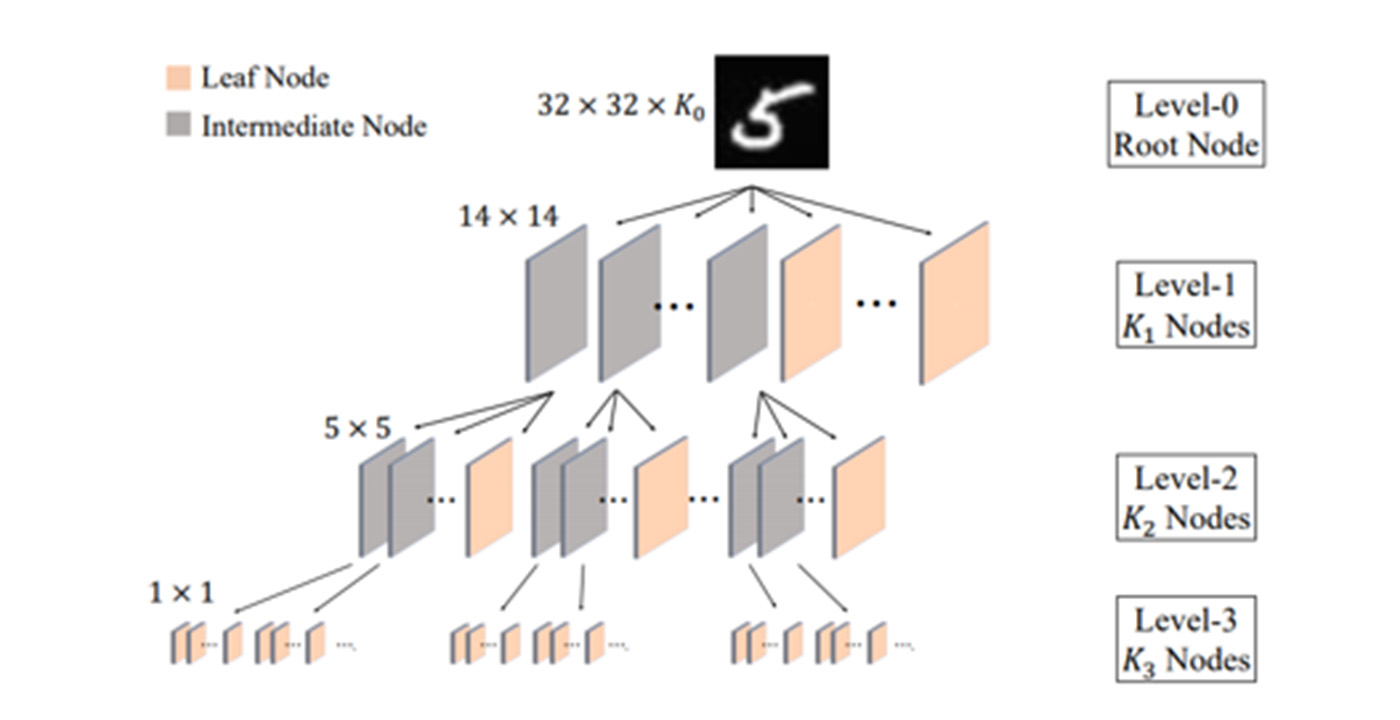

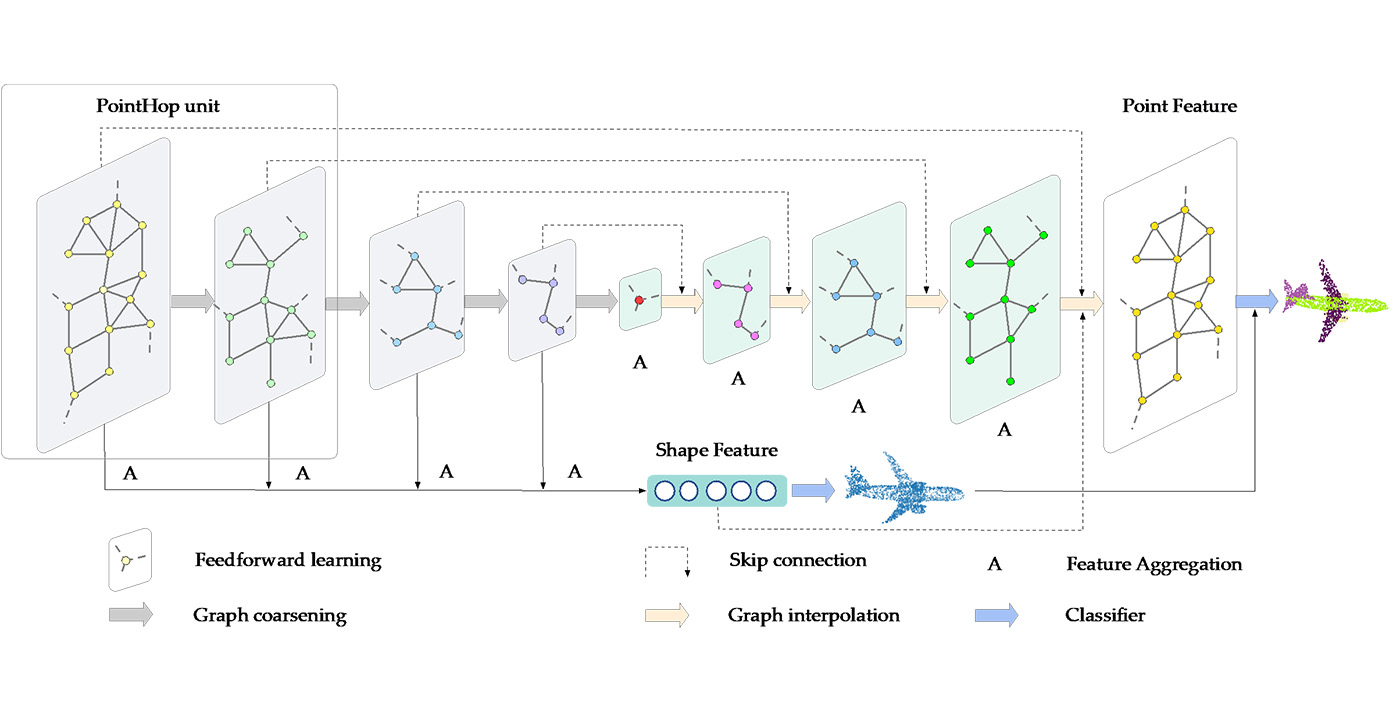

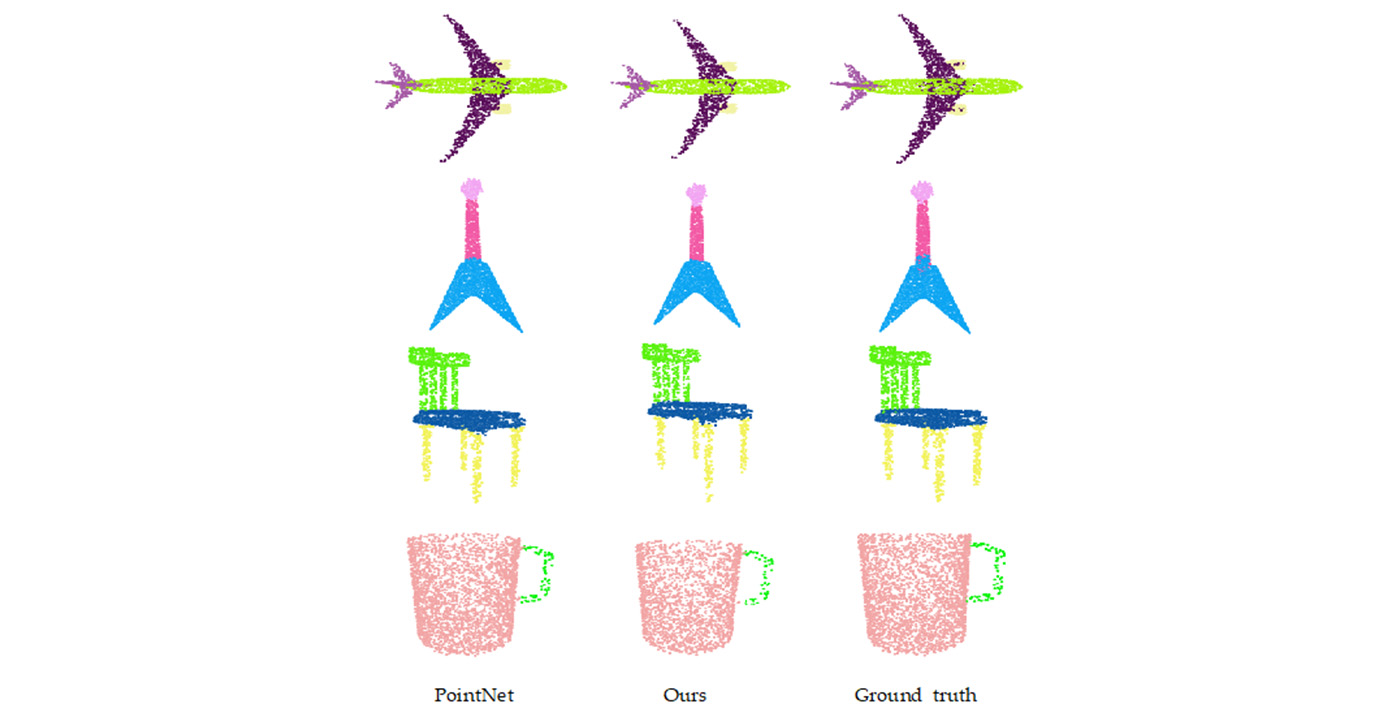

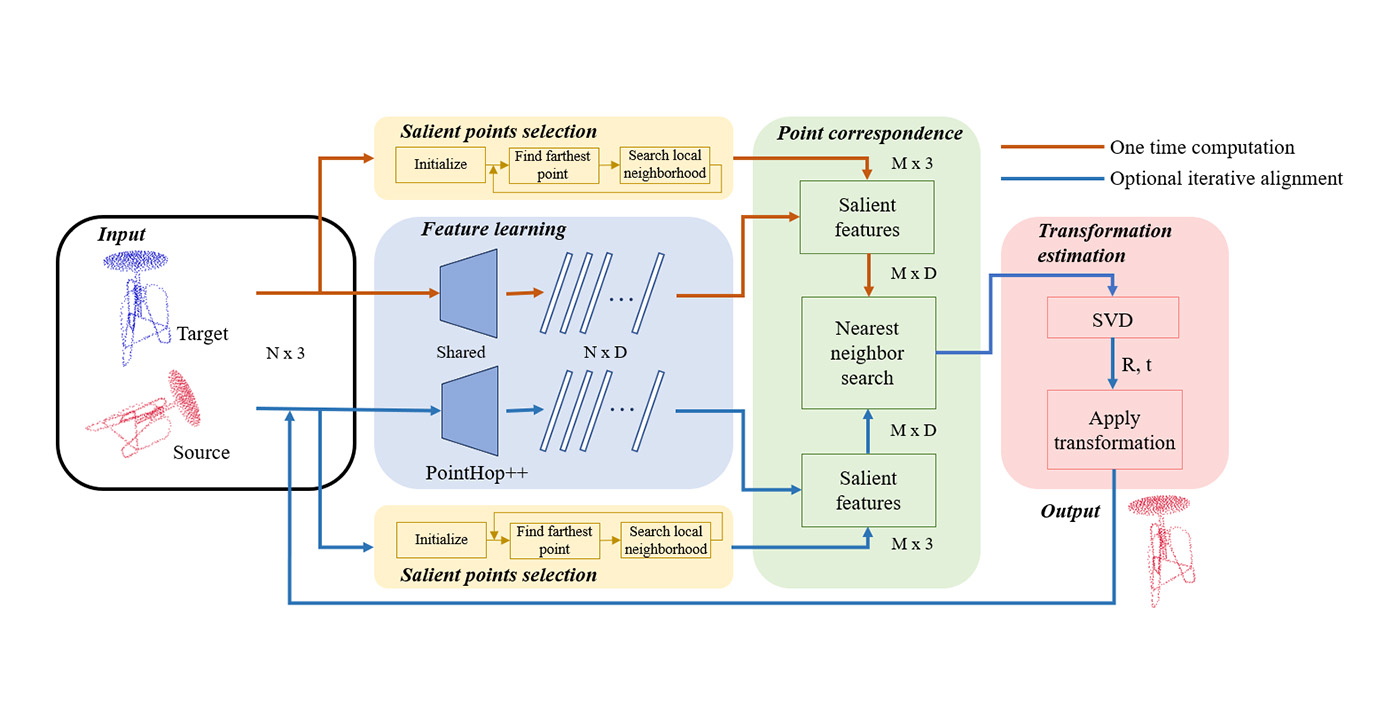

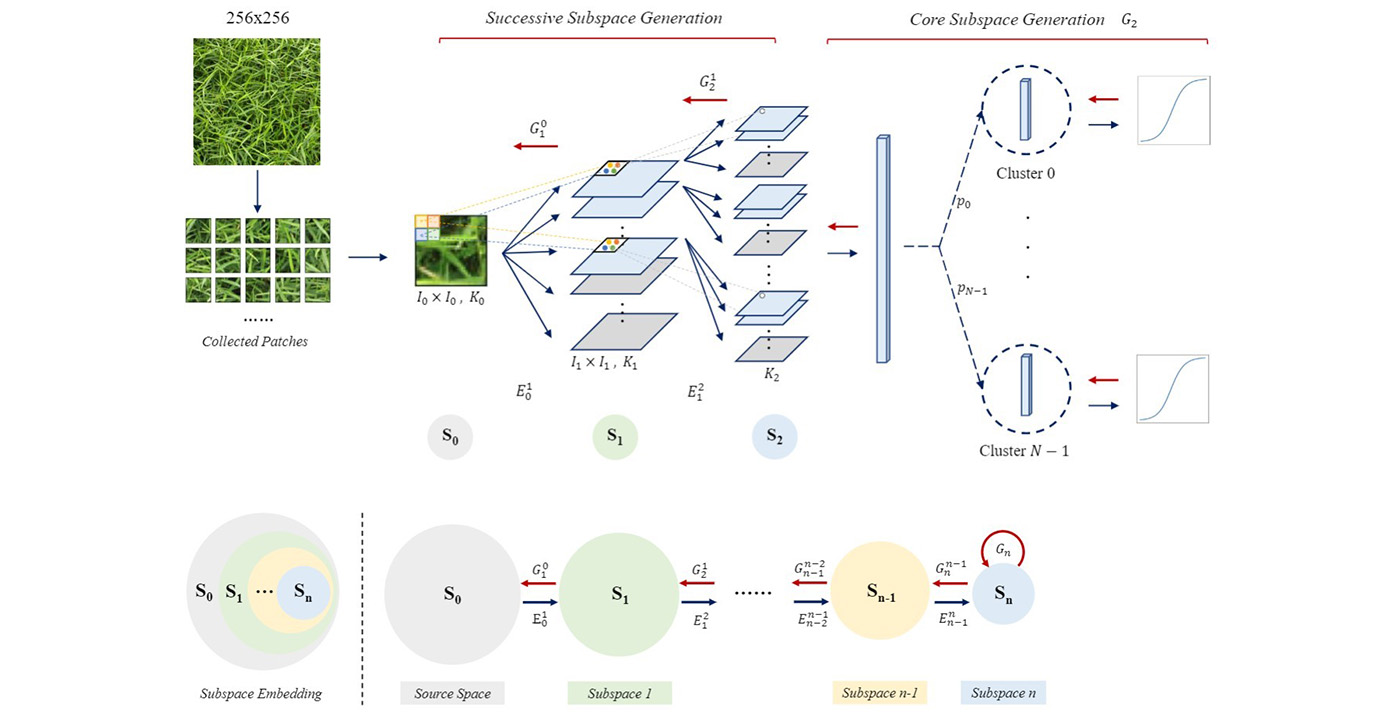

By taking advantage of a reasonable feature extraction method [4], we use spatial-spectral compatible cw-Saab features to represent exemplar pairs. In addition, we formulate a Successive-Subspace-Learning-based (SSL-based) method that gradually partitions the data into subspaces according to feature statistics and applies regression in each subspace for better local approximation. By visualizing the samples in representative subspaces, we find obvious sample similarity in the pixel domain. This demonstrates the effectiveness of our method in splitting samples into semantically meaningful subspaces. In the future, we aim to provide such an SSL-based explainable method with high efficiency for the SR problem.
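The pipeline described above (Saab-type features, successive partitioning into subspaces, regression per subspace) can be sketched as follows. This is a simplified, hypothetical illustration, not the author's implementation: `fit_saab` computes a single-stage Saab transform (a constant DC kernel plus PCA-derived AC kernels) and omits the bias term and the channel-wise, multi-hop structure of cw-Saab. The split rule (median threshold on the highest-variance feature) and the ridge regressor are assumptions, since the text does not specify the partition criterion or the regression model.

```python
import numpy as np

def fit_saab(X, n_ac):
    """Simplified single-stage Saab kernels (assumption: no bias term, no channel-wise hops).

    Row 0 is the DC kernel (constant vector); rows 1..n_ac are PCA directions of the
    DC-removed samples, i.e. the AC kernels. Requires n_ac <= X.shape[1] - 1.
    """
    d = X.shape[1]
    dc = np.ones(d) / np.sqrt(d)
    ac_part = X - np.outer(X @ dc, dc)               # strip each sample's DC component
    ac_part = ac_part - ac_part.mean(axis=0)         # center before PCA
    _, _, vt = np.linalg.svd(ac_part, full_matrices=False)
    return np.vstack([dc, vt[:n_ac]])                # kernels as rows, shape (1 + n_ac, d)

def fit_ridge(F, Y, lam):
    """Least-squares map F -> Y with an appended bias column and a small ridge penalty."""
    A = np.hstack([F, np.ones((len(F), 1))])
    return np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ Y)

def build_tree(F, Y, depth, min_leaf=10, lam=1e-6):
    """Successively split samples into subspaces, then regress inside each leaf.

    Hypothetical split rule: threshold the highest-variance feature at its median.
    """
    if depth > 0 and len(F) >= 2 * min_leaf:
        k = int(np.argmax(F.var(axis=0)))
        t = float(np.median(F[:, k]))
        left = F[:, k] <= t
        if min(left.sum(), (~left).sum()) >= min_leaf:
            return ("split", k, t,
                    build_tree(F[left], Y[left], depth - 1, min_leaf, lam),
                    build_tree(F[~left], Y[~left], depth - 1, min_leaf, lam))
    return ("leaf", fit_ridge(F, Y, lam))

def predict(node, F):
    """Route each sample to its leaf subspace and apply that leaf's regressor."""
    if node[0] == "leaf":
        return np.hstack([F, np.ones((len(F), 1))]) @ node[1]
    _, k, t, lo, hi = node
    left = F[:, k] <= t
    pred_lo, pred_hi = predict(lo, F[left]), predict(hi, F[~left])
    out = np.empty((len(F), pred_lo.shape[1]))
    out[left], out[~left] = pred_lo, pred_hi
    return out

# Synthetic stand-in data (assumption): rows of X play the role of flattened 2x2 LR
# patches and rows of Y the matching 4x4 HR patches.
rng = np.random.default_rng(0)
X = rng.random((400, 4))
Y = np.abs(X - 0.5) @ rng.random((4, 16))
K = fit_saab(X, n_ac=3)
F = X @ K.T                                          # Saab coefficients used as features
tree = build_tree(F, Y, depth=3)
train_mse = np.mean((predict(tree, F) - Y) ** 2)
```

On training data, a partitioned fit can only match or beat a single global linear fit (up to the small ridge term), because the global regressor is also available to every leaf; whether it generalizes better depends on the leaf size and on the split criterion, which is where the choice of feature statistics matters.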

— By Wei Wang

References:

[1] Timofte, Radu, [...]