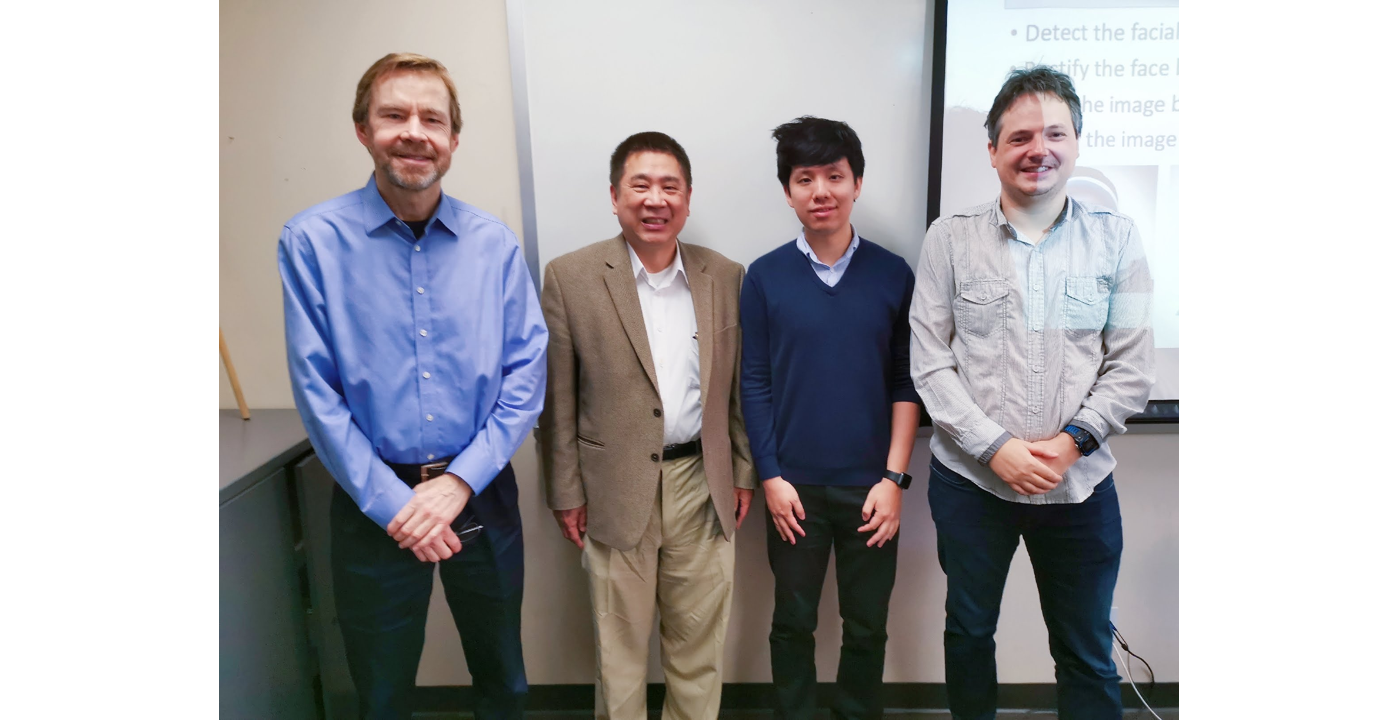

Congratulations to Harry Yang for Passing His Defense!

Congratulations to Harry Yang for passing his defense on May 7, 2019! Below, he shares an abstract of his thesis and a few words about his defense.

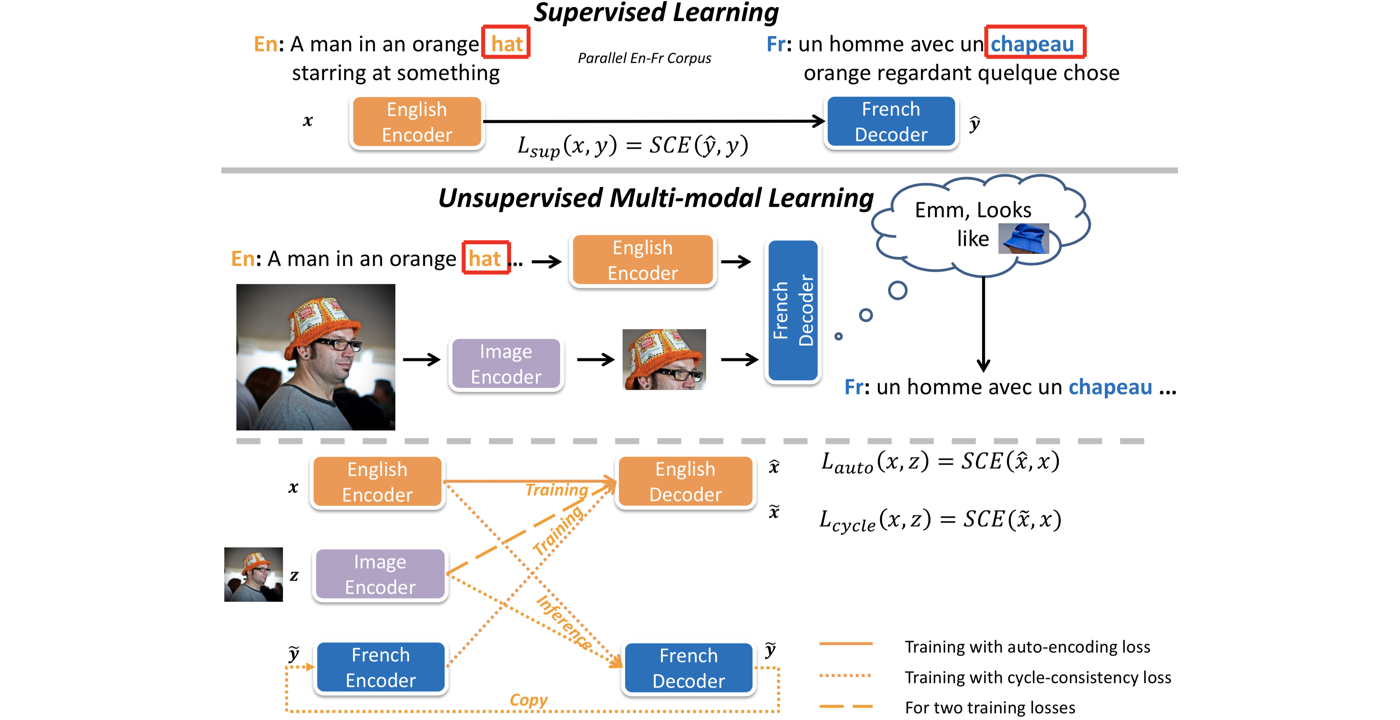

“In the thesis, we tackle the problem of translating faces and bodies between different identities without paired training data: we cannot directly train a translation module using supervised signals in this case. Instead, we propose to train a conditional variational auto-encoder (CVAE) to disentangle different latent factors such as identity and expressions. In order to achieve effective disentanglement, we further use multi-view information such as keypoints and facial landmarks to train multiple CVAEs. By relying on these simplified representations of the data, we use a more easily disentangled representation to guide the disentanglement of the image itself. Experiments demonstrate the effectiveness of our method on multiple face and body datasets. We also show that our model is a more robust image classifier and adversarial example detector compared with traditional multi-class neural networks.

“To address the issue of scaling to new identities and also generate better-quality results, we further propose an alternative approach that uses self-supervised learning based on StyleGAN to factorize out different attributes of face images, such as hair color, facial expressions, skin color, and others. Using a pre-trained StyleGAN combined with iterative style inference, we can easily manipulate facial expressions or combine the facial expressions of any two people, without needing to train a new model for each identity involved. This is one of the first scalable and high-quality approaches for generating DeepFake data, which serves as a critical first step toward learning a more robust and general classifier against adversarial examples.”

Harry also shared his thoughts on his Ph.D. experience:

“Firstly, I would [...]