MCL Research on Green Blind Image Quality Assessment

Image quality assessment (IQA) aims to evaluate image quality at various stages of image processing, such as acquisition, transmission, and compression. Based on the availability of undistorted reference images, objective IQA methods fall into three categories [1]: full-reference (FR), reduced-reference (RR), and no-reference (NR). The last is also known as blind IQA (BIQA). FR-IQA metrics have achieved high consistency with human subjective evaluation, and many FR-IQA methods, such as SSIM [2] and FSIM [3], have been developed over the last two decades. RR-IQA metrics use only features extracted from reference images, rather than the full images, for quality evaluation. In some application scenarios (e.g., at image receivers), users cannot access reference images, so NR-IQA is the only choice. BIQA methods have therefore attracted growing attention in recent years.

Generally speaking, conventional BIQA methods consist of two stages: 1) extraction of quality-aware features and 2) adoption of a regression model for quality score prediction. As the amount of user-generated images grows rapidly, handcrafted feature extraction is limited in its power to model a wide range of image content and distortion characteristics. Deep neural networks (DNNs) with large pre-trained models have achieved great success in BIQA in recent years. However, these solutions cannot be easily deployed on mobile or edge devices, so a lightweight solution is desired.
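To make the two-stage structure of conventional BIQA concrete, here is a minimal Python sketch, assuming NumPy is available. Everything in it is illustrative: the four toy features, the ridge regressor, the synthetic training data, and the names `extract_features`, `fit_regressor`, `predict_quality`, and `make_toy_dataset` are assumptions made for this example and are not taken from any published BIQA method.

```python
import numpy as np


def extract_features(img):
    """Stage 1: compute a few toy quality-aware features from a 2-D grayscale array."""
    img = np.asarray(img, dtype=np.float64)
    gx = np.diff(img, axis=1)  # horizontal neighbor differences
    gy = np.diff(img, axis=0)  # vertical neighbor differences
    return np.array([
        img.mean(),         # average luminance
        img.std(),          # global contrast
        np.abs(gx).mean(),  # horizontal activity (rises with additive noise)
        np.abs(gy).mean(),  # vertical activity
    ])


def fit_regressor(features, scores, ridge=1e-3):
    """Stage 2: fit a ridge regressor mapping features to subjective scores."""
    X = np.hstack([features, np.ones((features.shape[0], 1))])  # append bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ scores)


def predict_quality(weights, img):
    """Predict a quality score for one image with the fitted weights."""
    f = np.append(extract_features(img), 1.0)
    return float(f @ weights)


def make_toy_dataset(n=20, size=32, seed=0):
    """Hypothetical training set: one smooth image corrupted by increasing noise.

    The scores are made up for illustration (100 for clean, 0 for the noisiest).
    """
    rng = np.random.default_rng(seed)
    base = np.outer(np.linspace(0, 1, size), np.linspace(0, 1, size))
    sigmas = np.linspace(0.0, 0.5, n)
    feats = np.stack([
        extract_features(base + s * rng.standard_normal(base.shape))
        for s in sigmas
    ])
    scores = 100.0 * (1.0 - sigmas / sigmas.max())
    return feats, scores


features, scores = make_toy_dataset()
weights = fit_regressor(features, scores)
```

In practice, the feature extractor would be far richer and the regressor would be trained on human opinion scores from an IQA dataset. The sketch only shows how the two stages connect: features are computed per image, and a regressor learned once on labeled data maps them to a predicted score.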

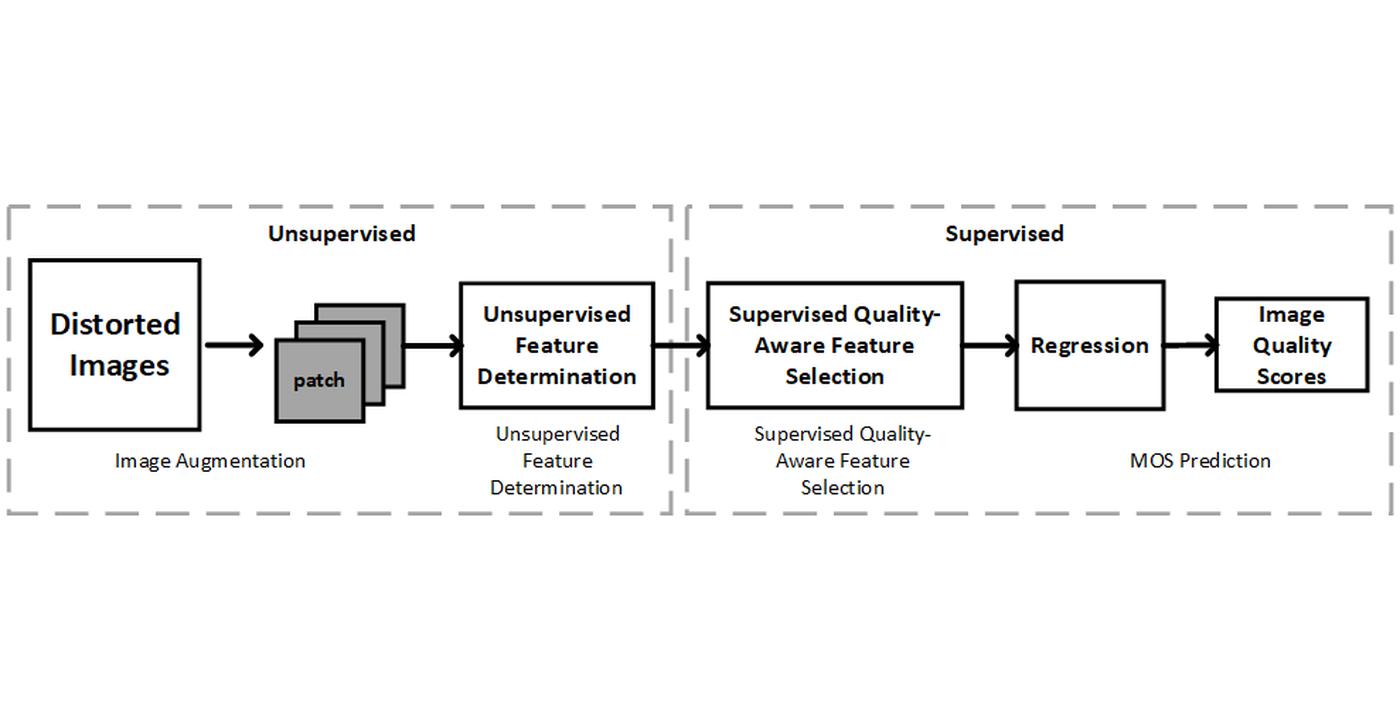

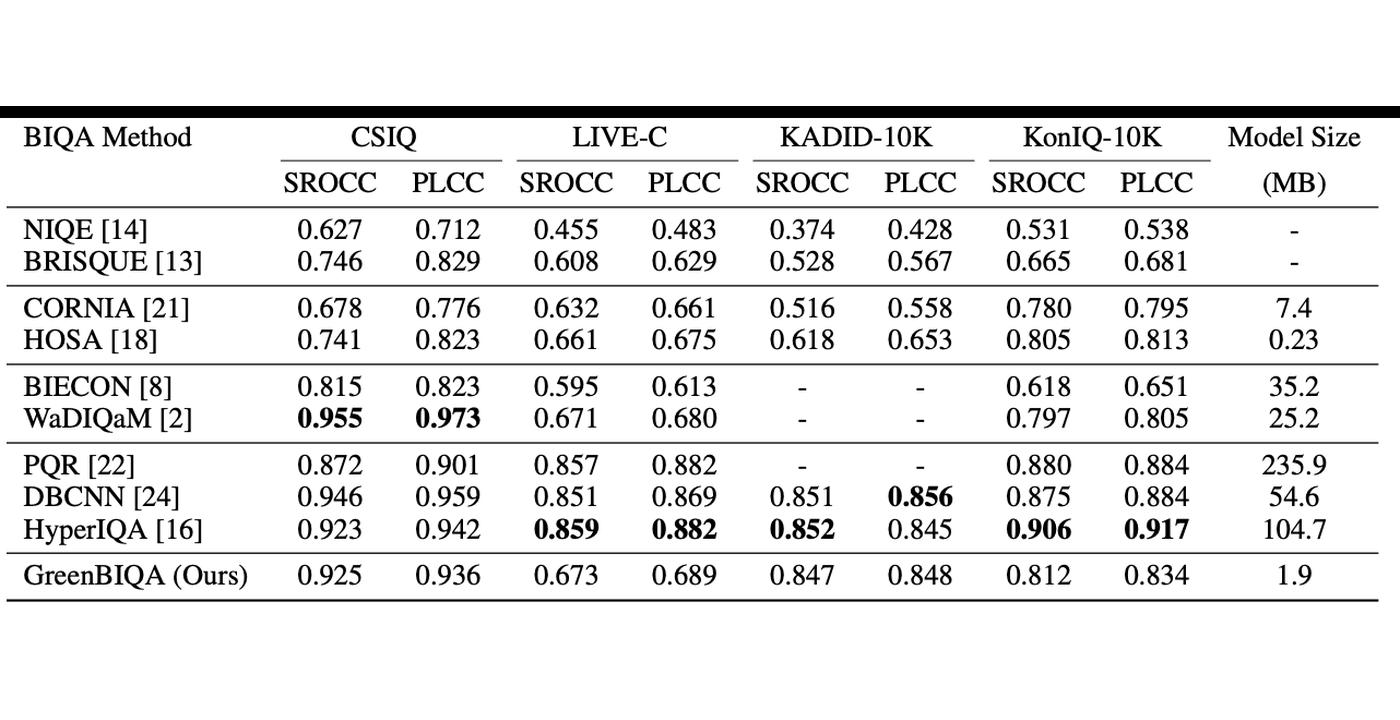

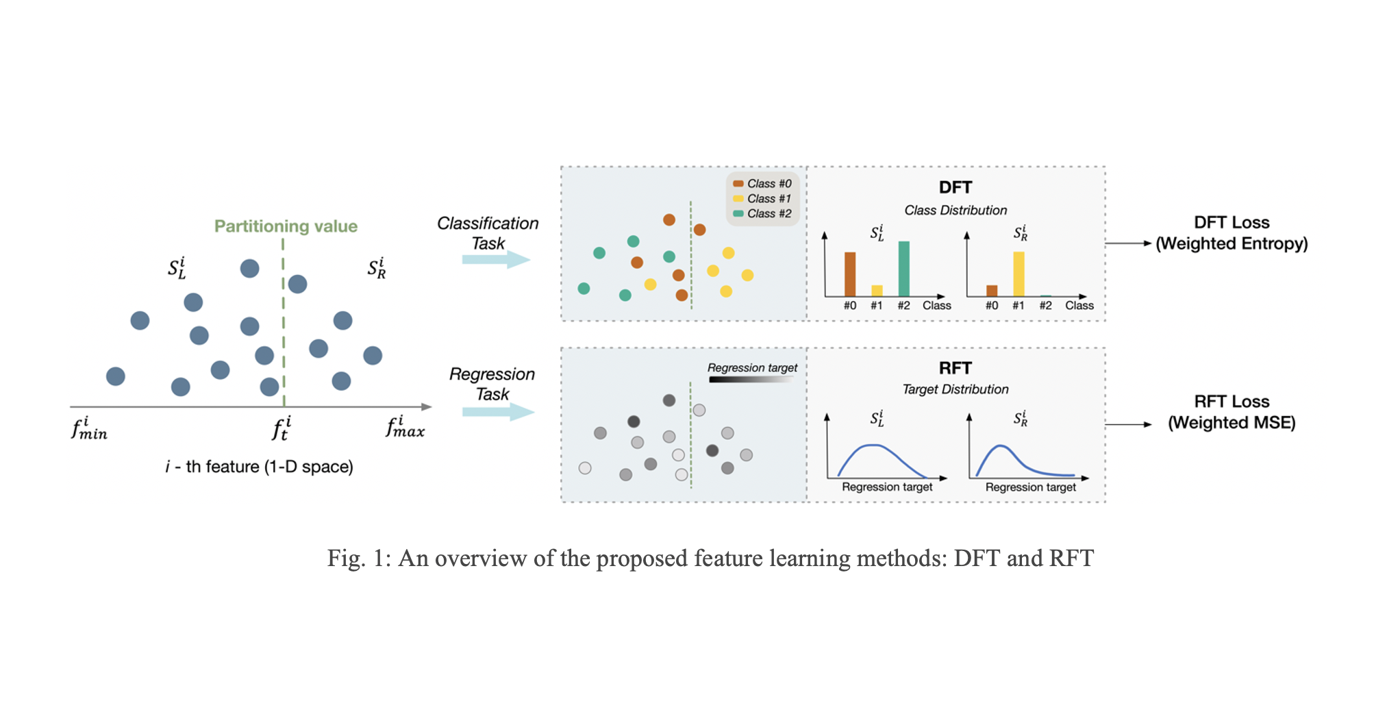

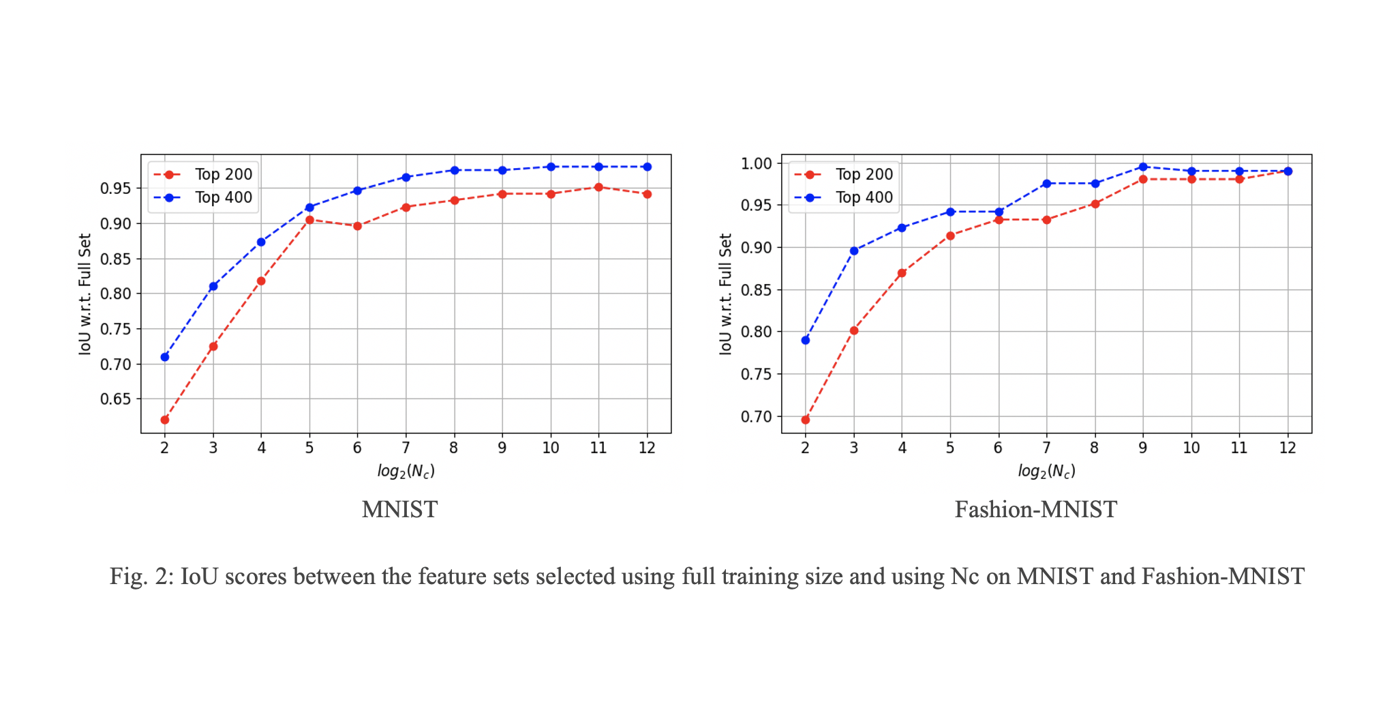

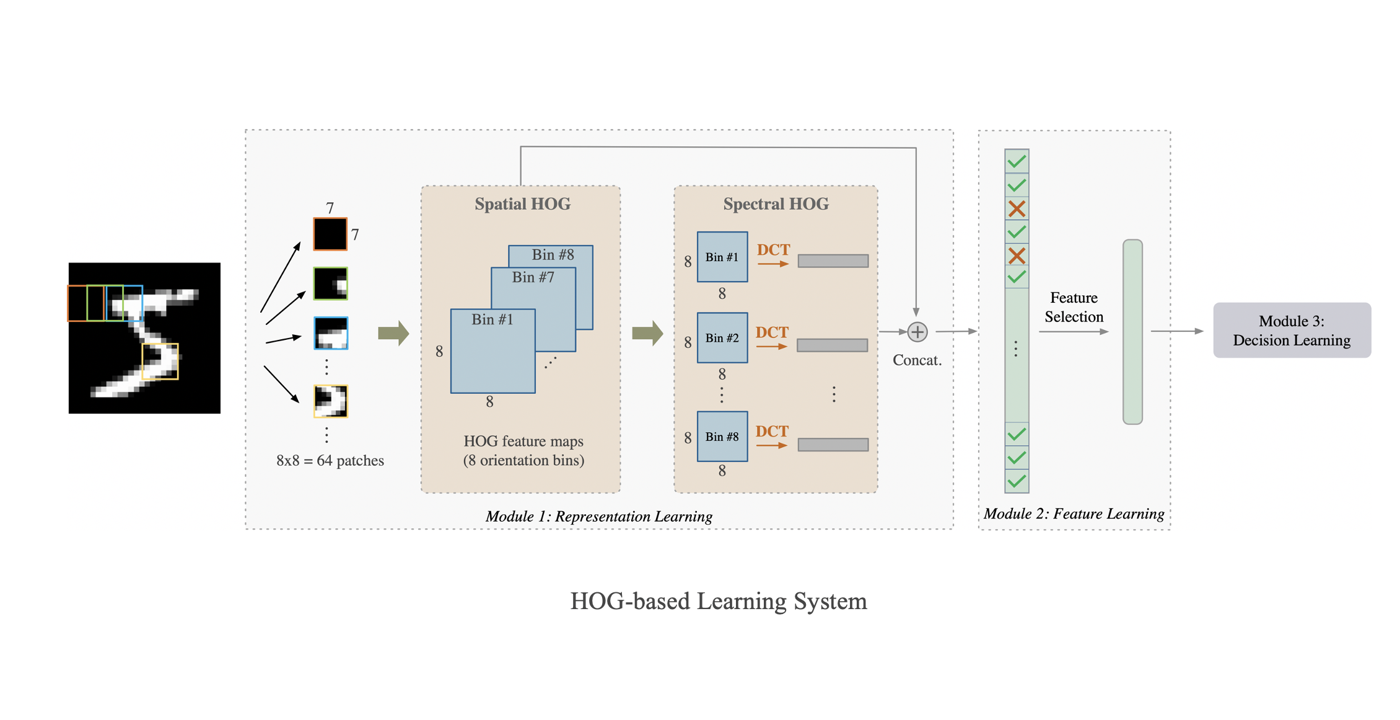

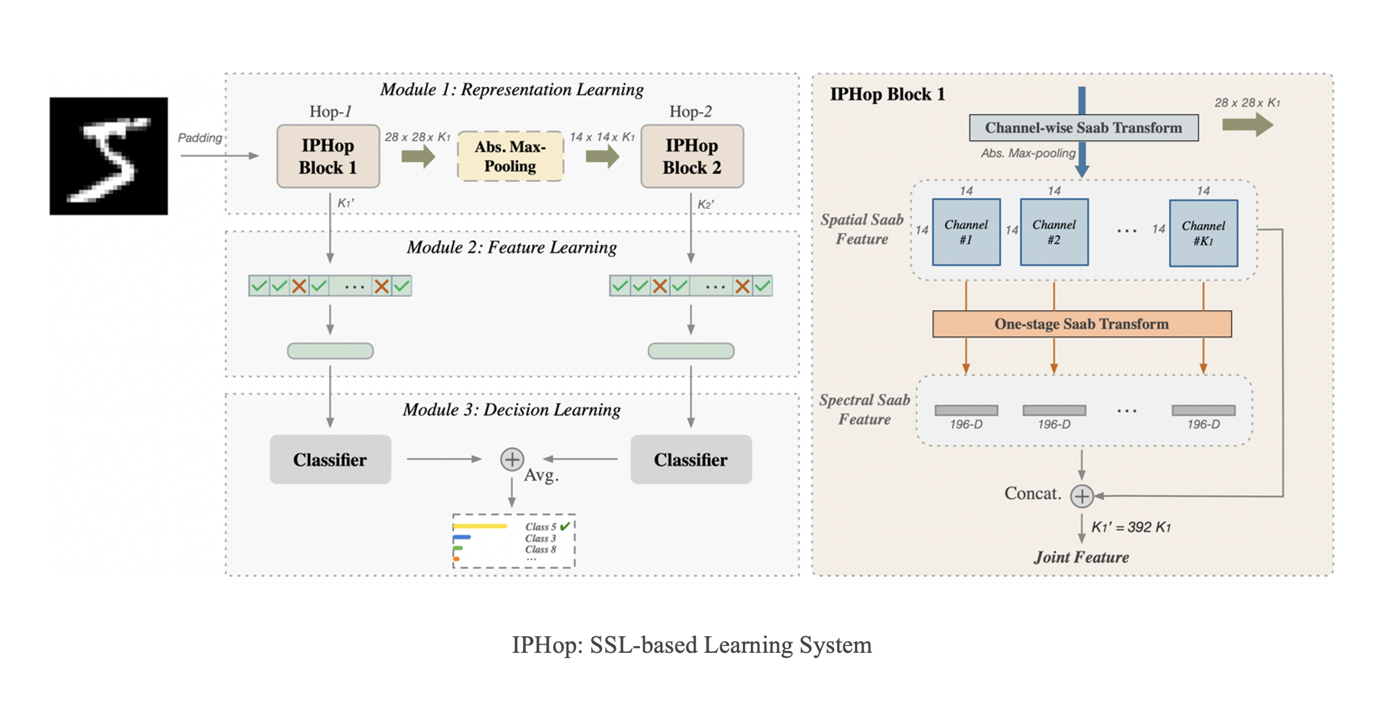

In this work, we propose a novel BIQA model, called GreenBIQA, that aims at high performance, low computational complexity and a small model size. GreenBIQA adopts an unsupervised feature generation method and a supervised feature selection method to extract quality-aware features. Then, it trains an XGBoost regressor to predict quality scores of test images. We conduct experiments on four popular IQA datasets, which include two synthetic-distortion and two authentic-distortion [...]