MCL Research on MRI of Lung Ventilation

Chronic diseases such as chronic obstructive pulmonary disease (COPD) and asthma are highly prevalent and reduce the compliance of the lung, thereby impeding normal ventilation. Functional lung imaging is vital for diagnosing and evaluating these lung diseases. In recent years, high-performance low-field systems have shown clear advantages for lung MRI owing to reduced susceptibility effects and improved vessel conspicuity. These MRI configurations provide improved field homogeneity compared with conventional field strengths (1.5T, 3.0T). Lower field strengths, such as 0.55T, give researchers more opportunities to detect regional volume changes throughout the respiratory cycle.

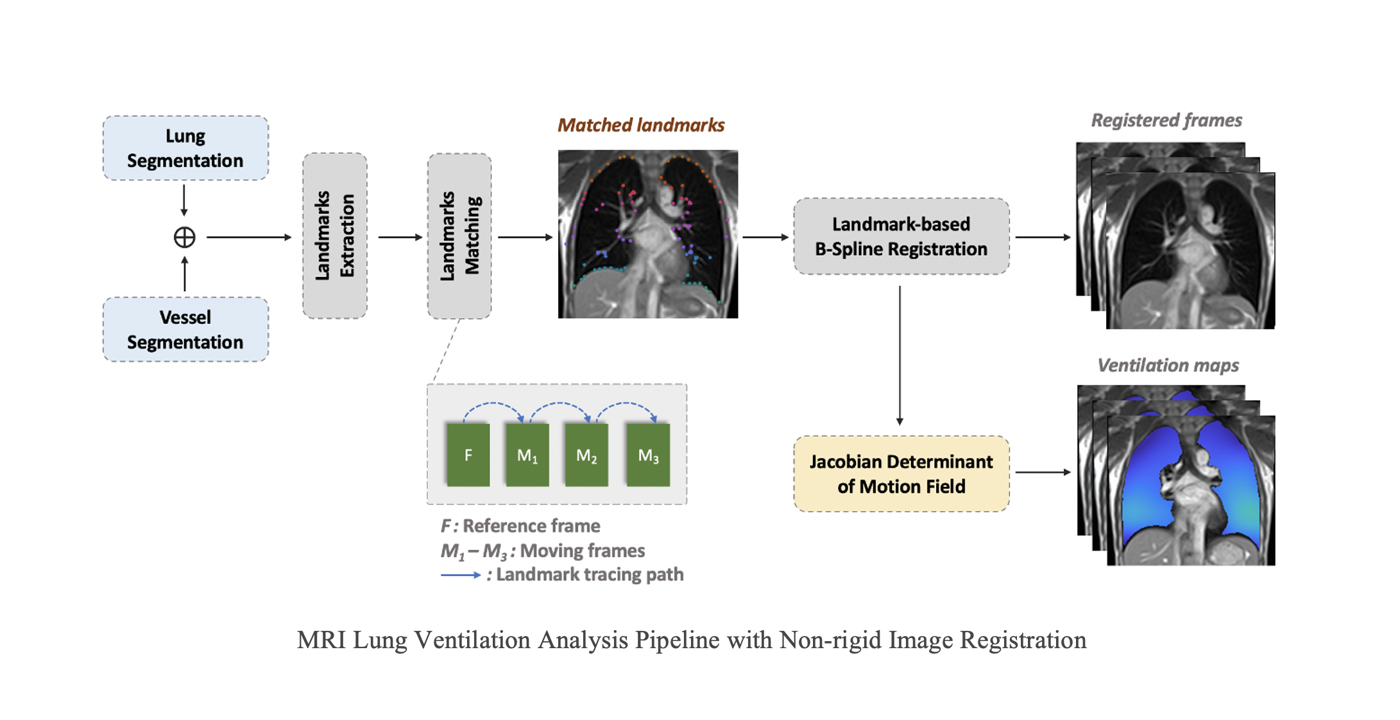

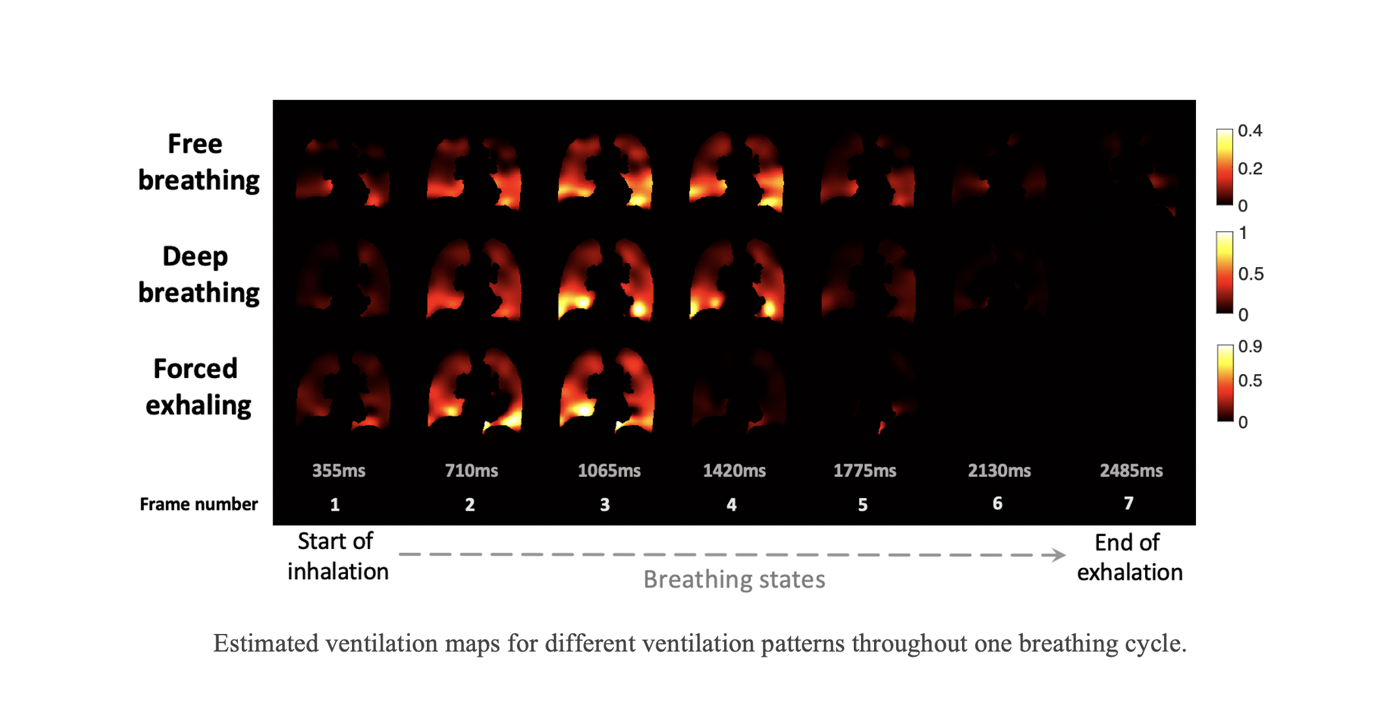

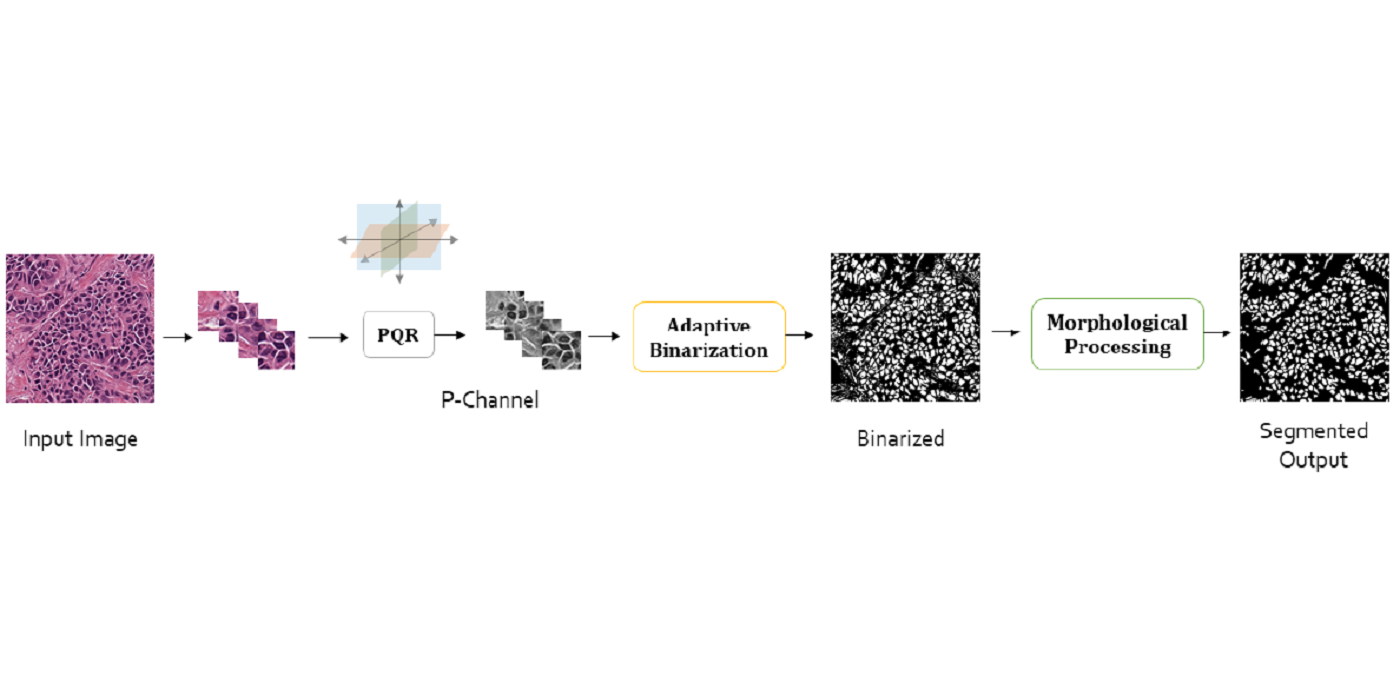

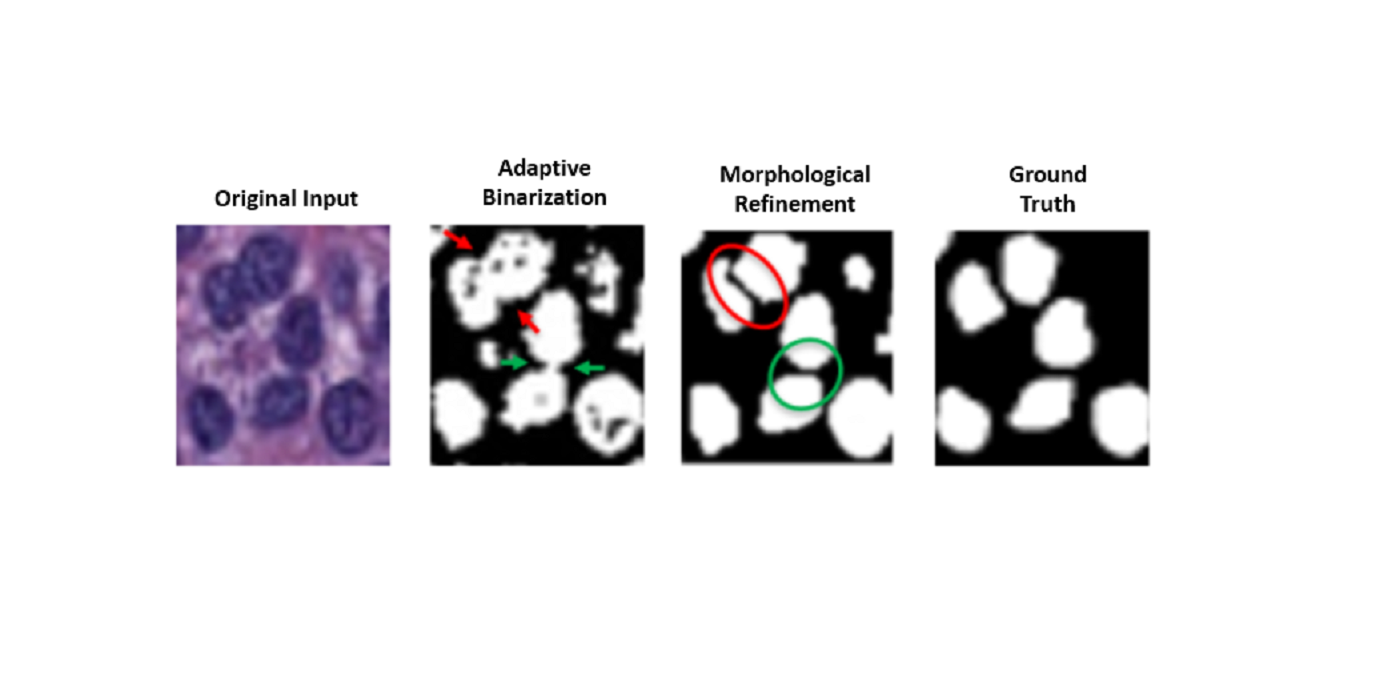

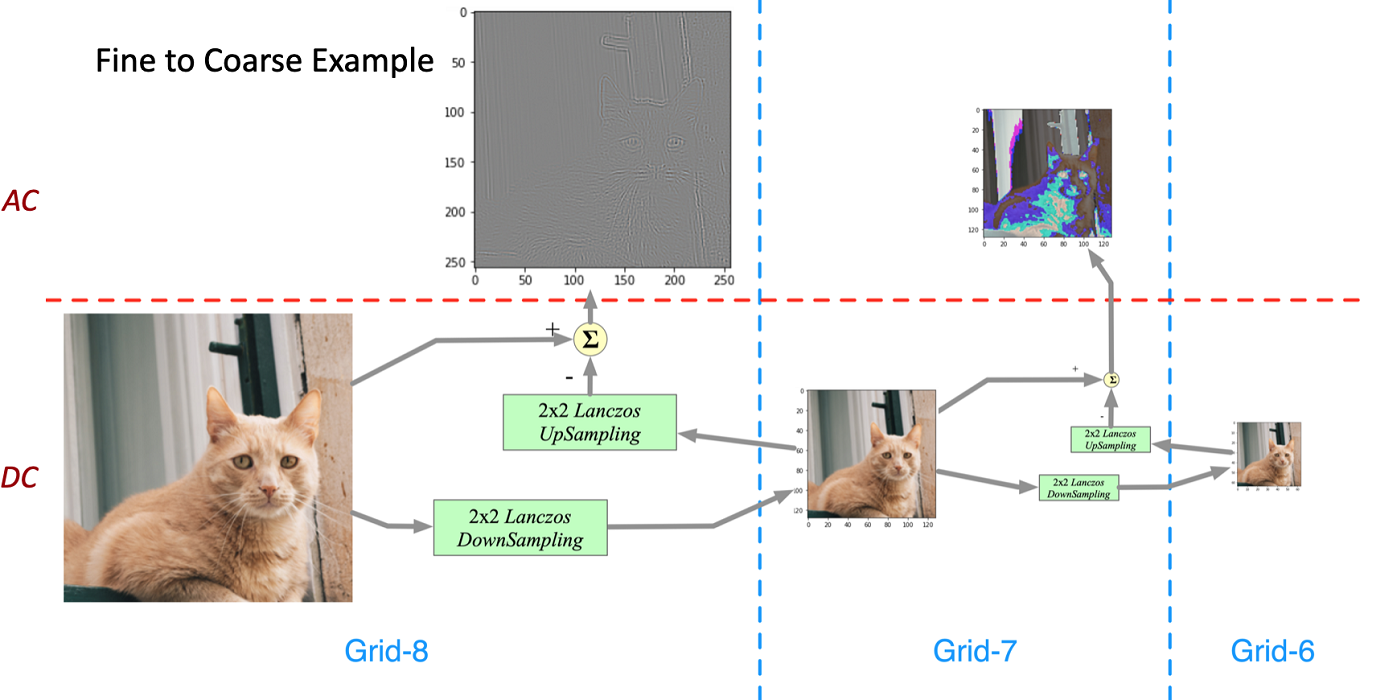

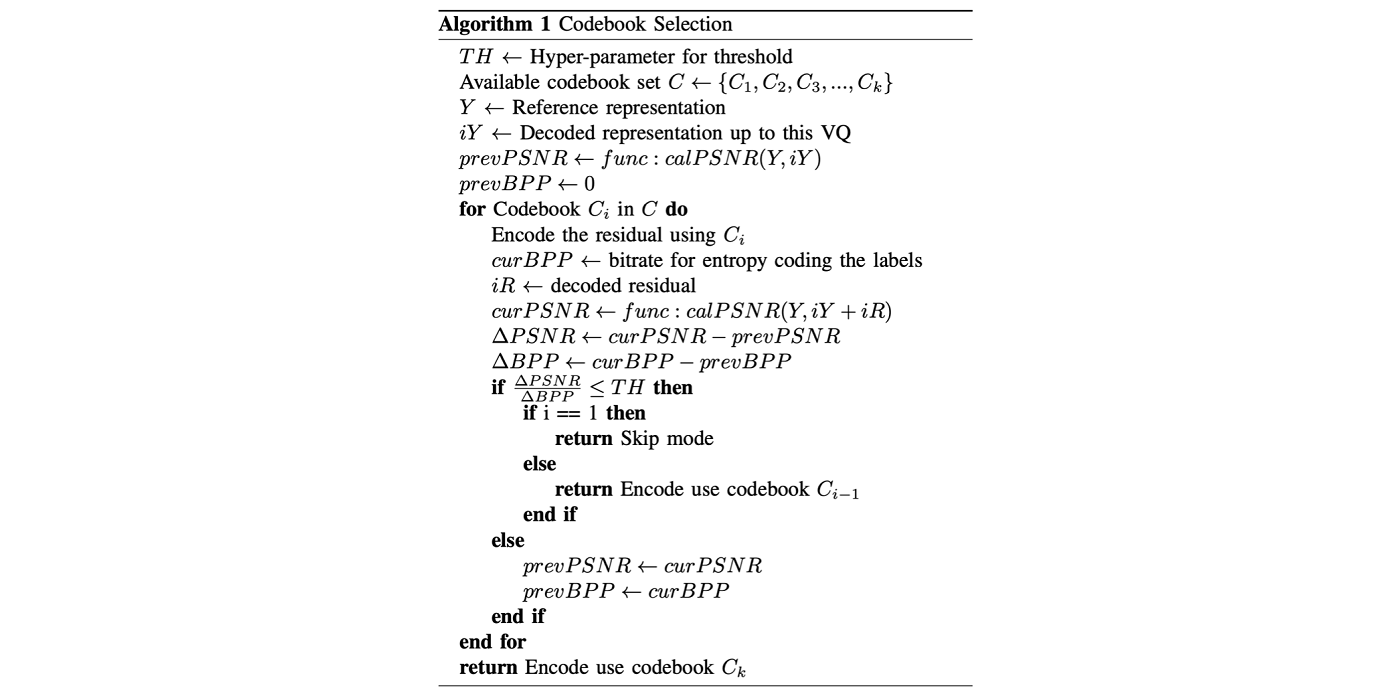

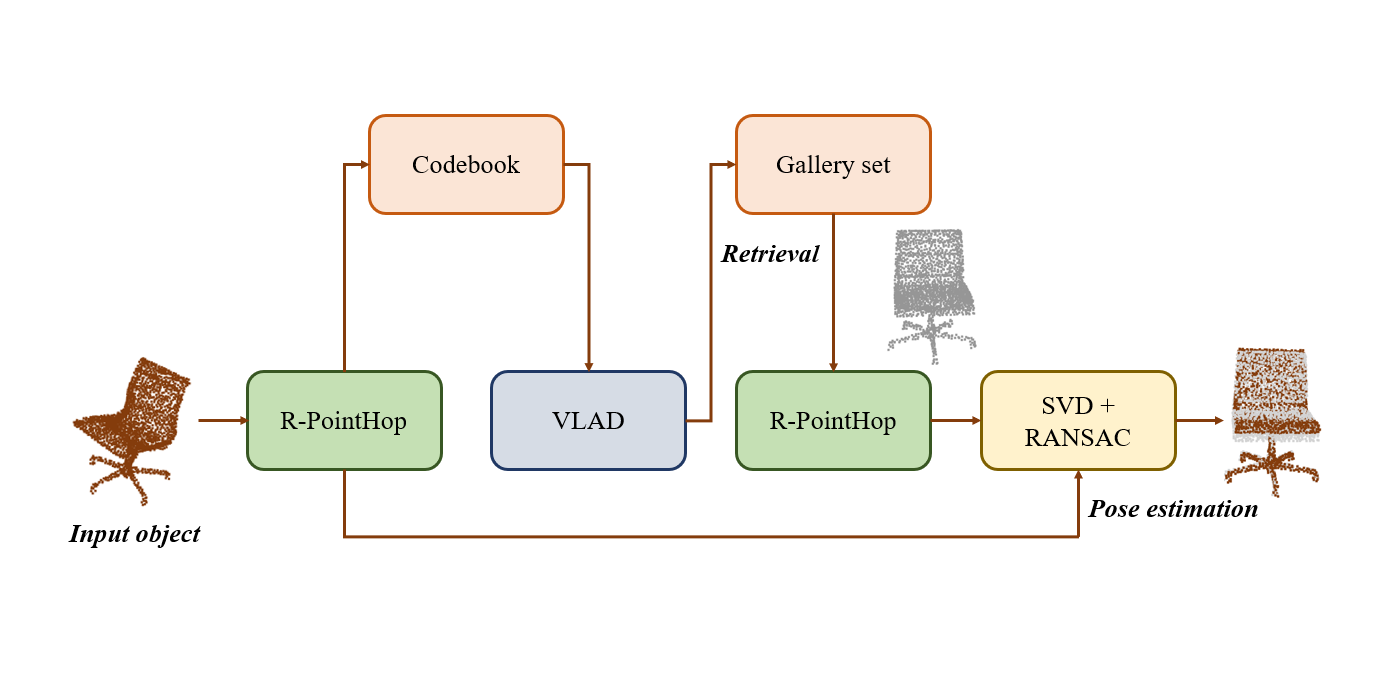

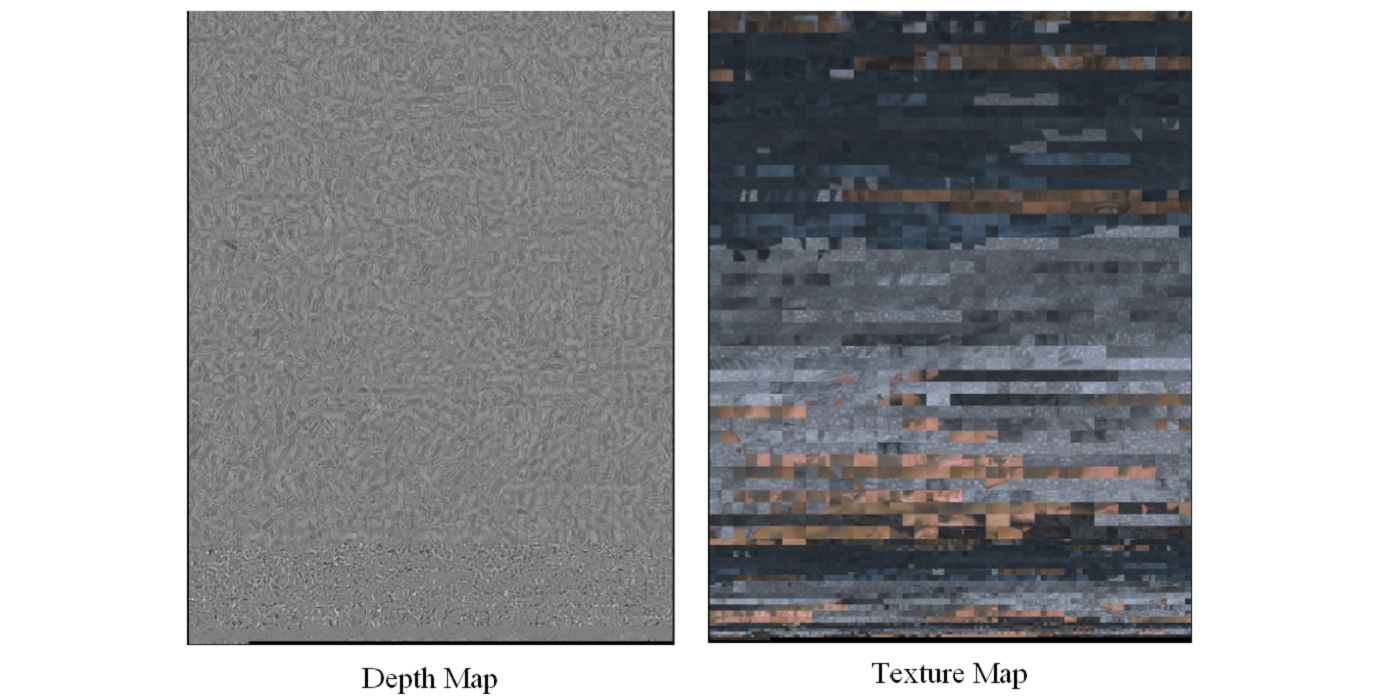

Recently, in a collaboration between the Dynamic Imaging Science Center (DISC) and MCL, an approach for regional functional lung ventilation mapping using real-time MRI has been developed. It leverages the improved lung imaging and real-time imaging capability at 0.55T, without requiring contrast agents, repetition, or breath holds. During image acquisition, a time series of MR images covering several consecutive respiratory cycles is captured. To resolve regional lung ventilation, unsupervised non-rigid image registration is applied to register the lungs in different respiratory states to the end-of-exhalation state, and the resulting deformation field is used to study regional ventilation. Specifically, a data-driven binarization algorithm is first applied to segment the lung parenchyma and the vessels separately. Salient points are then extracted frame by frame and matched between adjacent frames to form pairs of landmarks. Finally, Jacobian determinant (JD) maps are generated from the deformation fields computed by a landmark-based B-spline registration.
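To make the last step concrete, the following is a minimal sketch of how a JD map can be computed from a dense 2D displacement field. It is an illustration under stated assumptions, not the implementation used in the study: the function name `jacobian_determinant`, the use of NumPy finite differences, and the convention that the deformation is written as φ(x) = x + u(x) with u sampled on the image grid are all assumptions made here. Under that convention, JD values above 1 indicate local expansion relative to the reference frame and values below 1 indicate local contraction.

```python
import numpy as np


def jacobian_determinant(disp_y, disp_x, spacing=(1.0, 1.0)):
    """Jacobian determinant map of the 2D deformation phi(x) = x + u(x).

    disp_y, disp_x : 2D arrays holding the displacement u along the row (y)
                     and column (x) axes, in the same units as `spacing`.
    spacing        : (row spacing, column spacing) of the image grid.

    Hypothetical helper for illustration only.
    """
    dy, dx = spacing

    # Finite-difference partial derivatives of each displacement component.
    # np.gradient returns the derivative along axis 0 (rows), then axis 1 (columns).
    duy_dy, duy_dx = np.gradient(disp_y, dy, dx)
    dux_dy, dux_dx = np.gradient(disp_x, dy, dx)

    # The Jacobian of phi is I + grad(u); its 2x2 determinant, pixel by pixel:
    return (1.0 + duy_dy) * (1.0 + dux_dx) - duy_dx * dux_dy
```

As a sanity check, a zero displacement field gives a map of ones, and a uniform 10% stretch along both axes gives a map that is approximately 1.21 everywhere.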

In the study, regional lung ventilation is analyzed for three breathing patterns. Posture-related ventilation differences are also demonstrated. The study reveals that real-time image acquisition [...]