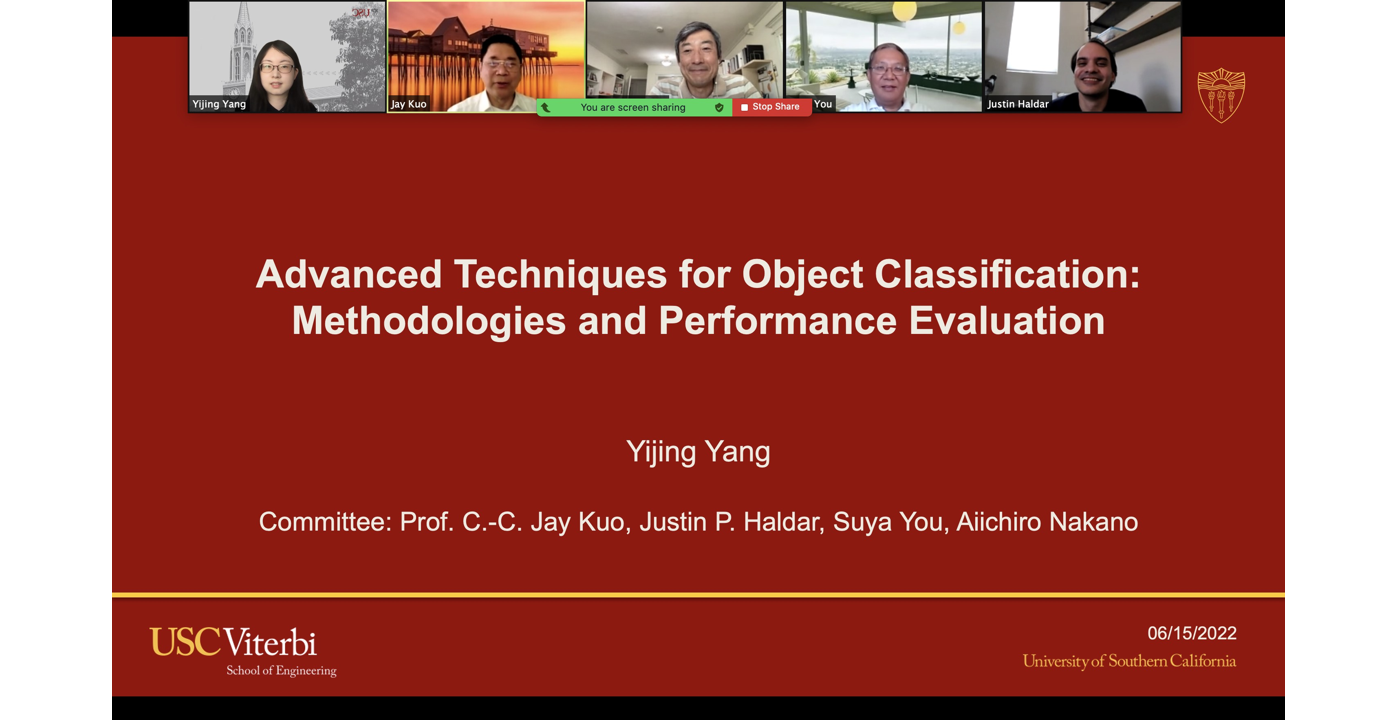

Congratulations to Yijing Yang for Passing Her Defense

Congratulations to Yijing Yang for passing her defense on June 15, 2022. Her PhD dissertation is titled with “Advanced Techniques for Object Classification: Methodologies and Performance Evaluation”. Her Dissertation Committee members include Jay Kuo (Chair), Justin Haldar, Suya You, and Aiichiro Nakano (Outside Member). All committee members were very pleased with the depth and fundamental nature of Yijing’s research. We are glad to invite Yijing here to share the overview of her thesis study. We wish Yijng all the best for her future career and life!

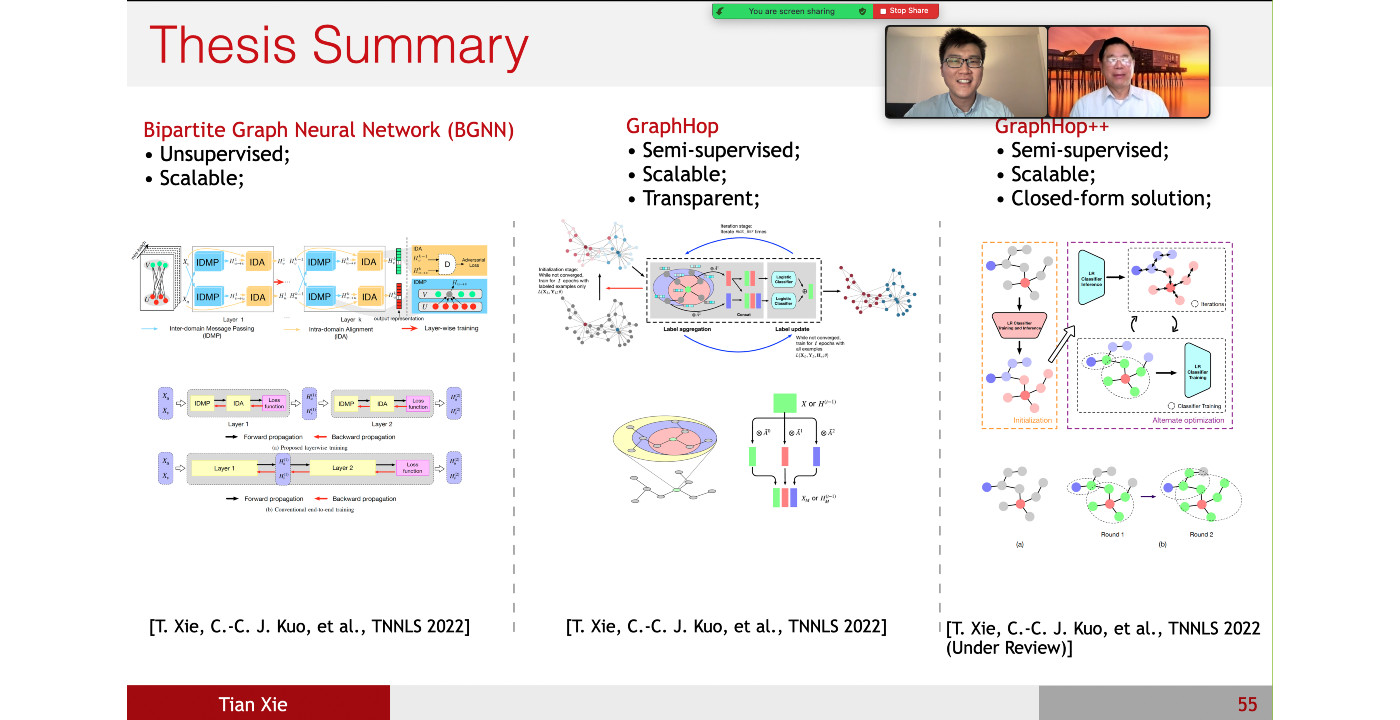

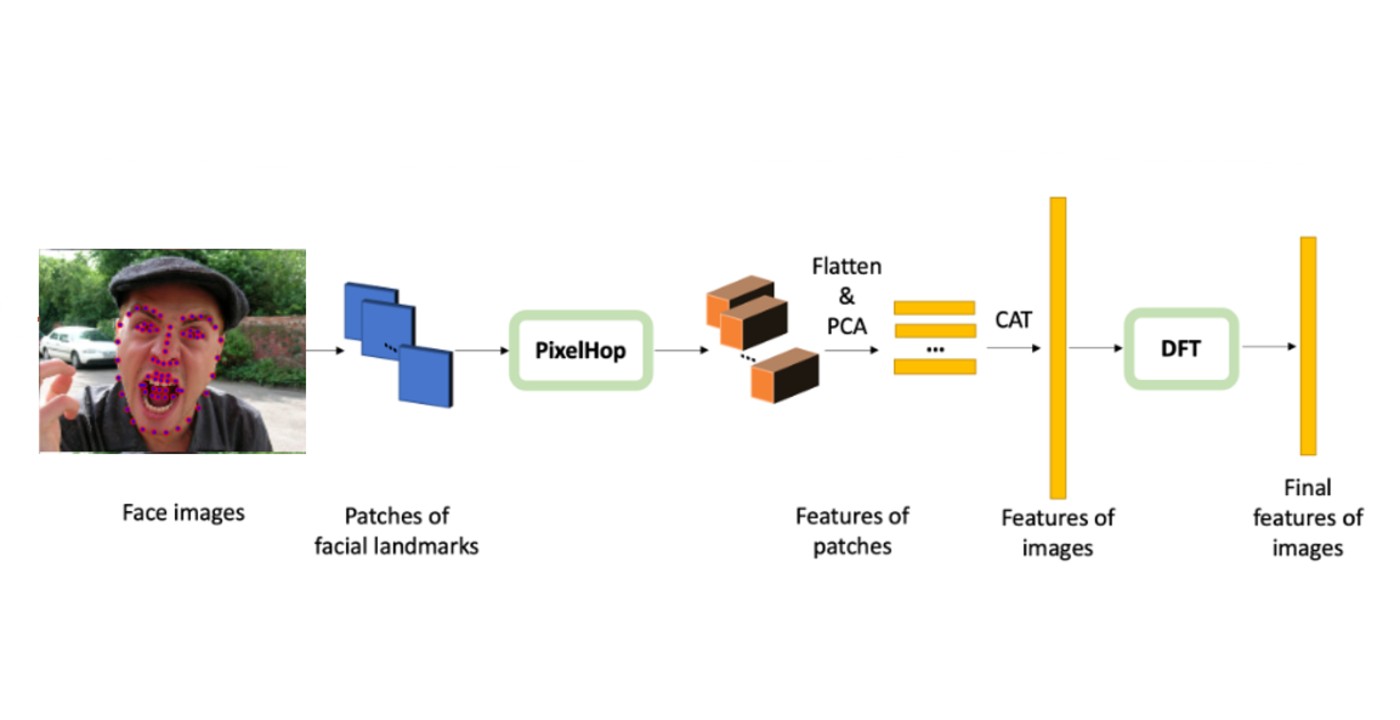

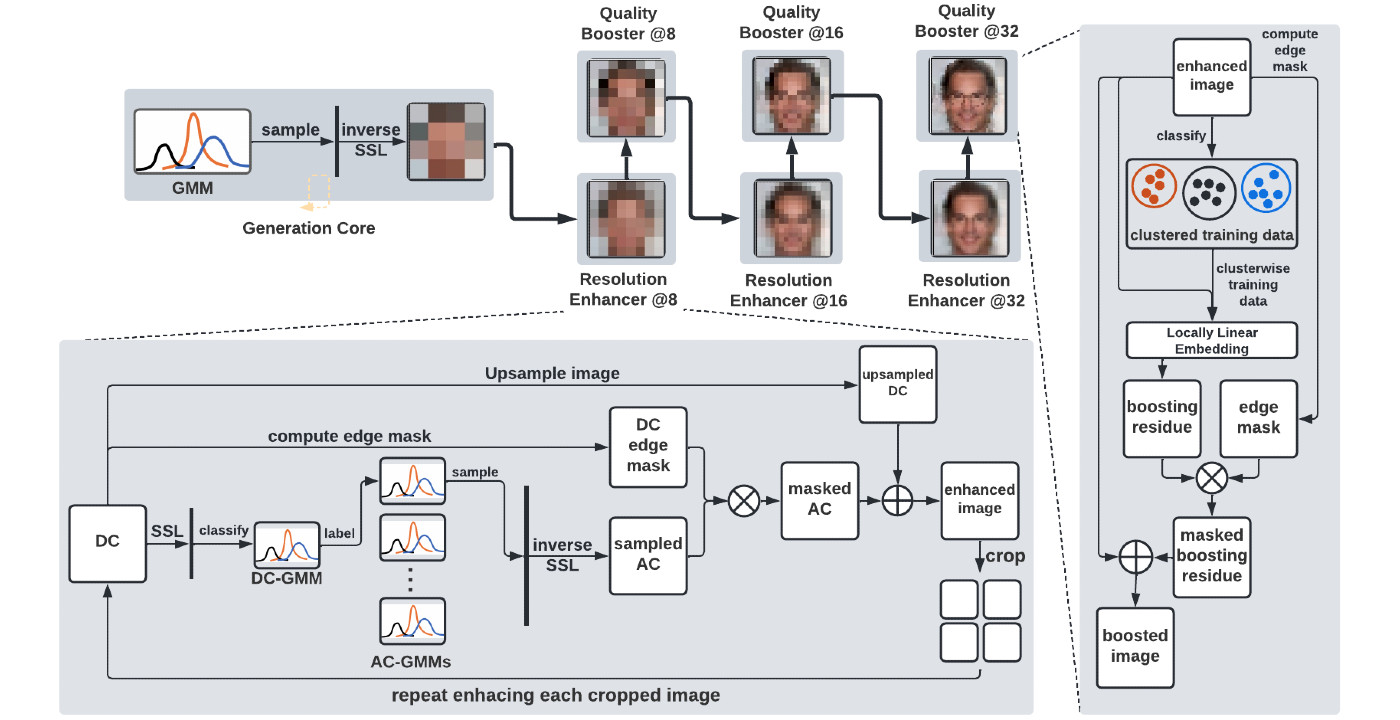

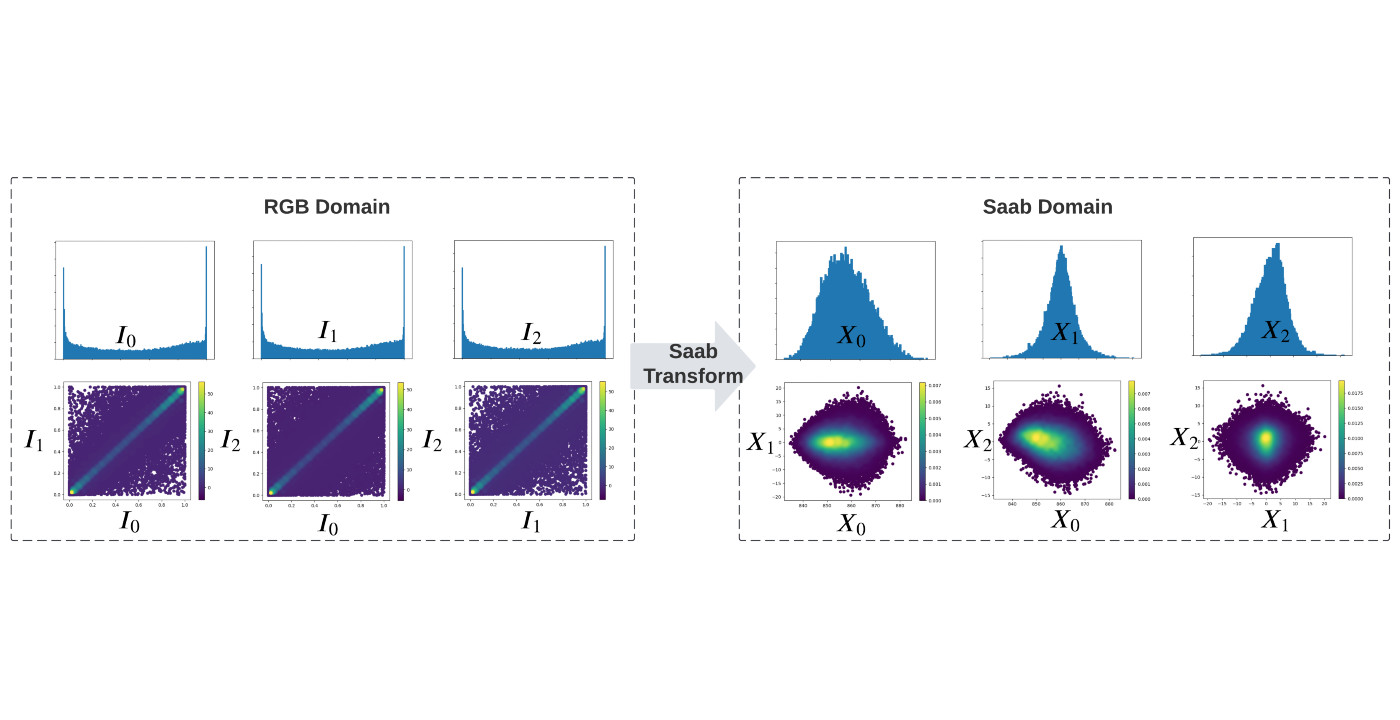

“Object classification has been studied for many years as a fundamental problem in computer vision. With the development of convolutional neural networks (CNNs) and the availability of larger scale datasets, we see a rapid success in the classification using deep learning. Although being effective, deep learning demands a high computational cost. Another challenge is the amount of accessible labeled data. How the quantity of labeled samples affects the performance of learning systems is an important question in the data-driven era. In this dissertation, we investigate and propose new techniques based on successive subspace learning (SSL) methodology to shed light on the above problems. It can be decomposed into four aspects: 1) improving the performance of SSL-based multi-class classification, 2) improving the performance of resolving confusing sets, 3) enhancing the quality of the learnt feature space by conducting a novel supervised feature selection, and 4) designing supervision-scalable learning systems.

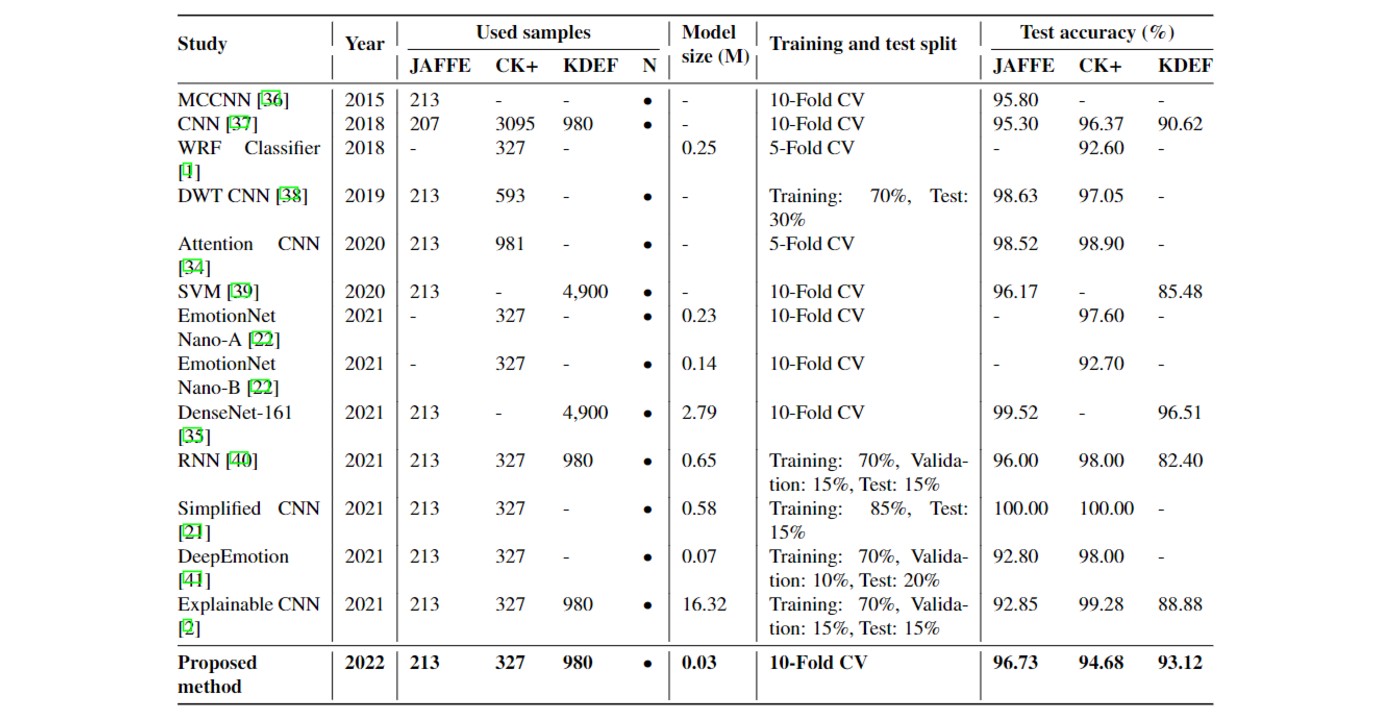

Specifically, in the first two aspects, soft-label smoothing (SLS), hard sample mining, and a new SSL-based attention localization method are proposed to improve the classification performance. In the third part, a novel supervised feature selection methodology is proposed to enhance the learnt feature space, including the discriminant feature test (DFT) and the [...]