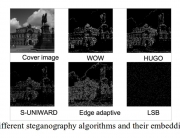

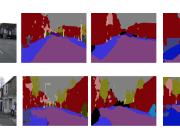

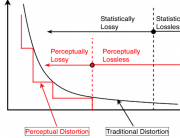

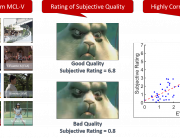

Saliency detection research falls predominantly into two categories: human eye fixation prediction, which predicts the locations in an image where human gaze is most concentrated [1], and salient object detection (SOD) [2], which aims to identify salient object regions within an image. Our study focuses on the former, human eye fixation prediction.

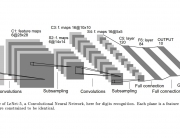

Saliency detection is a crucial task that predicts human gaze patterns from visual stimuli. Demand for saliency detection research is growing, driven by the need to incorporate such techniques into various computer vision tasks and to understand the human visual system. Many existing saliency detection methods rely on deep neural networks (DNNs) to achieve good performance. However, the large model sizes of these approaches impede their integration with other modules and their deployment on mobile devices.

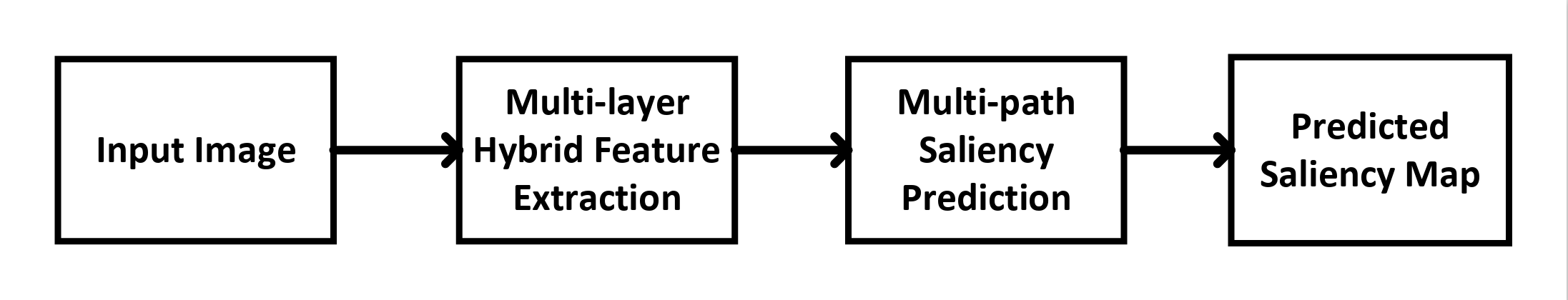

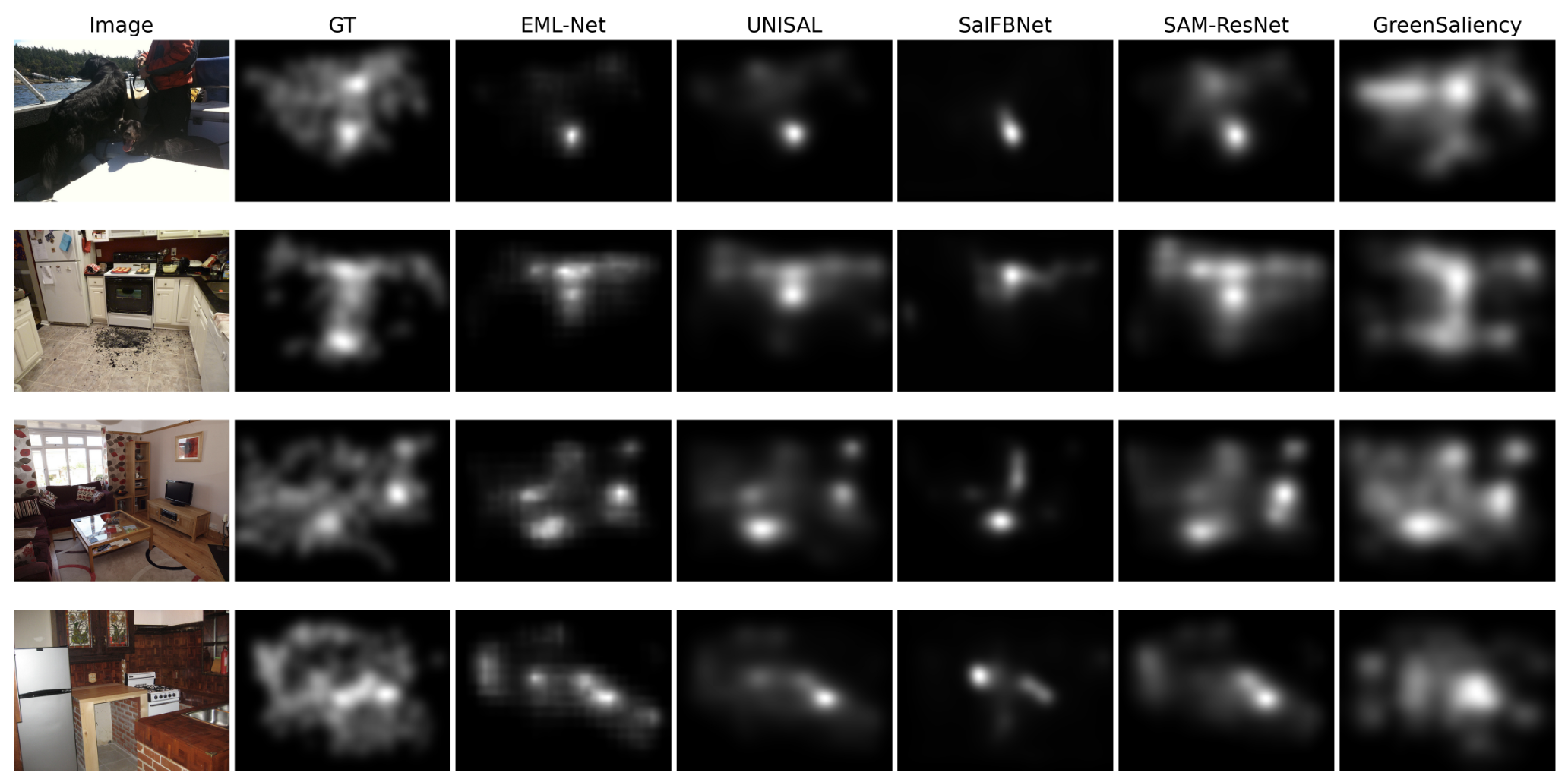

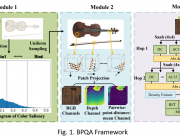

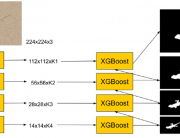

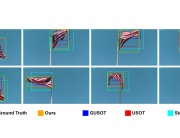

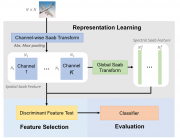

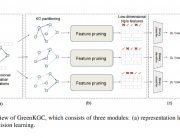

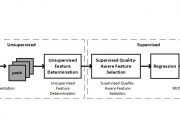

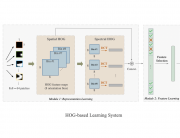

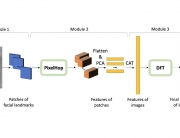

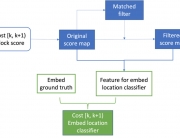

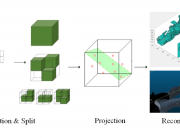

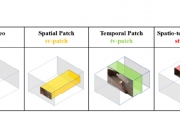

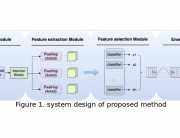

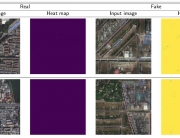

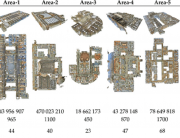

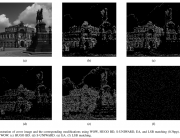

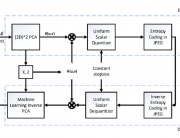

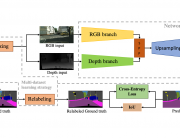

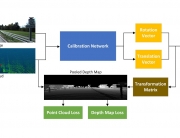

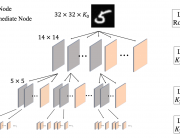

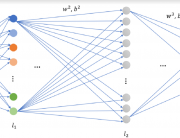

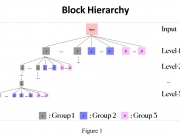

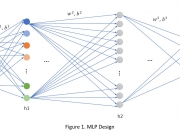

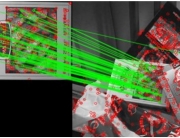

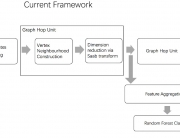

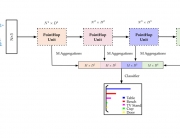

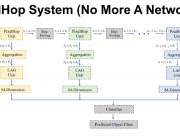

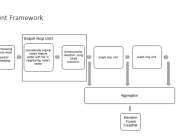

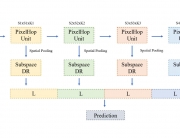

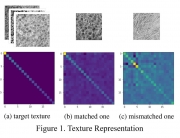

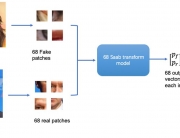

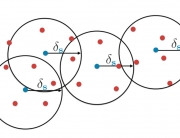

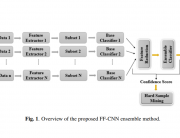

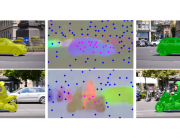

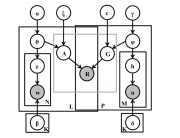

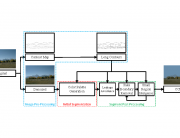

To address this need, our study introduces a novel saliency detection method, named “GreenSaliency”, which avoids DNNs and emphasizes a small model footprint and low computational complexity. GreenSaliency comprises two primary steps: 1) multi-layer hybrid feature extraction, and 2) multi-path saliency prediction. Empirical results show that GreenSaliency achieves performance comparable to certain deep-learning-based (DL-based) methods while requiring a considerably smaller model size and significantly lower computational complexity.

[1] Zhaohui Che, Ali Borji, Guangtao Zhai, Xiongkuo Min, Guodong Guo, and Patrick Le Callet, “How is gaze influenced by image transformations? Dataset and model”, IEEE Transactions on Image Processing, 29, 2287–2300.

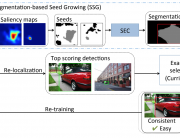

[2] Dingwen Zhang, Junwei Han, Yu Zhang, and Dong Xu, “Synthesizing supervision for learning deep saliency network without human annotation”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(7), 1755–1769.