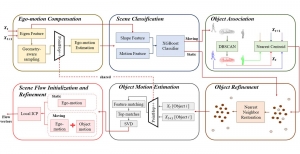

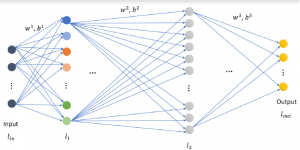

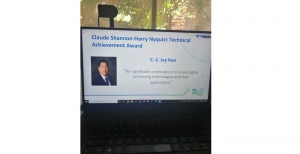

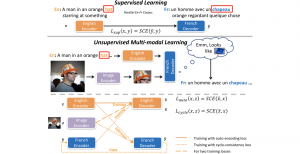

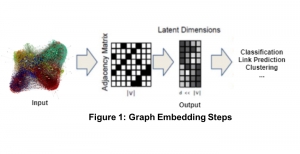

Deep learning technologies such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have had a great impact on modern machine learning because of their impressive performance in many applications that involve learning, modeling, and processing complex sensing data. Yet the working principle of deep learning remains mysterious. Furthermore, deep learning has several well-known weaknesses: 1) vulnerability to adversarial attacks, 2) the need for heavy supervision, and 3) limited generalizability from one domain to another. Professor Kuo and his PhD students at the Media Communications Lab (MCL) have been working on explainable deep learning since 2014 and have published a series of pioneering papers on this topic.

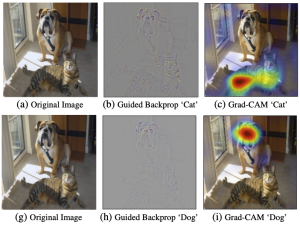

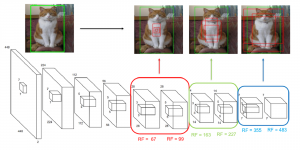

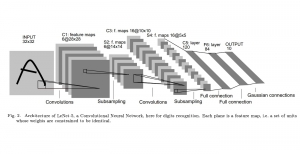

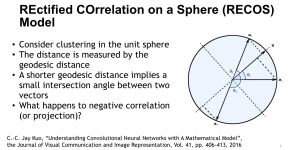

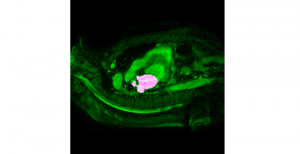

Explanation of nonlinear activation, convolutional filters, and the discriminability of trained CNN features [1]-[3]. The role of the nonlinear activation function in CNNs was explained for the first time in [1]: nonlinear activation resolves the sign confusion problem caused by cascading convolutional operations over multiple layers. This work received the 2018 Best Paper Award from the Journal of Visual Communication and Image Representation. In [2], convolutional filters are viewed as rectified correlations on a sphere (RECOS), and the operation of a CNN is interpreted as a multi-layer RECOS transform. In [3], the discriminability of trained CNN features at different convolutional layers is analyzed using two quantitative metrics, the Gaussian confusion measure (GCM) and the cluster purity measure (CPM), and the analysis is validated by experimental results.
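A toy numerical sketch, assuming NumPy, may make the two ideas concrete. It is not code from [1] or [2]: the two-filter setup, the weights, and the helper names `layer2_output` and `recos` are illustrative assumptions. Without rectification, an input that anti-matches filter b is scored by a negative second-layer weight exactly like an input that matches filter a; inserting ReLU between the layers separates the two cases. The `recos` helper is a simplified reading of RECOS in which both the input and the filters (anchor vectors) are normalized to unit length before correlation.

```python
import numpy as np


def relu(x):
    """Element-wise rectification max(x, 0)."""
    return np.maximum(x, 0.0)


def layer2_output(layer1_responses, w2, rectify):
    """Second-layer response to first-layer responses, with or without ReLU in between."""
    r = relu(layer1_responses) if rectify else layer1_responses
    return float(w2 @ r)


def recos(patch, anchors):
    """Simplified RECOS unit: cosine similarity with each anchor vector, then ReLU.

    Assumption (for illustration): input and anchors are both normalized to unit
    length, so each correlation lies in [-1, 1] and compares points on a sphere.
    """
    x = np.asarray(patch, dtype=float).ravel()
    x = x / np.linalg.norm(x)
    a = np.asarray(anchors, dtype=float)
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    return relu(a @ x)


# Sign confusion: layer 2 weights the two layer-1 filters with +1 and -1.
w2 = np.array([1.0, -1.0])
matches_a = np.array([1.0, 0.0])  # input positively correlated with filter a
anti_b = np.array([0.0, -1.0])    # input negatively correlated with filter b

# Purely linear cascade: both inputs give the same score.
print(layer2_output(matches_a, w2, rectify=False), layer2_output(anti_b, w2, rectify=False))  # 1.0 1.0
# ReLU between layers: the negative correlation is clipped, so the cases differ.
print(layer2_output(matches_a, w2, rectify=True), layer2_output(anti_b, w2, rectify=True))    # 1.0 0.0

print(recos([3.0, 4.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]))
```

In the last call, the anchor pointing away from the input produces a negative correlation, which the rectification sets to zero.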

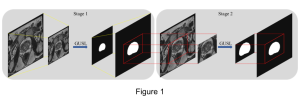

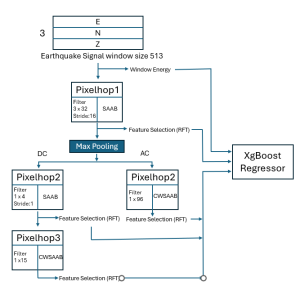

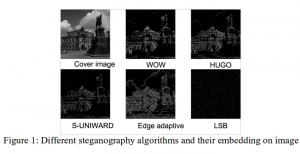

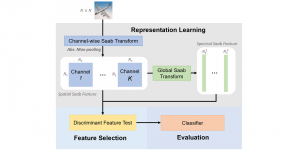

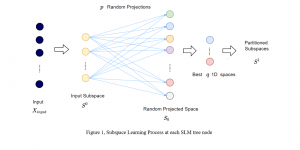

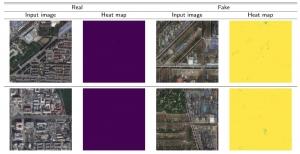

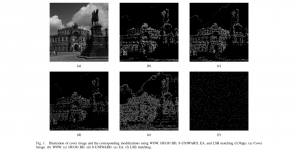

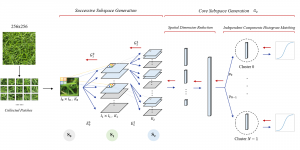

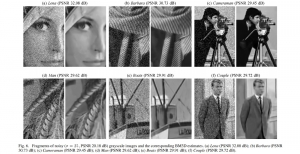

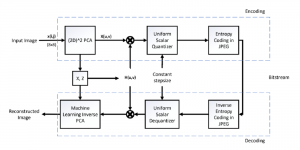

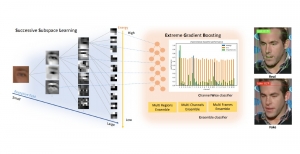

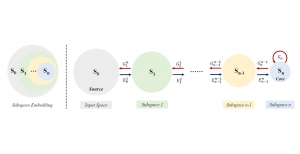

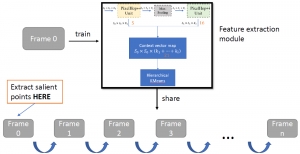

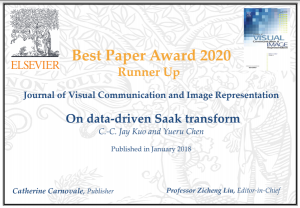

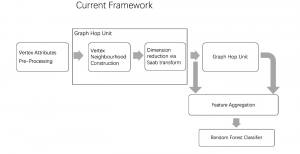

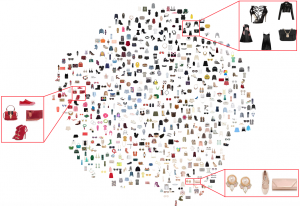

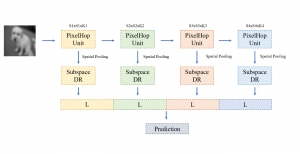

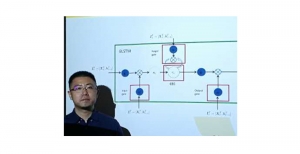

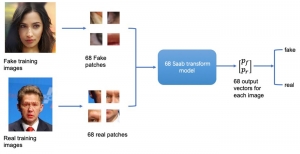

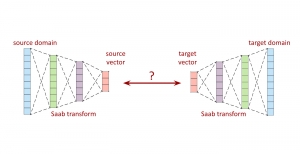

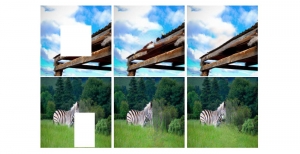

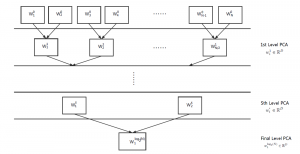

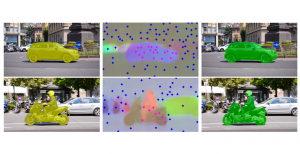

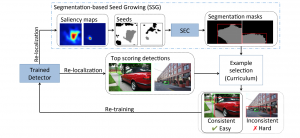

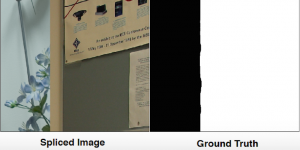

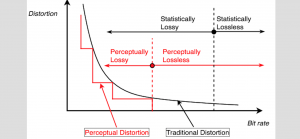

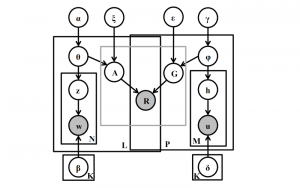

Saak transform and its application to adversarial attacks [4]-[5]. Inspired by deep learning, we developed a new mathematical transform, the Saak (Subspace approximation with augmented kernels) transform, in [4]. The Saak and inverse Saak transforms provide tools for signal analysis and synthesis, respectively. CNNs are known to be vulnerable to adversarial perturbations, which pose a serious threat to CNN-based decision systems. As an application, in [5] we propose applying the lossy Saak transform to adversarially perturbed images as a preprocessing step to defend against adversarial attacks.
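The analysis/synthesis pair can be illustrated with a simplified, single-stage sketch in NumPy. It assumes flattened input vectors and PCA kernels fitted on mean-removed training data, and it omits the separate DC kernel and the multi-stage patch structure of the actual Saak transform in [4]. Each kernel is augmented with its negative before ReLU, so the rectified responses can be recombined exactly through relu(c) - relu(-c) = c. Dropping the low-variance kernels gives a lossy filter; treating that truncation as the lossy Saak preprocessing of [5] is an assumption, and all helper names are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def fit_kernels(X):
    """PCA kernels (rows, ordered by decreasing variance) and the data mean."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return vt, mean


def saak_forward(X, kernels):
    """Analysis: project onto kernels, augment each kernel with its negative, rectify."""
    c = X @ kernels.T
    return relu(np.concatenate([c, -c], axis=1))


def saak_inverse(Z, kernels):
    """Synthesis: undo the rectification via relu(c) - relu(-c) = c, then back-project."""
    k = kernels.shape[0]
    c = Z[:, :k] - Z[:, k:]
    return c @ kernels


def lossy_saak(X, kernels, mean, num_keep):
    """Keep only the num_keep leading kernels, then reconstruct (a lossy filter)."""
    K = kernels[:num_keep]
    return saak_inverse(saak_forward(X - mean, K), K) + mean


rng = np.random.default_rng(0)
# Synthetic "clean" training data whose variance decays across dimensions.
X_train = rng.normal(size=(500, 16)) * np.linspace(3.0, 0.1, 16)
kernels, mean = fit_kernels(X_train)

x = X_train[:1]
# All 16 kernels kept: the forward/inverse pair is lossless.
print(np.allclose(lossy_saak(x, kernels, mean, 16), x))  # True
# A perturbation along the weakest kernel is removed when that kernel is dropped.
x_adv = x + 0.5 * kernels[-1]
print(np.allclose(lossy_saak(x_adv, kernels, mean, 15), lossy_saak(x, kernels, mean, 15)))  # True
```

In this toy setting the perturbation lies entirely in one discarded direction, so it vanishes; a realistic adversarial perturbation spreads over many directions and would generally only be attenuated.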

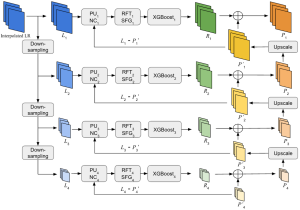

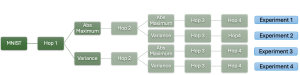

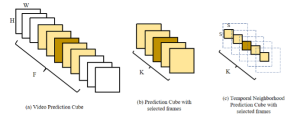

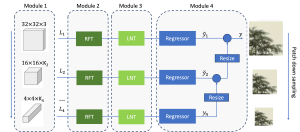

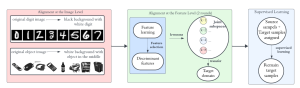

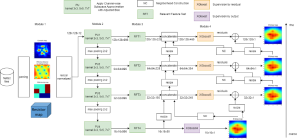

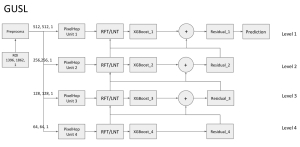

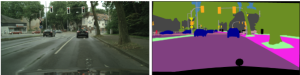

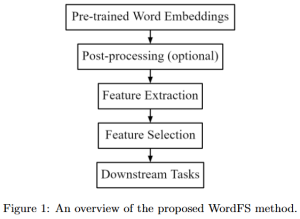

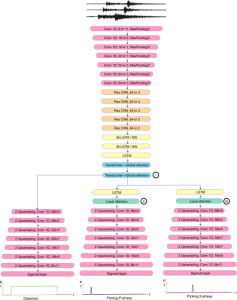

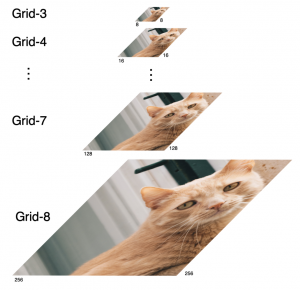

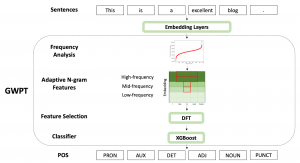

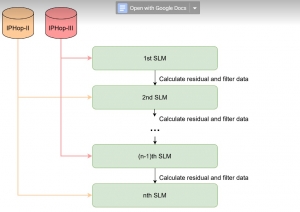

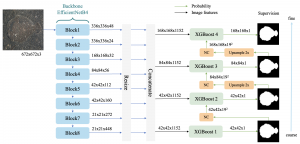

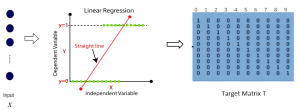

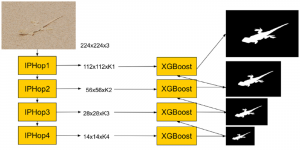

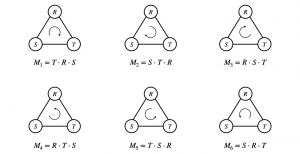

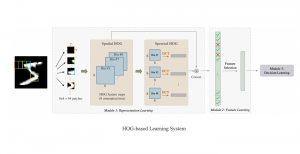

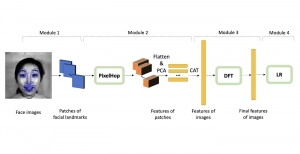

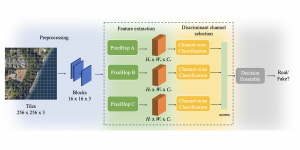

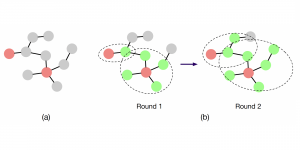

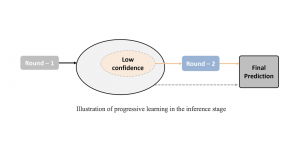

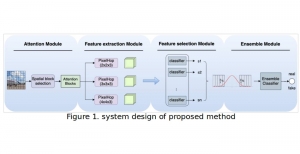

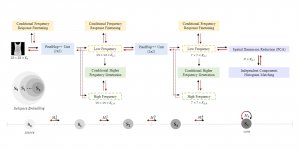

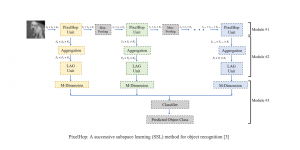

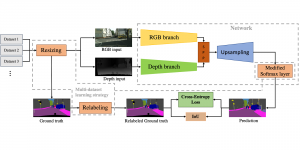

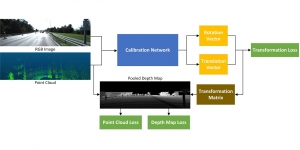

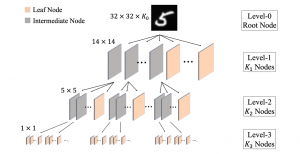

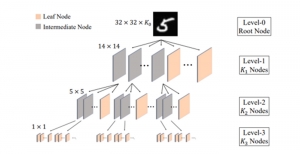

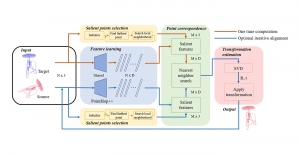

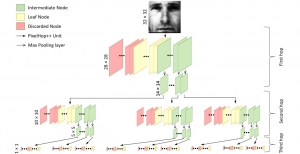

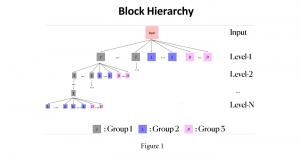

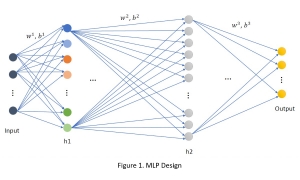

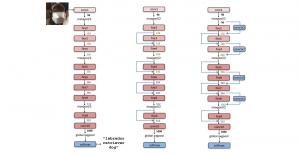

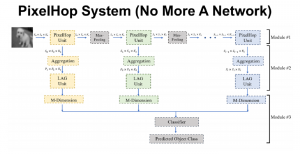

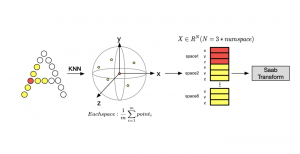

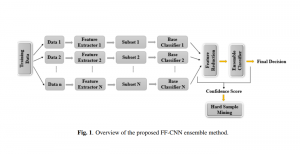

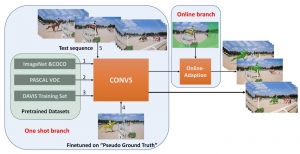

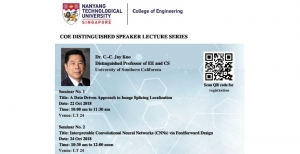

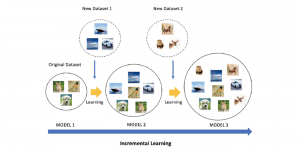

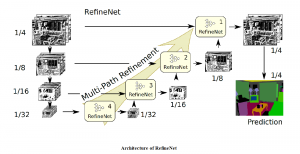

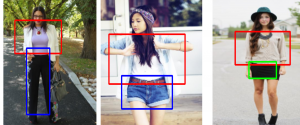

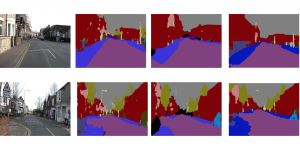

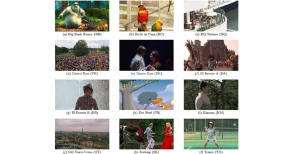

Interpretable CNN with feedforward design [6]-[7]. The model parameters of convolutional neural networks (CNNs) are usually determined by backpropagation (BP). In [6], we propose an interpretable feedforward (FF) design that uses no BP at all. The FF design adopts a data-centric approach: it derives the network parameters of the current layer from the statistics of the previous layer's output in a single pass. FF-designed CNNs have significantly lower training complexity than BP-designed CNNs, at the cost of lower classification performance. This shortcoming can be overcome with an ensemble of multiple FF-designed CNNs; various ensemble ideas are discussed in [7]. An ensemble of FF-designed CNNs can outperform a single BP-designed CNN in object classification on the MNIST and CIFAR-10 datasets.
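A heavily simplified sketch of the one-pass, data-centric idea, assuming NumPy and synthetic two-class data. Each model has a single stage loosely modeled on the Saab (subspace approximation with adjusted bias) transform of [6]: a DC kernel, AC kernels taken from PCA of the input statistics, and a bias large enough that every training response is nonnegative. A linear classifier is then solved in closed form by least squares, with no backpropagation anywhere. Using one-hot class labels as regression targets, omitting pooling and multiple stages, and obtaining ensemble diversity only by varying `num_ac` are assumptions made for brevity ([7] discusses other ensemble ideas); all helper names are hypothetical.

```python
import numpy as np


def fit_saab(X, num_ac):
    """One-pass stage: DC kernel + num_ac PCA (AC) kernels + a shared bias.

    X: (n_samples, dim) inputs from the previous layer; assumes num_ac < dim.
    """
    dim = X.shape[1]
    dc = np.full((1, dim), 1.0 / np.sqrt(dim))              # unit-norm DC kernel
    X_ac = X - X.mean(axis=1, keepdims=True)                 # remove each sample's DC part
    _, _, vt = np.linalg.svd(X_ac - X_ac.mean(axis=0), full_matrices=False)
    kernels = np.vstack([dc, vt[:num_ac]])                   # orthonormal rows
    bias = np.linalg.norm(X, axis=1).max()                   # |k.x| <= ||x|| <= bias, so outputs >= 0
    return kernels, bias


def saab_apply(X, kernels, bias):
    return X @ kernels.T + bias


def with_ones(F):
    """Append a constant column so the linear layer has an intercept."""
    return np.hstack([F, np.ones((F.shape[0], 1))])


def fit_ff_model(X, y, num_ac, num_classes):
    """Stage parameters from data statistics, then a least-squares linear classifier."""
    kernels, bias = fit_saab(X, num_ac)
    targets = np.eye(num_classes)[y]                         # one-hot labels
    W, *_ = np.linalg.lstsq(with_ones(saab_apply(X, kernels, bias)), targets, rcond=None)
    return lambda Xq: with_ones(saab_apply(Xq, kernels, bias)) @ W


def ensemble_predict(models, X):
    """Average class scores of several FF-designed models, then take the argmax."""
    return np.mean([m(X) for m in models], axis=0).argmax(axis=1)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (200, 8)), rng.normal(2.0, 1.0, (200, 8))])
y = np.repeat([0, 1], 200)
models = [fit_ff_model(X, y, num_ac, 2) for num_ac in (2, 4, 6)]
acc = (ensemble_predict(models, X) == y).mean()
print(f"training accuracy: {acc:.2f}")
```

Every parameter here comes from a closed-form computation (an SVD and a least-squares solve), so training is a single pass over the data; that is the intuition behind the low training complexity of the FF design.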

Further research on explainable deep learning. Explainable deep learning with the feedforward design is still in its infancy, and many challenges remain. For example, because it abandons the non-convex optimization framework for the sake of explainability, it may sacrifice performance on the target task (e.g., classification, segmentation, or detection). Although an ensemble can compensate for this performance loss on simple datasets, its effectiveness on larger datasets such as CIFAR-100 and ImageNet remains to be explored. Substantial additional effort is needed to bring explainable deep learning to a mature stage.

References

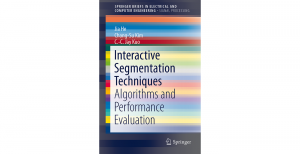

- [1] C.-C. Jay Kuo, “Understanding convolutional neural networks with a mathematical model,” Journal of Visual Communication and Image Representation, Vol. 41, pp. 406-413, November 2016.

- [2] C.-C. Jay Kuo, “The CNN as a guided multi-layer RECOS transform,” IEEE Signal Processing Magazine, Vol. 34, No. 3, pp. 81-89, May 2017.

- [3] Hao Xu, Yueru Chen, Ruiyuan Lin and C.-C. Jay Kuo, “Understanding convolutional neural networks via discriminant feature analysis,” APSIPA Transactions on Signal and Information Processing, Vol. 7, December 2018.

- [4] C.-C. Jay Kuo and Yueru Chen, “On data-driven Saak transform,” Journal of Visual Communication and Image Representation, Vol. 50, pp. 237-246, January 2018.

- [5] Sibo Song, Yueru Chen, Ngai-Man Cheung and C.-C. Jay Kuo, “Defense against adversarial attacks with Saak transform,” arXiv preprint arXiv:1808.01785, 2018.

- [6] C.-C. Jay Kuo, Min Zhang, Siyang Li, Jiali Duan and Yueru Chen, “Interpretable convolutional neural networks via feedforward design,” arXiv preprint arXiv:1810.02786, 2018.

- [7] Yueru Chen, Yijing Yang, Wei Wang and C.-C. Jay Kuo, “Ensembles of feedforward-designed convolutional neural networks,” arXiv preprint arXiv:1901.02154, 2019.