Congratulations to Junting Zhang on passing the Qualifying Exam on 01/17/19. The thesis proposal is titled "IMAGE KNOWLEDGE TRANSFER WITH DEEP LEARNING TECHNIQUES". The Qualifying Exam committee includes: Jay Kuo (Chair), Sandy Sawchuk, Keith Jenkins, Panos Georgiou and Ulrich Neumann (Outside Member).

Abstract of thesis proposal:

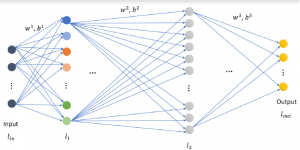

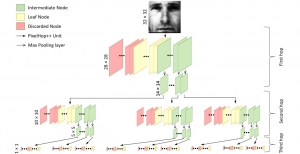

In recent years, we have witnessed tremendous success in training deep neural networks on large amounts of labeled data to learn surprisingly accurate mappings from input signals to outputs, whether the signals are images, language, genetic sequences, or other data. One critical limitation of the current dominant learning paradigm is that it learns in isolation: given a carefully constructed training dataset, it runs a machine learning algorithm on the dataset to produce a model that is then used only in its specific intended application. It makes no attempt to exploit the dependencies and relations among different tasks and domains, and it lacks effective techniques to retain, accumulate, and transfer knowledge gained from past learning experiences to solve new problems in new scenarios.

The learning environments are typically static and strictly constrained. For supervised learning, training data is often labeled manually, which is prohibitively expensive in labor and time, especially when the required labels are fine-grained or require knowledge from a domain expert. Because the real world is complex, with a practically unlimited number of possible tasks, it is almost impossible to label a sufficient number of examples for every possible task or application. Furthermore, the world changes constantly: the appearance of instances, or even the label of the same instance, may vary over time, so labeling must be done continually, which is a daunting task for humans.

In contrast, humans learn differently, and knowledge transfer plays a critical role in how we learn. We accumulate and maintain the knowledge learned from previous tasks and use it seamlessly in learning new tasks and solving new problems. Whenever we encounter a new situation or problem, we are good at discovering its relation to our past experience, recognizing aspects we have seen before in other contexts, adapting our reusable past knowledge to the new situation, and learning only the newly encountered aspects. True intelligence goes far beyond simply memorizing and repeating what we have learned: we are able to digest old knowledge and experiences, apply them to new concepts, and use both new and old knowledge to solve problems we may have never encountered before. To build the ultimate artificial intelligence (AI) that learns like humans, knowledge transfer is essential, since it allows machines to deal with a complex and ever-changing world.

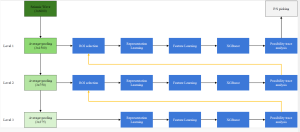

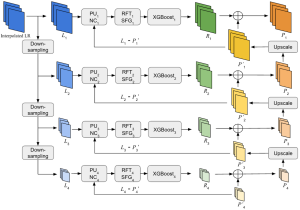

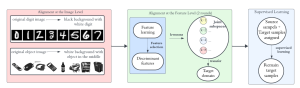

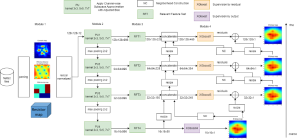

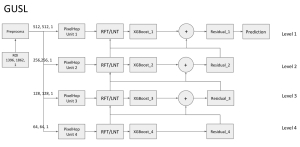

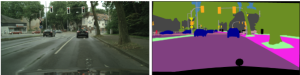

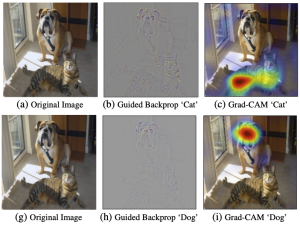

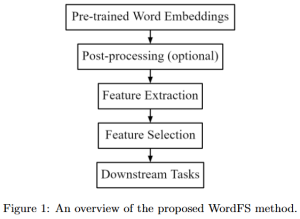

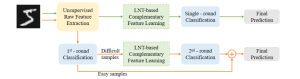

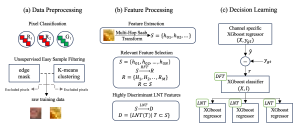

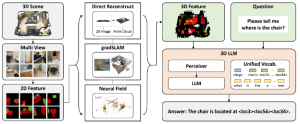

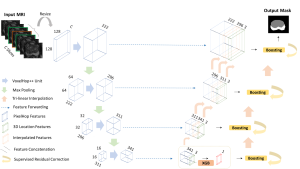

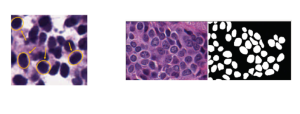

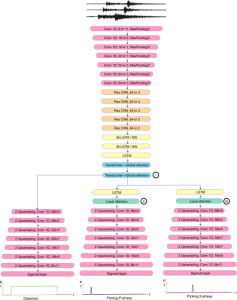

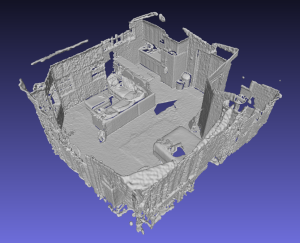

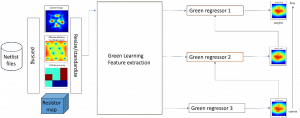

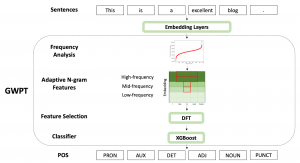

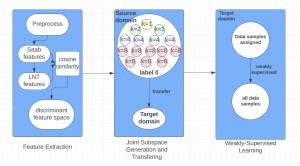

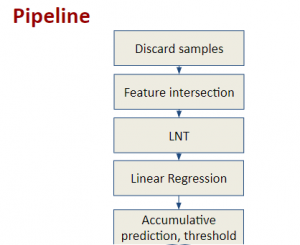

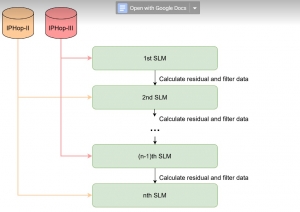

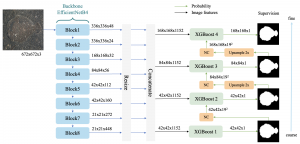

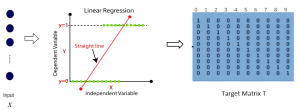

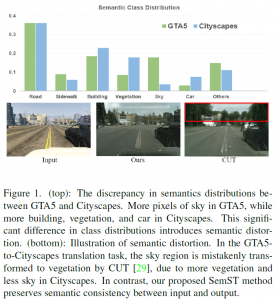

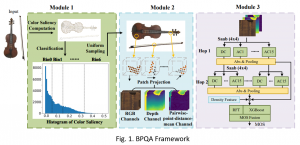

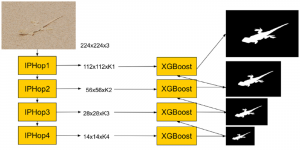

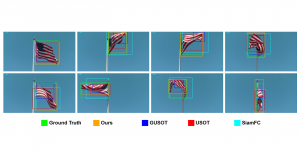

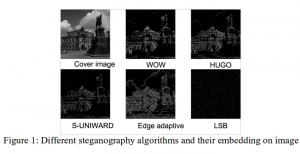

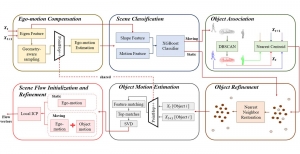

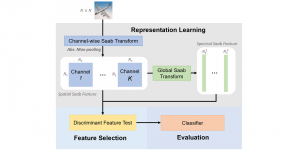

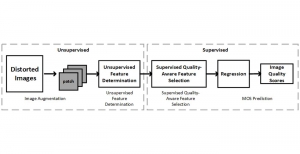

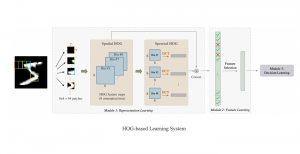

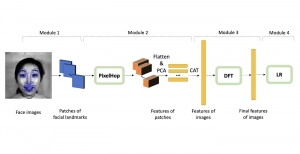

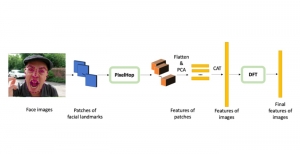

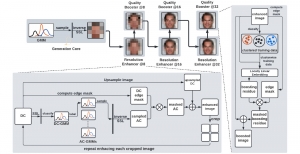

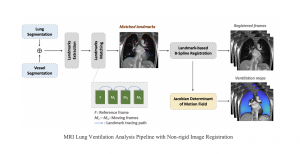

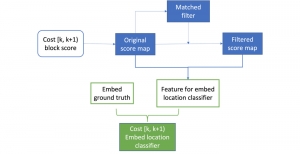

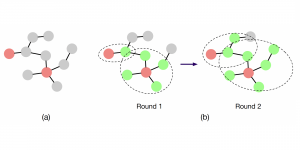

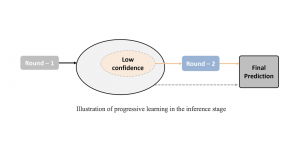

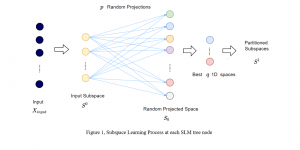

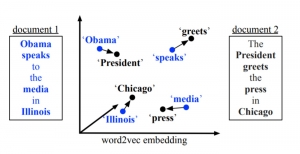

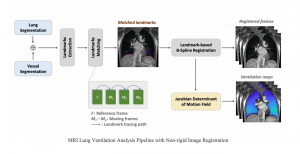

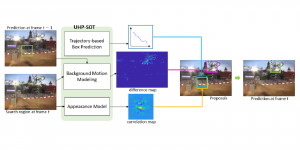

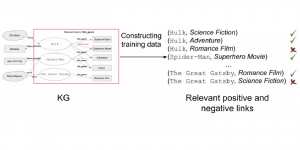

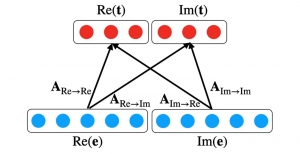

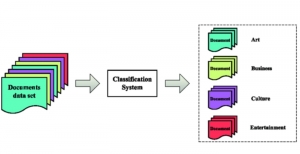

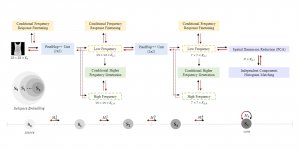

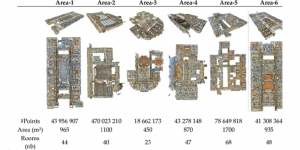

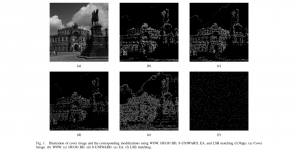

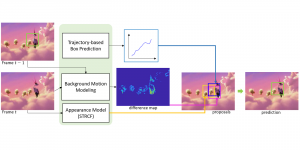

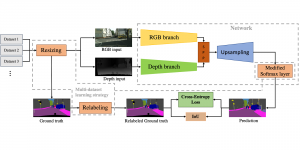

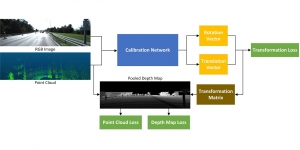

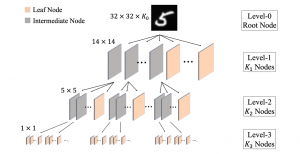

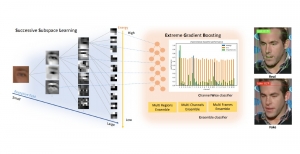

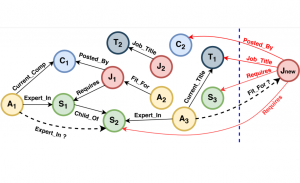

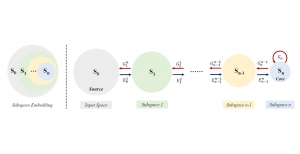

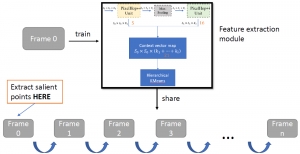

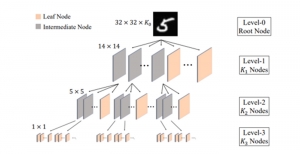

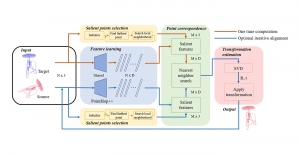

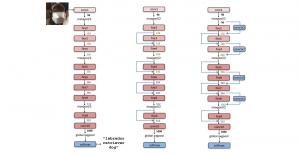

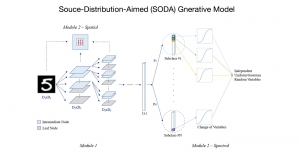

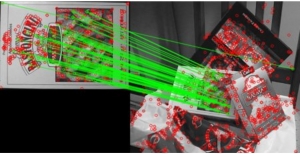

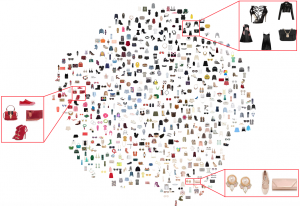

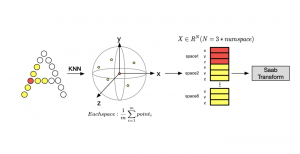

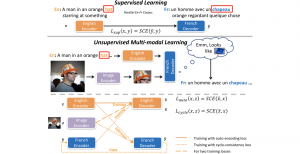

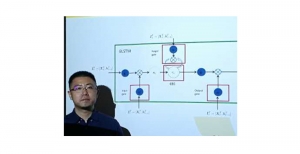

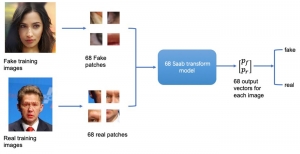

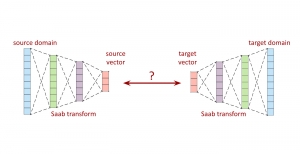

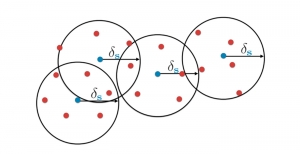

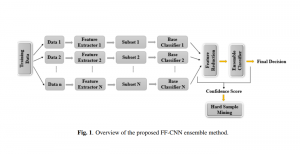

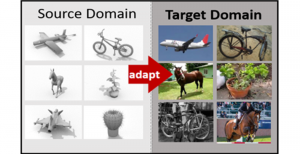

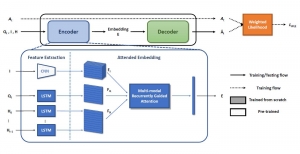

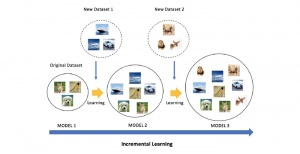

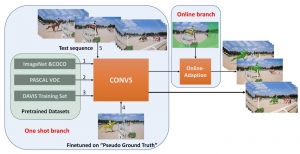

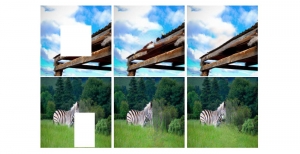

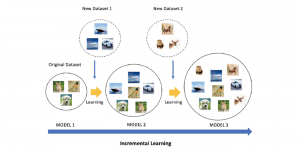

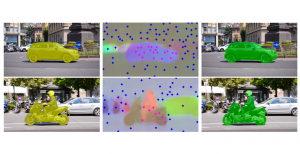

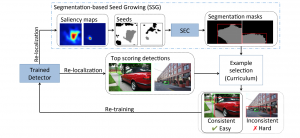

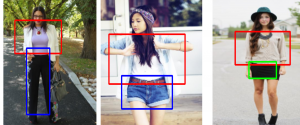

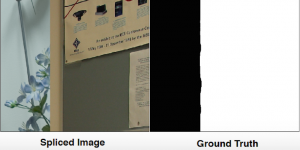

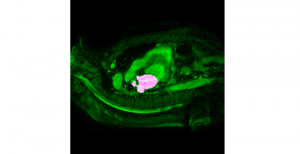

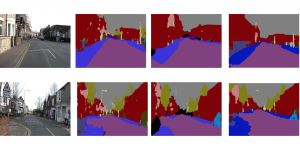

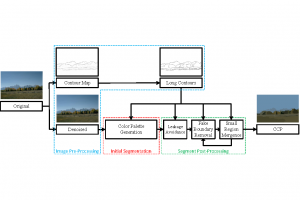

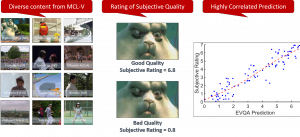

We studied three types of knowledge transfer scenarios that could facilitate the learning process of machines: 1) incremental learning, in which we transfer knowledge from old task(s) to a new task as training data becomes available gradually over time; 2) domain adaptation, in which we aim to perform the same task in different visual domains, where labeled training data is much easier to acquire in the source domain; and 3) knowledge transfer across applications to improve the robustness of the target application. Specifically, we considered these scenarios in computer vision applications, but many of the ideas we developed may generalize to a broader range of machine learning applications.