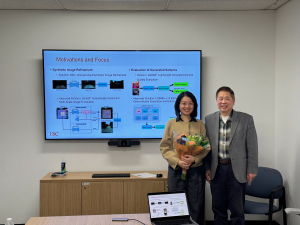

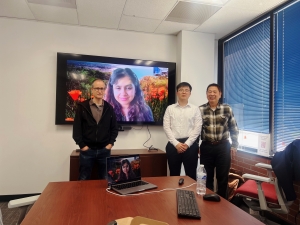

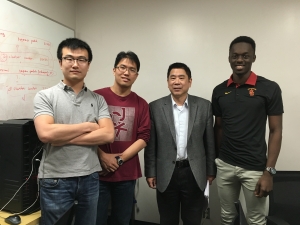

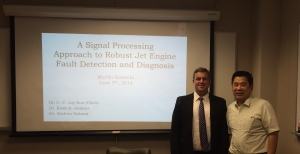

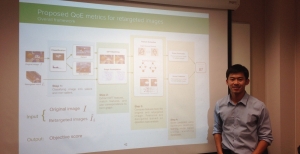

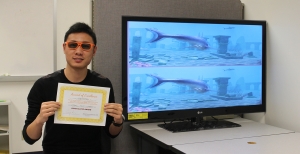

Congratulations to Max Chen on passing his defense today. Max’s thesis is titled “A Green Learning Approach to Deepfake Detection and Camouflage and Splicing Object Localization.” His Dissertation Committee includes Jay Kuo (Chair), Shrikanth Narayanan, and Aiichiro Nakano (Outside Member). The Committee members highly praised the quality of his work. The MCL News team invited Max to give a short talk on his thesis and PhD experience, and here is the summary. We thank Max for his kind sharing and wish him all the best on his next journey.

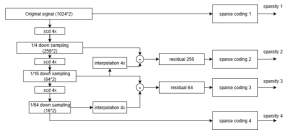

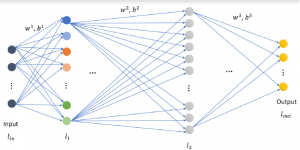

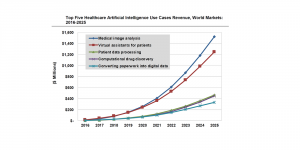

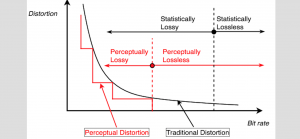

“In the current technological era, the advancement of AI models has not only driven innovation but also heightened concerns over environmental sustainability due to increased energy and water usage. For context, a model like GPT-3 consumes roughly one 500 ml bottle of water for every 10 to 50 responses. Projections suggest that by 2027, AI could consume an estimated 85 to 134 TWh of electricity per year, and that its water withdrawal could surpass that of half of the United Kingdom. In light of these challenges, there is an urgent need for environmentally friendly AI solutions: models that consume less energy by requiring fewer floating-point operations (FLOPs), have more compact designs, and can run independently on mobile devices without relying on server-based infrastructure.

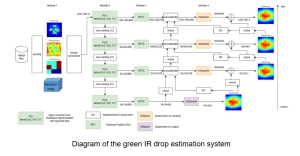

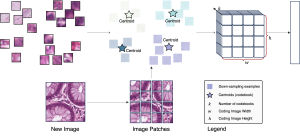

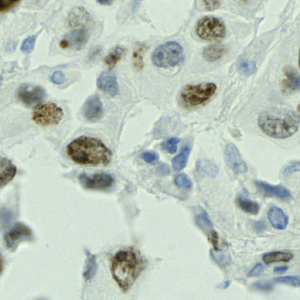

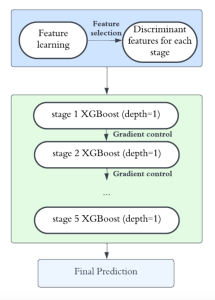

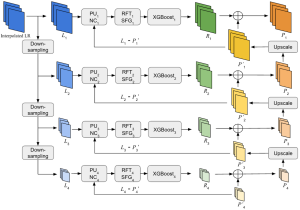

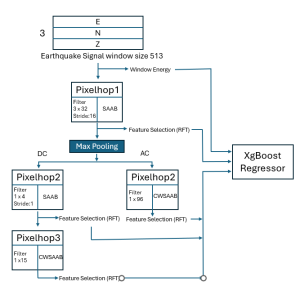

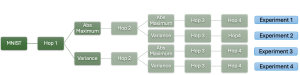

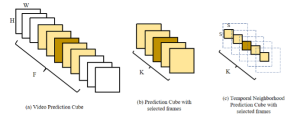

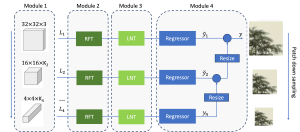

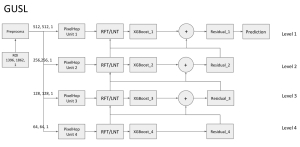

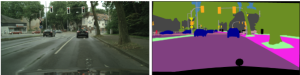

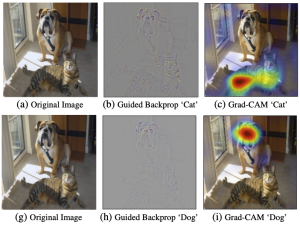

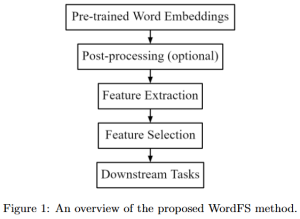

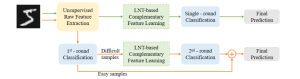

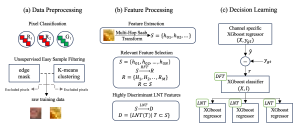

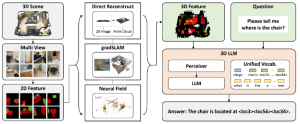

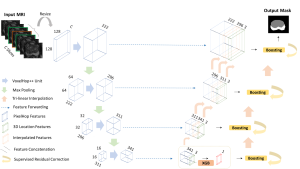

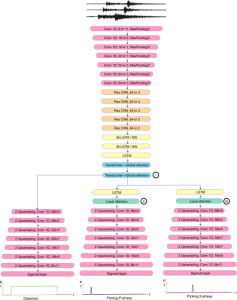

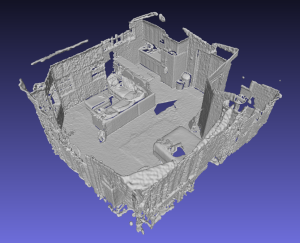

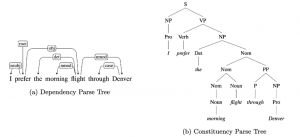

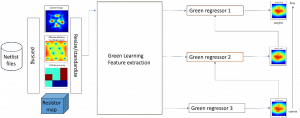

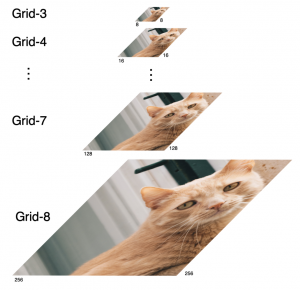

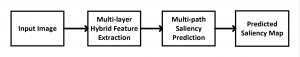

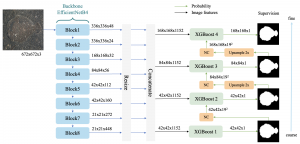

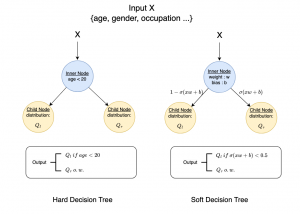

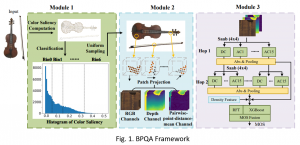

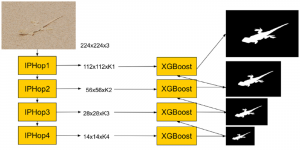

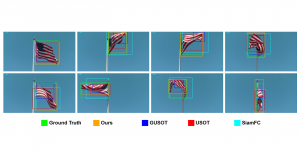

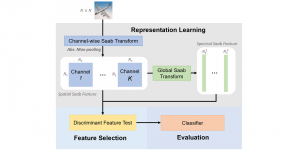

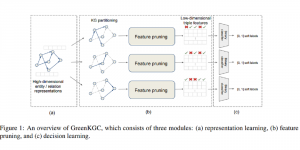

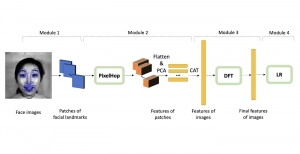

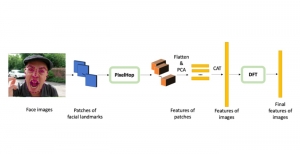

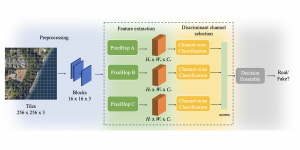

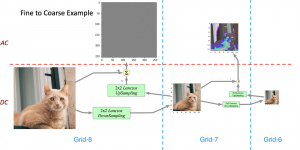

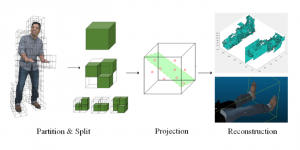

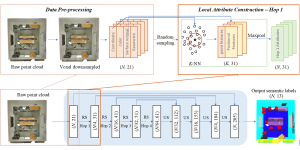

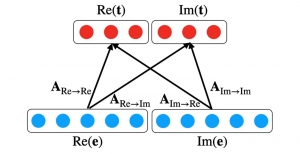

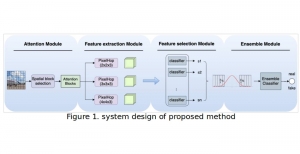

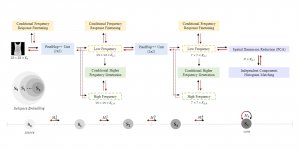

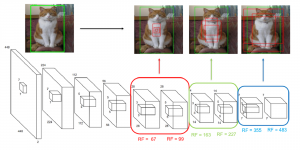

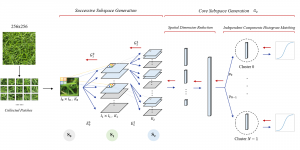

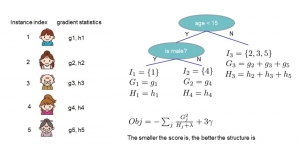

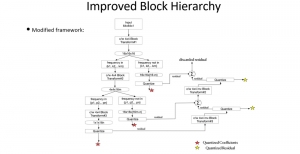

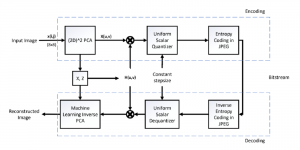

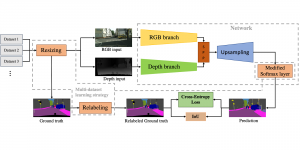

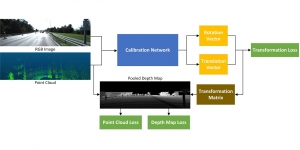

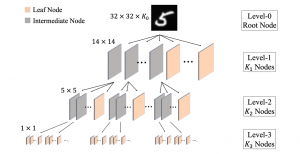

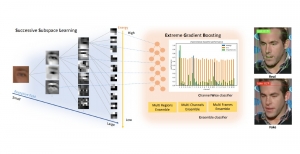

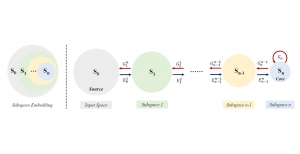

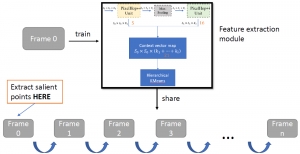

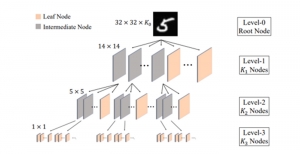

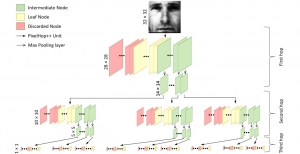

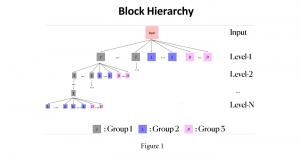

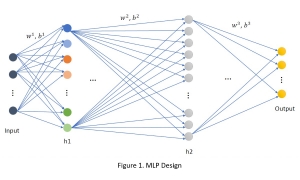

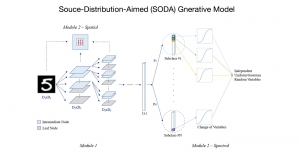

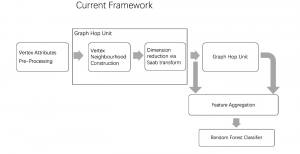

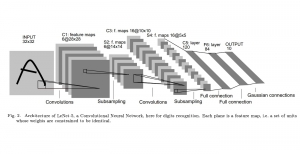

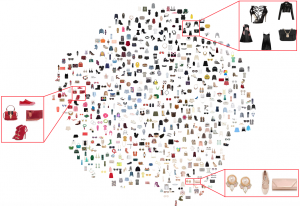

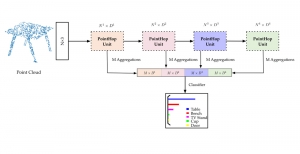

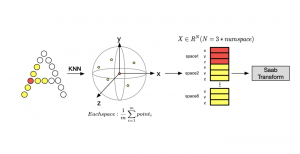

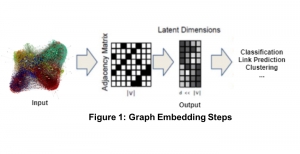

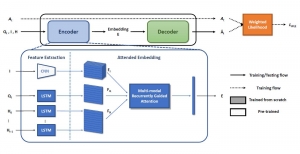

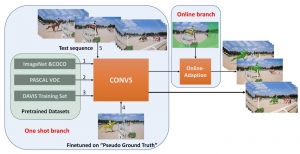

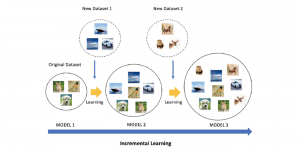

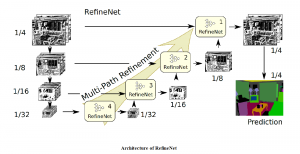

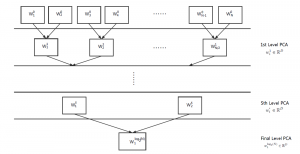

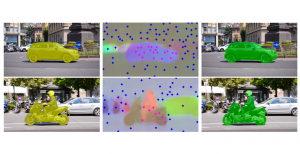

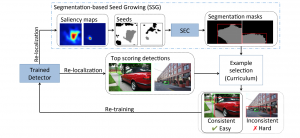

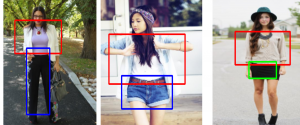

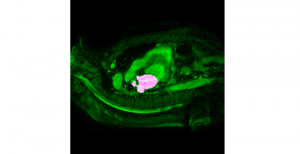

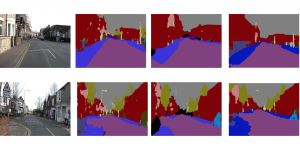

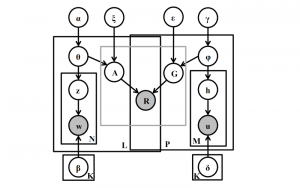

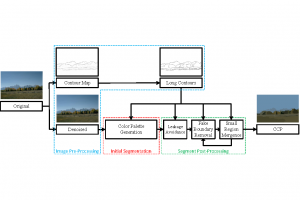

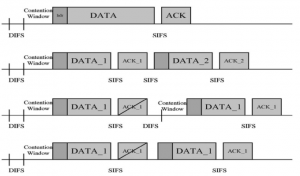

This thesis introduces a novel approach to Camouflaged Object Detection, termed “GreenCOD,” which combines Extreme Gradient Boosting (XGBoost) with deep features. Contemporary research often focuses on devising intricate DNN architectures to improve Camouflaged Object Detection performance. However, these methods are typically computationally intensive and offer only marginal gains over one another. GreenCOD instead employs gradient boosting for the detection task. Thanks to its efficient design, it requires fewer parameters and FLOPs than leading-edge deep learning models while achieving superior performance. Notably, the model is trained without back-propagation and offers interpretability.
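To make the idea of pairing per-pixel features with gradient boosting more concrete, here is a minimal sketch in Python. It is not GreenCOD and not XGBoost: it assumes each pixel has already been described by a feature vector (in GreenCOD these would be deep features; here they are two made-up numbers per pixel), and it fits a small hand-written booster of depth-1 trees (stumps) that predicts whether each pixel belongs to the camouflaged object. The names `fit_stump`, `fit_boosted`, and `predict`, and the values of `n_rounds` and `lr`, are hypothetical.

```python
# Hypothetical sketch: gradient-boosted decision stumps on per-pixel feature
# vectors. A stand-in for XGBoost on deep features, not the GreenCOD code.
from typing import List, Tuple

# A stump is (feature index, threshold, value if <= threshold, value if > threshold).
Stump = Tuple[int, float, float, float]


def fit_stump(X: List[List[float]], residuals: List[float]) -> Stump:
    """Pick the single split that minimises squared error on the residuals."""
    best = None
    best_err = float("inf")
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if err < best_err:
                best_err, best = err, (j, t, lv, rv)
    if best is None:  # no usable split: fall back to a constant stump
        mean = sum(residuals) / len(residuals)
        best = (0, float("inf"), mean, mean)
    return best


def stump_predict(stump: Stump, row: List[float]) -> float:
    j, t, lv, rv = stump
    return lv if row[j] <= t else rv


def fit_boosted(X, y, n_rounds=20, lr=0.3):
    """Fit stumps stage by stage; each stage fits the current residuals.

    For squared loss the negative gradient w.r.t. the prediction is the
    residual y - pred, so nothing is back-propagated through a network.
    """
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        pred = [p + lr * stump_predict(stump, row) for p, row in zip(pred, X)]
    return base, lr, stumps


def predict(model, X):
    base, lr, stumps = model
    return [base + lr * sum(stump_predict(s, row) for s in stumps) for row in X]


# Toy data: one 2-D feature vector per pixel; label 1 = camouflaged-object pixel.
X = [[0.1, 0.3], [0.2, 0.8], [0.3, 0.5], [0.7, 0.4], [0.8, 0.9], [0.9, 0.2]]
y = [0, 0, 0, 1, 1, 1]
model = fit_boosted(X, y)
mask = [1 if p >= 0.5 else 0 for p in predict(model, X)]
print(mask)  # [0, 0, 0, 1, 1, 1]
```

Because each stump is a readable rule such as "feature 0 ≤ 0.3", the fitted ensemble can be inspected directly, which is one sense in which tree-based models are described as interpretable.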

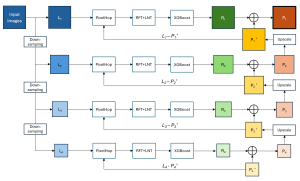

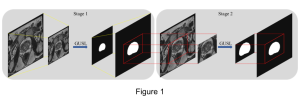

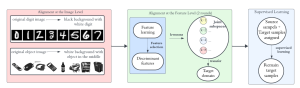

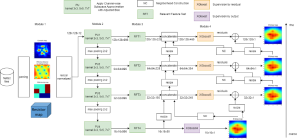

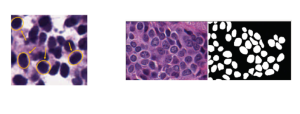

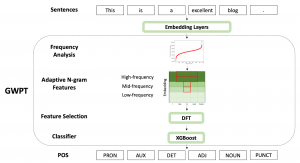

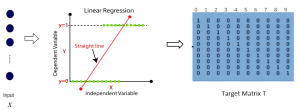

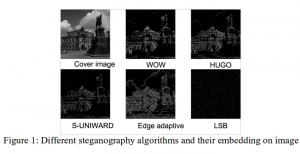

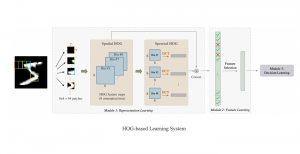

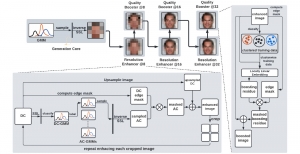

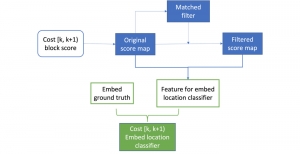

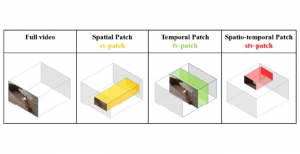

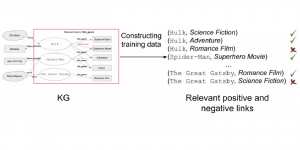

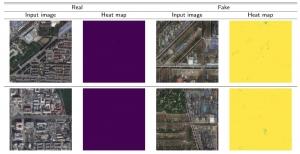

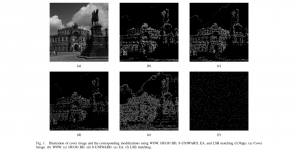

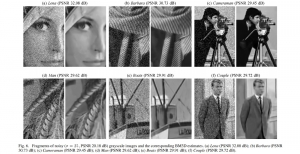

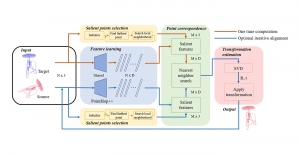

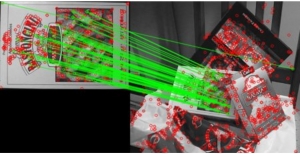

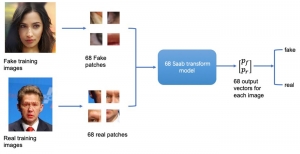

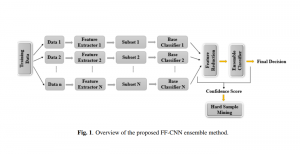

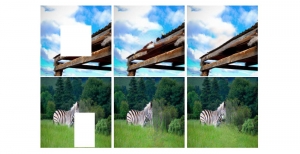

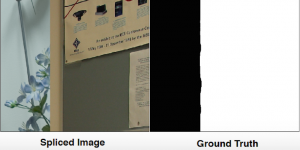

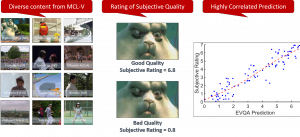

In addition, we present the Green Image Forgery Technique (GIFT), a pioneering gradient-boosting method tailored for detecting multiple image forgeries. It operates without back-propagation or end-to-end training. Besides the direct supervision of the ground-truth forgery mask, our method incorporates edge supervision, which refines its decisions along forgery boundaries. Extensive experiments across multiple datasets show that GIFT outperforms many state-of-the-art methods while requiring less computation.”
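The summary does not say how GIFT's edge supervision is implemented. As one illustration, assuming the ground-truth forgery mask is a binary grid, a boundary map can be derived from it automatically and then used either as an extra target or, as in this hypothetical Python sketch, to up-weight boundary pixels as sample weights for a tree booster. The functions `mask_to_edges` and `boundary_weights` and the `edge_boost` parameter are illustrative assumptions, not part of GIFT.

```python
# Hypothetical sketch: derive a boundary (edge) map from a binary forgery mask
# and turn it into per-pixel sample weights. Not the GIFT implementation.
from typing import List

Mask = List[List[int]]


def mask_to_edges(mask: Mask) -> Mask:
    """Mark a pixel 1 if any 4-neighbour carries a different label.

    Pixels on both sides of the mask boundary are marked, giving a
    two-pixel-wide edge band.
    """
    h, w = len(mask), len(mask[0])
    edges = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w and mask[ni][nj] != mask[i][j]:
                    edges[i][j] = 1
                    break
    return edges


def boundary_weights(edges: Mask, edge_boost: float = 2.0) -> List[List[float]]:
    """Give edge pixels `edge_boost` times the weight of other pixels."""
    return [[edge_boost if e else 1.0 for e in row] for row in edges]


# A 5x5 ground-truth mask with a 3x3 forged region in the middle.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
edges = mask_to_edges(mask)
weights = boundary_weights(edges)
for row in edges:
    print(row)
```

Only the centre pixel of the forged square and the four image corners fall outside the edge band here; every other pixel borders a label change and receives the boosted weight.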

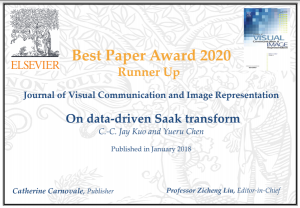

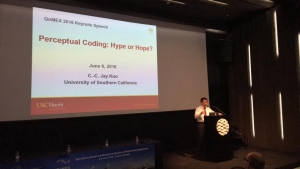

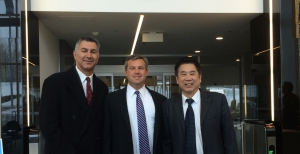

I would like to express my deepest appreciation to Professor C.-C. Jay Kuo, whose expertise, understanding, and patience added considerably to my PhD experience. I am extremely grateful for the guidance and support he has provided throughout my studies and research. His willingness to give his time so generously has been very much appreciated. I am particularly thankful for the opportunity to collaborate with him during my PhD study, which was instrumental in shaping my critical thinking and research skills. Over the past five years, we have pursued research in Green Learning together, achieving many remarkable and unforgettable accomplishments. For this and so much more, I am forever thankful to Professor C.-C. Jay Kuo. It has been an honor and a privilege to be his student.

To all my colleagues at the MCL laboratory, your support and camaraderie have made my PhD journey a remarkable experience. A special thanks to Kaitai Zhang, my first mentor in the lab, with whom I co-authored my first publication—a milestone that set the tone for my research career. My gratitude extends to Yun-Cheng (Joe) Wang, Xiou Ge, Chengwei Wei, Kevin Yang, and Chee-An Yu for the research discussions and the shared personal milestones.

This thesis is not just a reflection of my own efforts but a testament to the collective support of the many others who have been part of this journey. To each of you, I am profoundly grateful.