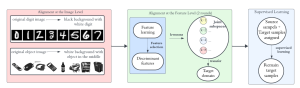

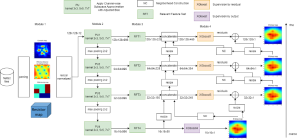

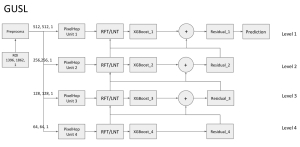

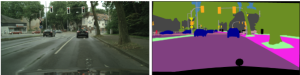

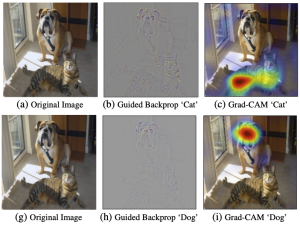

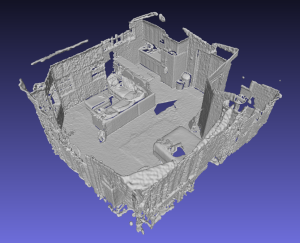

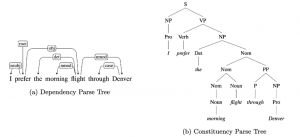

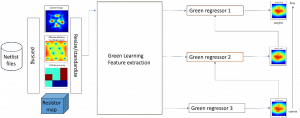

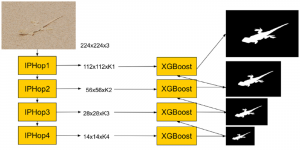

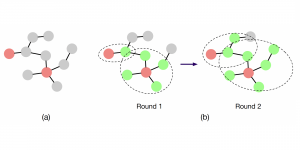

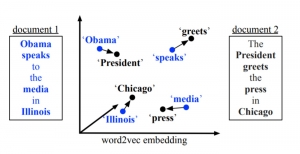

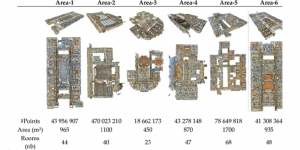

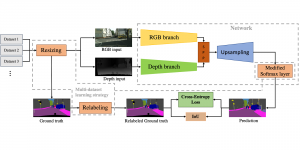

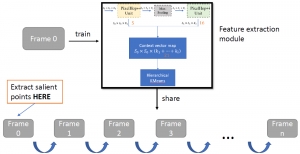

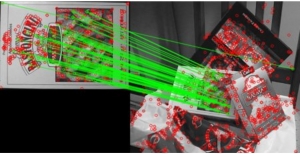

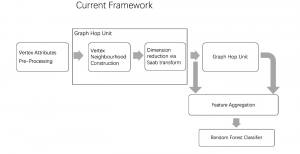

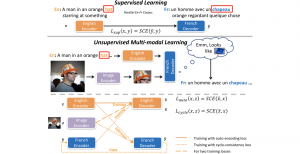

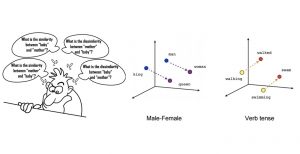

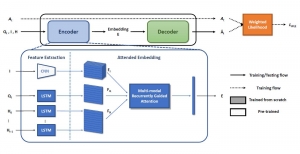

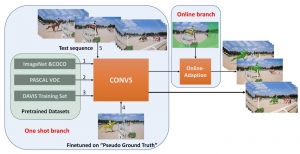

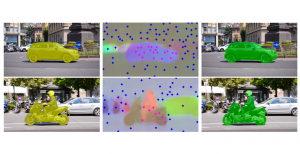

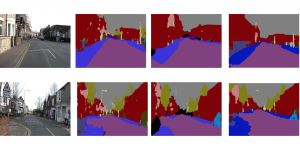

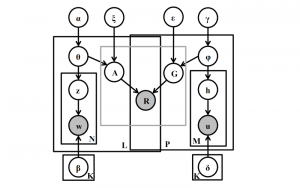

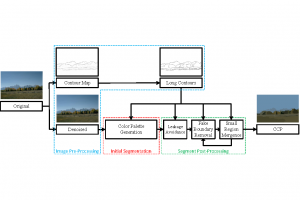

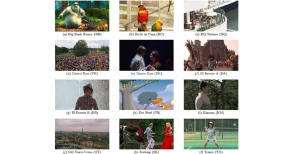

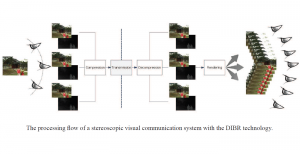

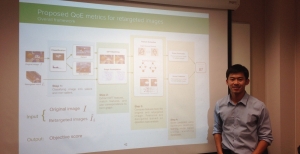

Imagine a robot navigating across rooms by following human instructions: “Turn left and take a right at the table. Take a left at the painting and then take your first right. Wait next to the exercise equipment.” The agent is expected to first execute the action “turn left” and then locate “the table” before “taking a right”. In practice, however, the agent might turn right in the middle of the trajectory before any table is observed, in which case the subsequent navigation would almost certainly fail. Humans, on the other hand, are able to relate visual input to language semantics. In this example, a human would locate visual landmarks such as the table, the painting, and the exercise equipment before making each decision (turn right, turn left, stop). We endow our agent with a similar reasoning ability by equipping it with a synthesizer module that implicitly aligns language semantics with visual observations. The poster is available online at https://davidsonic.github.io/summary/Poster_3d_indoor.pdf and the demonstration video is available at https://sites.google.com/view/submission-2019.

–By Jiali Duan